Hemerson Pistori

Livestock Fish Larvae Counting using DETR and YOLO based Deep Networks

Aug 09, 2024

Abstract: Counting fish larvae is an important yet demanding and time-consuming task in aquaculture. To address this problem, we evaluate four neural network architectures, including convolutional neural networks and transformers, in different sizes, on the task of fish larvae counting. For the evaluation, we present a new annotated image dataset of spotted sorubim and dourado larvae, with fewer data collection requirements than preceding works. By using image tiling techniques, we achieve a MAPE of 4.46% ($\pm 4.70$) with an extra-large real-time detection transformer, and 4.71% ($\pm 4.98$) with a medium-sized YOLOv8.
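As a rough illustration of the two ideas named in this abstract, the sketch below splits an image into tiles and computes a mean absolute percentage error (MAPE) over per-image counts. It is a minimal sketch, not the authors' pipeline: the names `tile_boxes` and `mape` are hypothetical, the tiles do not overlap, and the spread is computed as a sample standard deviation, which is only an assumption about what the reported $\pm$ values denote.

```python
from statistics import mean, stdev


def tile_boxes(width, height, tile):
    """Return non-overlapping (left, top, right, bottom) tiles covering the image.

    Tiles on the right and bottom borders are clipped to the image size.
    """
    boxes = []
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            boxes.append((left, top, min(left + tile, width), min(top + tile, height)))
    return boxes


def mape(true_counts, predicted_counts):
    """Return (mean, sample standard deviation) of the absolute percentage errors."""
    errors = [
        abs(t - p) / t * 100.0  # percentage error for one image; t must be non-zero
        for t, p in zip(true_counts, predicted_counts)
    ]
    return mean(errors), stdev(errors)


# Hypothetical counts for two images, for illustration only.
boxes = tile_boxes(1000, 1000, 512)
error_mean, error_std = mape([100, 200], [90, 210])
```

In a tiled counting setup, a detector would be run on each tile and the per-tile detections summed into one predicted count per image before the error is computed.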

Pig aggression classification using CNN, Transformers and Recurrent Networks

Mar 13, 2024

Abstract: Developing techniques to analyze and detect animal behavior is crucial for the livestock sector, since it makes it possible to monitor stress and animal welfare and supports decision making on the farm. Such applications can help breeders make decisions that improve production performance and reduce costs, because animal behavior is currently analyzed by humans, which is error-prone and time-consuming. Aggressiveness in pigs is one behavior that is studied in order to reduce its impact through animal classification and identification. However, this process is laborious and susceptible to errors, which can be reduced through automation by visually classifying videos captured in a controlled environment. The captured videos can be used to train neural networks that then classify new videos using computer vision. The main techniques used in this study are transformer variants (STAM, TimeSformer, and ViViT) and convolution-based techniques (ResNet3D2, Resnet(2+1)D, and CnnLstm). These techniques were applied to pig video classification to distinguish aggressive from non-aggressive behavior, and were compared to analyze the contribution of transformers relative to the effectiveness of convolutions in video classification. Performance was evaluated using accuracy, precision, and recall. TimeSformer showed the best results in video classification, with a median accuracy of 0.729.
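The three metrics named in this abstract can be computed from predicted and true labels as sketched below. This is a generic illustration rather than the study's evaluation code: the function name `binary_metrics`, the label strings, and the example labels are all hypothetical.

```python
def binary_metrics(y_true, y_pred, positive="aggressive"):
    """Return (accuracy, precision, recall) for a two-class problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    tn = sum(t != positive and p != positive for t, p in pairs)  # true negatives
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


# Hypothetical labels for four video clips, for illustration only.
truth = ["aggressive", "aggressive", "aggressive", "non-aggressive"]
predicted = ["aggressive", "aggressive", "non-aggressive", "non-aggressive"]
accuracy, precision, recall = binary_metrics(truth, predicted)
```

A median accuracy such as the one reported would then be taken over the accuracies of repeated runs or folds, for example with `statistics.median`.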

Using Deep Learning for Morphological Classification in Pigs with a Focus on Sanitary Monitoring

Mar 13, 2024

Abstract: The aim of this paper is to evaluate the use of D-CNN (Deep Convolutional Neural Network) algorithms to classify pig body conditions as normal or not normal, with a focus on characteristics observed in sanitary monitoring. Six different algorithms were used for this task. The study focused on five pig characteristics: caudophagy, ear hematoma, scratches on the body, redness, and natural stains (brown or black). The results showed that D-CNNs were effective in classifying deviations in pig body morphology related to skin characteristics. The evaluation analyzed the performance metrics Precision, Recall, and F-score, together with ANOVA and the Scott-Knott test as statistical analyses. The contribution of this article is the proposal of using D-CNNs for morphological classification in pigs, with a focus on characteristics identified in sanitary monitoring. Among the best results, the InceptionResNetV2 network achieved an average Precision of 80.6% when classifying caudophagy, indicating the potential of this technology for the proposed task. Additionally, a new image database was created, containing various distinct body characteristics of pigs, which can serve as data for future research.

Smartphone region-wise image indoor localization using deep learning for indoor tourist attraction

Mar 12, 2024

Abstract: Smart indoor tourist attractions, such as smart museums and aquariums, usually require a significant investment in indoor localization devices. Smartphone Global Positioning System (GPS) localization is unsuitable where dense materials such as concrete and metal block or weaken GPS signals, which is the most common scenario in an indoor tourist attraction. Deep learning makes it possible to perform region-wise indoor localization using smartphone images. This approach does not require any investment in infrastructure, reducing the cost and time needed to turn museums and aquariums into smart museums or smart aquariums. This paper proposes using deep learning algorithms to classify locations from smartphone camera images in indoor tourist attractions. We evaluate our proposal in a real-world scenario in Brazil. We collected an extensive set of images with ten different smartphones to classify biome-themed fish tanks inside the Pantanal Biopark, creating a new dataset of 3654 images. We tested seven state-of-the-art neural networks, three of them transformer-based, achieving precision of around 90% on average and recall and f-score of around 89% on average. The results indicate good feasibility of the proposal in most indoor tourist attractions.

Aedes aegypti Egg Counting with Neural Networks for Object Detection

Mar 12, 2024

Abstract: Aedes aegypti is still one of the main concerns when it comes to disease vectors. Among the many ways to deal with it, important protocols use egg counts from ovitraps to calculate indices, such as the LIRAa and the Breteau Index, which can provide information for anticipating outbreaks and epidemics. Many research lines also require egg counts, especially when mass production of mosquitoes is needed. Egg counting is a laborious and error-prone task that can be automated with computer vision techniques, especially deep learning-based counting with object detection. In this work, we propose a new dataset comprising field and laboratory eggs, along with test results of three neural networks applied to the task: Faster R-CNN, Side-Aware Boundary Localization and FoveaBox.

A New Machine Learning Dataset of Bulldog Nostril Images for Stenosis Degree Classification

Mar 11, 2024

Abstract: Brachycephaly, a conformation trait in some dog breeds, causes BOAS, a respiratory disorder that affects the health and welfare of the dogs with various symptoms. In this paper, a new annotated dataset composed of 190 images of bulldogs' nostrils is presented. Three degrees of stenosis are approximately equally represented in the dataset: mild, moderate and severe stenosis. The dataset also comprises a small number of non-stenotic nostril images. To the best of our knowledge, this is the first image dataset addressing this problem. Furthermore, deep learning is investigated as a way to automatically infer the degree of stenosis from nostril images. In this work, several neural networks were tested: ResNet50, MobileNetV3, DenseNet201, SwinV2 and MaxViT. For this evaluation, the problem was modeled in two different ways: first, as a three-class classification problem (mild or open, moderate, and severe); second, as a binary classification problem, with severe stenosis as the target. For the multiclass classification, a maximum median f-score of 53.77% was achieved by MobileNetV3. For binary classification, a maximum median f-score of 72.08% was reached by ResNet50, indicating that the problem is challenging but possibly tractable.

Exploring Cluster Analysis in Nelore Cattle Visual Score Attribution

Mar 11, 2024

Abstract: Assessing the biotype of cattle through human visual inspection is a very common and important practice in precision cattle breeding. This paper presents the results of a correlation analysis between scores produced by humans for Nelore cattle and a variety of measurements that can be derived from images or other instruments. It also presents a study using the k-means algorithm to generate new ways of clustering a batch of cattle using the measurements that most correlate with the animal's body weight and visual scores.
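For readers unfamiliar with k-means, the sketch below shows the basic assign-then-update loop on a handful of two-dimensional points. It is a minimal, dependency-free illustration, not the study's code: the function names are hypothetical, the points are made up, and the centroids are initialised from the first k points to keep the run deterministic, whereas practical implementations usually use random or k-means++ initialisation.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, max_iterations=100):
    """Cluster points with Lloyd's algorithm; returns (assignment, centroids)."""
    centroids = [list(p) for p in points[:k]]  # deterministic init: first k points
    assignment = None
    for _ in range(max_iterations):
        # Assignment step: each point goes to its nearest centroid.
        new_assignment = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_assignment == assignment:
            break  # converged: no point changed cluster
        assignment = new_assignment
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return assignment, centroids


# Made-up, already-scaled measurements for six animals, for illustration only.
animals = [(1.0, 1.0), (9.0, 9.0), (1.2, 0.8), (8.8, 9.1), (0.9, 1.1), (9.2, 8.9)]
labels, centers = kmeans(animals, k=2)
```

Because k-means relies on Euclidean distance, measurements on different scales (for example weight and a body length) would normally be standardised before clustering.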

MTLSegFormer: Multi-task Learning with Transformers for Semantic Segmentation in Precision Agriculture

May 04, 2023

Abstract: Multi-task learning has proven to be effective in improving the performance of correlated tasks. Most of the existing methods use a backbone to extract initial features with independent branches for each task, and the exchange of information between the branches usually occurs through the concatenation or sum of the feature maps of the branches. However, this type of information exchange does not directly consider the local characteristics of the image nor the level of importance or correlation between the tasks. In this paper, we propose a semantic segmentation method, MTLSegFormer, which combines multi-task learning and attention mechanisms. After the backbone feature extraction, two feature maps are learned for each task. The first map learns features related to its own task, while the second map is obtained by applying learned visual attention to locally re-weight the feature maps of the other tasks. In this way, weights are assigned to the local regions of the other tasks' feature maps that have greater importance for the specific task. Finally, the two maps are combined and used to solve the task. We tested the performance on two challenging problems with correlated tasks and observed a significant improvement in accuracy, mainly in tasks with high dependence on the others.
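The idea of locally re-weighting other tasks' feature maps can be illustrated with a toy example. The sketch below is not MTLSegFormer: it uses single-channel grids, fixed rather than learned attention scores (the product of the task's own feature and the other task's feature at the same location), a per-location softmax over the other tasks, and a simple sum to combine the two maps. All names and values are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def reweight_other_tasks(own, others):
    """Blend the other tasks' maps with per-location attention weights.

    own: H x W grid for the current task; others: list of H x W grids.
    """
    height, width = len(own), len(own[0])
    attended = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Toy score: similarity between own feature and each other-task feature.
            scores = [own[y][x] * other[y][x] for other in others]
            weights = softmax(scores)
            attended[y][x] = sum(w * other[y][x] for w, other in zip(weights, others))
    return attended


def combine(own, attended):
    """Combine the task's own map with the attended map by element-wise sum."""
    return [[a + b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(own, attended)]


# Made-up 1 x 2 feature maps for one task and two other tasks.
own_map = [[0.0, 1.0]]
other_maps = [[[2.0, 2.0]], [[4.0, 0.0]]]
attended_map = reweight_other_tasks(own_map, other_maps)
combined_map = combine(own_map, attended_map)
```

At the first location the scores are equal, so the two other tasks are averaged; at the second, the task with the larger score dominates, which is the local behaviour the abstract describes.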

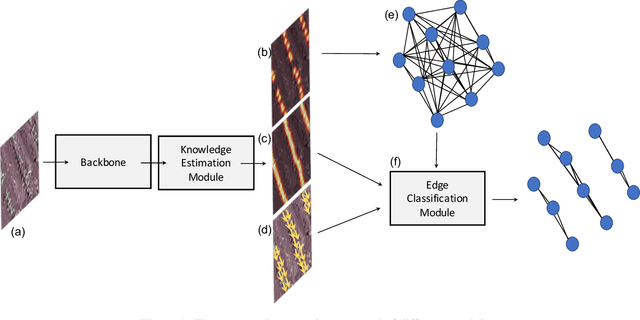

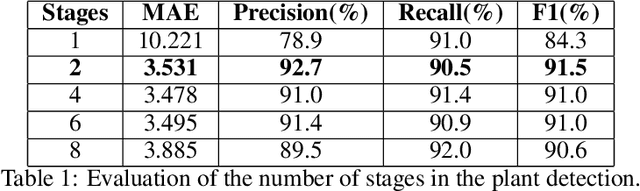

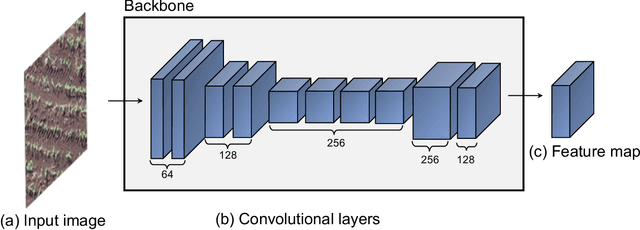

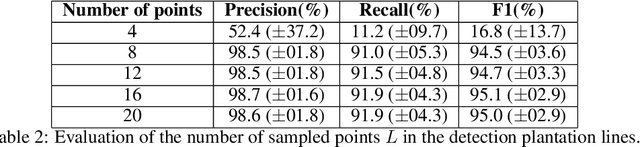

A Deep Learning Approach Based on Graphs to Detect Plantation Lines

Feb 05, 2021

Abstract: Deep learning-based networks are among the most prominent methods to learn linear patterns and extract this type of information from diverse imagery conditions. Here, we propose a deep learning approach based on graphs to detect plantation lines in UAV-based RGB imagery, in a challenging scenario containing spaced plants. The first module of our method extracts a feature map through the backbone, which consists of the initial layers of VGG16. This feature map is the input to the Knowledge Estimation Module (KEM), organized in three concatenated branches that detect 1) the plant positions, 2) the plantation lines, and 3) the displacement vectors between the plants. A graph is then built in which each plant position in the image is a vertex and edges are formed between pairs of vertices (i.e., plants). Finally, an edge is classified as pertaining to a certain plantation line based on three probabilities (higher than 0.5): i) one from the visual features obtained from the backbone; ii) the chance that the edge pixels belong to a line, from the KEM step; and iii) the alignment of the displacement vectors with the edge, also from KEM. Experiments were conducted on aerial RGB imagery of corn plantations with different growth stages and patterns. A total of 564 patches of 256 x 256 pixels were used and randomly divided into training, validation, and testing sets in proportions of 60%, 20%, and 20%, respectively. The proposed method was compared against state-of-the-art deep learning methods and achieved superior performance by a significant margin, returning precision, recall, and F1-score of 98.7%, 91.9%, and 95.1%, respectively. This approach is useful for extracting lines with spaced plantation patterns and could be applied in scenarios where plantation gaps occur, generating lines with few to no interruptions.
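The edge classification step can be sketched as a simple rule over the three probabilities. The code below assumes that an edge is kept only when all three probabilities exceed 0.5, which is one reading of the abstract rather than a confirmed detail of the method; the `Edge` class, its field names, and the example values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Edge:
    """A candidate edge between two plant vertices, with its three probabilities."""
    u: int
    v: int
    p_visual: float     # from backbone visual features
    p_line: float       # chance that the edge pixels belong to a line
    p_alignment: float  # alignment of displacement vectors with the edge


def is_line_edge(edge, threshold=0.5):
    """Keep the edge only if every probability is above the threshold (assumed rule)."""
    return all(
        p > threshold for p in (edge.p_visual, edge.p_line, edge.p_alignment)
    )


# Made-up candidate edges, for illustration only.
candidates = [
    Edge(0, 1, 0.9, 0.8, 0.7),
    Edge(1, 2, 0.9, 0.4, 0.7),
]
line_edges = [(e.u, e.v) for e in candidates if is_line_edge(e)]
```

The edges that survive this filter would then be chained into plantation lines.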

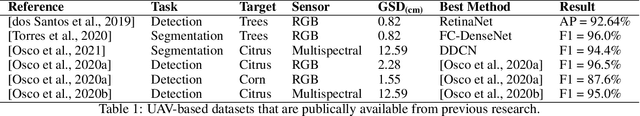

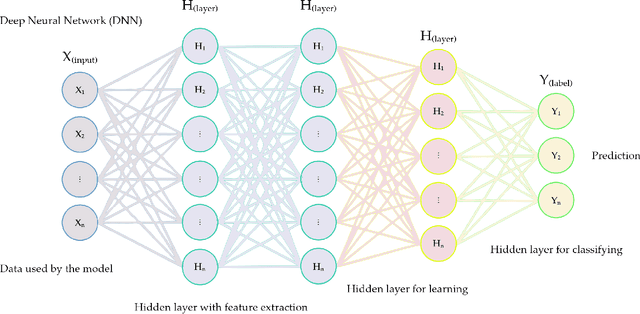

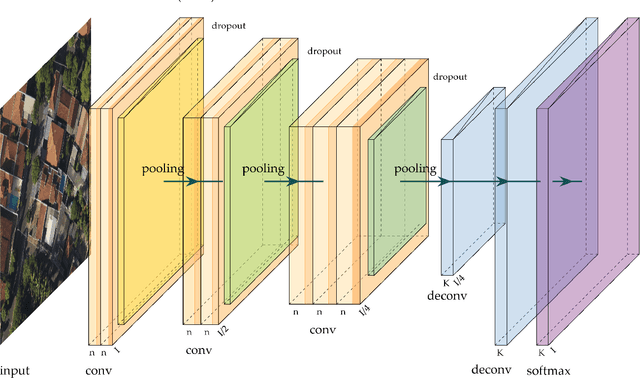

A Review on Deep Learning in UAV Remote Sensing

Jan 29, 2021

Abstract: Deep Neural Networks (DNNs) learn representations from data with impressive capability and have brought important breakthroughs in processing images, time series, natural language, audio, video, and many other kinds of data. In the remote sensing field, surveys and literature reviews specifically covering applications of DNN algorithms have been conducted in an attempt to summarize the amount of information produced in its subfields. Recently, Unmanned Aerial Vehicle (UAV) based applications have dominated aerial sensing research. However, a literature review that combines both "deep learning" and "UAV remote sensing" has not yet been conducted. The motivation for our work was to present a comprehensive review of the fundamentals of Deep Learning (DL) applied to UAV-based imagery. We focused mainly on describing classification and regression techniques used in recent applications with UAV-acquired data. For that, a total of 232 papers published in international scientific journal databases were examined. We gathered the published material and evaluated its characteristics regarding application, sensor, and technique used. We report how DL presents promising results and has potential for processing tasks associated with UAV-based image data. Lastly, we project future perspectives, commenting on prominent DL paths to be explored in the UAV remote sensing field. Our review takes an accessible approach to introducing, commenting on, and summarizing the state of the art in UAV-based image applications with DNN algorithms in diverse subfields of remote sensing, grouping them into environmental, urban, and agricultural contexts.
