Mauro dos Santos de Arruda

Counting and Locating High-Density Objects Using Convolutional Neural Network

Feb 08, 2021

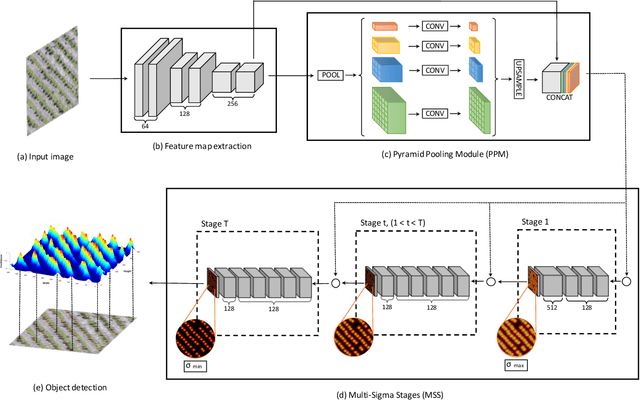

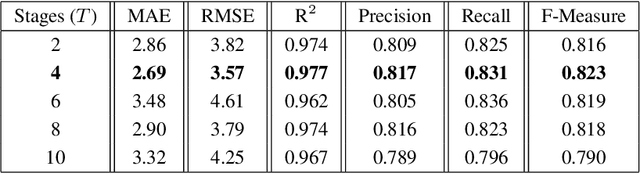

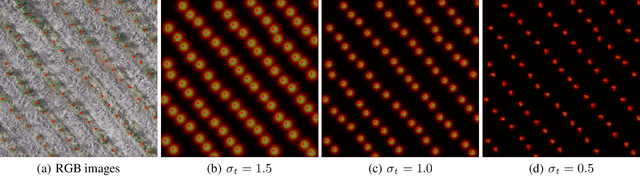

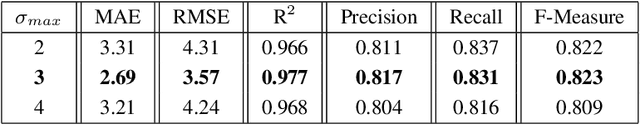

Abstract:This paper presents a Convolutional Neural Network (CNN) approach for counting and locating objects in high-density imagery. To the best of our knowledge, this is the first object counting and locating method based on a feature map enhancement and a Multi-Stage Refinement of the confidence map. The proposed method was evaluated in two counting datasets: tree and car. For the tree dataset, our method returned a mean absolute error (MAE) of 2.05, a root-mean-squared error (RMSE) of 2.87 and a coefficient of determination (R$^2$) of 0.986. For the car dataset (CARPK and PUCPR+), our method was superior to state-of-the-art methods. In the these datasets, our approach achieved an MAE of 4.45 and 3.16, an RMSE of 6.18 and 4.39, and an R$^2$ of 0.975 and 0.999, respectively. The proposed method is suitable for dealing with high object-density, returning a state-of-the-art performance for counting and locating objects.

A Deep Learning Approach Based on Graphs to Detect Plantation Lines

Feb 05, 2021

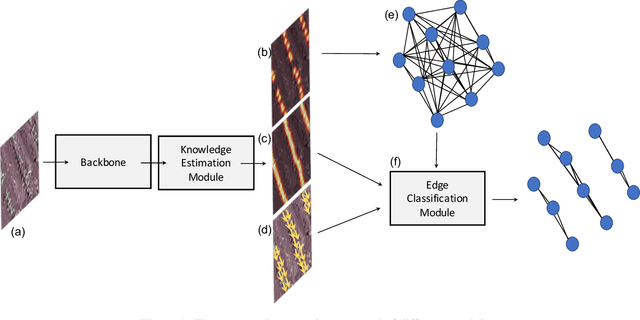

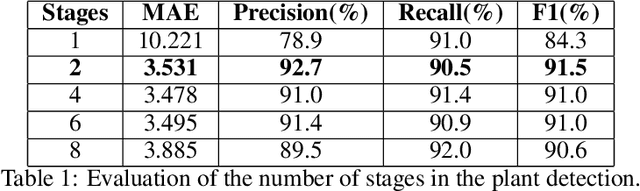

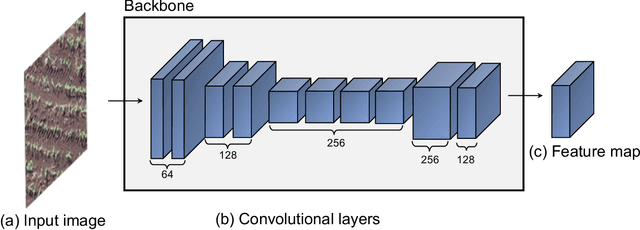

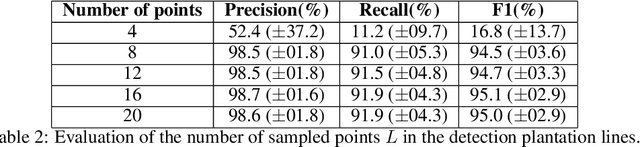

Abstract:Deep learning-based networks are among the most prominent methods to learn linear patterns and extract this type of information from diverse imagery conditions. Here, we propose a deep learning approach based on graphs to detect plantation lines in UAV-based RGB imagery presenting a challenging scenario containing spaced plants. The first module of our method extracts a feature map throughout the backbone, which consists of the initial layers of the VGG16. This feature map is used as an input to the Knowledge Estimation Module (KEM), organized in three concatenated branches for detecting 1) the plant positions, 2) the plantation lines, and 3) for the displacement vectors between the plants. A graph modeling is applied considering each plant position on the image as vertices, and edges are formed between two vertices (i.e. plants). Finally, the edge is classified as pertaining to a certain plantation line based on three probabilities (higher than 0.5): i) in visual features obtained from the backbone; ii) a chance that the edge pixels belong to a line, from the KEM step; and iii) an alignment of the displacement vectors with the edge, also from KEM. Experiments were conducted in corn plantations with different growth stages and patterns with aerial RGB imagery. A total of 564 patches with 256 x 256 pixels were used and randomly divided into training, validation, and testing sets in a proportion of 60\%, 20\%, and 20\%, respectively. The proposed method was compared against state-of-the-art deep learning methods, and achieved superior performance with a significant margin, returning precision, recall, and F1-score of 98.7\%, 91.9\%, and 95.1\%, respectively. This approach is useful in extracting lines with spaced plantation patterns and could be implemented in scenarios where plantation gaps occur, generating lines with few-to-none interruptions.

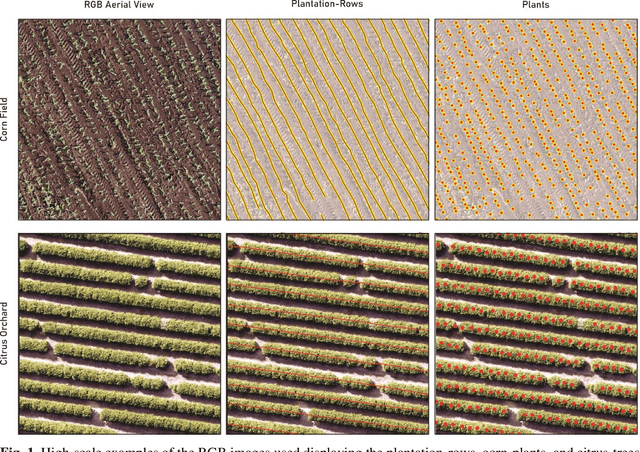

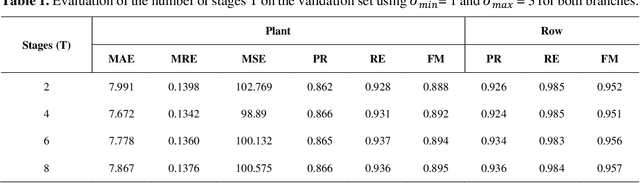

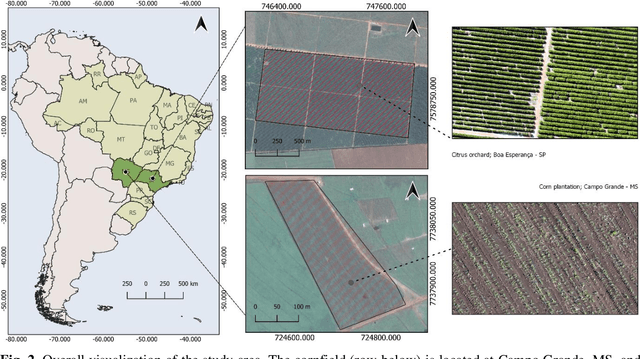

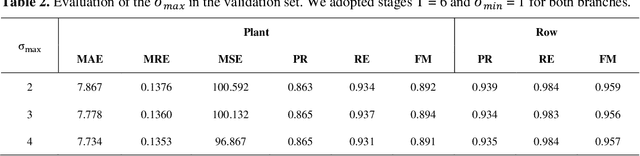

A CNN Approach to Simultaneously Count Plants and Detect Plantation-Rows from UAV Imagery

Jan 02, 2021

Abstract:In this paper, we propose a novel deep learning method based on a Convolutional Neural Network (CNN) that simultaneously detects and geolocates plantation-rows while counting its plants considering highly-dense plantation configurations. The experimental setup was evaluated in a cornfield with different growth stages and in a Citrus orchard. Both datasets characterize different plant density scenarios, locations, types of crops, sensors, and dates. A two-branch architecture was implemented in our CNN method, where the information obtained within the plantation-row is updated into the plant detection branch and retro-feed to the row branch; which are then refined by a Multi-Stage Refinement method. In the corn plantation datasets (with both growth phases, young and mature), our approach returned a mean absolute error (MAE) of 6.224 plants per image patch, a mean relative error (MRE) of 0.1038, precision and recall values of 0.856, and 0.905, respectively, and an F-measure equal to 0.876. These results were superior to the results from other deep networks (HRNet, Faster R-CNN, and RetinaNet) evaluated with the same task and dataset. For the plantation-row detection, our approach returned precision, recall, and F-measure scores of 0.913, 0.941, and 0.925, respectively. To test the robustness of our model with a different type of agriculture, we performed the same task in the citrus orchard dataset. It returned an MAE equal to 1.409 citrus-trees per patch, MRE of 0.0615, precision of 0.922, recall of 0.911, and F-measure of 0.965. For citrus plantation-row detection, our approach resulted in precision, recall, and F-measure scores equal to 0.965, 0.970, and 0.964, respectively. The proposed method achieved state-of-the-art performance for counting and geolocating plants and plant-rows in UAV images from different types of crops.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge