Heinrich H. Bülthoff

Active Perception based Formation Control for Multiple Aerial Vehicles

Jan 23, 2019

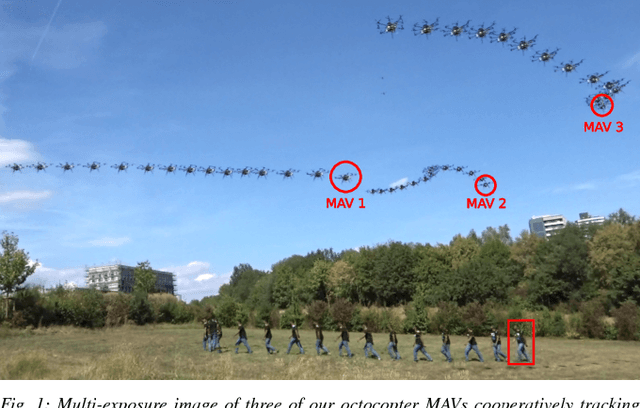

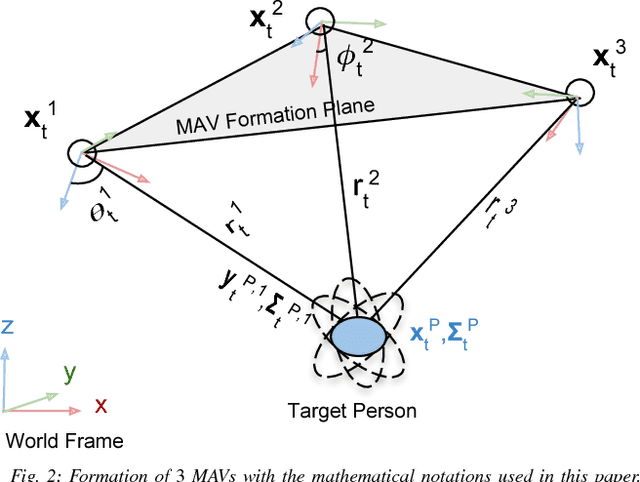

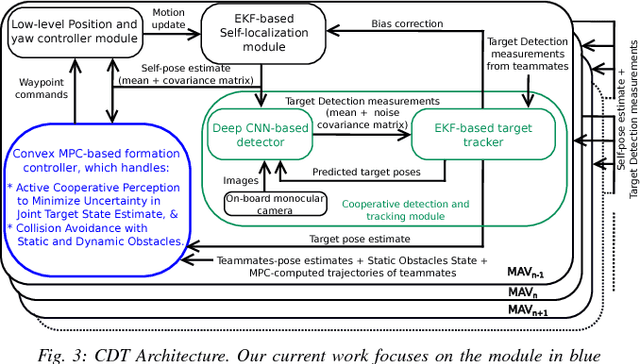

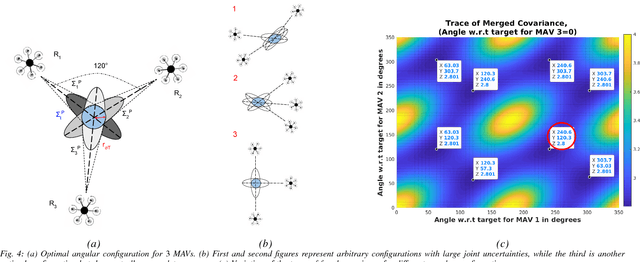

Abstract: Autonomous motion capture (mocap) systems for outdoor scenarios involving flying or mobile cameras rely on i) a robotic front-end to track and follow a human subject in real-time while he/she performs physical activities, and ii) an algorithmic back-end that estimates full body human pose and shape from the saved videos. In this paper we present a novel front-end for our aerial mocap system that consists of multiple micro aerial vehicles (MAVs) with only on-board cameras and computation. In previous work, we presented an approach for cooperative detection and tracking (CDT) of a subject using multiple MAVs. However, it did not ensure optimal view-point configurations of the MAVs to minimize the uncertainty in the person's cooperatively tracked 3D position estimate. In this article we introduce an active approach for CDT. In contrast to cooperatively tracking only the 3D positions of the person, the MAVs can now actively compute optimal local motion plans, resulting in optimal view-point configurations, which minimize the uncertainty in the tracked estimate. We achieve this by decoupling the goal of active tracking as a convex quadratic objective and non-convex constraints corresponding to angular configurations of the MAVs w.r.t. the person. We derive it using Gaussian observation model assumptions within the CDT algorithm. We also show how we embed all the non-convex constraints, including those for dynamic and static obstacle avoidance, as external control inputs in the MPC dynamics. Multiple real robot experiments and comparisons involving 3 MAVs in several challenging scenarios are presented (video link: https://youtu.be/1qWW2zWvRhA). Extensive simulation results demonstrate the scalability and robustness of our approach. ROS-based source code is also provided.
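To illustrate why the angular configuration of the MAVs matters under a Gaussian observation model, here is a minimal 2D sketch. It is not the paper's formulation: the function names, the noise values (`sigma_range`, `sigma_bearing`), and the assumption that each camera is far less certain along its viewing ray than across it are all hypothetical choices for illustration. Independent Gaussian measurements are fused by summing their information (inverse covariance) matrices.

```python
import math

def view_covariance(angle, sigma_range=1.0, sigma_bearing=0.1):
    """2x2 covariance of one camera's position measurement (assumed model):
    large variance along the viewing ray, small variance across it."""
    c, s = math.cos(angle), math.sin(angle)
    a, b = sigma_range ** 2, sigma_bearing ** 2
    # R * diag(a, b) * R^T for a rotation R by `angle`
    return [[a * c * c + b * s * s, (a - b) * c * s],
            [(a - b) * c * s, a * s * s + b * c * c]]

def inv2(m):
    """Inverse of a 2x2 matrix."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det],
            [-m[1][0] / det, m[0][0] / det]]

def fused_trace(angles):
    """Trace of the fused covariance when cameras view the target from `angles`."""
    info = [[0.0, 0.0], [0.0, 0.0]]
    for angle in angles:
        inv = inv2(view_covariance(angle))
        for i in range(2):
            for j in range(2):
                info[i][j] += inv[i][j]  # information matrices add up
    fused = inv2(info)
    return fused[0][0] + fused[1][1]

if __name__ == "__main__":
    for deg in (0, 30, 60, 90):
        print(deg, fused_trace([0.0, math.radians(deg)]))
```

In this toy model with two cameras, the fused uncertainty shrinks as the viewing directions move from parallel toward perpendicular, which is the kind of effect an angular-configuration constraint is meant to exploit.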

Deep Neural Network-based Cooperative Visual Tracking through Multiple Micro Aerial Vehicles

Feb 05, 2018

Abstract: Multi-camera full-body pose capture of humans and animals in outdoor environments is a highly challenging problem. Our approach to it involves a team of cooperating micro aerial vehicles (MAVs) with on-board cameras only. The key enabling aspect of our approach is the on-board person detection and tracking method. Recent state-of-the-art methods based on deep neural networks (DNN) are highly promising in this context. However, real-time DNNs are severely constrained in input data dimensions, in contrast to available camera resolutions. Therefore, DNNs often fail at objects with small scale or far away from the camera, which are typical characteristics of a scenario with aerial robots. Thus, the core problem addressed in this paper is how to achieve on-board, real-time, continuous and accurate vision-based detections using DNNs for visual person tracking through MAVs. Our solution leverages cooperation among multiple MAVs. First, each MAV fuses its own detections with those obtained by other MAVs to perform cooperative visual tracking. This allows for predicting future poses of the tracked person, which are used to selectively process only the relevant regions of future images, even at high resolutions. Consequently, using our DNN-based detector we are able to continuously track even distant humans with high accuracy and speed. We demonstrate the efficiency of our approach through real robot experiments involving two aerial robots tracking a person, while maintaining an active perception-driven formation. Our solution runs fully on-board our MAV's CPU and GPU, with no remote processing. ROS-based source code is provided for the benefit of the community.
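The idea of processing only the relevant region of a high-resolution image can be sketched as follows. This is an illustrative assumption, not the released code: the function `select_roi`, its parameters, and the rule of sizing the crop as `k` standard deviations around the predicted pixel location are all hypothetical.

```python
def select_roi(pred_u, pred_v, sigma_px, image_w, image_h, k=3.0, min_size=64):
    """Return (left, top, right, bottom) of a square crop around a predicted
    pixel location, widened by the prediction uncertainty and clamped to the image.

    pred_u, pred_v: predicted image coordinates of the person (pixels)
    sigma_px:       standard deviation of that prediction (pixels)
    k:              how many standard deviations the crop should cover (assumed)
    min_size:       smallest crop side length allowed (assumed)
    """
    half = max(k * sigma_px, min_size / 2)
    left = max(0, int(pred_u - half))
    top = max(0, int(pred_v - half))
    right = min(image_w, int(pred_u + half))
    bottom = min(image_h, int(pred_v + half))
    return left, top, right, bottom

if __name__ == "__main__":
    # A confident prediction near the image center gives a small crop,
    # which can then be resized to the detector's fixed input size.
    print(select_roi(1000, 500, 20, 2040, 1086))
```

A tighter prediction yields a smaller crop, so a distant person occupies more of the detector's fixed-size input than they would in a downscaled full frame.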

Decentralized Simultaneous Multi-target Exploration using a Connected Network of Multiple Robots

Mar 16, 2017

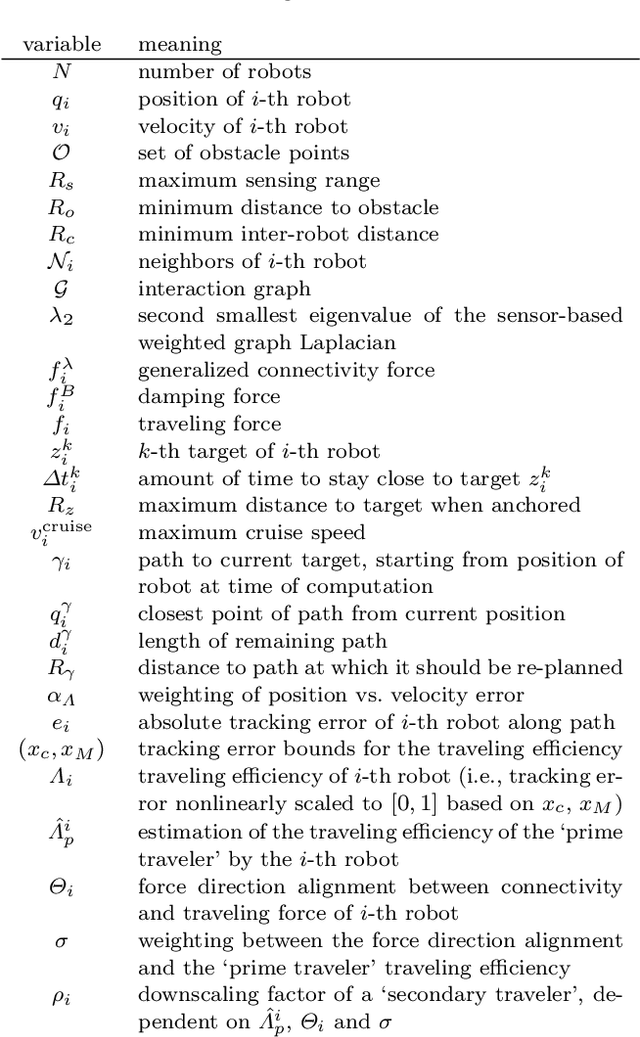

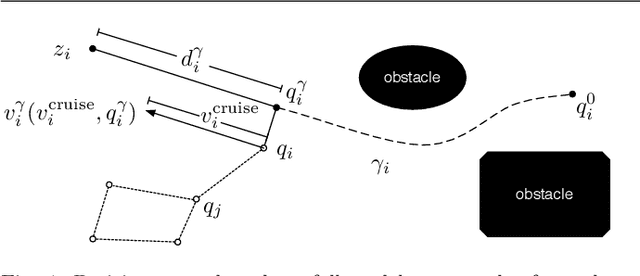

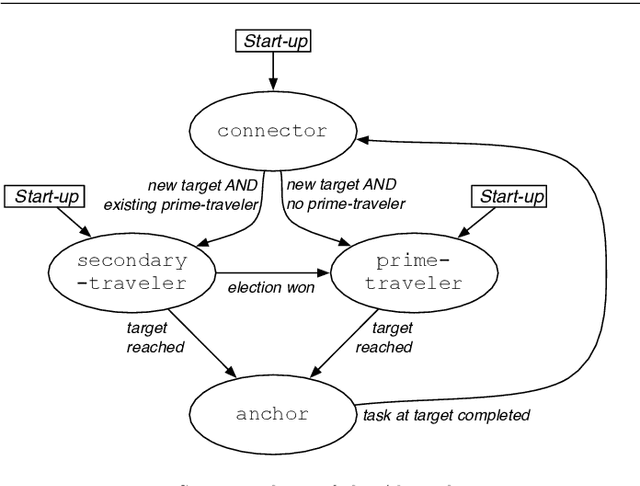

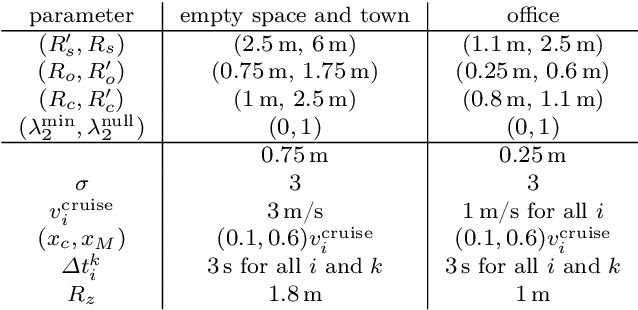

Abstract: This paper presents a novel decentralized control strategy for a multi-robot system that enables parallel multi-target exploration while ensuring a time-varying connected topology in cluttered 3D environments. Flexible continuous connectivity is guaranteed by building upon a recent connectivity maintenance method, in which limited range, line-of-sight visibility, and collision avoidance are taken into account at the same time. Completeness of the decentralized multi-target exploration algorithm is guaranteed by dynamically assigning the robots with different motion behaviors during the exploration task. One major group is subject to a suitable downscaling of the main traveling force based on the traveling efficiency of the current leader and the direction alignment between traveling and connectivity force. This supports the leader in always reaching its current target and, on a larger time horizon, that the whole team realizes the overall task in finite time. Extensive Monte Carlo simulations with a group of several quadrotor UAVs show the scalability and effectiveness of the proposed method and experiments validate its practicability.
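As a rough illustration of what a connected topology under limited range means, the sketch below checks whether a set of robot positions forms a connected graph when two robots are linked only if they are within `max_range` of each other. This is a plain centralized reachability test, not the decentralized connectivity maintenance method the abstract builds on; line-of-sight visibility is ignored, and the function name and parameters are hypothetical.

```python
import math
from collections import deque

def is_connected(positions, max_range):
    """True if every robot can reach every other robot through a chain of
    links, where a link exists when two robots are within max_range."""
    n = len(positions)
    if n == 0:
        return True
    seen = {0}
    queue = deque([0])
    while queue:  # breadth-first search from robot 0
        i = queue.popleft()
        for j in range(n):
            if j not in seen and math.dist(positions[i], positions[j]) <= max_range:
                seen.add(j)
                queue.append(j)
    return len(seen) == n

if __name__ == "__main__":
    chain = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (2.0, 0.0, 1.0)]
    print(is_connected(chain, max_range=1.5))
```

In the chain example the two end robots are out of range of each other, yet the team stays connected through the middle robot, which is why individual links can be dropped or gained over time without losing connectivity.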

Decentralized Rigidity Maintenance Control with Range Measurements for Multi-Robot Systems

Sep 04, 2014

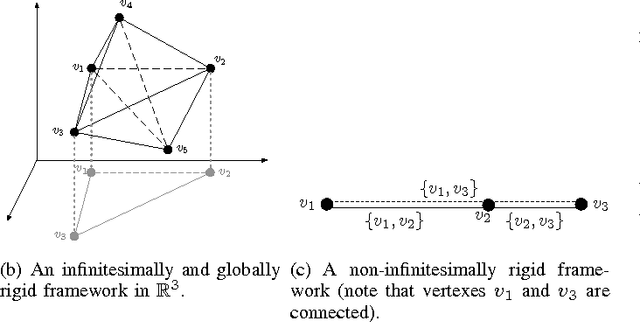

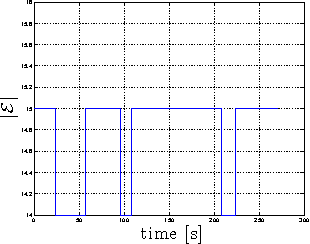

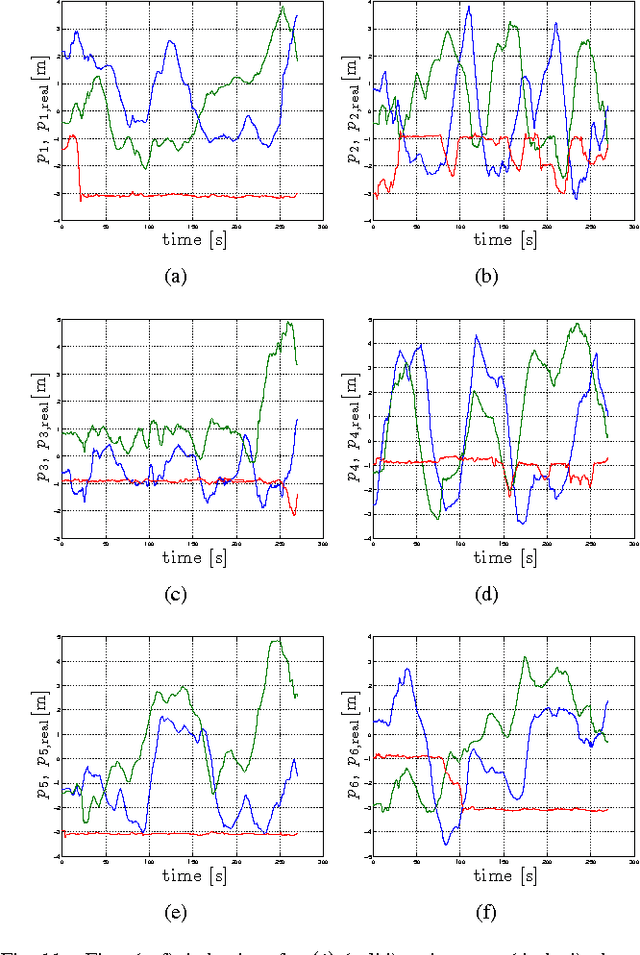

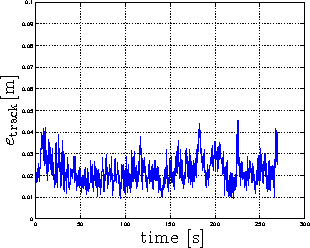

Abstract: This work proposes a fully decentralized strategy for maintaining the formation rigidity of a multi-robot system using only range measurements, while still allowing the graph topology to change freely over time. In this direction, a first contribution of this work is an extension of rigidity theory to weighted frameworks and the rigidity eigenvalue, which when positive ensures the infinitesimal rigidity of the framework. We then propose a distributed algorithm for estimating a common relative position reference frame amongst a team of robots with only range measurements in addition to one agent endowed with the capability of measuring the bearing to two other agents. This first estimation step is embedded into a subsequent distributed algorithm for estimating the rigidity eigenvalue associated with the weighted framework. The estimate of the rigidity eigenvalue is finally used to generate a local control action for each agent that both maintains the rigidity property and enforces additional constraints such as collision avoidance and sensing/communication range limits and occlusions. As an additional feature of our approach, the communication and sensing links among the robots are also left free to change over time while preserving rigidity of the whole framework. The proposed scheme is then experimentally validated with a robotic testbed consisting of 6 quadrotor UAVs operating in a cluttered environment.
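Infinitesimal rigidity can be checked in a simple centralized way, sketched below for unweighted 2D frameworks. This swaps the paper's distributed rigidity-eigenvalue estimate for the standard rank test on the rigidity matrix (a 2D framework with n >= 2 nodes is infinitesimally rigid when the rank equals 2n - 3); the function names and the tolerance are assumptions made for illustration.

```python
def rigidity_matrix(points, edges):
    """One row per edge (i, j): (p_i - p_j) in node i's columns and
    (p_j - p_i) in node j's columns, zeros elsewhere."""
    rows = []
    for i, j in edges:
        row = [0.0] * (2 * len(points))
        dx = points[i][0] - points[j][0]
        dy = points[i][1] - points[j][1]
        row[2 * i], row[2 * i + 1] = dx, dy
        row[2 * j], row[2 * j + 1] = -dx, -dy
        rows.append(row)
    return rows

def matrix_rank(rows, tol=1e-9):
    """Rank via Gaussian elimination with partial pivoting."""
    m = [row[:] for row in rows]
    n_cols = len(m[0]) if m else 0
    rank = 0
    for col in range(n_cols):
        if rank == len(m):
            break
        pivot = max(range(rank, len(m)), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, len(m)):
            f = m[r][col] / m[rank][col]
            for c in range(col, n_cols):
                m[r][c] -= f * m[rank][c]
        rank += 1
    return rank

def is_infinitesimally_rigid_2d(points, edges):
    """Rank test for a 2D framework with at least two nodes."""
    return matrix_rank(rigidity_matrix(points, edges)) == 2 * len(points) - 3

if __name__ == "__main__":
    square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    ring = [(0, 1), (1, 2), (2, 3), (3, 0)]
    print(is_infinitesimally_rigid_2d(square, ring))             # square can shear
    print(is_infinitesimally_rigid_2d(square, ring + [(0, 2)]))  # diagonal braces it
```

The square with four edges can shear into a parallelogram without changing any edge length, so it fails the test; adding one diagonal removes that motion.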
