Heather Culbertson

Developing a Modular Toolkit for Rapid Prototyping of Wearable Vibrotactile Haptic Harness

Sep 06, 2024

Abstract:This paper presents a toolkit for rapid harness prototyping. These wearable structures attach vibrotactile actuators to the body using modular elements such as 3D-printed joints, laser-cut or vinyl-cut sheets, and magnetic clasps. This facilitates easy customization and assembly. The toolkit's primary objective is to simplify the design of haptic wearables, making research in this field easier and more approachable.

An Evaluation of Three Distance Measurement Technologies for Flying Light Specks

Aug 19, 2023

Abstract:This study evaluates the accuracy of three different types of time-of-flight sensors to measure distance. We envision the possible use of these sensors to localize swarms of flying light specks (FLSs) to illuminate objects and avatars of a metaverse. An FLS is a miniature-sized drone configured with RGB light sources. It is unable to illuminate a point cloud by itself. However, the inter-FLS relationship effect of an organizational framework will compensate for the simplicity of each individual FLS, enabling a swarm of cooperating FLSs to illuminate complex shapes and render haptic interactions. Distance between FLSs is an important criterion of the inter-FLS relationship. We consider sensors that use radio frequency (UWB), infrared light (IR), and sound (ultrasonic) to quantify this metric. The results show that only one sensor is able to measure distances as small as 1 cm with high accuracy. A sensor may require a calibration process that impacts its accuracy in measuring distance.
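For readers unfamiliar with time-of-flight ranging, the following minimal sketch illustrates the general principle behind echo-style measurements: distance is the propagation speed multiplied by the travel time, halved for a round trip. It is not taken from the paper. The function names and constants are illustrative assumptions, and the speed of sound assumes dry air at about 20 °C.

```python
# Illustrative only: echo-style time-of-flight ranging (not the paper's code).
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio frequency (UWB) and infrared, in vacuum
SPEED_OF_SOUND_M_S = 343.0          # assumption: dry air at about 20 degrees C


def tof_distance_m(round_trip_s: float, speed_m_s: float) -> float:
    """Distance to a target given the round-trip travel time of a signal."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The signal travels to the target and back, so halve the path length.
    return speed_m_s * round_trip_s / 2.0


def round_trip_for_distance_s(distance_m: float, speed_m_s: float) -> float:
    """Round-trip travel time a sensor must resolve for a given distance."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / speed_m_s


# Round-trip times corresponding to the 1 cm distance mentioned in the abstract.
light_1cm = round_trip_for_distance_s(0.01, SPEED_OF_LIGHT_M_S)
sound_1cm = round_trip_for_distance_s(0.01, SPEED_OF_SOUND_M_S)
print(f"light: {light_1cm:.3e} s, sound: {sound_1cm:.3e} s")
```

Under these assumptions, a 1 cm separation corresponds to a round trip of tens of picoseconds for light and tens of microseconds for sound, which hints at why very short distances are demanding for optical and radio-frequency timing.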

Dronevision: An Experimental 3D Testbed for Flying Light Specks

Aug 19, 2023

Abstract:Today's robotic laboratories for drones are housed in large rooms. At times, they are the size of a warehouse. These spaces are typically equipped with permanent devices to localize the drones, e.g., Vicon infrared cameras. Significant time is invested to fine-tune the localization apparatus to compute and control the position of the drones. One may use these laboratories to develop a 3D multimedia system with miniature-sized drones configured with light sources. As an alternative, this brave new idea paper envisions shrinking these room-sized laboratories to the size of a cube or cuboid that sits on a desk and costs less than 10K dollars. The resulting Dronevision (DV) will be the size of a 1990s television. In addition to light sources, its Flying Light Specks (FLSs) will be network-enabled drones with storage and processing capability to implement decentralized algorithms. The DV will include a localization technique to expedite development of 3D displays. It will act as a haptic interface for a user to interact with and manipulate the 3D virtual illuminations. It will empower an experimenter to design, implement, test, debug, and maintain software and hardware that realize novel algorithms in the comfort of their office without having to reserve a laboratory. In addition to enhancing productivity, it will improve the safety of the experimenter by minimizing the likelihood of accidents. This paper introduces the concept of a DV, the research agenda one may pursue using this device, and our plans to realize one.

Active Acoustic Sensing for Robot Manipulation

Aug 03, 2023

Abstract:Perception in robot manipulation has been actively explored with the goal of advancing and integrating vision and touch for global and local feature extraction. However, it is difficult to perceive certain object internal states, and the integration of visual and haptic perception is not compact and is easily biased. We propose to address these limitations by developing an active acoustic sensing method for robot manipulation. Active acoustic sensing relies on the resonant properties of the object, which are related to its material, shape, internal structure, and contact interactions with the gripper and environment. The sensor consists of a vibration actuator paired with a piezo-electric microphone. The actuator generates a waveform, and the microphone tracks the waveform's propagation and distortion as it travels through the object. This paper presents the sensing principles, hardware design, simulation development, and evaluation of physical and simulated sensory data under different conditions as a proof-of-concept. This work aims to provide fundamentals on a useful tool for downstream robot manipulation tasks using active acoustic sensing, such as object recognition, grasping point estimation, object pose estimation, and external contact formation detection.

Development and Evaluation of a Learning-based Model for Real-time Haptic Texture Rendering

Dec 27, 2022

Abstract:Current Virtual Reality (VR) environments lack the rich haptic signals that humans experience during real-life interactions, such as the sensation of texture during lateral movement on a surface. Adding realistic haptic textures to VR environments requires a model that generalizes to variations of a user's interaction and to the wide variety of existing textures in the world. Current methodologies for haptic texture rendering exist, but they usually develop one model per texture, resulting in low scalability. We present a deep learning-based action-conditional model for haptic texture rendering and evaluate its perceptual performance in rendering realistic texture vibrations through a multi-part human user study. This model is unified over all materials and uses data from a vision-based tactile sensor (GelSight) to render the appropriate surface conditioned on the user's action in real time. For rendering texture, we use a high-bandwidth vibrotactile transducer attached to a 3D Systems Touch device. The results of our user study show that our learning-based method creates high-frequency texture renderings with comparable or better quality than state-of-the-art methods without the need for learning a separate model per texture. Furthermore, we show that the method is capable of rendering previously unseen textures using a single GelSight image of their surface.

Masking Effects in Combined Hardness and Stiffness Rendering Using an Encountered-Type Haptic Display

Oct 13, 2021

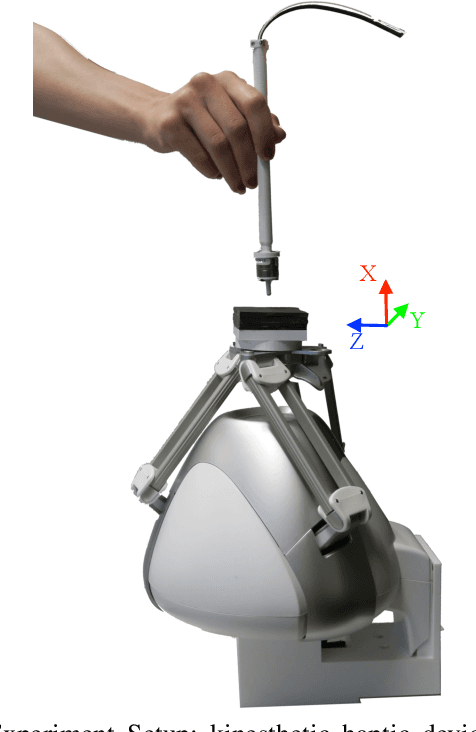

Abstract:Rendering stable hard surfaces is an important problem in haptics for many tasks, including training simulators for orthopedic surgery or dentistry. Current impedance devices cannot provide enough force and stiffness to render a wall, and the high friction and inertia of admittance devices make it difficult to render free space. We propose to address these limitations by combining haptic augmented reality, untethered haptic interaction, and an encountered-type haptic display. We attach a plate with the desired hardness on the kinesthetic device's end-effector, which the user interacts with using an untethered stylus. This method allows us to directly change the hardness of the end-effector based on the rendered object. In this paper, we evaluate how changing the hardness of the end-effector can mask the device's stiffness and affect the user's perception. The results of our human subject experiment indicate that when the end-effector is made of a hard material, it is difficult for users to perceive when the underlying stiffness being rendered by the device is changed, but this stiffness change is easy to distinguish while the end-effector is made of a soft material. These results show promise for our approach in avoiding the limitations of haptic devices when rendering hard surfaces.

Investigating Social Haptic Illusions for Tactile Stroking (SHIFTS)

Mar 02, 2020

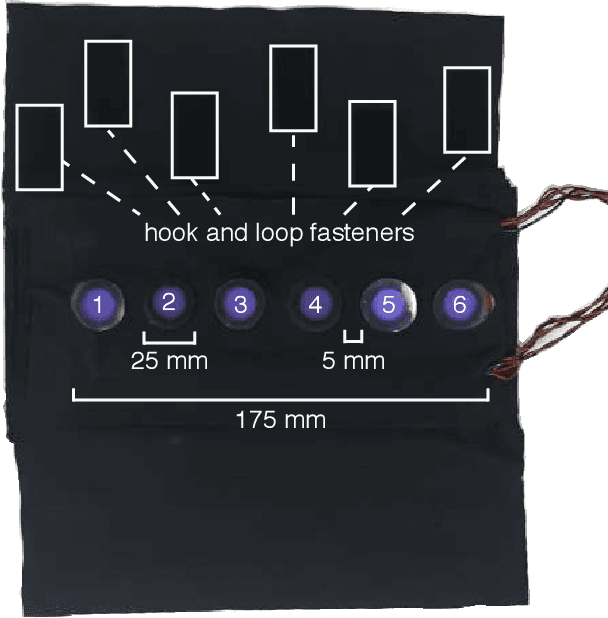

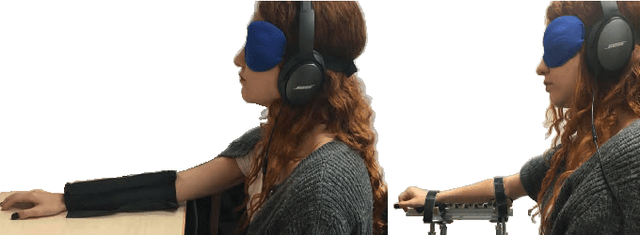

Abstract:A common and effective form of social touch is stroking on the forearm. We seek to replicate this stroking sensation using haptic illusions. This work compares two methods that provide sequential discrete stimulation: sequential normal indentation and sequential lateral skin-slip using discrete actuators. Our goals are to understand which form of stimulation more effectively creates a continuous stroking sensation, and how many discrete contact points are needed. We performed a study with 20 participants in which they rated sensations from the haptic devices on continuity and pleasantness. We found that lateral skin-slip created a more continuous sensation, and decreasing the number of contact points decreased the continuity. These results inform the design of future wearable haptic devices and the creation of haptic signals for effective social communication.

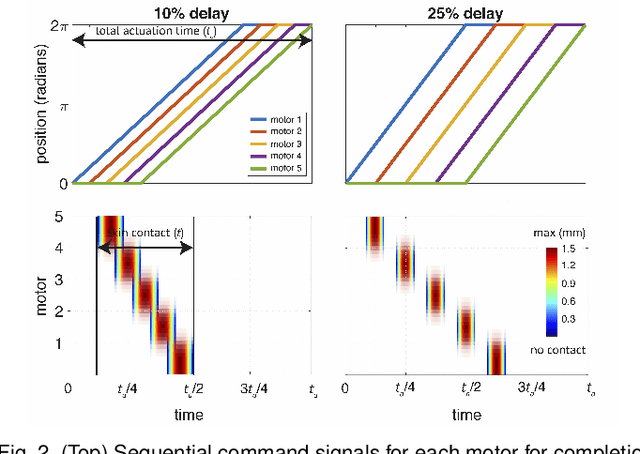

Understanding Continuous and Pleasant Linear Sensations on the Forearm from a Sequential Discrete Lateral Skin-Slip Haptic Device

Sep 03, 2019

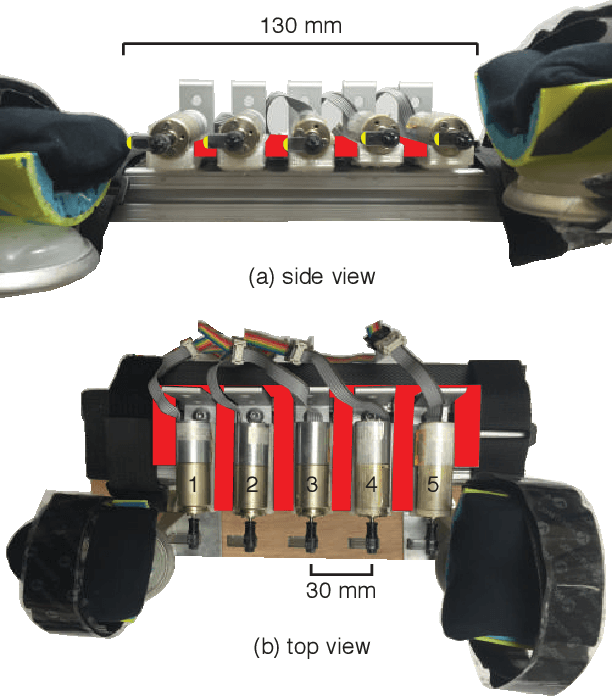

Abstract:A continuous stroking sensation on the skin can convey messages or emotion cues. We seek to induce this sensation using a combination of illusory motion and lateral stroking via a haptic device. Our system provides discrete lateral skin-slip on the forearm with rotating tactors, which independently provide lateral skin-slip in a timed sequence. We vary the sensation by changing the angular velocity and delay between adjacent tactors, such that the apparent speed of the perceived stroke ranges from 2.5 to 48.2 cm/s. We investigated which actuation parameters create the most pleasant and continuous sensations through a user study with 16 participants. On average, the sensations were rated by participants as both continuous and pleasant. The most continuous and pleasant sensations were created by apparent speeds of 7.7 and 5.1 cm/s, respectively. We also investigated the effect of spacing between contact points on the pleasantness and continuity of the stroking sensation, and found that the users experience a pleasant and continuous linear sensation even when the space between contact points is relatively large (40 mm). Understanding how sequential discrete lateral skin-slip creates continuous linear sensations can influence the design and control of future wearable haptic devices.
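As a rough illustration of how a timed sequence of discrete contacts maps to an apparent stroke speed, the sketch below uses the simplifying assumption that apparent speed equals contact spacing divided by the onset delay between adjacent tactors. This ignores the tactors' angular velocity, which the abstract says is also varied, so it is not the paper's model. The function names and the tactor count used in the example are hypothetical.

```python
# Illustrative simplification (not the paper's method):
# apparent_speed = spacing / onset_delay between adjacent tactors.

def onset_delay_s(spacing_cm: float, apparent_speed_cm_s: float) -> float:
    """Delay between adjacent tactor onsets for a target apparent speed."""
    if spacing_cm <= 0 or apparent_speed_cm_s <= 0:
        raise ValueError("spacing and speed must be positive")
    return spacing_cm / apparent_speed_cm_s


def onset_schedule_s(num_tactors: int, spacing_cm: float,
                     apparent_speed_cm_s: float) -> list[float]:
    """Onset time of each tactor, with the first tactor starting at t = 0."""
    if num_tactors < 1:
        raise ValueError("need at least one tactor")
    delay = onset_delay_s(spacing_cm, apparent_speed_cm_s)
    return [i * delay for i in range(num_tactors)]


# Hypothetical example: 4 tactors, 4.0 cm (40 mm) apart, 5.1 cm/s target speed.
for index, t in enumerate(onset_schedule_s(4, 4.0, 5.1)):
    print(f"tactor {index}: start at {t:.3f} s")
```

The spacing and speed in the example are borrowed from figures quoted in the abstract purely for illustration; the abstract does not state that this particular combination was tested.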
