Shahram Ghandeharizadeh

Flight Patterns for Swarms of Drones

Dec 17, 2024

Abstract: We present flight patterns for a collision-free passage of swarms of drones through one or more openings. The narrow openings provide drones with access to an infrastructure component such as charging stations to charge their depleted batteries and hangars for storage. The flight patterns are a staging area (queues) that match the rate at which an infrastructure component and its openings process drones. They prevent collisions and may implement different policies that control the order in which drones pass through an opening. We illustrate the flight patterns with a 3D display that uses drones configured with light sources to illuminate shapes.

An Evaluation of Three Distance Measurement Technologies for Flying Light Specks

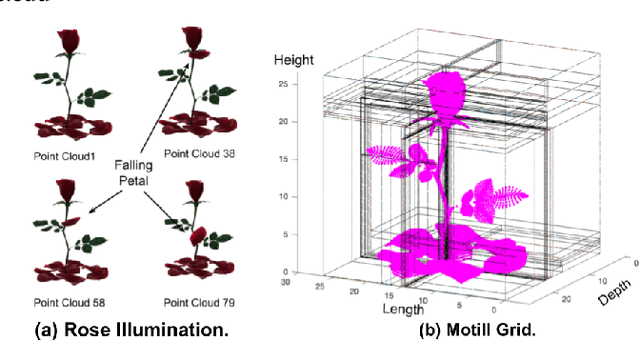

Aug 19, 2023

Abstract: This study evaluates the accuracy of three different types of time-of-flight sensors to measure distance. We envision the possible use of these sensors to localize swarms of flying light specks (FLSs) to illuminate objects and avatars of a metaverse. An FLS is a miniature-sized drone configured with RGB light sources. It is unable to illuminate a point cloud by itself. However, the inter-FLS relationship effect of an organizational framework will compensate for the simplicity of each individual FLS, enabling a swarm of cooperating FLSs to illuminate complex shapes and render haptic interactions. Distance between FLSs is an important criterion of the inter-FLS relationship. We consider sensors that use radio frequency (UWB), infrared light (IR), and sound (ultrasonic) to quantify this metric. Obtained results show only one sensor is able to measure distances as small as 1 cm with a high accuracy. A sensor may require a calibration process that impacts its accuracy in measuring distance.

Dronevision: An Experimental 3D Testbed for Flying Light Specks

Aug 19, 2023

Abstract: Today's robotic laboratories for drones are housed in a large room. At times, they are the size of a warehouse. These spaces are typically equipped with permanent devices to localize the drones, e.g., Vicon infrared cameras. Significant time is invested to fine-tune the localization apparatus to compute and control the position of the drones. One may use these laboratories to develop a 3D multimedia system with miniature-sized drones configured with light sources. As an alternative, this brave new idea paper envisions shrinking these room-sized laboratories to the size of a cube or cuboid that sits on a desk and costs less than 10K dollars. The resulting Dronevision (DV) will be the size of a 1990s television. In addition to light sources, its Flying Light Specks (FLSs) will be network-enabled drones with storage and processing capability to implement decentralized algorithms. The DV will include a localization technique to expedite development of 3D displays. It will act as a haptic interface for a user to interact with and manipulate the 3D virtual illuminations. It will empower an experimenter to design, implement, test, debug, and maintain software and hardware that realize novel algorithms in the comfort of their office without having to reserve a laboratory. In addition to enhancing productivity, it will improve safety of the experimenter by minimizing the likelihood of accidents. This paper introduces the concept of a DV, the research agenda one may pursue using this device, and our plans to realize one.

Safety in the Emerging Holodeck Applications

Aug 17, 2022

Abstract: Technological advances in holography, robotics, and 3D printing are starting to realize the vision of a holodeck. These immersive 3D displays must address user safety from the start to be viable. A holodeck's safety challenges are novel because its applications will involve explicit physical interactions between humans and synthesized 3D objects and experiences in real-time. This pioneering paper first proposes research directions for modeling safety in future holodeck applications from traditional physical human-robot interaction modeling. Subsequently, we propose a test-bed to enable safety validation of physical human-robot interaction based on existing augmented reality and virtual simulation technology.

Display of 3D Illuminations using Flying Light Specks

Jul 18, 2022

Abstract: This paper presents techniques to display 3D illuminations using Flying Light Specks (FLSs). Each FLS is a miniature drone (hundreds of micrometers in size) with one or more light sources to generate different colors and textures with adjustable brightness. It is network enabled with a processor and local storage. Synchronized swarms of cooperating FLSs render illumination of virtual objects in a pre-specified 3D volume, an FLS display. We present techniques to display both static and motion illuminations. Our display techniques consider the limited flight time of an FLS on a fully charged battery and the duration of time to charge the FLS battery. Moreover, our techniques assume failure of FLSs is the norm rather than an exception. We present a hardware and a software architecture for an FLS display along with a family of techniques to compute flight paths of FLSs for illuminations. With motion illuminations, one technique (ICF) minimizes the overall distance traveled by the FLSs significantly when compared with the other techniques.

Holodeck: Immersive 3D Displays Using Swarms of Flying Light Specks

Nov 02, 2021

Abstract: Unmanned Aerial Vehicles (UAVs) have moved beyond a platform for hobbyists to enable environmental monitoring, journalism, film industry, search and rescue, package delivery, and entertainment. This paper describes 3D displays using swarms of flying light specks (FLSs). An FLS is a small (hundreds of micrometers in size) UAV with one or more light sources to generate different colors and textures with adjustable brightness. A synchronized swarm of FLSs renders an illumination in a pre-specified 3D volume, an FLS display. An FLS display provides true depth, enabling a user to perceive a scene more completely by analyzing its illumination from different angles. An FLS display may either be non-immersive or immersive. Both will support 3D acoustics. Non-immersive FLS displays may be the size of a 1980s computer monitor, enabling a surgical team to observe and control micro robots performing heart surgery inside a patient's body. Immersive FLS displays may be the size of a room, enabling users to interact with objects, e.g., a rock, a teapot. An object with behavior will be constructed using FLS-matters. FLS-matter will enable a user to touch and manipulate an object, e.g., a user may pick up a teapot or throw a rock. An immersive and interactive FLS display will approximate Star Trek's Holodeck. A successful realization of the research ideas presented in this paper will provide fundamental insights into implementing a Holodeck using swarms of FLSs. A Holodeck will transform the future of human communication and perception, and how we interact with information and data. It will revolutionize the future of how we work, learn, play and entertain, receive medical care, and socialize.
