Harsh Tataria

Channel Sparsity Variation and Model-Based Analysis on 6, 26, and 132 GHz Measurements

Feb 17, 2023

Abstract: In this paper, the level of sparsity is examined at 6, 26, and 132 GHz carrier frequencies by conducting channel measurements in an indoor office environment. Using the Gini index (a value between 0 and 1) as a metric for characterizing sparsity, we show that increasing the carrier frequency leads to higher levels of sparsity. The measured channel impulse responses are used to derive a Third Generation Partnership Project (3GPP)-style propagation model, which is then used to calculate the Gini index so that channel sparsity can be compared between the measurements and simulations based on the 3GPP model. Our results show that the mean value of the Gini index in the measurements is more than twice that in the simulations, implying that the 3GPP channel model does not capture the effects of sparsity in the delay domain as frequency increases. In addition, a new measurement-based intra-cluster power allocation model is proposed to characterize the effects of sparsity in the delay domain of the 3GPP channel model. The accuracy of the proposed model is analyzed using theoretical derivations and simulations. Using the derived intra-cluster power allocation model, the mean value of the Gini index is 0.97, while the spread of variability is restricted to 0.01, demonstrating that the proposed model is suitable for 3GPP-type channels. To the best of our knowledge, this paper is the first to perform measurements and analysis at three different frequencies for the evaluation of channel sparsity in the same environment.
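
The abstract names the Gini index as its sparsity metric but does not state the formula. A minimal sketch, assuming the Hurley and Rickard definition that is widely used as a sparsity measure (the paper's exact normalization may differ): sort the entries ascending, c_(1) ≤ ... ≤ c_(N), and compute G = 1 - 2 Σ_k (c_(k)/||c||_1)((N - k + 1/2)/N). Applying it to the per-tap powers |h(τ)|² of a power delay profile is also an assumption here. A flat profile gives 0, while a single dominant tap gives 1 - 1/N, which approaches 1 as the number of taps grows.

```python
import numpy as np

def gini_index(c):
    """Gini index of a non-negative vector (Hurley-Rickard sparsity measure).

    Returns 0 for a perfectly flat vector and 1 - 1/N when all energy sits in one entry.
    Absolute values are taken, so pass per-tap powers |h(tau)|^2 for a power delay profile.
    """
    c = np.sort(np.abs(np.asarray(c, dtype=float)))  # ascending order c_(1) <= ... <= c_(N)
    n = c.size
    total = c.sum()
    if total == 0:
        raise ValueError("Gini index is undefined for an all-zero vector")
    k = np.arange(1, n + 1)
    return 1.0 - 2.0 * np.sum((c / total) * (n - k + 0.5) / n)

# Hypothetical 100-tap profiles: dense (flat) versus sparse (one dominant tap).
dense_pdp = np.ones(100)
sparse_pdp = np.zeros(100)
sparse_pdp[3] = 1.0
print(gini_index(dense_pdp), gini_index(sparse_pdp))
```

Because the index depends only on the normalized, sorted profile, it is unaffected by overall path loss, which is what makes it usable for comparing sparsity across carrier frequencies.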

Massive MIMO Evolution Towards 3GPP Release 18

Oct 15, 2022

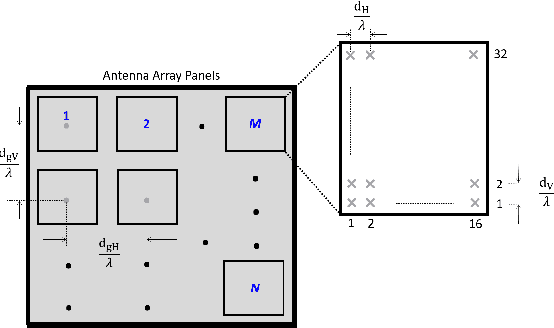

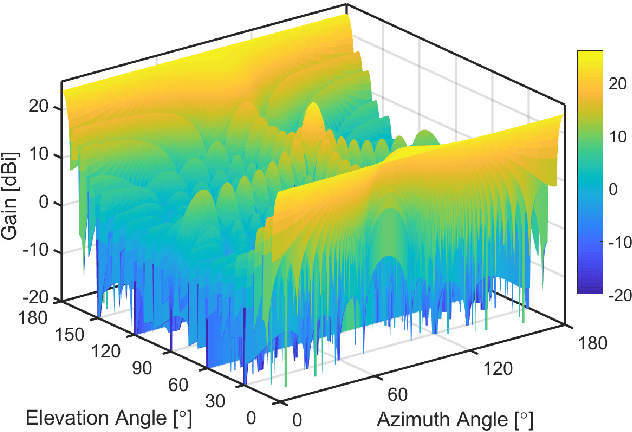

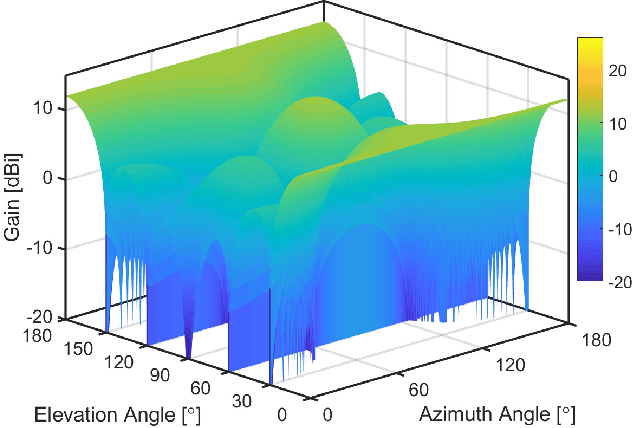

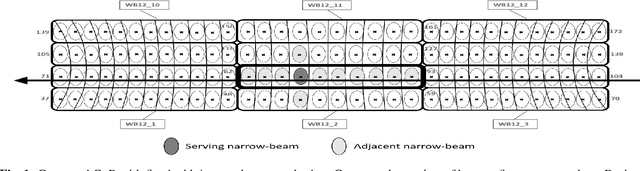

Abstract: Since the introduction of fifth-generation new radio (5G-NR) in Third Generation Partnership Project (3GPP) Release 15, swift progress has been made in evolving 5G, with 3GPP Release 18 now emerging. A critical aspect is the design of massive multiple-input multiple-output (MIMO) technology. Along these lines, this paper makes several important contributions. We provide a comprehensive overview of the evolution of standardized massive MIMO features from 3GPP Release 15 to 17 for both time- and frequency-division duplex operation across frequency ranges FR-1 and FR-2. We analyze the progress on channel state information (CSI) and beam management frameworks, and present enhancements for uplink CSI. We shed light on emerging 3GPP Release 18 problems requiring imminent attention. These include advanced codebook design and sounding reference signal design for coherent joint transmission (CJT) with multiple transmission/reception points (multi-TRPs). We discuss advancements in uplink demodulation reference signal design, mobility enhancements that provide accurate CSI estimates, and a unified transmission configuration indicator (TCI) framework tailored for FR-2 bands. For each concept, we provide system-level simulation results to highlight its performance benefits. Via field trials in an outdoor environment at Shanghai Jiaotong University, we demonstrate the gains of multi-TRP CJT relative to single-TRP transmission at 3.7 GHz.
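
To make the intuition behind multi-TRP CJT concrete, here is an idealized sketch, not the paper's simulation setup or any 3GPP codebook: it assumes perfect CSI, phase-synchronized TRPs, i.i.d. Rayleigh channels, and a single total transmit-power budget. Under these assumptions the two TRPs act as one distributed array, and maximum-ratio transmission (MRT) over the stacked channel yields an SNR proportional to ||h₁||² + ||h₂||², compared with ||h₁||² for a single TRP. The antenna counts, the 3 dB weaker second TRP, and the helper names are hypothetical.

```python
import numpy as np

def mrt_snr(h, tx_power, noise_power):
    """Received SNR with maximum-ratio transmission (MRT) on channel vector h.

    With the unit-norm precoder w = conj(h) / ||h||, the effective gain |h . w|^2 equals ||h||^2.
    """
    w = np.conj(h) / np.linalg.norm(h)
    return tx_power * np.abs(h @ w) ** 2 / noise_power

def rayleigh(n, gain, rng):
    """i.i.d. Rayleigh-fading channel vector with average per-antenna power `gain` (assumption)."""
    return np.sqrt(gain / 2) * (rng.standard_normal(n) + 1j * rng.standard_normal(n))

rng = np.random.default_rng(1)
h_trp1 = rayleigh(32, 1.0, rng)  # serving TRP, hypothetical 32 antennas
h_trp2 = rayleigh(32, 0.5, rng)  # second TRP, hypothetically 3 dB weaker

snr_single = mrt_snr(h_trp1, tx_power=1.0, noise_power=0.01)
# Idealized CJT: both TRPs precode jointly as one array under the same total power.
snr_cjt = mrt_snr(np.concatenate([h_trp1, h_trp2]), tx_power=1.0, noise_power=0.01)
print(f"CJT gain over single TRP: {10 * np.log10(snr_cjt / snr_single):.2f} dB")
```

In practice, imperfect CSI, codebook quantization, and inter-TRP phase offsets erode this gain, which is why codebook and reference-signal design for CJT matter.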

RF Interference in Lens-Based Massive MIMO Systems -- An Application Note

May 05, 2022

Abstract: We analyze the uplink radio frequency (RF) interference from multiple single-antenna user equipments transmitting to a cellular base station (BS) within the same time-frequency resource. The BS is assumed to operate with a lens antenna array, which provides additional focusing gain for the incoming signals. Considering line-of-sight propagation conditions, we characterize the multiuser RF interference properties via an approximation of the mainlobe interference as well as the effective interferer probability. The results derived in this application note are foundational to a more general multiuser interference analysis across different propagation conditions, which we present in a follow-up paper.
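
The note's own derivations are not reproduced here. As a toy illustration only, the sketch below assumes an ideal lens-array response sinc(n - D̃ sin θ) (normalized sinc, D̃ being the lens aperture in wavelengths), a desired user at broadside, and interferers whose sin θ is uniform on [-1, 1]. Under these assumptions an interferer is "effective" when it falls inside the mainlobe of the desired user's element, which happens with probability 1/D̃. The function names and the angle distribution are hypothetical.

```python
import numpy as np

def in_mainlobe(sin_int, sin_des, d_tilde):
    """True where an interferer lies inside the mainlobe of the lens element serving the desired user.

    Toy model (assumption): ideal lens-array response sinc(n - d_tilde * sin(theta)) with the
    normalized sinc, so the mainlobe of element n covers |n - d_tilde * sin(theta)| < 1.
    """
    n_des = np.round(d_tilde * sin_des)  # element that focuses the desired user's energy
    return np.abs(n_des - d_tilde * sin_int) < 1.0

def effective_interferer_prob_mc(d_tilde, n_trials=200_000, seed=0):
    """Monte Carlo estimate with a broadside desired user and the interferer's
    sin(theta) uniform on [-1, 1] (both hypothetical)."""
    rng = np.random.default_rng(seed)
    sin_int = rng.uniform(-1.0, 1.0, n_trials)
    return np.mean(in_mainlobe(sin_int, 0.0, d_tilde))

def effective_interferer_prob(d_tilde):
    """Closed form under the same assumptions: P(|sin(theta)| < 1/d_tilde) = 1/d_tilde."""
    return min(1.0, 1.0 / d_tilde)

def prob_any_effective(d_tilde, n_users):
    """Probability that at least one of the other n_users - 1 users is an effective
    interferer, assuming independent user angles."""
    return 1.0 - (1.0 - effective_interferer_prob(d_tilde)) ** (n_users - 1)

print(effective_interferer_prob_mc(10.0), effective_interferer_prob(10.0))
```

The takeaway under this toy model is that a larger lens aperture narrows each element's mainlobe, so the chance that a co-scheduled user lands in it falls roughly as 1/D̃.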

A Review of Indoor Millimeter Wave Device-based Localization and Device-free Sensing Technologies

Dec 10, 2021

Abstract: The commercial availability of low-cost millimeter wave (mmWave) communication and radar devices is starting to improve the penetration of such technologies in consumer markets, paving the way for large-scale and dense deployments in fifth-generation (5G)-and-beyond as well as 6G networks. At the same time, pervasive mmWave access will enable device localization and device-free sensing with unprecedented accuracy, especially compared with sub-6 GHz commercial-grade devices. This paper surveys the state of the art in device-based localization and device-free sensing using mmWave communication and radar devices, with a focus on indoor deployments. We first give an overview of key concepts in mmWave signal propagation and system design. Then, we provide a detailed account of approaches and algorithms for localization and sensing enabled by mmWaves. We consider several dimensions in our analysis, including the main objectives, techniques, and performance of each work, whether each work reached some degree of implementation, and which hardware platforms were used for this purpose. We conclude by arguing that better algorithms for consumer-grade devices, data fusion methods for dense deployments, and an educated application of machine learning methods are promising, relevant, and timely research directions.
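
One commonly cited reason for the accuracy advantage over sub-6 GHz devices is the much wider bandwidth available at mmWave. A back-of-the-envelope sketch, assuming the standard radar (round-trip) range-resolution relation ΔR = c/(2B); the two bandwidths are illustrative choices (100 MHz for a sub-6 GHz channel, 4 GHz as in a 77 to 81 GHz radar sweep), not figures from the survey.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def range_resolution(bandwidth_hz):
    """Round-trip (radar) range resolution c / (2B) for a signal of bandwidth B in Hz."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)

for label, bw in [("sub-6 GHz, 100 MHz (illustrative)", 100e6),
                  ("mmWave radar, 4 GHz (illustrative)", 4e9)]:
    print(f"{label}: {range_resolution(bw) * 100:.1f} cm")
```

For one-way time-of-flight ranging between a device and an anchor the factor of 2 disappears, but the inverse dependence on bandwidth is the same.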

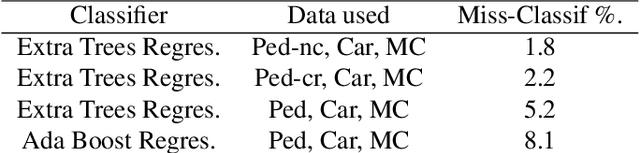

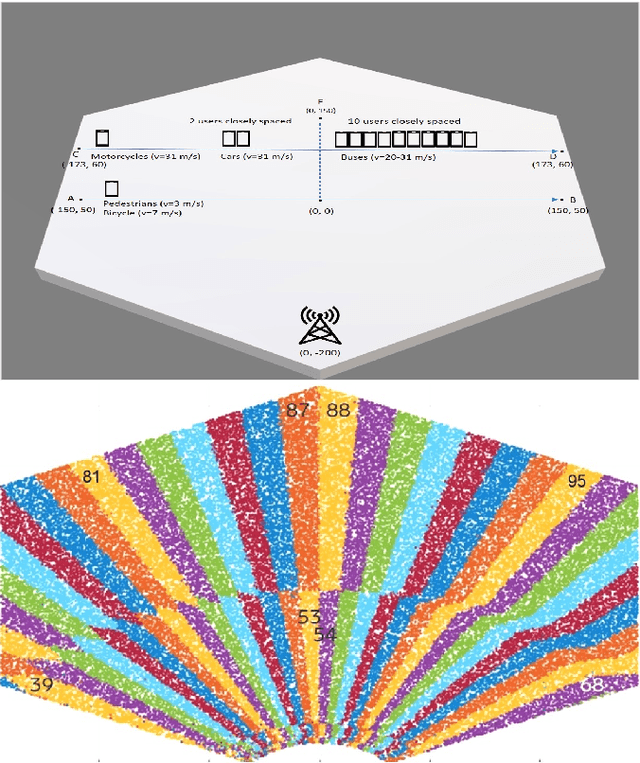

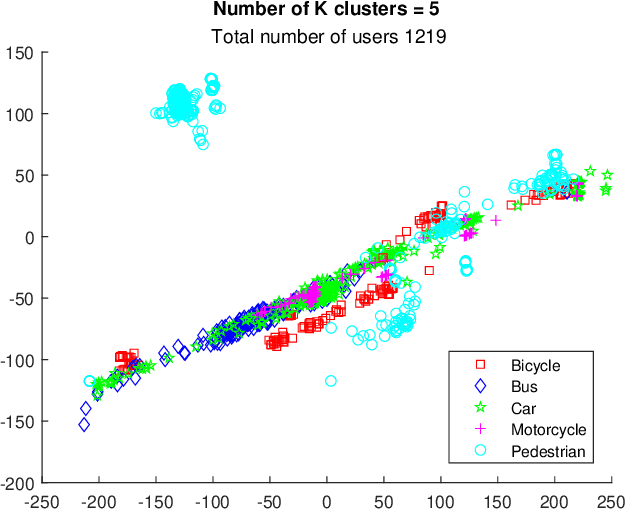

Learning-Based UE Classification in Millimeter-Wave Cellular Systems With Mobility

Sep 13, 2021

Abstract: Millimeter-wave cellular communication requires beamforming procedures that keep the transmitter and receiver beams aligned as the user equipment (UE) moves. For efficient beam tracking, it is advantageous to classify users according to their traffic and mobility patterns. Research to date has demonstrated efficient machine-learning-based UE classification. Although different machine learning approaches have shown success, most of them rely on physical-layer attributes of the received signal. This, however, imposes additional complexity and requires access to those lower-layer signals. In this paper, we show that traditional supervised and even unsupervised machine learning methods can successfully be applied to higher-layer channel measurement reports to perform UE classification, thereby reducing the complexity of the classification process.
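
As a hedged illustration of the unsupervised case, the sketch below clusters UEs into static and mobile groups using only statistics of their measurement reports. Everything about the data is assumed: the two features (spread of reported RSRP and the fraction of reports in which the best-beam index changes), the synthetic report generator, and the choice of plain k-means are hypothetical stand-ins, not the paper's feature set or classifier.

```python
import numpy as np

def report_features(rsrp_dbm, beam_idx):
    """Per-UE features from a window of reports (hypothetical choice):
    RSRP spread and fraction of consecutive reports with a changed best-beam index."""
    return np.array([np.std(rsrp_dbm), np.mean(np.diff(beam_idx) != 0)])

def synth_ue(mobile, n_reports, rng):
    """Toy report stream: static UEs see small RSRP jitter and a fixed beam;
    mobile UEs see a random-walk RSRP and frequent beam changes (assumption)."""
    if mobile:
        rsrp = -80.0 + np.cumsum(rng.normal(0.0, 3.0, n_reports))
        beam = np.cumsum(rng.random(n_reports) < 0.3)
    else:
        rsrp = -80.0 + rng.normal(0.0, 1.0, n_reports)
        beam = np.zeros(n_reports, dtype=int)
    return report_features(rsrp, beam)

def kmeans(X, k, n_iter=100, seed=0):
    """Plain Lloyd's k-means with farthest-first initialization."""
    rng = np.random.default_rng(seed)
    centroids = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        dist = np.min(np.linalg.norm(X[:, None, :] - np.array(centroids)[None], axis=2), axis=1)
        centroids.append(X[np.argmax(dist)])
    centroids = np.array(centroids)
    for _ in range(n_iter):
        labels = np.argmin(np.linalg.norm(X[:, None, :] - centroids[None], axis=2), axis=1)
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
                        for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return labels, centroids

rng = np.random.default_rng(7)
truth = np.array([0] * 50 + [1] * 50)  # 0 = static, 1 = mobile
X = np.array([synth_ue(bool(m), 50, rng) for m in truth])
X = (X - X.mean(axis=0)) / X.std(axis=0)  # z-score so both features weigh equally
labels, _ = kmeans(X, k=2)
# Cluster ids are arbitrary, so score against the better of the two label assignments.
accuracy = max(np.mean(labels == truth), np.mean(labels != truth))
print(f"unsupervised static/mobile agreement: {accuracy:.2f}")
```

The point of the design is that both features come from report-level quantities a higher layer already sees, so no access to raw physical-layer samples is needed.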
