Paolo Casari

OTFS-IDMA: An Unsourced Multiple Access Scheme for Doubly-Dispersive Channels

Jan 19, 2026

Abstract:We present an unsourced multiple access (UMAC) scheme tailored to high-mobility wireless channels. The proposed construction is based on orthogonal time frequency space (OTFS) modulation and sparse interleaver division multiple access (IDMA) in the delay-Doppler (DD) domain. The receiver runs a compressive-sensing joint activity-detection and channel estimation process followed by a single-user decoder which harnesses multipath diversity via the maximal-ratio combining (MRC) principle. Numerical results show the potential of DD-based uncoordinated schemes in the presence of double selectivity, while highlighting the design tradeoffs and remaining challenges introduced by the proposed design.
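The MRC principle named in this abstract can be illustrated with a minimal sketch. It assumes a single symbol observed over several resolved paths whose gains are perfectly known; the function name `mrc_combine` and all example values are hypothetical and are not taken from the paper.

```python
def mrc_combine(received, gains):
    """Maximal-ratio combine per-path observations of one symbol.

    Assumption: received[l] equals gains[l] * symbol + noise.
    """
    # Weight each branch by the conjugate of its channel gain.
    numerator = sum(g.conjugate() * y for g, y in zip(gains, received))
    # Normalize by the total channel energy to obtain a symbol estimate.
    energy = sum(abs(g) ** 2 for g in gains)
    return numerator / energy


# Hypothetical example: one QPSK symbol over three paths, noise-free.
symbol = (1 + 1j) / 2 ** 0.5
gains = [0.9 + 0.1j, -0.3 + 0.4j, 0.05 - 0.2j]
received = [g * symbol for g in gains]
estimate = mrc_combine(received, gains)
```

In the noise-free case the estimate coincides with the transmitted symbol; with noise, the conjugate weighting favors the stronger paths.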

A low-PAPR Pilot Design and Optimization for OTFS Modulation

Mar 19, 2025

Abstract:Orthogonal time frequency space (OTFS) modulation has been proposed recently as a new waveform in the context of doubly-selective multi-path channels. This article proposes a novel pilot design that improves OTFS spectral efficiency (SE) while reducing its peak-to-average power ratio (PAPR). Instead of adopting an embedded data-orthogonal pilot for channel estimation, our scheme relies on Chu sequences superimposed on data symbols. We optimize the construction by investigating the best energy split between pilot and data symbols. Two equalizers and an iterative channel estimation and equalization procedure are considered. We present extensive numerical results of relevant performance metrics, including the normalized mean squared error of the estimator, bit error rate, PAPR and SE. Our results show that, while the embedded pilot scheme estimates the channel more accurately, our approach yields a better tradeoff by achieving much higher spectral efficiency and lower PAPR.
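As a rough illustration of why Chu sequences suit low-PAPR pilots, the sketch below generates one and checks two well-known properties: constant modulus and zero cyclic autocorrelation at nonzero lags. The helper names, the length 31, and the root 1 are assumptions chosen for the example. The PAPR computed here is that of the raw sequence samples, not of the OTFS transmit waveform studied in the paper.

```python
import cmath
import math


def chu_sequence(length, root=1):
    """Return a Chu sequence; root is assumed coprime with length."""
    parity = length % 2
    return [
        cmath.exp(-1j * math.pi * root * n * (n + parity) / length)
        for n in range(length)
    ]


def papr(samples):
    """Peak-to-average power ratio (linear scale) of a sample list."""
    powers = [abs(x) ** 2 for x in samples]
    return max(powers) / (sum(powers) / len(powers))


def cyclic_autocorrelation(samples, lag):
    """Cyclic autocorrelation of the sequence at the given lag."""
    n_samples = len(samples)
    return sum(
        samples[(n + lag) % n_samples] * samples[n].conjugate()
        for n in range(n_samples)
    )


pilot = chu_sequence(31)
pilot_papr = papr(pilot)  # constant modulus, so the ratio is 1 (0 dB)
off_peak = max(abs(cyclic_autocorrelation(pilot, lag)) for lag in range(1, 31))
```

Both properties hold up to floating-point error: `pilot_papr` is 1 and `off_peak` is numerically zero.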

Self-Supervised Millimeter Wave Indoor Localization using Tiny Neural Networks

Jan 02, 2024

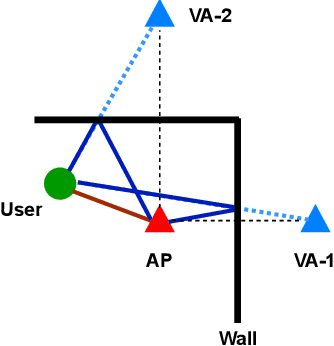

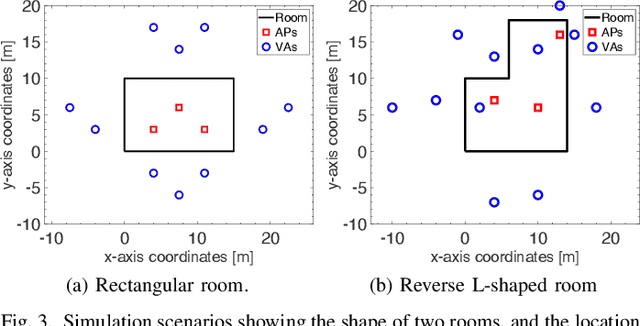

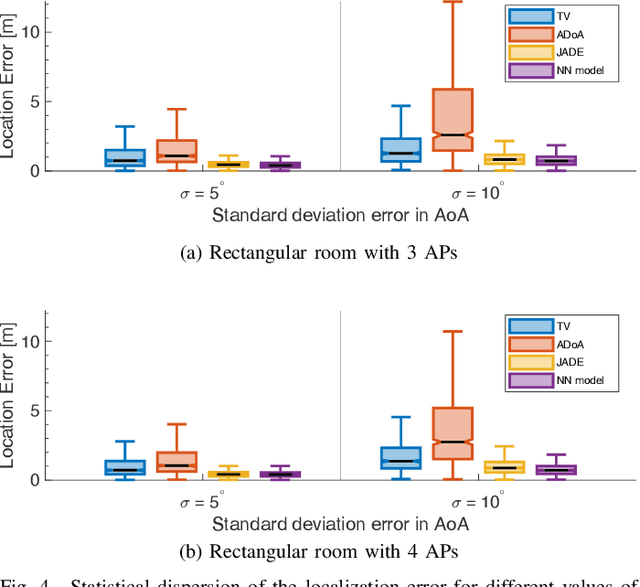

Abstract:The quasi-optical propagation of millimeter-wave signals enables high-accuracy localization algorithms that employ geometric approaches or machine learning models. However, most algorithms require information on the indoor environment, may entail the collection of large training datasets, or bear an infeasible computational burden for commercial off-the-shelf (COTS) devices. In this work, we propose to use tiny neural networks (NNs) to learn the relationship between angle difference-of-arrival (ADoA) measurements and locations of a receiver in an indoor environment. To relieve training data collection efforts, we resort to a self-supervised approach by bootstrapping the training of our neural network through location estimates obtained from a state-of-the-art localization algorithm. We evaluate our scheme via mmWave measurements from indoor 60-GHz double-directional channel sounding. We process the measurements to yield dominant multipath components, use the corresponding angles to compute ADoA values, and finally obtain location fixes. Results show that the tiny NN achieves sub-meter errors in 74% of the cases, thus performing as well as or even better than the state-of-the-art algorithm, with significantly lower computational complexity.
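The step of turning multipath angles into ADoA values can be sketched as follows. This is a minimal illustration, assuming angles in radians and taking the first path as the reference; the function names and the example angles are hypothetical. It also shows one convenient property of ADoA: the values do not change when the receiver is rotated by an unknown amount.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def adoa(angles_of_arrival):
    """Differences between each path's AoA and that of the first path."""
    reference = angles_of_arrival[0]
    return [wrap_angle(a - reference) for a in angles_of_arrival[1:]]


# Hypothetical AoAs (radians) of three dominant multipath components.
aoas = [0.2, 1.1, -2.5]
# The same paths as seen by a receiver rotated by an unknown 0.7 rad.
rotated = [wrap_angle(a - 0.7) for a in aoas]

adoa_original = adoa(aoas)
adoa_rotated = adoa(rotated)
```

Here `adoa_original` and `adoa_rotated` agree up to floating-point error, since the common rotation cancels in every difference.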

Indoor Millimeter Wave Localization using Multiple Self-Supervised Tiny Neural Networks

Nov 30, 2023

Abstract:We consider the localization of a mobile millimeter-wave client in a large indoor environment using multilayer perceptron neural networks (NNs). Instead of training and deploying a single deep model, we proceed by choosing among multiple tiny NNs trained in a self-supervised manner. The main challenge then becomes to determine and switch to the best NN among the available ones, as an incorrect NN will fail to localize the client. To maintain localization accuracy, we propose two switching schemes: one based on a Kalman filter, and one based on the statistical distribution of the training data. We analyze the proposed schemes via simulations, showing that our approach outperforms both geometric localization schemes and the use of a single NN.

ORACLE: Occlusion-Resilient And self-Calibrating mmWave Radar Network for People Tracking

Aug 30, 2022

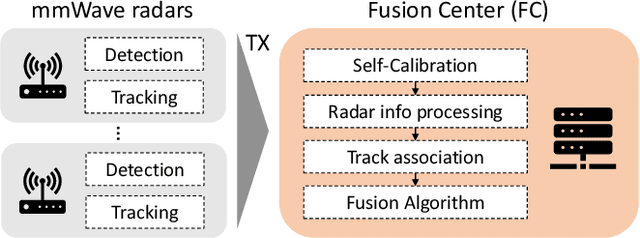

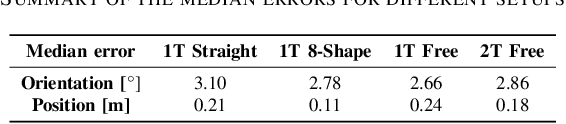

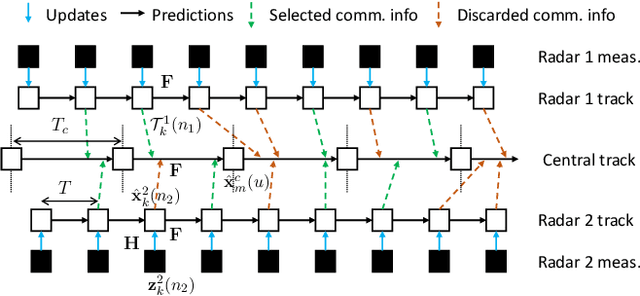

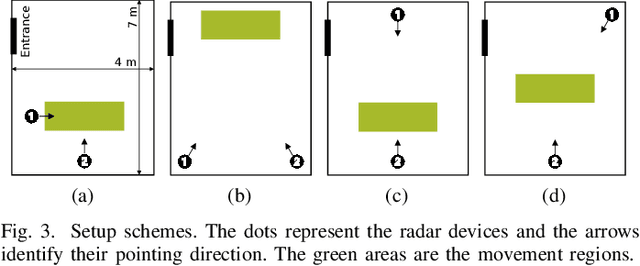

Abstract:Millimeter wave (mmWave) radars are emerging as valid alternatives to cameras for the pervasive contactless monitoring of industrial environments, enabling very tight human-machine interactions. However, commercial mmWave radars feature a limited range (up to 6-8 m) and are subject to occlusion, which may constitute a significant drawback in large industrial settings containing machinery and obstacles. Thus, covering large indoor spaces requires multiple radars with known relative position and orientation, as well as algorithms to combine their outputs. In this work, we present ORACLE, an autonomous system that (i) integrates automatic relative position and orientation estimation from multiple radar devices by exploiting the trajectories of people moving freely in the radars' common fields of view and (ii) fuses the tracking information from multiple radars to obtain a unified tracking among all sensors. Our implementation and experimental evaluation of ORACLE yield median errors of 0.18 m and 2.86° for radar location and orientation estimates, respectively. The fused tracking improves upon single sensor tracking by 6% in terms of mean tracking accuracy, while reaching a mean tracking error as low as 0.14 m. Finally, ORACLE is robust to fusion relative time synchronization mismatches between -20% and +50%.
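To give a feel for how a shared trajectory can reveal the relative pose of two radars, the sketch below uses a textbook closed-form 2D least-squares rigid alignment. This is a swapped-in standard technique, not ORACLE's algorithm. It assumes time-aligned, noise-free (x, y) samples of the same person in both radar frames; the function name and all values are hypothetical.

```python
import math


def estimate_rigid_transform(points_a, points_b):
    """Least-squares rotation and translation mapping points_a onto points_b.

    Both inputs are lists of (x, y) tuples with matching indices.
    """
    n = len(points_a)
    cax = sum(p[0] for p in points_a) / n
    cay = sum(p[1] for p in points_a) / n
    cbx = sum(p[0] for p in points_b) / n
    cby = sum(p[1] for p in points_b) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(points_a, points_b):
        # Work with centered coordinates so translation drops out.
        ax, ay, bx, by = ax - cax, ay - cay, bx - cbx, by - cby
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated centroid of A onto the centroid of B.
    tx = cbx - (c * cax - s * cay)
    ty = cby - (s * cax + c * cay)
    return theta, (tx, ty)


# Hypothetical trajectory in radar A's frame, and the same one in radar B's
# frame after a 0.5 rad rotation and a (2, -1) m translation.
track_a = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.5), (3.0, 1.0), (4.0, 2.5)]
c, s = math.cos(0.5), math.sin(0.5)
track_b = [(c * x - s * y + 2.0, s * x + c * y - 1.0) for x, y in track_a]

theta, (tx, ty) = estimate_rigid_transform(track_a, track_b)
```

With noise-free input the estimate recovers the rotation and translation used to generate `track_b`.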

Machine Learning-Based Distributed Authentication of UWAN Nodes with Limited Shared Information

Aug 19, 2022

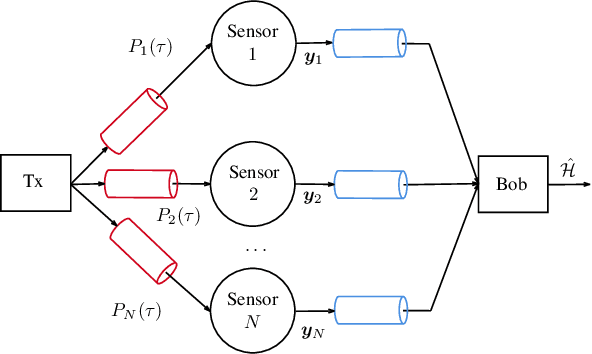

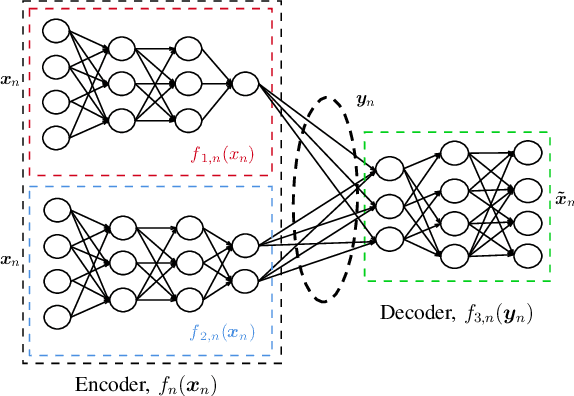

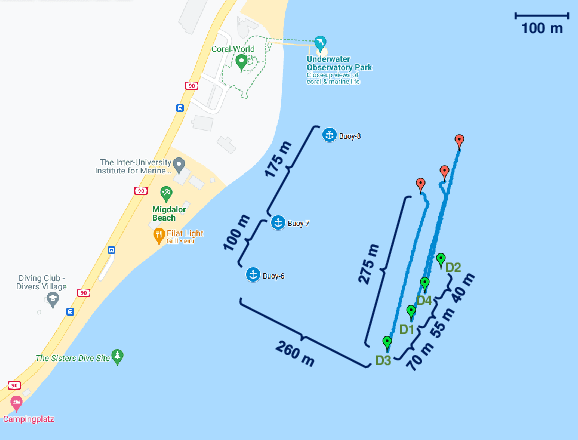

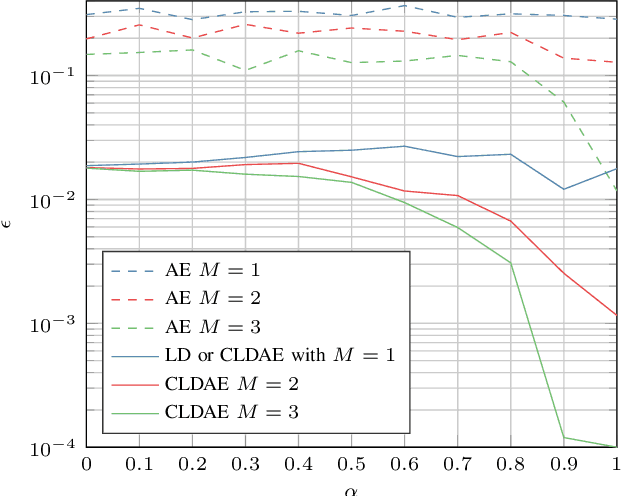

Abstract:We propose a technique to authenticate received packets in underwater acoustic networks based on the physical layer features of the underwater acoustic channel (UWAC). Several sensors a) locally estimate features (e.g., the number of taps or the delay spread) of the UWAC over which the packet is received, b) obtain a compressed feature representation through a neural network (NN), and c) transmit their representations to a central sink node that, using a NN, decides whether the packet has been transmitted by the legitimate node or by an impersonating attacker. Although the purpose of the system is to make a binary decision as to whether a packet is authentic or not, we show the importance of having a rich set of compressed features, while still taking into account transmission rate limits among the nodes. We consider both global training, where all NNs are trained together, and local training, where each NN is trained individually. For the latter scenario, several alternatives for the NN structure and loss function were used for training.

A Review of Indoor Millimeter Wave Device-based Localization and Device-free Sensing Technologies

Dec 10, 2021

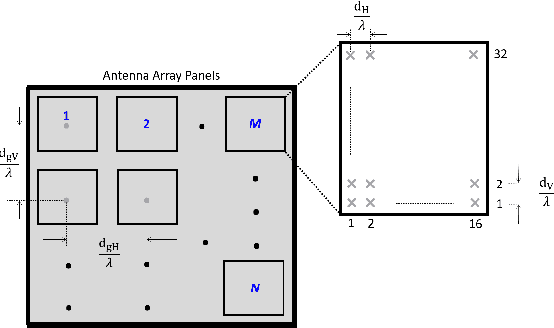

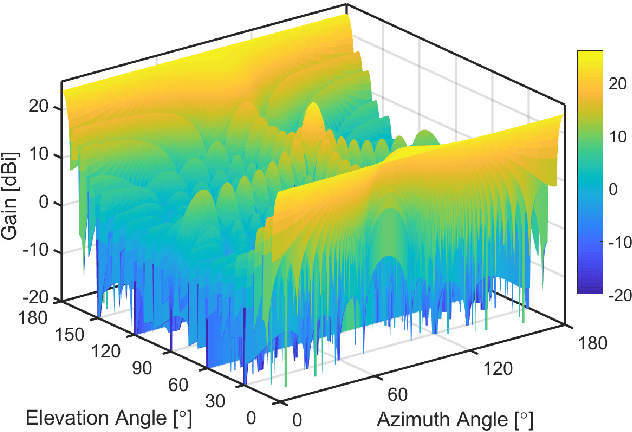

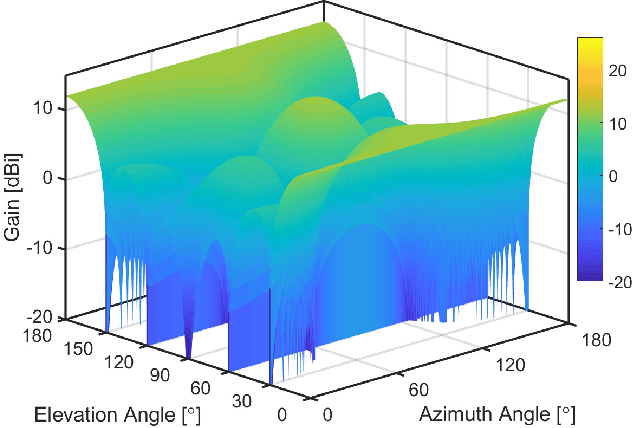

Abstract:The commercial availability of low-cost millimeter wave (mmWave) communication and radar devices is starting to improve the penetration of such technologies in consumer markets, paving the way for large-scale and dense deployments in fifth-generation (5G)-and-beyond as well as 6G networks. At the same time, pervasive mmWave access will enable device localization and device-free sensing with unprecedented accuracy, especially with respect to sub-6 GHz commercial-grade devices. This paper surveys the state of the art in device-based localization and device-free sensing using mmWave communication and radar devices, with a focus on indoor deployments. We first overview key concepts about mmWave signal propagation and system design. Then, we provide a detailed account of approaches and algorithms for localization and sensing enabled by mmWaves. We consider several dimensions in our analysis, including the main objectives, techniques, and performance of each work, whether each research reached some degree of implementation, and which hardware platforms were used for this purpose. We conclude by discussing that better algorithms for consumer-grade devices, data fusion methods for dense deployments, as well as an educated application of machine learning methods are promising, relevant and timely research directions.

Millimeter Wave Localization with Imperfect Training Data using Shallow Neural Networks

Dec 09, 2021

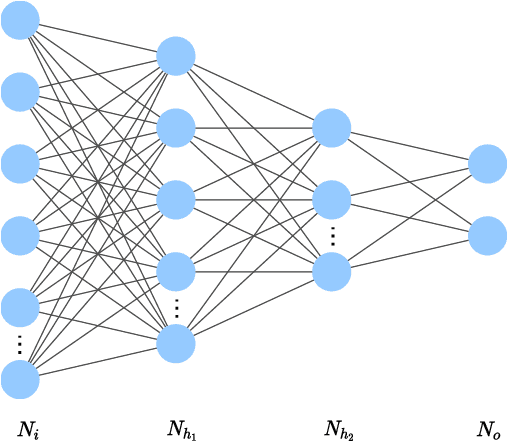

Abstract:Millimeter wave (mmWave) localization algorithms exploit the quasi-optical propagation of mmWave signals, which yields sparse angular spectra at the receiver. Geometric approaches to angle-based localization typically require knowledge of the map of the environment and the location of the access points. Thus, several works have resorted to automated learning in order to infer a device's location from the properties of the received mmWave signals. However, collecting training data for such models is a significant burden. In this work, we propose a shallow neural network model to localize mmWave devices indoors. This model requires significantly fewer weights than those proposed in the literature. Therefore, it is amenable to implementation in resource-constrained hardware, and needs fewer training samples to converge. We also propose to relieve training data collection efforts by retrieving (inherently imperfect) location estimates from geometry-based mmWave localization algorithms. Even in this case, our results show that the proposed neural networks perform as well as or better than state-of-the-art algorithms.

SQLR: Short Term Memory Q-Learning for Elastic Provisioning

Sep 12, 2019

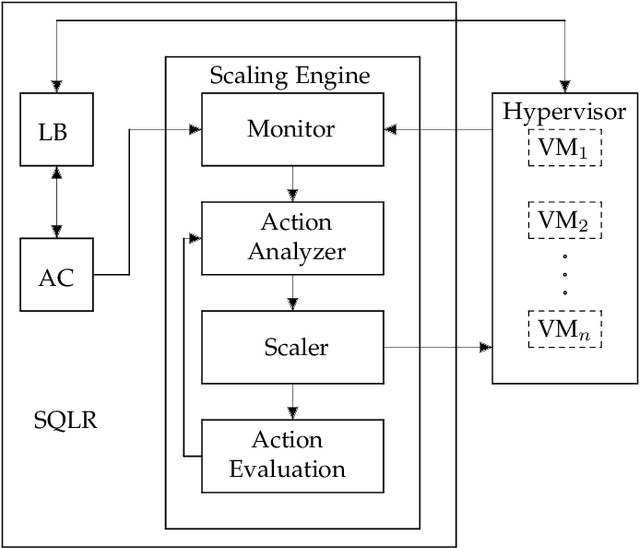

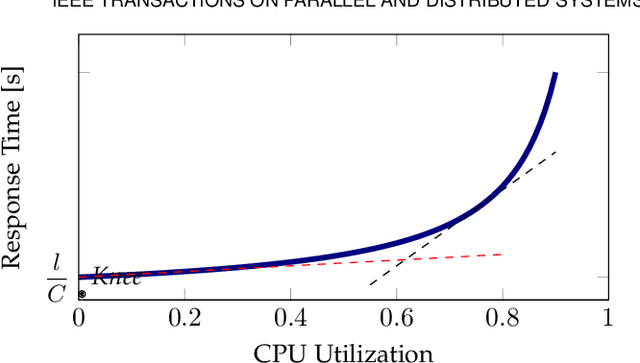

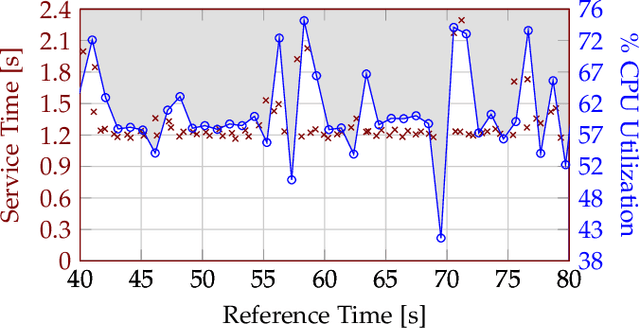

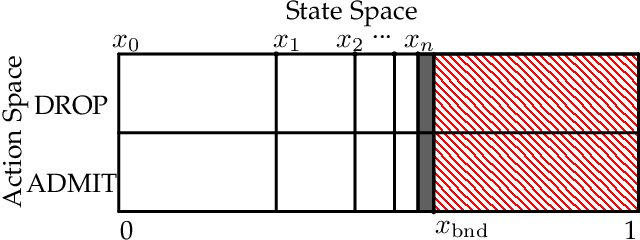

Abstract:As more and more application providers transition to the cloud and deliver their services on a Software as a Service (SaaS) basis, cloud providers need to make their provisioning systems agile enough to deliver on Service Level Agreements. At the same time they should guard against over-provisioning which limits their capacity to accommodate more tenants. To this end we propose SQLR, a dynamic provisioning system employing a customized model-free reinforcement learning algorithm that is capable of reusing contextual knowledge learned from one workload to optimize resource provisioning for different workload patterns. SQLR achieves results comparable to those where resources are unconstrained, with minimal overhead. Our experiments show that we can reduce the amount of resources that need to be provisioned by almost 25% with less than 1% overall service unavailability due to blocking, and still deliver similar response times as an over-provisioned system.
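For readers unfamiliar with model-free reinforcement learning, the sketch below shows the textbook tabular Q-learning update. SQLR is described as a customized variant, so this is only the generic baseline rule; the state names, action names, reward, and hyperparameters are hypothetical.

```python
from collections import defaultdict


def q_update(q_table, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.9):
    """Apply one standard Q-learning update and return the new Q-value."""
    # Greedy estimate of the value of the next state.
    best_next = max(q_table[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    # Move the current estimate a fraction alpha toward the target.
    q_table[(state, action)] += alpha * (target - q_table[(state, action)])
    return q_table[(state, action)]


# Hypothetical provisioning actions and a single observed transition.
actions = ["scale_down", "hold", "scale_up"]
q_table = defaultdict(float)  # unseen state-action pairs start at 0
new_value = q_update(q_table, "high_load", "scale_up", 1.0,
                     "normal_load", actions)
```

Starting from an all-zero table, a single update with reward 1.0 moves the entry by `alpha` times the reward.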

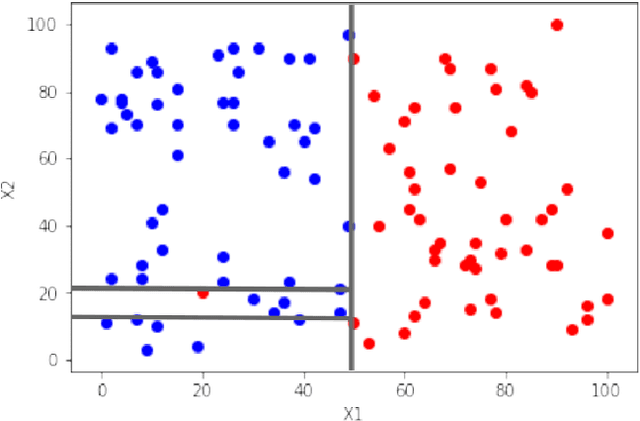

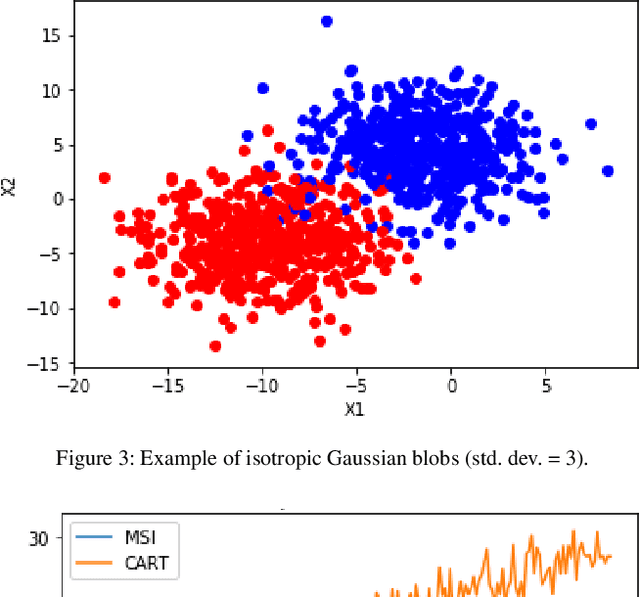

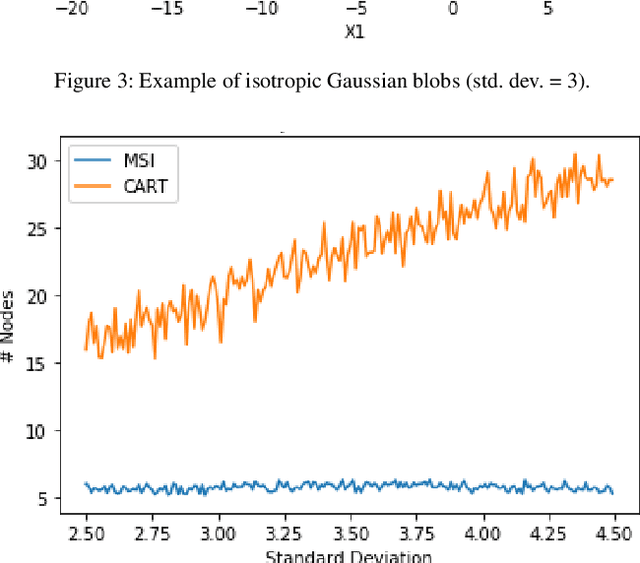

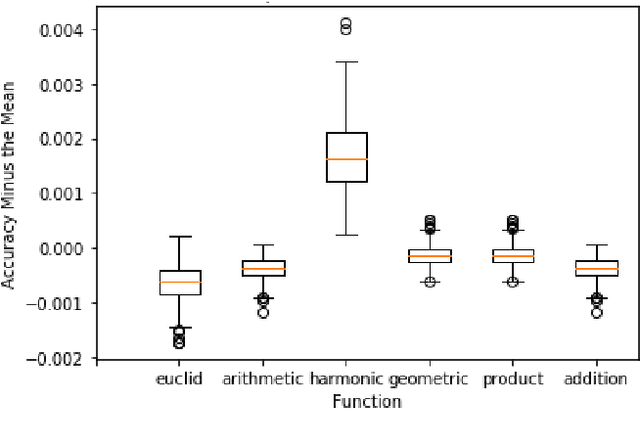

A Novel Hyperparameter-free Approach to Decision Tree Construction that Avoids Overfitting by Design

Jun 04, 2019

Abstract:Decision trees are an extremely popular machine learning technique. Unfortunately, overfitting in decision trees remains an open issue that sometimes prevents achieving good performance. In this work, we present a novel approach for the construction of decision trees that avoids overfitting by design, without losing accuracy. A distinctive feature of our algorithm is that it requires neither the optimization of any hyperparameters, nor the use of regularization techniques, thus significantly reducing the decision tree training time. Moreover, our algorithm produces much smaller and shallower trees than traditional algorithms, facilitating the interpretability of the resulting models.
