Hamidreza Mirkhani

CAPS: Context-Aware Priority Sampling for Enhanced Imitation Learning in Autonomous Driving

Mar 03, 2025

Abstract: In this paper, we introduce CAPS (Context-Aware Priority Sampling), a novel method designed to enhance data efficiency in learning-based autonomous driving systems. CAPS addresses the challenge of imbalanced training datasets in imitation learning by leveraging Vector Quantized Variational Autoencoders (VQ-VAEs). The use of VQ-VAE provides a structured and interpretable data representation, which helps reveal meaningful patterns in the data. These patterns are used to group the data into clusters, with each sample being assigned a cluster ID. The cluster IDs are then used to re-balance the dataset, ensuring that rare yet valuable samples receive higher priority during training. By ensuring a more diverse and informative training set, CAPS improves the generalization of the trained planner across a wide range of driving scenarios. We evaluate our method through closed-loop simulations in the CARLA environment. The results on Bench2Drive scenarios demonstrate that our framework outperforms state-of-the-art methods, leading to notable improvements in model performance.
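As a rough illustration of the re-balancing step described in this abstract, the sketch below gives each sample a sampling weight inversely proportional to the size of its cluster, so that every cluster carries the same total probability mass. This is not the CAPS implementation: the inverse-frequency rule, the function names `cluster_balanced_weights` and `sample_indices`, and the toy cluster IDs are all assumptions made for illustration, and the VQ-VAE clustering that produces the IDs is taken as given.

```python
import random
from collections import Counter


def cluster_balanced_weights(cluster_ids):
    """Return one normalized sampling weight per sample.

    Assumption (not from the paper): a sample's weight is 1 / (size of its
    cluster), so rare clusters are drawn as often as common ones overall.
    """
    counts = Counter(cluster_ids)
    raw = [1.0 / counts[c] for c in cluster_ids]
    total = sum(raw)
    return [w / total for w in raw]


def sample_indices(cluster_ids, k, seed=0):
    """Draw k sample indices (with replacement) using the balanced weights."""
    rng = random.Random(seed)
    weights = cluster_balanced_weights(cluster_ids)
    return rng.choices(range(len(cluster_ids)), weights=weights, k=k)


# Hypothetical toy data: cluster 0 is common, clusters 1 and 2 are rare.
toy_cluster_ids = [0, 0, 0, 0, 0, 0, 1, 1, 2]
toy_weights = cluster_balanced_weights(toy_cluster_ids)
toy_batch = sample_indices(toy_cluster_ids, k=4)
```

In a training loop, weights of this kind could be passed to a weighted sampler so that each batch over-represents rare clusters relative to their raw frequency.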

Learning Soft Driving Constraints from Vectorized Scene Embeddings while Imitating Expert Trajectories

Dec 07, 2024

Abstract: The primary goal of motion planning is to generate safe and efficient trajectories for vehicles. Traditionally, motion planning models are trained using imitation learning to mimic the behavior of human experts. However, these models often lack interpretability and fail to provide clear justifications for their decisions. We propose a method that integrates constraint learning into imitation learning by extracting driving constraints from expert trajectories. Our approach utilizes vectorized scene embeddings that capture critical spatial and temporal features, enabling the model to identify and generalize constraints across various driving scenarios. We formulate the constraint learning problem using a maximum entropy model, which scores the motion planner's trajectories based on their similarity to the expert trajectory. By separating the scoring process into distinct reward and constraint streams, we improve both the interpretability of the planner's behavior and its attention to relevant scene components. Unlike existing constraint learning methods that rely on simulators and are typically embedded in reinforcement learning (RL) or inverse reinforcement learning (IRL) frameworks, our method operates without simulators, making it applicable to a wider range of datasets and real-world scenarios. Experimental results on the inD and TrafficJams datasets demonstrate that incorporating driving constraints enhances model interpretability and improves closed-loop performance.
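One common way to write a maximum entropy scoring model with separate reward and constraint streams is sketched below. The abstract does not give the formula, so everything here is an assumption for illustration: the symbols $r_\theta$ (reward stream), $c_\phi$ (constraint stream), the scene $s$, the $K$ candidate trajectories $\tau_1, \dots, \tau_K$ proposed by the planner, and the choice of $\tau^{*}$ as the candidate most similar to the expert trajectory.

```latex
P_{\theta,\phi}(\tau_i \mid s)
  = \frac{\exp\big(r_\theta(\tau_i, s) - c_\phi(\tau_i, s)\big)}
         {\sum_{j=1}^{K} \exp\big(r_\theta(\tau_j, s) - c_\phi(\tau_j, s)\big)},
\qquad
\mathcal{L}(\theta, \phi) = -\log P_{\theta,\phi}(\tau^{*} \mid s)
```

Under this reading, minimizing $\mathcal{L}$ raises the score of the expert-like candidate relative to the others, and the constraint term $c_\phi$ can be inspected on its own to see which candidates are penalized.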

Vectorized Representation Dreamer (VRD): Dreaming-Assisted Multi-Agent Motion-Forecasting

Jun 20, 2024

Abstract: For an autonomous vehicle to plan a path in its environment, it must be able to accurately forecast the trajectory of all dynamic objects in its proximity. While many traditional methods encode observations in the scene to solve this problem, there are few approaches that consider the effect of the ego vehicle's behavior on the future state of the world. In this paper, we introduce VRD, a vectorized world model-inspired approach to the multi-agent motion forecasting problem. Our method combines a traditional open-loop training regime with a novel dreamed closed-loop training pipeline that leverages a kinematic reconstruction task to imagine the trajectory of all agents, conditioned on the action of the ego vehicle. Quantitative and qualitative experiments are conducted on the Argoverse 2 multi-world forecasting evaluation dataset and the intersection drone (inD) dataset to demonstrate the performance of our proposed model. Our model achieves state-of-the-art performance on the single prediction miss rate metric on the Argoverse 2 dataset and performs on par with the leading models for the single prediction displacement metrics.

Learning from Mistakes: a Weakly-supervised Method for Mitigating the Distribution Shift in Autonomous Vehicle Planning

Jun 03, 2024

Abstract: The planning problem constitutes a fundamental aspect of the autonomous driving framework. Recent strides in representation learning have empowered vehicles to comprehend their surrounding environments, thereby facilitating the integration of learning-based planning strategies. Among these approaches, Imitation Learning stands out due to its notable training efficiency. However, traditional Imitation Learning methodologies encounter challenges associated with the covariate shift phenomenon. We propose Learn from Mistakes (LfM) as a remedy to address this issue. The essence of LfM lies in deploying a pre-trained planner across diverse scenarios. Instances where the planner deviates from its immediate objectives, such as maintaining a safe distance from obstacles or adhering to traffic rules, are flagged as mistakes. The environments corresponding to these mistakes are categorized as out-of-distribution states and compiled into a new dataset termed the closed-loop mistakes dataset. Notably, the absence of expert annotations for the closed-loop data precludes the applicability of standard imitation learning approaches. To facilitate learning from the closed-loop mistakes, we introduce Validity Learning, a weakly supervised method, which aims to discern valid trajectories within the current environmental context. Experimental evaluations conducted on the inD and nuPlan datasets reveal substantial enhancements in closed-loop metrics such as Progress and Collision Rate, underscoring the effectiveness of the proposed methodology.

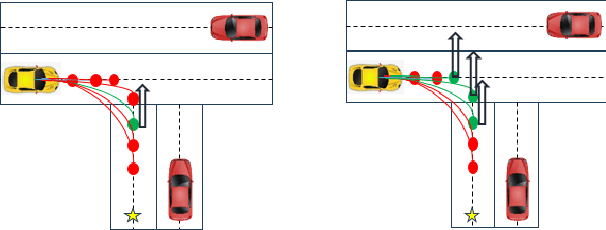

Augmenting Safety-Critical Driving Scenarios while Preserving Similarity to Expert Trajectories

Apr 20, 2024

Abstract: Trajectory augmentation serves as a means to mitigate distributional shift in imitation learning. However, imitating trajectories that inadequately represent the original expert data can result in undesirable behaviors, particularly in safety-critical scenarios. We propose a trajectory augmentation method designed to maintain similarity with expert trajectory data. To accomplish this, we first cluster trajectories to identify minority yet safety-critical groups. Then, we combine the trajectories within the same cluster through geometrical transformation to create new trajectories. These trajectories are then added to the training dataset, provided that they meet our specified safety-related criteria. Our experiments show that training an imitation learning model using these augmented trajectories can significantly improve closed-loop performance.
