Hakmin Lee

Defending Against Person Hiding Adversarial Patch Attack with a Universal White Frame

Apr 27, 2022

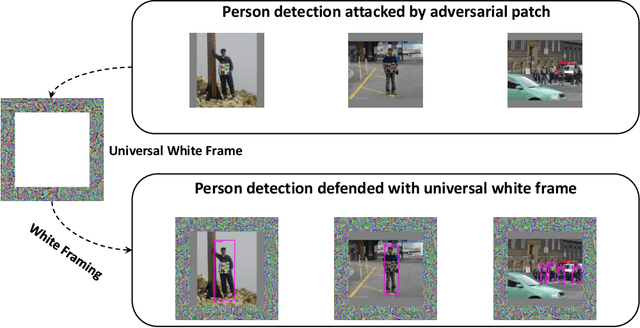

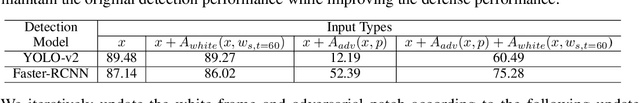

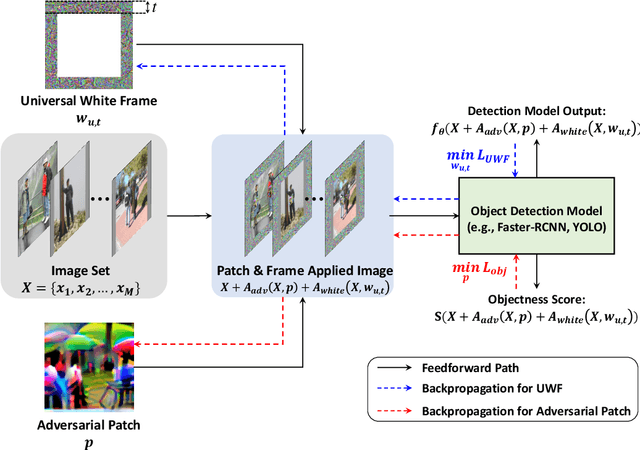

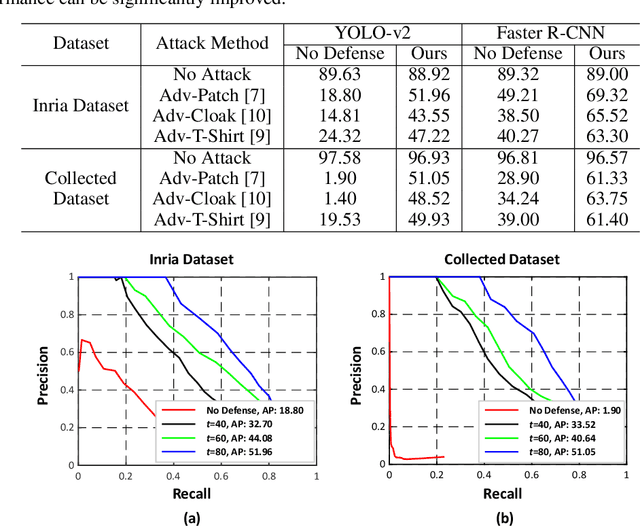

Abstract: Object detection has attracted great attention in computer vision and has become an indispensable component of many vision systems. In the era of deep learning, many high-performance object detection networks have been proposed. Despite their high performance, these networks are vulnerable to adversarial patch attacks: changing the pixels in a restricted region can easily fool a detection network in the physical world. In particular, person-hiding attacks are emerging as a serious problem in safety-critical applications such as autonomous driving and surveillance systems. Although defending against adversarial patch attacks is necessary, very few efforts have been dedicated to defending against person-hiding attacks. To tackle this problem, we propose a novel defense strategy that mitigates a person-hiding attack by optimizing a defense pattern, whereas previous methods optimize the model. In the proposed method, a frame-shaped pattern called a 'universal white frame' (UWF) is optimized and placed on the outside of the image. To defend against adversarial patch attacks, the UWF should have three properties: (i) it suppresses the effect of the adversarial patch, (ii) it maintains the original prediction, and (iii) it is applicable regardless of the image. To satisfy these properties, we propose a novel pattern optimization algorithm that can defend against the adversarial patch. Through comprehensive experiments, we demonstrate that the proposed method effectively defends against the adversarial patch attack.
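The placement step described in this abstract, a frame-shaped pattern put on the outside of the image, can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `apply_frame` and `white_frame`, the nested-list image representation, and the constant white placeholder standing in for an optimized pattern are all assumptions made for illustration. The optimization of the pattern itself is not shown.

```python
def white_frame(height, width, border, value=1.0):
    """Hypothetical placeholder pattern: a constant canvas of size
    (height + 2*border) x (width + 2*border). A learned frame would
    replace these constant values."""
    return [[value] * (width + 2 * border) for _ in range(height + 2 * border)]


def apply_frame(image, frame, border):
    """Surround `image` (an H x W list of lists) with the border of `frame`.

    Only the outer `border` pixels of `frame` remain visible; the interior
    is overwritten by the unchanged image pixels.
    """
    h, w = len(image), len(image[0])
    out_h, out_w = h + 2 * border, w + 2 * border
    if len(frame) != out_h or any(len(row) != out_w for row in frame):
        raise ValueError("frame must be (H + 2*border) x (W + 2*border)")
    out = [row[:] for row in frame]  # copy so the frame pattern is not mutated
    for y in range(h):
        for x in range(w):
            out[y + border][x + border] = image[y][x]
    return out


image = [[0.2, 0.4], [0.6, 0.8]]
framed = apply_frame(image, white_frame(2, 2, 1), 1)
```

Because the frame sits outside the image, the original pixels are left untouched, and the same pattern can be reused for any image of matching size.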

Efficient Ensemble Model Generation for Uncertainty Estimation with Bayesian Approximation in Segmentation

May 22, 2020

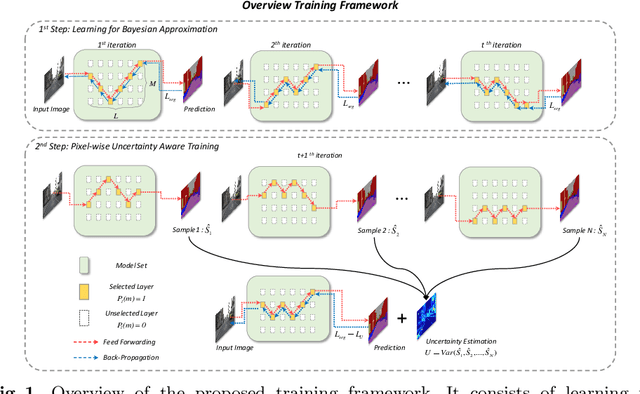

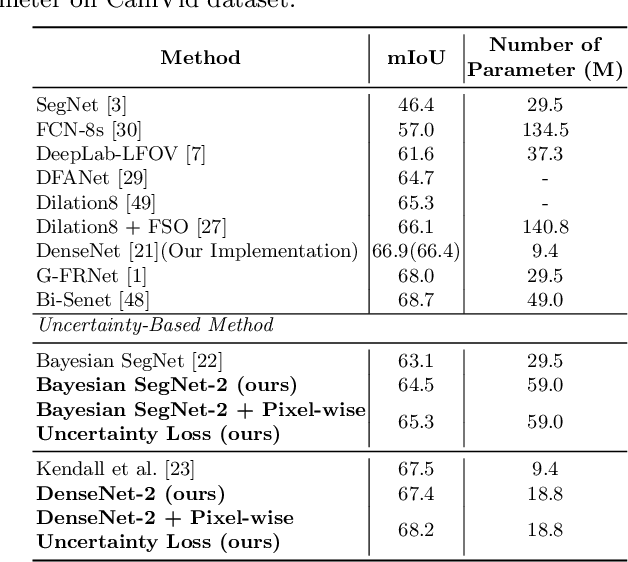

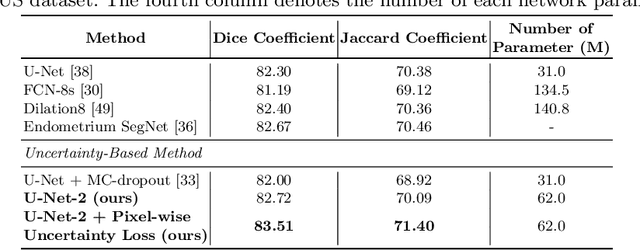

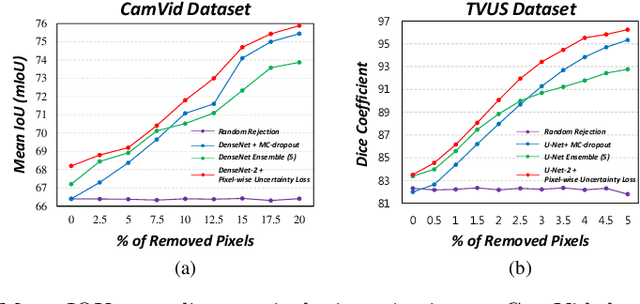

Abstract: Recent studies have shown that ensemble approaches can not only improve accuracy but also estimate model uncertainty in deep learning. However, the number of parameters grows with the number of ensemble members needed for better prediction and uncertainty estimation. To address this issue, this paper devises a generic and efficient framework for constructing ensemble segmentation models. In the proposed method, ensemble models are generated efficiently using a stochastic layer selection method. The ensemble models are trained to estimate uncertainty through Bayesian approximation. Moreover, to overcome the limitation posed by uncertain instances, we devise a new pixel-wise uncertainty loss, which improves predictive performance. To evaluate our method, comprehensive and comparative experiments have been conducted on two datasets. Experimental results show that the proposed method provides useful uncertainty information through Bayesian approximation with efficient ensemble model generation and improves predictive performance.
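As a rough illustration of how an ensemble can yield a per-pixel uncertainty estimate, the sketch below averages the class probabilities predicted by several ensemble members for one pixel and takes the entropy of that average. Predictive entropy is one common choice and is an assumption here; the abstract does not state which uncertainty measure the paper uses, and the name `predictive_entropy` is hypothetical.

```python
import math


def predictive_entropy(member_probs):
    """Return (mean class probabilities, entropy of the mean) for one pixel.

    `member_probs` holds one probability vector per ensemble member.
    Higher entropy means the averaged prediction is less certain.
    """
    n = len(member_probs)
    k = len(member_probs[0])
    mean = [sum(p[c] for p in member_probs) / n for c in range(k)]
    # Terms with zero probability contribute nothing to the entropy.
    entropy = -sum(p * math.log(p) for p in mean if p > 0.0)
    return mean, entropy


# Members that agree give zero entropy; members that disagree give more.
_, agree = predictive_entropy([[1.0, 0.0], [1.0, 0.0]])
mean, disagree = predictive_entropy([[1.0, 0.0], [0.0, 1.0]])
```

Applying the function to every pixel of a segmentation output would give an uncertainty map alongside the predicted mask.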

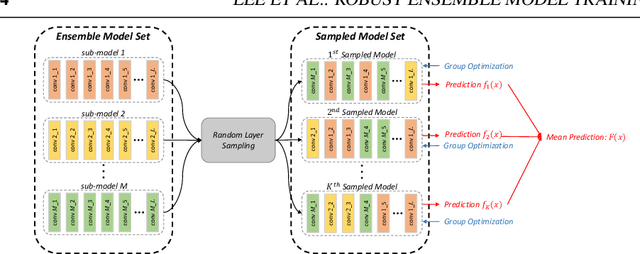

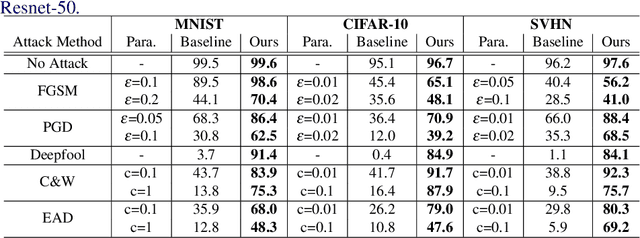

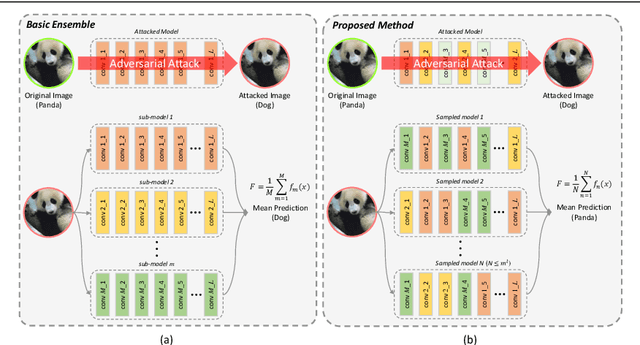

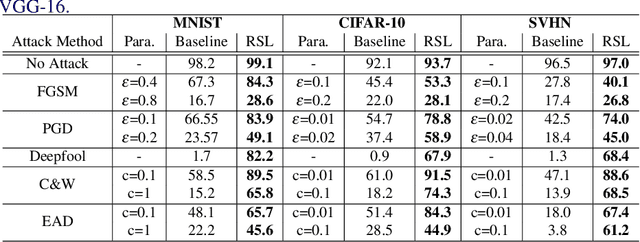

Robust Ensemble Model Training via Random Layer Sampling Against Adversarial Attack

May 21, 2020

Abstract: Deep neural networks have achieved substantial success in several computer vision areas, but they are vulnerable to adversarial examples whose perturbations are imperceptible to humans. This is an important issue for security and medical applications. In this paper, we propose an ensemble model training framework with random layer sampling to improve the robustness of deep neural networks. In the proposed training framework, we generate various sampled models through random layer sampling and update the weights of each sampled model. After the ensemble models are trained, the random layer sampling method can efficiently hide the gradient and thwart gradient-based attacks. To evaluate our proposed method, comprehensive and comparative experiments have been conducted on three datasets. Experimental results show that the proposed method improves adversarial robustness.
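The idea of random layer sampling can be sketched under one assumption that the abstract does not confirm: each position in the network holds several candidate layers, and a sub-model is formed by picking one candidate per position. The toy layers, the names `sample_model` and `forward`, and the uniform sampling are illustrative only; training and weight updates are not shown.

```python
import random


def sample_model(candidates, rng):
    """Pick one candidate layer per position to form a sampled sub-model."""
    return [rng.choice(options) for options in candidates]


def forward(layers, x):
    """Apply the sampled layers in order."""
    for layer in layers:
        x = layer(x)
    return x


# Two positions with two toy candidate "layers" each (assumed for illustration).
candidates = [
    [lambda x: x + 1.0, lambda x: x + 2.0],
    [lambda x: x * 2.0, lambda x: x * 3.0],
]

rng = random.Random(0)
# Each forward pass draws a fresh sub-model, so the computation path varies.
outputs = [forward(sample_model(candidates, rng), 1.0) for _ in range(8)]
```

Because the path changes from one pass to the next, a gradient computed through one sampled model need not match the model that answers the next query, which is the intuition behind hiding the gradient from a gradient-based attacker.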

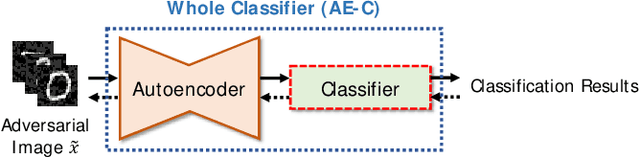

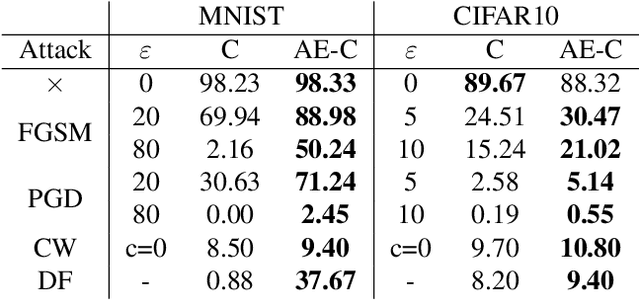

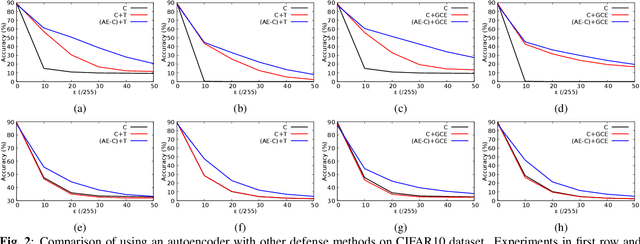

Revisiting Role of Autoencoders in Adversarial Settings

May 21, 2020

Abstract: To combat adversarial attacks, autoencoder structures are widely used to perform denoising, which is regarded as gradient masking. In this paper, we revisit the role of autoencoders in adversarial settings. Through comprehensive experimental results and analysis, this paper presents the inherent adversarial robustness of autoencoders. We also find that autoencoders may use robust features, which give rise to this inherent adversarial robustness. We believe that our discovery of the adversarial robustness of autoencoders can provide clues for future research and applications in adversarial defense.

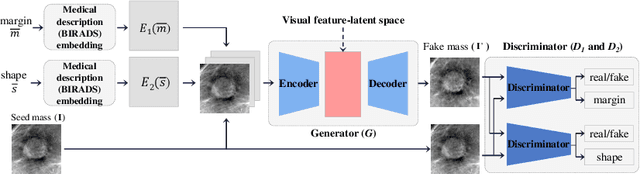

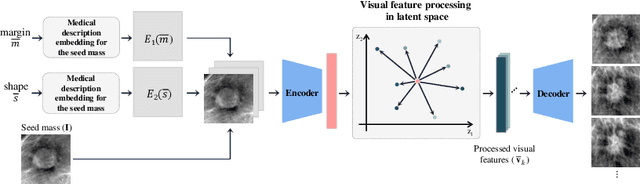

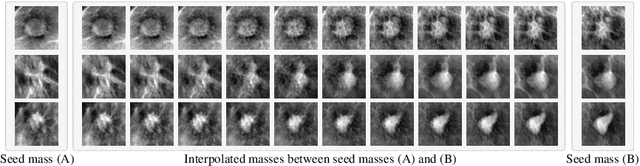

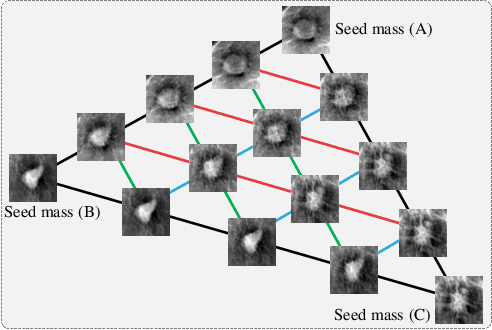

Feature2Mass: Visual Feature Processing in Latent Space for Realistic Labeled Mass Generation

Sep 17, 2018

Abstract: This paper presents a method for generating realistic labeled masses. Recently, there have been many attempts to apply deep learning to various bio-image computing fields, including computer-aided detection and diagnosis. Training a deep network model to perform well in these fields requires a large amount of labeled data. However, in many bioimaging fields, large labeled datasets are scarcely available. Although some research has been dedicated to solving this problem through generative models, several problems remain: 1) the generated bio-images do not look realistic; 2) the variation of the generated bio-images is limited; and 3) an additional label annotation task is needed. In this study, we propose a realistic labeled bio-image generation method through visual feature processing in latent space. Experimental results show that mass images generated by the proposed method are realistic and express a wide range of the targeted mass characteristics.

ICADx: Interpretable computer aided diagnosis of breast masses

May 23, 2018

Abstract: In this study, a novel computer-aided diagnosis (CADx) framework is devised to investigate interpretability in classifying breast masses. Recently, deep learning has been successfully applied to medical image analysis, including CADx. Existing deep learning-based CADx approaches, however, are limited in explaining the diagnostic decision. In real clinical practice, clinical decisions are made with a reasonable explanation, so current deep learning approaches in CADx are limited in real-world deployment. In this paper, we investigate interpretability in CADx with the proposed interpretable CADx (ICADx) framework. The proposed framework is built on a generative adversarial network, which consists of an interpretable diagnosis network and a synthetic lesion generative network, to learn the relationship between malignancy and a standardized description (BI-RADS). The lesion generative network and the interpretable diagnosis network compete in adversarial learning so that both networks improve. The effectiveness of the proposed method was validated on a public mammogram database. Experimental results showed that the proposed ICADx framework could provide interpretability of masses as well as mass classification. This was mainly attributed to the fact that the proposed method was effectively trained to find the relationship between malignancy and interpretations via adversarial learning. These results imply that the proposed ICADx framework could be a promising approach for developing CADx systems.
