Hajo A. Reijers

From Chaos to Automation: Enabling the Use of Unstructured Data for Robotic Process Automation

Jul 15, 2025

Abstract:The growing volume of unstructured data within organizations poses significant challenges for data analysis and process automation. Unstructured data, which lacks a predefined format, encompasses various forms such as emails, reports, and scans. It is estimated to constitute approximately 80% of enterprise data. Despite the valuable insights it can offer, extracting meaningful information from unstructured data is more complex compared to structured data. Robotic Process Automation (RPA) has gained popularity for automating repetitive tasks, improving efficiency, and reducing errors. However, RPA is traditionally reliant on structured data, limiting its application to processes involving unstructured documents. This study addresses this limitation by developing the UNstructured Document REtrieval SyStem (UNDRESS), a system that uses fuzzy regular expressions, techniques for natural language processing, and large language models to enable RPA platforms to effectively retrieve information from unstructured documents. The research involved the design and development of a prototype system, and its subsequent evaluation based on text extraction and information retrieval performance. The results demonstrate the effectiveness of UNDRESS in enhancing RPA capabilities for unstructured data, providing a significant advancement in the field. The findings suggest that this system could facilitate broader RPA adoption across processes traditionally hindered by unstructured data, thereby improving overall business process efficiency.

HOEG: A New Approach for Object-Centric Predictive Process Monitoring

Apr 08, 2024

Abstract:Predictive Process Monitoring focuses on predicting future states of ongoing process executions, such as forecasting the remaining time. Recent developments in Object-Centric Process Mining have enriched event data with objects and their explicit relations between events. To leverage this enriched data, we propose the Heterogeneous Object Event Graph encoding (HOEG), which integrates events and objects into a graph structure with diverse node types. It does so without aggregating object features, thus creating a more nuanced and informative representation. We then adopt a heterogeneous Graph Neural Network architecture, which incorporates these diverse object features in prediction tasks. We evaluate the performance and scalability of HOEG in predicting remaining time, benchmarking it against two established graph-based encodings and two baseline models. Our evaluation uses three Object-Centric Event Logs (OCELs), including one from a real-life process at a major Dutch financial institution. The results indicate that HOEG competes well with existing models and surpasses them when OCELs contain informative object attributes and event-object interactions.

Measuring the Stability of Process Outcome Predictions in Online Settings

Oct 13, 2023

Abstract:Predictive Process Monitoring aims to forecast the future progress of process instances using historical event data. As predictive process monitoring is increasingly applied in online settings to enable timely interventions, evaluating the performance of the underlying models becomes crucial for ensuring their consistency and reliability over time. This is especially important in high risk business scenarios where incorrect predictions may have severe consequences. However, predictive models are currently usually evaluated using a single, aggregated value or a time-series visualization, which makes it challenging to assess their performance and, specifically, their stability over time. This paper proposes an evaluation framework for assessing the stability of models for online predictive process monitoring. The framework introduces four performance meta-measures: the frequency of significant performance drops, the magnitude of such drops, the recovery rate, and the volatility of performance. To validate this framework, we applied it to two artificial and two real-world event logs. The results demonstrate that these meta-measures facilitate the comparison and selection of predictive models for different risk-taking scenarios. Such insights are of particular value to enhance decision-making in dynamic business environments.

CREATED: Generating Viable Counterfactual Sequences for Predictive Process Analytics

Mar 28, 2023

Abstract:Predictive process analytics focuses on predicting future states, such as the outcome of running process instances. These techniques often use machine learning models or deep learning models (such as LSTM) to make such predictions. However, these deep models are complex and difficult for users to understand. Counterfactuals answer "what-if" questions, which are used to understand the reasoning behind the predictions. For example, what if instead of emailing customers, customers are being called? Would this alternative lead to a different outcome? Current methods to generate counterfactual sequences either do not take the process behavior into account, leading to generating invalid or infeasible counterfactual process instances, or heavily rely on domain knowledge. In this work, we propose a general framework that uses evolutionary methods to generate counterfactual sequences. Our framework does not require domain knowledge. Instead, we propose to train a Markov model to compute the feasibility of generated counterfactual sequences and adapt three other measures (delta in outcome prediction, similarity, and sparsity) to ensure their overall viability. The evaluation shows that we generate viable counterfactual sequences, outperform baseline methods in viability, and yield similar results when compared to the state-of-the-art method that requires domain knowledge.
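To illustrate how a Markov model can score the feasibility of a counterfactual sequence, here is a minimal sketch. It assumes a first-order Markov chain estimated from transition counts in an event log, with feasibility taken as the product of transition probabilities. The abstract does not specify the model order or any smoothing, so these choices, the function names `fit_markov` and `feasibility`, and the toy log are all hypothetical and not taken from the paper.

```python
from collections import Counter, defaultdict

# Artificial markers so that trace starts and ends are modelled as transitions too.
START, END = "<start>", "<end>"

def fit_markov(traces):
    """Estimate first-order transition probabilities from a list of traces."""
    counts = defaultdict(Counter)
    for trace in traces:
        path = [START, *trace, END]
        for current, nxt in zip(path, path[1:]):
            counts[current][nxt] += 1
    return {
        current: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for current, followers in counts.items()
    }

def feasibility(model, sequence):
    """Product of transition probabilities; 0.0 if any transition was never observed."""
    path = [START, *sequence, END]
    prob = 1.0
    for current, nxt in zip(path, path[1:]):
        prob *= model.get(current, {}).get(nxt, 0.0)
    return prob

# Toy event log (assumed data, for illustration only).
log = [
    ["register", "email customer", "approve"],
    ["register", "call customer", "approve"],
    ["register", "email customer", "reject"],
    ["register", "email customer", "approve"],
]
model = fit_markov(log)

called = feasibility(model, ["register", "call customer", "approve"])
reordered = feasibility(model, ["approve", "register"])
```

In this toy log, the counterfactual that replaces the email with a call receives a non-zero score because that path was observed, while a sequence that starts with `approve` scores zero. A score like this could serve as one term of a viability measure next to the outcome delta, similarity, and sparsity named in the abstract.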

The Analysis of Online Event Streams: Predicting the Next Activity for Anomaly Detection

Mar 17, 2022

Abstract:Anomaly detection in process mining focuses on identifying anomalous cases or events in process executions. The resulting diagnostics are used to provide measures to prevent fraudulent behavior, as well as to derive recommendations for improving process compliance and security. Most existing techniques focus on detecting anomalous cases in an offline setting. However, to identify potential anomalies in a timely manner and take immediate countermeasures, it is necessary to detect event-level anomalies online, in real-time. In this paper, we propose to tackle the online event anomaly detection problem using next-activity prediction methods. More specifically, we investigate the use of both ML models (such as RF and XGBoost) and deep models (such as LSTM) to predict the probabilities of next-activities and consider the events predicted unlikely as anomalies. We compare these predictive anomaly detection methods to four classical unsupervised anomaly detection approaches (such as Isolation forest and LOF) in the online setting. Our evaluation shows that the proposed method using ML models tends to outperform the one using a deep model, while both methods outperform the classical unsupervised approaches in detecting anomalous events.
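The idea of flagging events that a next-activity predictor considers unlikely can be sketched as follows. This is only an illustration under stated assumptions: a simple frequency table conditioned on the previous activity stands in for the RF, XGBoost, and LSTM models named in the abstract, and the class `FrequencyPredictor`, the function `detect_anomalies`, the threshold of 0.1, and the toy data are all hypothetical.

```python
from collections import Counter, defaultdict

class FrequencyPredictor:
    """Stand-in next-activity model: P(activity | previous activity) from counts."""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def fit(self, traces):
        for trace in traces:
            prev = None  # None marks the start of a case
            for activity in trace:
                self.counts[prev][activity] += 1
                prev = activity
        return self

    def proba(self, prev, activity):
        total = sum(self.counts[prev].values())
        return self.counts[prev][activity] / total if total else 0.0

def detect_anomalies(stream, predictor, threshold=0.1):
    """Process (case_id, activity) events one by one and flag the unlikely ones."""
    last_activity = {}  # per-case state kept while the stream is consumed
    flagged = []
    for case_id, activity in stream:
        prev = last_activity.get(case_id)
        if predictor.proba(prev, activity) < threshold:
            flagged.append((case_id, activity))
        last_activity[case_id] = activity
    return flagged

# Historical traces used for training (assumed data).
history = [
    ["create", "pay", "ship"],
    ["create", "pay", "ship"],
    ["create", "cancel"],
]
predictor = FrequencyPredictor().fit(history)

# Interleaved online stream of two running cases.
stream = [("c1", "create"), ("c2", "create"), ("c1", "pay"), ("c2", "ship"), ("c1", "ship")]
flagged = detect_anomalies(stream, predictor)
```

Here only the event `("c2", "ship")` is flagged, since shipping directly after creation never occurs in the training traces. Swapping in a learned classifier would only change how `proba` is computed; the streaming loop and the per-case state stay the same.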

All That Glitters Is Not Gold: Towards Process Discovery Techniques with Guarantees

Dec 23, 2020

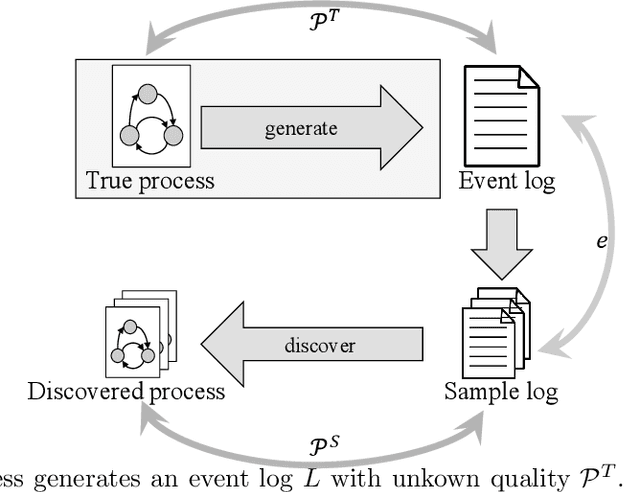

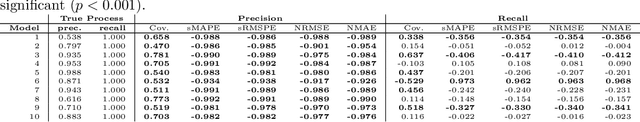

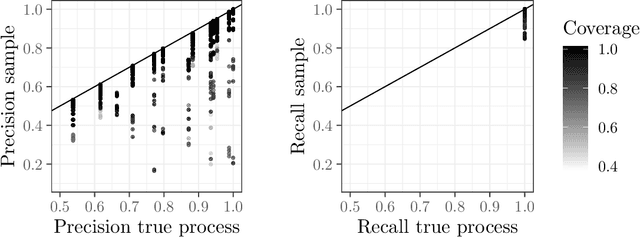

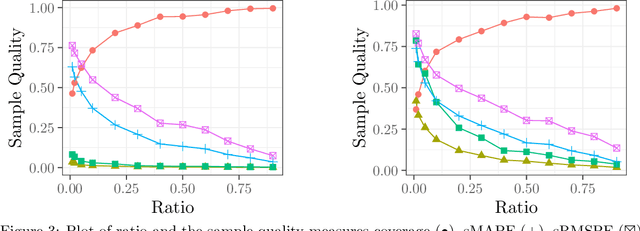

Abstract:The aim of a process discovery algorithm is to construct from event data a process model that describes the underlying, real-world process well. Intuitively, the better the quality of the event data, the better the quality of the model that is discovered. However, existing process discovery algorithms do not guarantee this relationship. We demonstrate this by using a range of quality measures for both event data and discovered process models. This paper is a call to the community of IS engineers to complement their process discovery algorithms with properties that relate qualities of their inputs to those of their outputs. To this end, we distinguish four incremental stages for the development of such algorithms, along with concrete guidelines for the formulation of relevant properties and experimental validation. We will also use these stages to reflect on the state of the art, which shows the need to move forward in our thinking about algorithmic process discovery.

Discovering Hierarchical Processes Using Flexible Activity Trees for Event Abstraction

Oct 16, 2020

Abstract:Processes, such as patient pathways, can be very complex, comprising hundreds of activities and dozens of interleaved subprocesses. While existing process discovery algorithms have proven able to construct high-quality models from clean logs of structured processes, applying them to logs of complex processes remains a challenge. The creation of a multi-level, hierarchical representation of a process can help to manage this complexity. However, current approaches that pursue this idea suffer from a variety of weaknesses. In particular, they do not deal well with interleaving subprocesses. In this paper, we propose FlexHMiner, a three-step approach to discover processes with multi-level interleaved subprocesses. We implemented FlexHMiner in the open source Process Mining toolkit ProM. We used seven real-life logs to compare the qualities of hierarchical models discovered using domain knowledge, random clustering, and flat approaches. Our results indicate that the hierarchical process models that FlexHMiner generates compare favorably to approaches that do not exploit hierarchy.

Identifying Patient Groups based on Frequent Patterns of Patient Samples

Apr 03, 2019

Abstract:Grouping patients meaningfully can give insights about the different types of patients, their needs, and their priorities. Finding meaningful groups is, however, very challenging, as background knowledge is often required to determine what a useful grouping is. In this paper, we propose an approach that is able to find groups of patients based on a small sample of positive examples given by a domain expert. As a result, the approach requires very limited effort from domain experts. The approach groups patients based on the activities and diagnostic/billing codes within their health pathways. To define such a grouping efficiently based on the sample of patients, frequent patterns of activities are discovered and used to measure the similarity between the care pathways of other patients and those of the patients in the sample group. This approach results in an insightful definition of the group. The proposed approach is evaluated using several datasets obtained from a large university medical center. The evaluation shows F1-scores of around 0.7 for grouping kidney injury and around 0.6 for diabetes.
