Haichuan Che

Lessons from Learning to Spin "Pens"

Jul 26, 2024

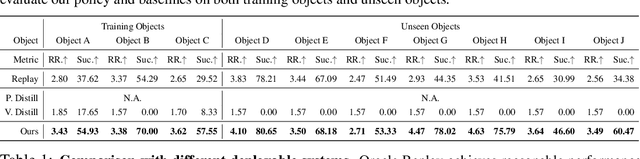

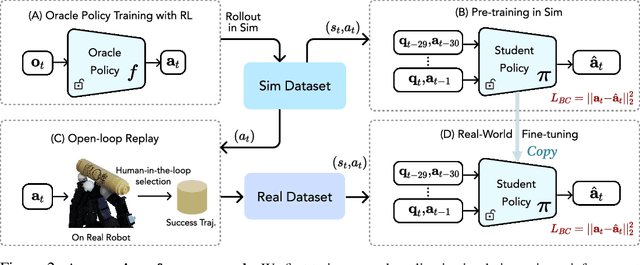

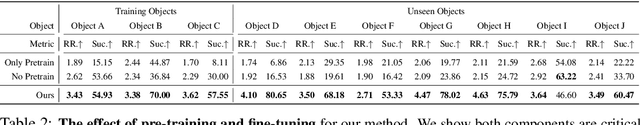

Abstract: In-hand manipulation of pen-like objects is an important skill in our daily lives, as many tools such as hammers and screwdrivers are similarly shaped. However, current learning-based methods struggle with this task due to a lack of high-quality demonstrations and the significant gap between simulation and the real world. In this work, we push the boundaries of learning-based in-hand manipulation systems by demonstrating the capability to spin pen-like objects. We first use reinforcement learning to train an oracle policy with privileged information and generate a high-fidelity trajectory dataset in simulation. This dataset serves two purposes: 1) pre-training a sensorimotor policy in simulation; 2) conducting open-loop trajectory replay in the real world. We then fine-tune the sensorimotor policy using these real-world trajectories to adapt it to real-world dynamics. With fewer than 50 trajectories, our policy learns to rotate more than ten pen-like objects with different physical properties for multiple revolutions. We present a comprehensive analysis of our design choices and share the lessons learned during development.
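The two-stage recipe above (pre-train on oracle-labelled simulation data, then fine-tune on a small real-world set) can be illustrated with a toy sketch. Everything here is an assumption for illustration, not the authors' implementation: the policy is linear, the "oracle" and "real" mappings are synthetic matrices (`W_oracle_sim`, `W_real` are hypothetical), pre-training is ridge-regression behavior cloning, and fine-tuning is plain gradient descent on mean squared error.

```python
import numpy as np

def fit_bc(obs, act, reg=1e-3):
    """Behavior cloning for a linear policy act ~= obs @ W via ridge regression."""
    d = obs.shape[1]
    return np.linalg.solve(obs.T @ obs + reg * np.eye(d), obs.T @ act)

def finetune(W, obs, act, lr=0.05, steps=200):
    """Adapt a pre-trained linear policy with gradient descent on squared error."""
    W = W.copy()
    n = obs.shape[0]
    for _ in range(steps):
        grad = (2.0 / n) * obs.T @ (obs @ W - act)
        W -= lr * grad
    return W

def mse(W, obs, act):
    return float(np.mean((obs @ W - act) ** 2))

rng = np.random.default_rng(0)
obs_dim, act_dim = 8, 4

# Hypothetical oracle behavior in simulation, and a shifted real-world mapping
W_oracle_sim = rng.normal(size=(obs_dim, act_dim))
W_real = W_oracle_sim + 0.3 * rng.normal(size=(obs_dim, act_dim))

# Large simulated dataset labelled by the oracle
obs_sim = rng.normal(size=(5000, obs_dim))
act_sim = obs_sim @ W_oracle_sim

# Small real-world dataset (e.g. 40 short trajectories of 10 steps each)
obs_real = rng.normal(size=(400, obs_dim))
act_real = obs_real @ W_real

W_pre = fit_bc(obs_sim, act_sim)          # stage 1: pre-train in simulation
W_ft = finetune(W_pre, obs_real, act_real)  # stage 2: fine-tune on real data

pre_err = mse(W_pre, obs_real, act_real)
ft_err = mse(W_ft, obs_real, act_real)
print(f"real-world error before fine-tuning: {pre_err:.4f}, after: {ft_err:.6f}")
```

The point of the toy is only that a policy fit to plentiful simulated labels starts close to, but not at, the real-world mapping, and a small real dataset is enough to close the remaining gap.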

Robot Synesthesia: In-Hand Manipulation with Visuotactile Sensing

Dec 04, 2023

Abstract: Executing contact-rich manipulation tasks necessitates the fusion of tactile and visual feedback. However, the distinct nature of these modalities poses significant challenges. In this paper, we introduce a system that leverages visual and tactile sensory inputs to enable dexterous in-hand manipulation. Specifically, we propose Robot Synesthesia, a novel point cloud-based tactile representation inspired by human tactile-visual synesthesia. This approach allows for the simultaneous and seamless integration of both sensory inputs, offering richer spatial information and facilitating better reasoning about robot actions. The method, trained in a simulated environment and then deployed to a real robot, is applicable to various in-hand object rotation tasks. Comprehensive ablations are performed on how the integration of vision and touch can improve reinforcement learning and Sim2Real performance. Our project page is available at https://yingyuan0414.github.io/visuotactile/ .
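One plausible reading of a point cloud-based tactile representation is to turn each active tactile sensor into a 3D point and merge it with the camera point cloud, tagging the source with an extra channel. The sketch below assumes taxel positions are already expressed in the camera frame (e.g. via forward kinematics), and the contact threshold, the 4-column layout, and the function names `tactile_to_points` and `fuse` are hypothetical choices, not the paper's exact design.

```python
import numpy as np

def tactile_to_points(sensor_xyz, readings, threshold=0.1):
    """Keep the 3D positions of taxels whose reading exceeds a contact threshold."""
    return sensor_xyz[readings > threshold]

def fuse(camera_xyz, tactile_xyz):
    """Concatenate both sources into one (N, 4) cloud; the last column flags tactile points."""
    cam = np.hstack([camera_xyz, np.zeros((len(camera_xyz), 1))])
    tac = np.hstack([tactile_xyz, np.ones((len(tactile_xyz), 1))])
    return np.vstack([cam, tac])

rng = np.random.default_rng(0)
camera_xyz = rng.uniform(-0.1, 0.1, size=(512, 3))  # object points from a depth camera
sensor_xyz = rng.uniform(-0.1, 0.1, size=(16, 3))   # taxel positions in the same frame
readings = np.array([0.0, 0.5, 0.05, 0.3] * 4)      # hypothetical contact readings

cloud = fuse(camera_xyz, tactile_to_points(sensor_xyz, readings))
print(cloud.shape)
```

Because touch and vision share one coordinate frame in this representation, a single point cloud encoder can consume both without a separate tactile branch.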

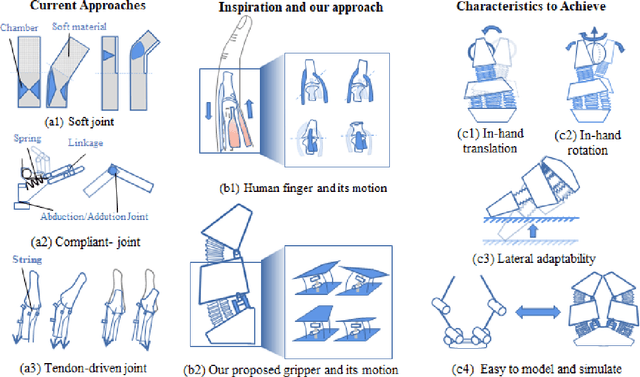

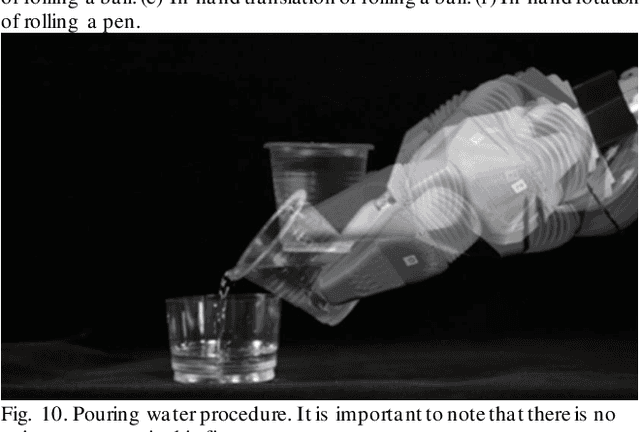

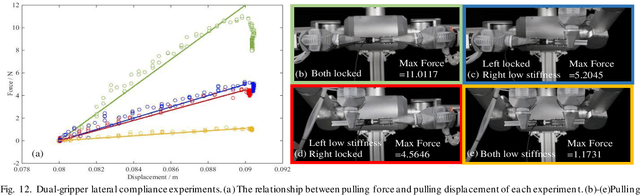

A Soft-Rigid Hybrid Gripper with Lateral Compliance and Dexterous In-hand Manipulation

Oct 19, 2021

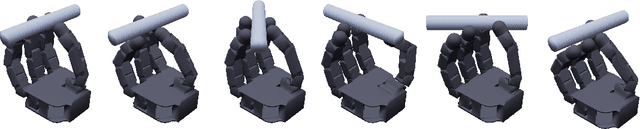

Abstract: Soft grippers are receiving growing attention due to their compliance-based interactive safety and dexterity. Hybrid grippers (soft actuators enhanced by rigid constraints) are a new trend in soft gripper design. With the right structural components actuated by soft actuators, they can achieve excellent grasping adaptability and payload while remaining easy to model and control with conventional kinematics. However, existing work has mostly focused on achieving superior payload and perception within simple planar workspaces, resulting in far less dexterity than conventional grippers. In this work, we take inspiration from the human metacarpophalangeal (MCP) joint and propose a new hybrid gripper design with 8 independent muscles. We show that adding the MCP complexity is critical to enabling a range of novel features in the hybrid gripper, including in-hand manipulation, lateral passive compliance, and new control modes. A prototype gripper was fabricated and tested on our proprietary dual-arm robot platform with vision-guided grasping. With very lightweight pneumatic bellows soft actuators, the gripper can grasp objects over 25 times its own weight with lateral compliance. Using the dual-arm platform, we demonstrate highly anthropomorphic dexterous manipulation with two hybrid grippers, from a tug-of-war on a rigid rod to passing a soft towel between the two grippers using in-hand manipulation. Alongside the novel features and performance specifications of the proposed hybrid gripper, we also present the underlying modeling, actuation, control, and experimental validation details, offering a promising approach to achieving enhanced dexterity, strength, and compliance in robotic grippers.
