Guotao Meng

Embedding Novel Views in a Single JPEG Image

Aug 30, 2021

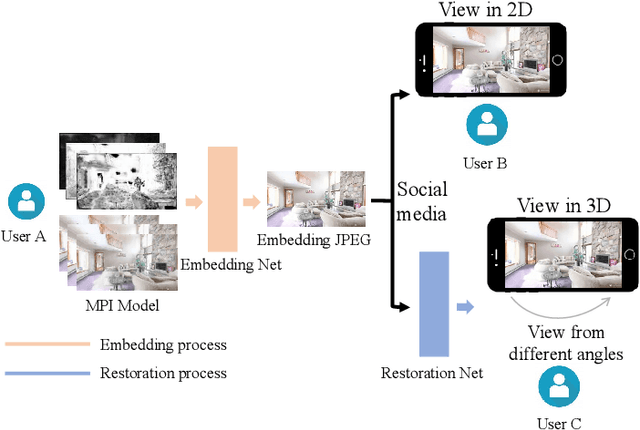

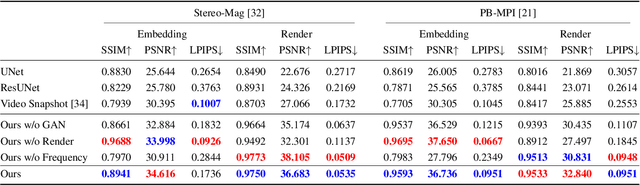

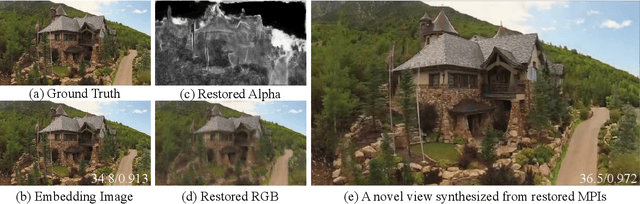

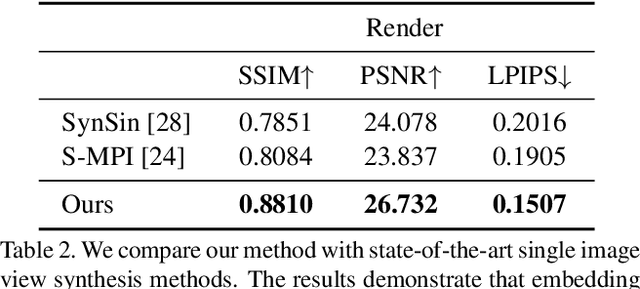

Abstract:We propose a novel approach for embedding novel views in a single JPEG image while preserving the perceptual fidelity of the modified JPEG image and the restored novel views. We adopt the popular novel view synthesis representation of multiplane images (MPIs). Our model first encodes 32 MPI layers (totally 128 channels) into a 3-channel JPEG image that can be decoded for MPIs to render novel views, with an embedding capacity of 1024 bits per pixel. We conducted experiments on public datasets with different novel view synthesis methods, and the results show that the proposed method can restore high-fidelity novel views from a slightly modified JPEG image. Furthermore, our method is robust to JPEG compression, color adjusting, and cropping. Our source code will be publicly available.

Video Super-Resolution with Long-Term Self-Exemplars

Jun 24, 2021

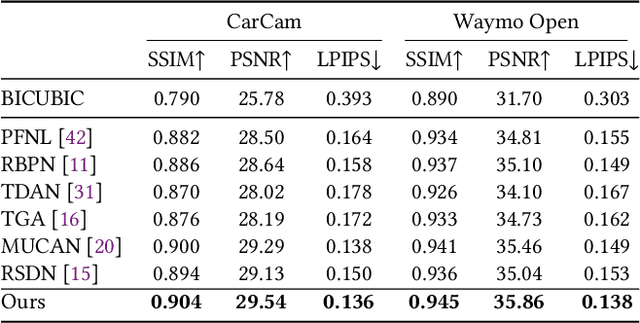

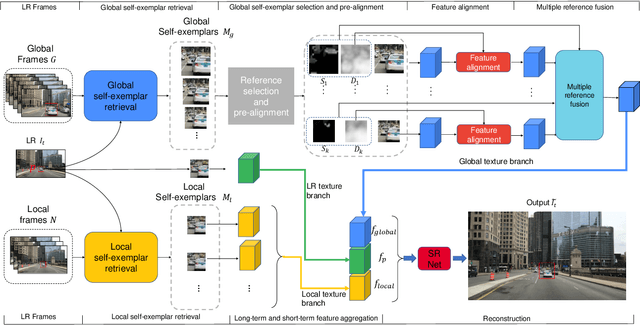

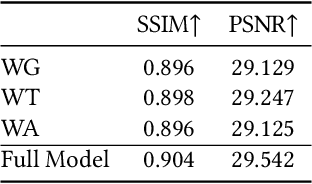

Abstract:Existing video super-resolution methods often utilize a few neighboring frames to generate a higher-resolution image for each frame. However, the redundant information between distant frames has not been fully exploited in these methods: corresponding patches of the same instance appear across distant frames at different scales. Based on this observation, we propose a video super-resolution method with long-term cross-scale aggregation that leverages similar patches (self-exemplars) across distant frames. Our model also consists of a multi-reference alignment module to fuse the features derived from similar patches: we fuse the features of distant references to perform high-quality super-resolution. We also propose a novel and practical training strategy for referenced-based super-resolution. To evaluate the performance of our proposed method, we conduct extensive experiments on our collected CarCam dataset and the Waymo Open dataset, and the results demonstrate our method outperforms state-of-the-art methods. Our source code will be publicly available.

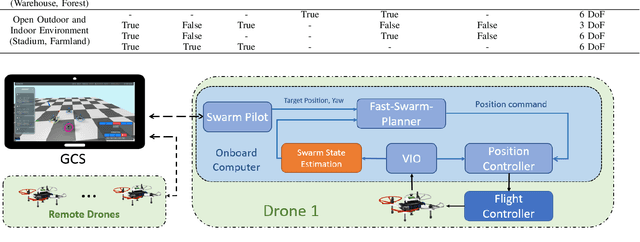

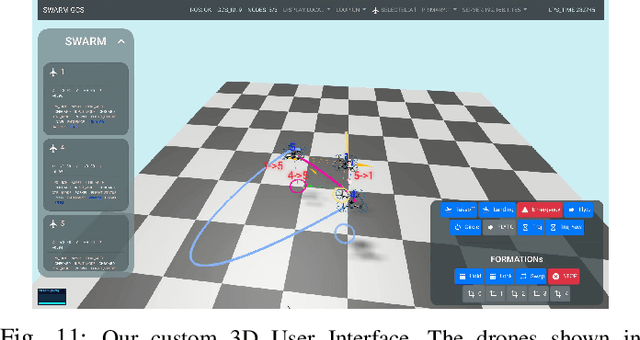

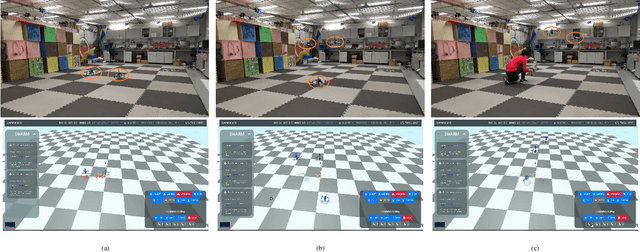

Omni-swarm: A Decentralized Omnidirectional Visual-Inertial-UWB State Estimation System for Aerial Swarm

Apr 04, 2021

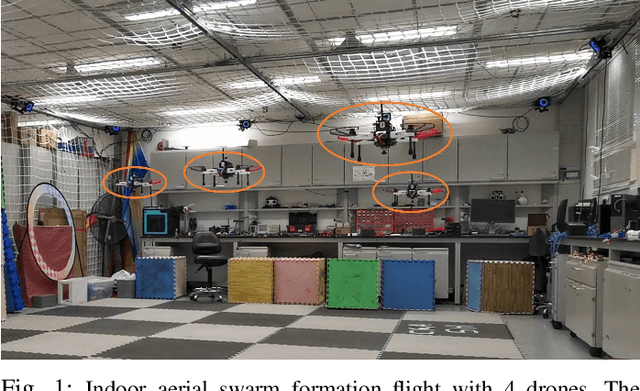

Abstract:The decentralized state estimation is one of the most fundamental components for autonomous aerial swarm systems in GPS-denied areas, which still remains a highly challenging research topic. To address this research niche, the Omni-swarm, a decentralized omnidirectional visual-inertial-UWB state estimation system for the aerial swarm is proposed in this paper. In order to solve the issues of observability, complicated initialization, insufficient accuracy and lack of global consistency, we introduce an omnidirectional perception system as the front-end of the Omni-swarm, consisting of omnidirectional sensors, which includes stereo fisheye cameras and ultra-wideband (UWB) sensors, and algorithms, which includes fisheye visual inertial odometry (VIO), multi-drone map-based localization and visual object detector. A graph-based optimization and forward propagation working as the back-end of the Omni-swarm to fuse the measurements from the front-end. According to the experiment result, the proposed decentralized state estimation method on the swarm system achieves centimeter-level relative state estimation accuracy while ensuring global consistency. Moreover, supported by the Omni-swarm, inter-drone collision avoidance can be accomplished in a whole decentralized scheme without any external device, demonstrating the potential of Omni-swarm to be the foundation of autonomous aerial swarm flights in different scenarios.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge