Guohang He

Transformer-based Single-Cell Language Model: A Survey

Jul 18, 2024

Abstract: Transformers have achieved significant success in natural language processing owing to their outstanding parallel processing capabilities and highly flexible attention mechanism. In addition, a growing number of transformer-based studies have been proposed to model single-cell data. In this review, we systematically summarize transformer-based single-cell language models and their applications. First, we provide a detailed introduction to the structure and principles of transformers. Then, we review single-cell language models and large language models for single-cell data analysis. Moreover, we explore the datasets and applications of single-cell language models in downstream tasks such as batch correction, cell clustering, cell type annotation, gene regulatory network inference, and perturbation response. Further, we discuss the challenges of single-cell language models and provide promising research directions. We hope this review will serve as an up-to-date reference for researchers interested in single-cell language models.

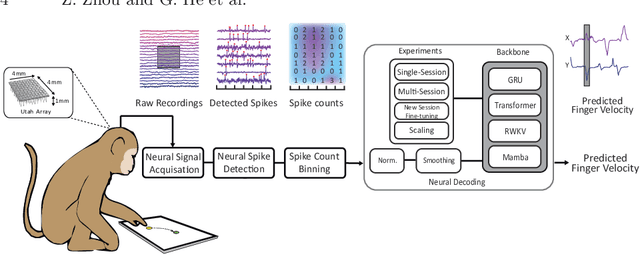

Benchmarking Neural Decoding Backbones towards Enhanced On-edge iBCI Applications

Jun 08, 2024

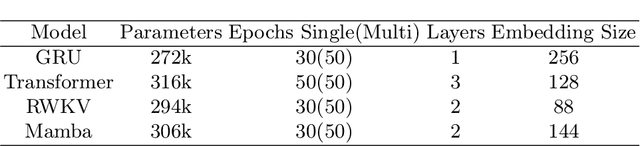

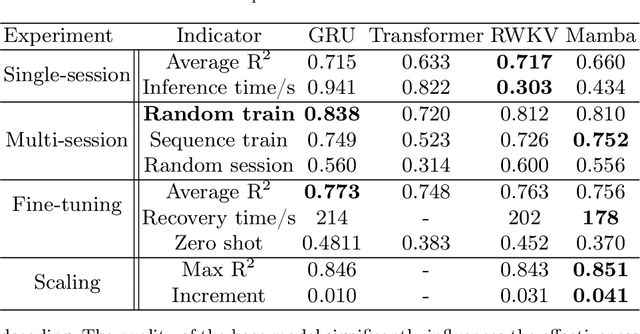

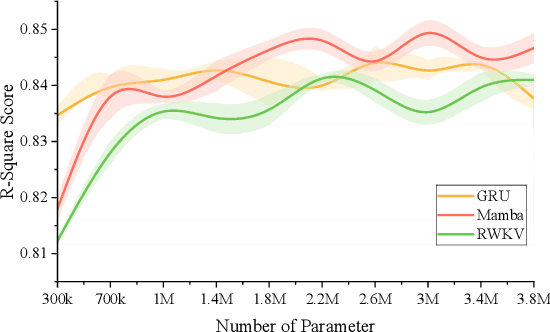

Abstract: Traditional invasive Brain-Computer Interfaces (iBCIs) typically depend on neural decoding processes conducted on workstations within laboratory settings, which prevents their everyday use. Implementing these decoding processes on edge devices, such as wearables, introduces considerable challenges related to computational demands, processing speed, and maintaining accuracy. This study seeks to identify an optimal neural decoding backbone that combines robust performance with fast inference suitable for edge deployment. We executed a series of neural decoding experiments involving nonhuman primates engaged in random reaching tasks, evaluating four candidate models, Gated Recurrent Unit (GRU), Transformer, Receptance Weighted Key Value (RWKV), and Selective State Space model (Mamba), across several metrics: single-session decoding, multi-session decoding, new session fine-tuning, inference speed, calibration speed, and scalability. The findings indicate that although the GRU model delivers sufficient accuracy, the RWKV and Mamba models are preferable due to their superior inference and calibration speeds. Additionally, RWKV and Mamba follow the scaling law, demonstrating improved performance with larger datasets and increased model sizes, whereas GRU shows less pronounced scalability, and the Transformer model requires computational resources that scale prohibitively. This paper presents a thorough comparative analysis of the four models in various scenarios. The results help pinpoint an optimal backbone that can handle increasing data volumes and is viable for edge implementation. This analysis provides essential insights for ongoing research and practical applications in the field.
