Guofeng Li

DART: Depth-Enhanced Accurate and Real-Time Background Matting

Feb 24, 2024

Abstract: Matting with a static background, often referred to as "Background Matting" (BGM), has garnered significant attention within the computer vision community due to its pivotal role in practical applications such as webcasting and photo editing. Nevertheless, achieving highly accurate background matting remains a formidable challenge, primarily owing to the limitations inherent in conventional RGB images, which are susceptible to varying lighting conditions and unforeseen shadows. In this paper, we propose DART, which leverages the rich depth information provided by RGB-Depth (RGB-D) cameras to enhance background matting performance in real time. Firstly, we adapt the original RGB-based BGM algorithm to incorporate depth information. The resulting model's output is then refined through Bayesian inference that incorporates a background depth prior. The posterior prediction is translated into a "trimap," which is subsequently fed into a state-of-the-art matting algorithm to generate more precise alpha mattes. To ensure real-time matting, a critical requirement for many real-world applications, we distill the backbone of our model from a larger and more versatile BGM network. Our experiments demonstrate the superior performance of the proposed method. Moreover, thanks to the distillation operation, our method achieves a processing speed of 33 frames per second (fps) on a mid-range edge-computing device. This high efficiency underscores DART's potential for deployment in mobile applications.
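The refinement step described above (a Bayesian update with a background depth prior, followed by conversion to a trimap) can be illustrated with a minimal sketch. This is not the authors' implementation: the Gaussian background likelihood, the constant foreground likelihood, the `sigma` value, and the trimap thresholds are all assumptions chosen for illustration, and the function names are hypothetical.

```python
import numpy as np

def posterior_foreground(p_fg, depth, bg_depth, sigma=0.05, fg_lik=0.5, eps=1e-6):
    """Per-pixel Bayes update of a foreground probability map.

    p_fg     : prior foreground probability from a matting network, shape (H, W)
    depth    : observed depth map, shape (H, W) (units assumed to be metres)
    bg_depth : pre-captured background depth map, shape (H, W)
    sigma    : assumed depth noise (hypothetical value)
    fg_lik   : assumed constant likelihood of any depth under "foreground"
    """
    # Assumed likelihood under "background": depth close to the stored background.
    lik_bg = np.exp(-0.5 * ((depth - bg_depth) / sigma) ** 2)
    numerator = fg_lik * p_fg
    denominator = numerator + lik_bg * (1.0 - p_fg)
    return numerator / (denominator + eps)

def to_trimap(posterior, lo=0.1, hi=0.9):
    """Map posterior probabilities to a trimap: 0 = background, 128 = unknown, 255 = foreground."""
    trimap = np.full(posterior.shape, 128, dtype=np.uint8)
    trimap[posterior <= lo] = 0
    trimap[posterior >= hi] = 255
    return trimap

# Three pixels: confident background, clearly in front of the background, ambiguous.
p_fg = np.array([[0.05, 0.5, 0.5]])
depth = np.array([[2.0, 1.0, 2.0]])
bg_depth = np.full((1, 3), 2.0)
trimap = to_trimap(posterior_foreground(p_fg, depth, bg_depth))
```

In this sketch, only the pixels left as "unknown" would need to be resolved by the downstream matting algorithm; the thresholds control how wide that band is.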

Deep Clustering: A Comprehensive Survey

Oct 09, 2022

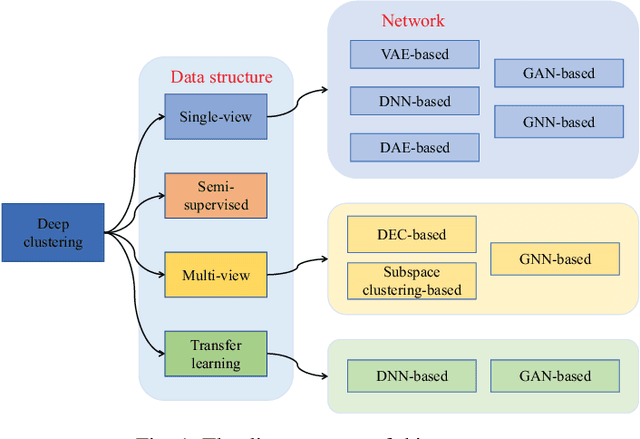

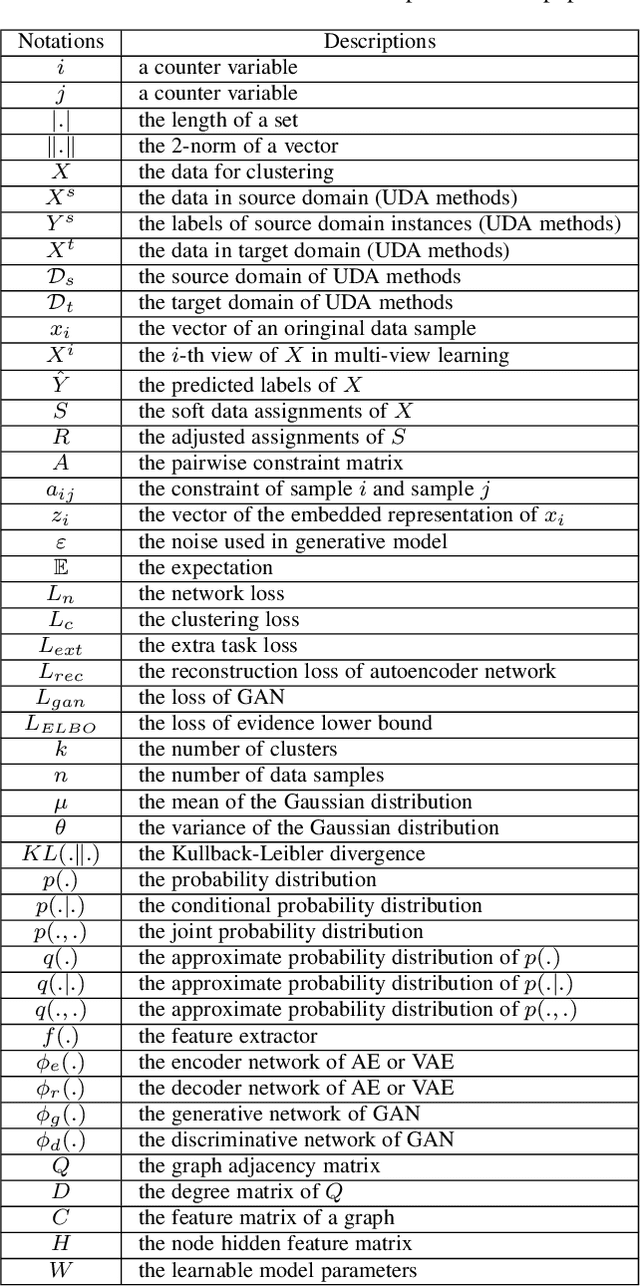

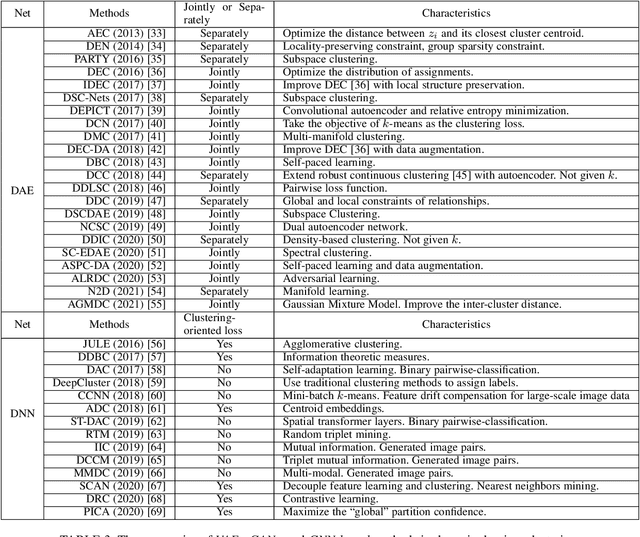

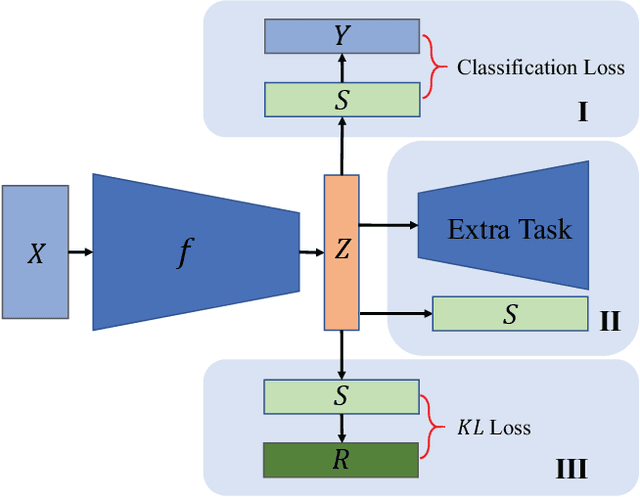

Abstract: Cluster analysis plays an indispensable role in machine learning and data mining. Learning a good data representation is crucial for clustering algorithms. Recently, deep clustering, which can learn clustering-friendly representations using deep neural networks, has been applied to a wide range of clustering tasks. Existing surveys of deep clustering mainly focus on single-view settings and network architectures, ignoring the complex application scenarios of clustering. To address this issue, in this paper we provide a comprehensive survey of deep clustering from the perspective of data sources. Given different data sources and initial conditions, we systematically distinguish the clustering methods in terms of methodology, prior knowledge, and architecture. Concretely, deep clustering methods are introduced according to four categories: traditional single-view deep clustering, semi-supervised deep clustering, deep multi-view clustering, and deep transfer clustering. Finally, we discuss the open challenges and potential future opportunities in different fields of deep clustering.

Deep Embedded Multi-view Clustering with Collaborative Training

Jul 26, 2020

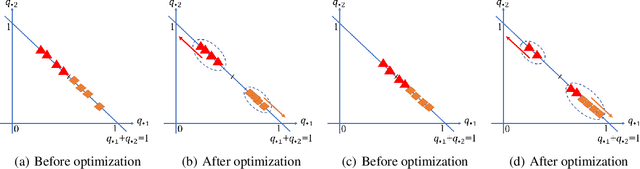

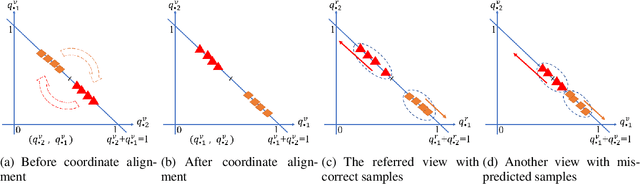

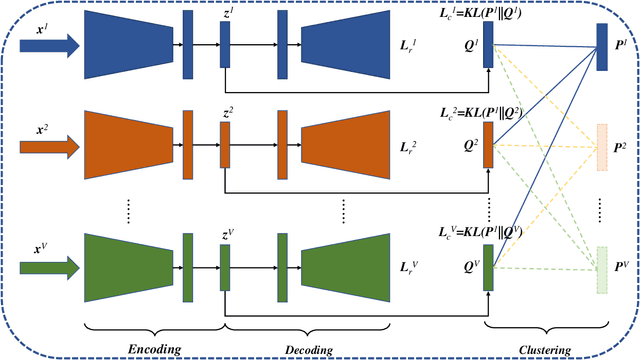

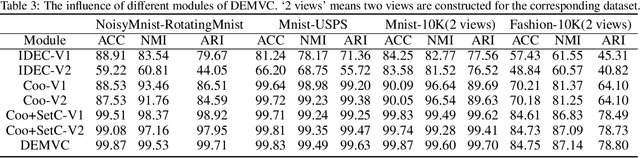

Abstract: Multi-view clustering has attracted increasing attention recently by utilizing information from multiple views. However, existing multi-view clustering methods either have high computational and space complexity or lack representation capability. To address these issues, we propose deep embedded multi-view clustering with collaborative training (DEMVC) in this paper. Firstly, the embedded representations of multiple views are learned individually by deep autoencoders. Then, both the consensus and the complementarity of multiple views are taken into account and a novel collaborative training scheme is proposed. Concretely, the feature representations and cluster assignments of all views are learned collaboratively. A new consistency strategy for cluster center initialization is further developed to improve the multi-view clustering performance with collaborative training. Experimental results on several popular multi-view datasets show that DEMVC achieves significant improvements over state-of-the-art methods.
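One way to picture learning cluster assignments of all views collaboratively is sketched below. The abstract does not specify the loss, so this sketch assumes the soft-assignment and target-distribution formulation commonly used in deep embedded clustering, and it assumes that a single reference view supplies the shared target for every view. The function names, the `ref` parameter, and the random embeddings are hypothetical, and the autoencoders are omitted.

```python
import numpy as np

def soft_assign(z, centers, alpha=1.0):
    """Student's t soft assignment of embeddings z (n, d) to cluster centers (k, d)."""
    d2 = ((z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    q = (1.0 + d2 / alpha) ** (-(alpha + 1.0) / 2.0)
    return q / q.sum(axis=1, keepdims=True)

def target_distribution(q):
    """Sharpened target: square the assignments and normalize by cluster frequency."""
    w = q ** 2 / q.sum(axis=0)
    return w / w.sum(axis=1, keepdims=True)

def kl_div(p, q, eps=1e-12):
    """KL(p || q) summed over all samples and clusters."""
    return float(np.sum(p * np.log((p + eps) / (q + eps))))

def collaborative_loss(view_embeddings, view_centers, ref=0):
    """Sum of per-view KL losses against one shared target (assumed scheme)."""
    qs = [soft_assign(z, c) for z, c in zip(view_embeddings, view_centers)]
    p = target_distribution(qs[ref])  # the reference view guides all views
    return sum(kl_div(p, q) for q in qs)

# Two views of the same 6 samples, embedded in 2-D, with 3 clusters per view.
rng = np.random.default_rng(0)
views = [rng.normal(size=(6, 2)), rng.normal(size=(6, 2))]
centers = [rng.normal(size=(3, 2)), rng.normal(size=(3, 2))]
loss = collaborative_loss(views, centers)
```

In a full training loop, this loss would be minimized with respect to both the encoder parameters and the cluster centers of each view; the sketch only evaluates it once on fixed embeddings.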
