Guido Cervone

MeltwaterBench: Deep learning for spatiotemporal downscaling of surface meltwater

Dec 13, 2025

Abstract: The Greenland ice sheet is melting at an accelerated rate due to processes that are not fully understood and hard to measure. The distribution of surface meltwater can help understand these processes and is observable through remote sensing, but current maps of meltwater face a trade-off: they are high-resolution in either time or space, but not both. We develop a deep learning model that creates gridded surface meltwater maps at daily 100 m resolution by fusing data streams from remote sensing observations and physics-based models. In particular, we spatiotemporally downscale regional climate model (RCM) outputs using synthetic aperture radar (SAR), passive microwave (PMW), and a digital elevation model (DEM) over the Helheim Glacier in Eastern Greenland from 2017-2023. Using SAR-derived meltwater as "ground truth", we show that a deep learning-based method that fuses all data streams is over 10 percentage points more accurate over our study area than existing non-deep-learning approaches that rely only on a regional climate model (83% vs. 95% Acc.) or passive microwave observations (72% vs. 95% Acc.). Alternatively, creating a gridded product through a running window calculation with SAR data underestimates extreme melt events, but also achieves notable accuracy (90%) and does not rely on deep learning. We evaluate standard deep learning methods (UNet and DeepLabv3+), and publish our spatiotemporally aligned dataset as a benchmark, MeltwaterBench, for intercomparisons with more complex data-driven downscaling methods. The code and data are available at https://github.com/blutjens/hrmelt.
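The accuracy figures above compare a predicted melt map against SAR-derived melt used as reference. A minimal sketch of such a pixel-wise accuracy follows, assuming binary melt/no-melt grids and that pixels without a valid reference value are skipped. The function name `pixel_accuracy`, the use of `None` for missing pixels, and the toy grids are illustrative assumptions, not taken from the paper or its repository.

```python
def pixel_accuracy(predicted, reference):
    """Fraction of valid pixels where the predicted melt flag matches the reference.

    Both inputs are 2-D lists of 0/1 flags. A reference value of None marks a
    pixel with no valid observation (an assumption made for this sketch).
    """
    correct = 0
    total = 0
    for pred_row, ref_row in zip(predicted, reference):
        for pred, ref in zip(pred_row, ref_row):
            if ref is None:  # skip pixels without a reference value
                continue
            total += 1
            if pred == ref:
                correct += 1
    if total == 0:
        raise ValueError("no valid reference pixels")
    return correct / total


# Toy 2x3 grids with invented values: 1 = melt, 0 = no melt
reference = [[1, 0, None], [1, 1, 0]]
predicted = [[1, 0, 1], [0, 1, 0]]
accuracy = pixel_accuracy(predicted, reference)  # 4 of 5 valid pixels match
```

Here one pixel is excluded and four of the remaining five agree, so the sketch yields 0.8. How the paper masks, weights, or aggregates pixels over time may differ.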

GIScience in the Era of Artificial Intelligence: A Research Agenda Towards Autonomous GIS

Apr 01, 2025

Abstract: The advent of generative AI exemplified by large language models (LLMs) opens new ways to represent and compute geographic information and transcend the process of geographic knowledge production, driving geographic information systems (GIS) towards autonomous GIS. Leveraging LLMs as the decision core, autonomous GIS can independently generate and execute geoprocessing workflows to perform spatial analysis. In this vision paper, we elaborate on the concept of autonomous GIS and present a framework that defines its five autonomous goals, five levels of autonomy, five core functions, and three operational scales. We demonstrate how autonomous GIS could perform geospatial data retrieval, spatial analysis, and map making with four proof-of-concept GIS agents. We conclude by identifying critical challenges and future research directions, including fine-tuning and self-growing decision cores, autonomous modeling, and examining the ethical and practical implications of autonomous GIS. By establishing the groundwork for a paradigm shift in GIScience, this paper envisions a future where GIS moves beyond traditional workflows to autonomously reason, derive, innovate, and advance solutions to pressing global challenges.

Improving the Thermal Infrared Monitoring of Volcanoes: A Deep Learning Approach for Intermittent Image Series

Sep 27, 2021

Abstract: Active volcanoes are globally distributed and pose societal risks at multiple geographic scales, ranging from local hazards to regional/international disruptions. Many volcanoes do not have continuous ground monitoring networks, meaning that satellite observations provide the only record of volcanic behavior and unrest. Among these remote sensing observations, thermal imagery is inspected daily by volcanic observatories for examining the early signs, onset, and evolution of eruptive activity. However, thermal scenes are often obstructed by clouds, meaning that forecasts must be made from image sequences whose scenes are only usable intermittently through time. Here, we explore forecasting this thermal data stream from a deep learning perspective using existing architectures that model sequences with varying spatiotemporal considerations. Additionally, we propose and evaluate new architectures that explicitly model intermittent image sequences. Using ASTER Kinetic Surface Temperature data for 9 volcanoes between 1999 and 2020, we found that a proposed architecture (ConvLSTM + Time-LSTM + U-Net) forecasts volcanic temperature imagery with the lowest RMSE (4.164 °C; other methods: 4.217-5.291 °C). Additionally, we examined performance on multiple time series derived from the thermal imagery and the effect of training with data from single volcanoes. Ultimately, we found that models with the lowest RMSE on forecasting imagery did not possess the lowest RMSE on recreating time series derived from that imagery and that training with individual volcanoes generally worsened performance relative to a multi-volcano data set. This work highlights the potential of data-driven deep learning models for volcanic unrest forecasting while revealing the need for carefully constructed optimization targets.

Ill-posed Surface Emissivity Retrieval from Multi-Geometry Hyperspectral Images using a Hybrid Deep Neural Network

Jul 17, 2021

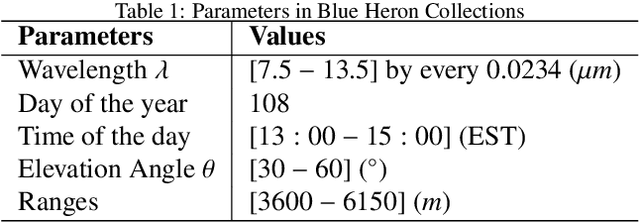

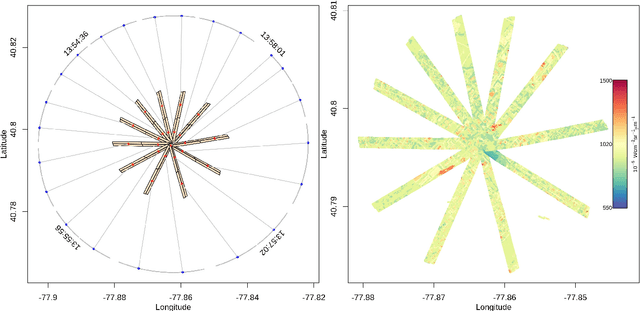

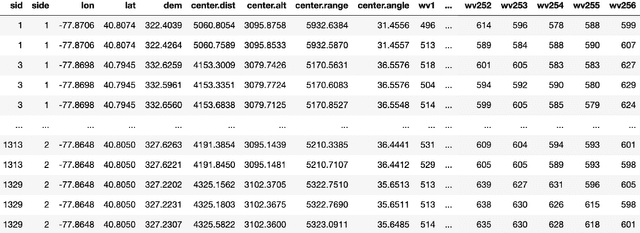

Abstract: Atmospheric correction is a fundamental task in remote sensing because observations are taken either of the atmosphere or looking through the atmosphere. Atmospheric correction errors can significantly alter the spectral signature of the observations and lead to invalid classifications or target detection. This is even more crucial when working with hyperspectral data, where a precise measurement of spectral properties is required. State-of-the-art physics-based atmospheric correction approaches require extensive prior knowledge about sensor characteristics, collection geometry, and environmental characteristics of the scene being collected. These approaches are computationally expensive, prone to inaccuracy due to a lack of sufficient environmental and collection information, and often impossible for real-time applications. In this paper, a geometry-dependent hybrid neural network is proposed for automatic atmospheric correction using multi-scan hyperspectral data collected from different geometries. The proposed network can characterize the atmosphere without any additional meteorological data. A grid-search method is also proposed to solve the temperature emissivity separation problem. Results show that the proposed network has the capacity to accurately characterize the atmosphere and estimate target emissivity spectra with a Mean Absolute Error (MAE) under 0.02 for 29 different materials. This solution can lead to accurate atmospheric correction to improve target detection for real-time applications.

Weather Analogs with a Machine Learning Similarity Metric for Renewable Resource Forecasting

Mar 09, 2021

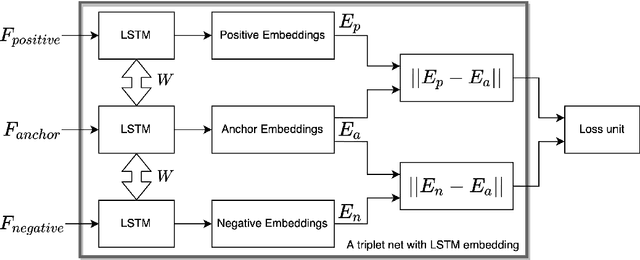

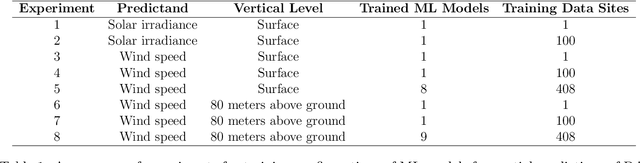

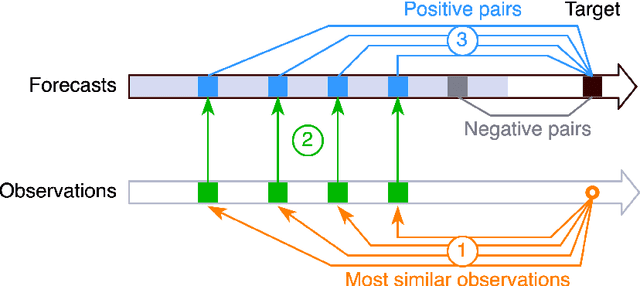

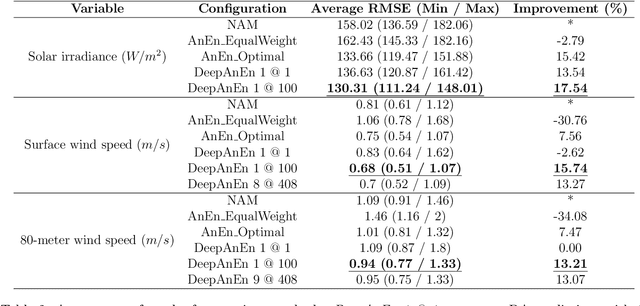

Abstract: The Analog Ensemble (AnEn) technique has been shown to be effective on several weather problems. Unlike previous weather analogs that are sought within a large spatial domain and an extended temporal window, AnEn strictly confines space and time, and independently generates results at each grid point within a short time window. AnEn can find similar forecasts that lead to accurate and calibrated ensemble forecasts. The core of the AnEn technique is a similarity metric that sorts historical forecasts with respect to a new target prediction. A commonly used metric is Euclidean distance. However, a significant difficulty in using this metric is the definition of the weights for all the parameters. Generally, feature selection and extensive weight search are needed. This paper proposes a novel definition of weather analogs through a Machine Learning (ML) based similarity metric. The similarity metric uses neural networks that are trained and instantiated to search for weather analogs. This new metric allows incorporating all variables without requiring prior feature selection and weight optimization. Experiments are presented on the application of this new metric to forecast wind speed and solar irradiance. Results show that the ML metric generally outperforms the original metric. The ML metric has a better capability to correct for larger errors and to take advantage of a larger search repository. Spatial predictions using a learned metric also show the ability to define effective latent features that are transferable to other locations.
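The baseline the abstract refers to, ranking historical forecasts by a weighted Euclidean distance to a target forecast, can be sketched as follows. This is a simplified illustration, not the authors' implementation: it compares forecasts at a single time (no short temporal window, no per-variable standardization), and the function names, weights, and toy values are all assumptions made for the example.

```python
import math


def weighted_distance(a, b, weights):
    """Weighted Euclidean distance between two forecast vectors."""
    return math.sqrt(sum(w * (x - y) ** 2 for w, x, y in zip(weights, a, b)))


def analog_ensemble(target, past_forecasts, past_observations, weights, k):
    """Return the observations paired with the k past forecasts closest to target."""
    # Rank historical indices by similarity of their forecast to the target
    ranked = sorted(
        range(len(past_forecasts)),
        key=lambda i: weighted_distance(target, past_forecasts[i], weights),
    )
    # The ensemble members are the observations that verified the best analogs
    return [past_observations[i] for i in ranked[:k]]


# Toy archive with invented values: forecasts are (wind speed m/s, temperature degC)
past_forecasts = [(5.0, 10.0), (7.0, 12.0), (5.5, 9.0), (12.0, 20.0)]
past_observations = [4.8, 7.4, 5.1, 11.0]  # observed wind speed for each forecast

ensemble = analog_ensemble(
    target=(5.2, 10.0),
    past_forecasts=past_forecasts,
    past_observations=past_observations,
    weights=(1.0, 0.1),  # hand-picked; choosing these is the difficulty noted above
    k=2,
)
```

With these toy values the two closest analogs are the first and third archive entries, so the ensemble is `[4.8, 5.1]`. The hand-set `weights` tuple is the part the abstract proposes to replace with a learned similarity metric.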

Using Long Short-Term Memory and Internet of Things for localized surface temperature forecasting in an urban environment

Feb 04, 2021

Abstract: Rising temperature is one of the key indicators of a warming climate, and it can cause extensive stress to biological systems as well as built structures. Because of the heat island effect, warming is most severe in urban environments, where a dense human-built environment reduces vegetation. Adequately monitoring local temperature dynamics is essential to mitigate the risks associated with increasing temperatures; mitigation ranges from short-term strategies to protect people and animals to long-term strategies for building new structures and coping with extreme events. Observed temperature is also a very important input for atmospheric models, and accurate data can lead to better future forecasts. Ambient temperature collected at ground level can have a higher variability than regional weather forecasts, which fail to capture the local dynamics. There remains a clear need for accurate air temperature prediction at the sub-urban scale at high temporal and spatial resolution. This research proposes a framework based on a Long Short-Term Memory (LSTM) deep learning network to generate day-ahead hourly temperature forecasts with high spatial resolution. A case study is shown that uses historical in-situ observations and Internet of Things (IoT) observations for New York City, USA. By leveraging the historical air temperature data from in-situ observations, the LSTM model can be exposed to more historical patterns that might not be present in the IoT observations. Meanwhile, by using IoT observations, the spatial resolution of air temperature predictions is significantly improved.

Probabilistic Forecasting using Deep Generative Models

Sep 26, 2019

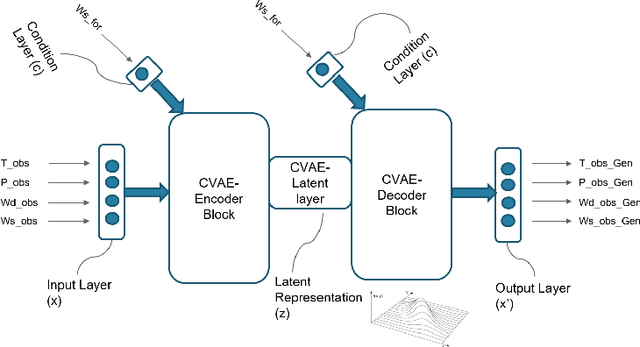

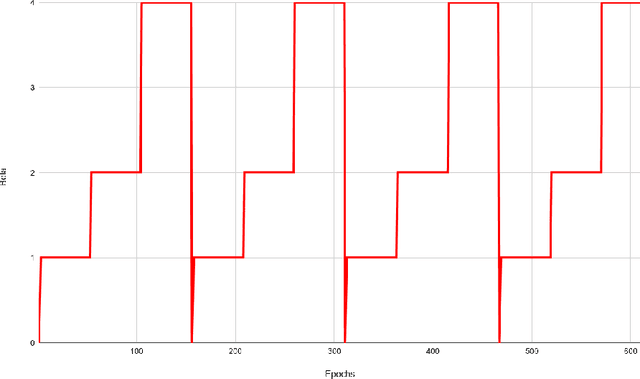

Abstract: The Analog Ensemble (AnEn) method tries to estimate the probability distribution of the future state of the atmosphere with a set of past observations that correspond to the best analogs of a deterministic Numerical Weather Prediction (NWP). This model post-processing method has been successfully used to improve the forecast accuracy for several weather-related applications including air quality, and short-term wind and solar power forecasting, to name a few. In order to provide a meaningful probabilistic forecast, the AnEn method requires storing a historical set of past predictions and observations in memory for a period of at least several months and spanning the seasons relevant for the prediction of interest. Although the memory and computing costs of the AnEn method are lower than those of a brute-force dynamical ensemble approach, for a large number of stations and large datasets, the amount of memory required for AnEn can easily become prohibitive. Furthermore, in order to find the best analogs associated with a certain prediction produced by an NWP model, the current approach requires applying a similarity metric over the entire historical dataset, which may take a substantial amount of time. In this work, we investigate an alternative way to implement the AnEn method using deep generative models. By doing so, a generative model can entirely or partially replace the dataset of pairs of predictions and observations, reducing the amount of memory required to produce the probabilistic forecast by several orders of magnitude. Furthermore, the generative model can generate a meaningful set of analogs associated with a certain forecast in constant time without performing any search, saving a considerable amount of time even in the presence of huge historical datasets.
