Alessandro Fanfarillo

Probabilistic Forecasting using Deep Generative Models

Sep 26, 2019

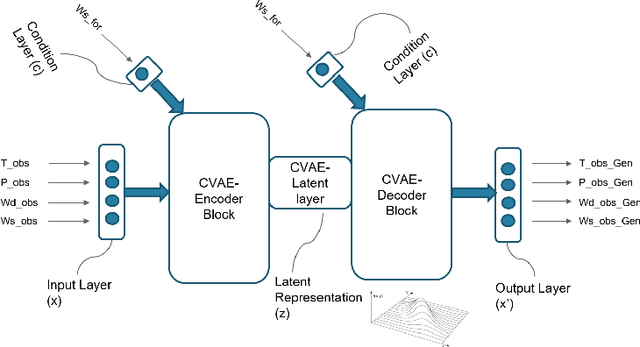

Abstract: The Analog Ensemble (AnEn) method estimates the probability distribution of the future state of the atmosphere from a set of past observations that correspond to the best analogs of a deterministic Numerical Weather Prediction (NWP). This model post-processing method has been used successfully to improve forecast accuracy in several weather-related applications, including air quality and short-term wind and solar power forecasting. To provide a meaningful probabilistic forecast, the AnEn method requires storing a historical set of past predictions and observations in memory for a period of at least several months, spanning the seasons relevant to the prediction of interest. Although the memory and computing costs of the AnEn method are lower than those of a brute-force dynamical ensemble approach, for a large number of stations and large datasets the amount of memory required for AnEn can easily become prohibitive. Furthermore, to find the best analogs associated with a given prediction produced by an NWP model, the current approach applies a similarity metric over the entire historical dataset, which may take a substantial amount of time. In this work, we investigate an alternative way to implement the AnEn method using deep generative models. A generative model can entirely or partially replace the dataset of prediction-observation pairs, reducing the amount of memory required to produce the probabilistic forecast by several orders of magnitude. Moreover, the generative model can generate a meaningful set of analogs associated with a given forecast in constant time without performing any search, saving a considerable amount of time even in the presence of huge historical datasets.
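
To make the search-based baseline described in the abstract concrete, here is a minimal sketch of a brute-force analog lookup. It is an illustration only, not the authors' implementation: the Euclidean distance is an assumed stand-in for the unspecified similarity metric, and the function name `analog_ensemble` and the toy data are hypothetical.

```python
import math

def analog_ensemble(history, forecast, k=3):
    """Return the observations paired with the k past forecasts closest to `forecast`.

    history:  list of (past_forecast, observation) pairs, where past_forecast
              is a sequence of predictor values and observation is a float.
    forecast: sequence of predictor values for the current NWP prediction.
    """
    # Assumption: Euclidean distance plays the role of the similarity metric.
    # Every stored pair is visited, so cost and memory grow with the history.
    ranked = sorted(history, key=lambda pair: math.dist(pair[0], forecast))
    return [observation for _, observation in ranked[:k]]

# Hypothetical toy history: (wind-speed forecast, temperature forecast) -> observed wind speed
history = [
    ((5.0, 280.0), 4.6),
    ((9.0, 285.0), 8.1),
    ((5.5, 281.0), 5.2),
    ((12.0, 290.0), 11.4),
    ((4.8, 279.5), 4.9),
]

ensemble = analog_ensemble(history, forecast=(5.2, 280.5), k=3)
ensemble_mean = sum(ensemble) / len(ensemble)
```

The returned observations form the ensemble whose spread approximates the forecast uncertainty. The generative approach proposed in the abstract would replace both the stored `history` and the sorted scan with samples drawn from a trained model.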

AITuning: Machine Learning-based Tuning Tool for Run-Time Communication Libraries

Sep 13, 2019

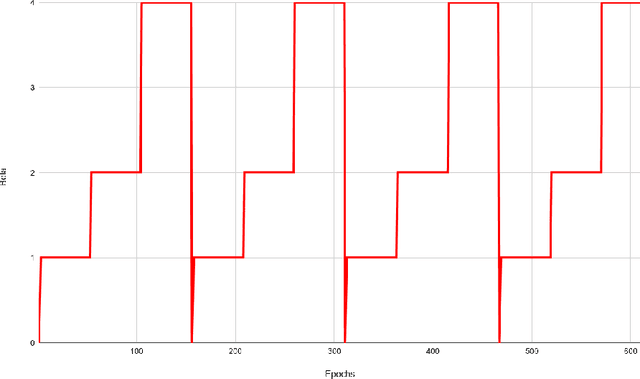

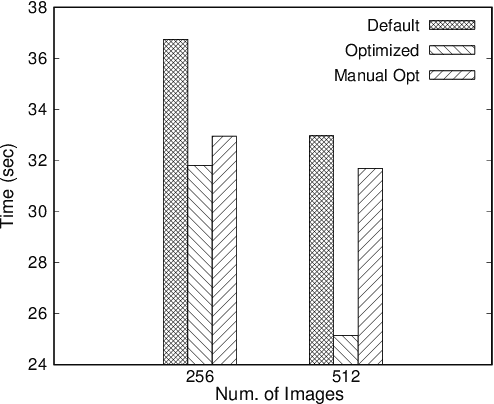

Abstract: In this work, we address the problem of tuning communication libraries using a deep reinforcement learning approach. Reinforcement learning is a machine learning technique that is highly effective at solving game-like problems. Tuning a set of parameters in a communication library to get better performance in a parallel application can itself be expressed as a game: find the combination, or path, of settings that provides the best reward. Although AITuning has been designed to be used with different run-time libraries, this work focuses on applying it to the OpenCoarrays run-time communication library, built on top of MPI-3. This work not only shows the potential of using a reinforcement learning algorithm for tuning communication libraries, but also demonstrates how the MPI Tool Information Interface, introduced by the MPI-3 standard, can be used effectively by run-time libraries to improve performance without human intervention.
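
The "tuning as a game" framing above can be sketched with a much simpler learner than the one the abstract describes. The example below uses a tabular epsilon-greedy bandit, not deep reinforcement learning, and it does not call MPI or the MPI Tool Information Interface. The parameter names `eager_limit` and `async_progress`, the `tune` function, and the synthetic `fake_runtime` cost model are all hypothetical placeholders.

```python
import itertools
import random

def tune(measure_runtime, search_space, episodes=200, epsilon=0.1, seed=0):
    """Pick the configuration with the best average reward (negative runtime)."""
    rng = random.Random(seed)
    names = list(search_space)
    configs = list(itertools.product(*(search_space[n] for n in names)))
    totals = {c: 0.0 for c in configs}
    counts = {c: 0 for c in configs}

    def mean_reward(config):
        # Untested configurations get +inf so that each one is tried at least once.
        return totals[config] / counts[config] if counts[config] else float("inf")

    for _ in range(episodes):
        if rng.random() < epsilon:
            choice = rng.choice(configs)            # explore a random setting
        else:
            choice = max(configs, key=mean_reward)  # exploit the best so far
        reward = -measure_runtime(dict(zip(names, choice)))
        totals[choice] += reward
        counts[choice] += 1

    tested = [c for c in configs if counts[c]]
    best = max(tested, key=lambda c: totals[c] / counts[c])
    return dict(zip(names, best))

def fake_runtime(params):
    # Synthetic cost model standing in for a real application run.
    penalty = abs(params["eager_limit"] - 8192) / 8192
    return 1.0 + penalty + (0.0 if params["async_progress"] else 0.5)

# Hypothetical tunable parameters; not real control variables of any library.
space = {"eager_limit": [1024, 8192, 65536], "async_progress": [False, True]}
best = tune(fake_runtime, space)
```

In a real setting, `measure_runtime` would run the parallel application with the chosen settings and return its wall-clock time, and a learned policy would replace the lookup table once the parameter space becomes too large to enumerate.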
