Guangming Huang

Similarity-Dissimilarity Loss with Supervised Contrastive Learning for Multi-label Classification

Oct 17, 2024

Abstract: Supervised contrastive learning has been explored as a way to use label information for multi-label classification, but determining positive samples in the multi-label scenario remains challenging. Previous studies have examined strategies for identifying positive samples, considering the label overlap proportion between anchors and samples. However, they ignore the various relations between given anchors and samples, as well as how to dynamically adjust the weights in contrastive loss functions based on different relations, leading to great ambiguity. In this paper, we introduce five distinct relations between multi-label samples and propose a Similarity-Dissimilarity Loss with contrastive learning for multi-label classification. Our loss function re-weights the loss by computing the similarity and dissimilarity between positive samples and a given anchor based on the introduced relations. We mainly conduct experiments for multi-label text classification on MIMIC datasets, then further extend the evaluation to MS-COCO. The experimental results show that our proposed loss effectively improves the performance of all encoders under the supervised contrastive learning paradigm, demonstrating its effectiveness and robustness.
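
The abstract does not enumerate the five relations or give the re-weighting formula, so the following is only a rough sketch of the general idea, not the paper's method. It assumes the relations are the five set relations that can hold between two label sets, and it uses a Jaccard overlap as a stand-in for the "label overlap proportion" mentioned above. The function names `relation` and `overlap_weight` are hypothetical.

```python
from typing import Set


def relation(anchor: Set[int], sample: Set[int]) -> str:
    """Classify how a sample's label set relates to the anchor's label set.

    Assumption: these five set relations are an illustrative guess,
    not the definitions used in the paper.
    """
    if anchor == sample:
        return "identical"
    if not (anchor & sample):
        return "disjoint"
    if sample < anchor:
        return "sample_subset_of_anchor"
    if anchor < sample:
        return "anchor_subset_of_sample"
    return "partial_overlap"


def overlap_weight(anchor: Set[int], sample: Set[int]) -> float:
    """Jaccard overlap of two label sets, used here as a stand-in weight.

    Assumption: the paper's similarity and dissimilarity terms are not
    given in the abstract; Jaccard overlap is swapped in for illustration.
    """
    union = anchor | sample
    if not union:
        return 0.0
    return len(anchor & sample) / len(union)


if __name__ == "__main__":
    anchor = {1, 2}
    for sample in [{1, 2}, {3}, {1}, {1, 2, 3}, {2, 3}]:
        print(sorted(sample), relation(anchor, sample), overlap_weight(anchor, sample))
```

In a contrastive loss, a weight of this kind could scale each positive pair's contribution, so that a sample sharing every label with the anchor counts more than one sharing a single label.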

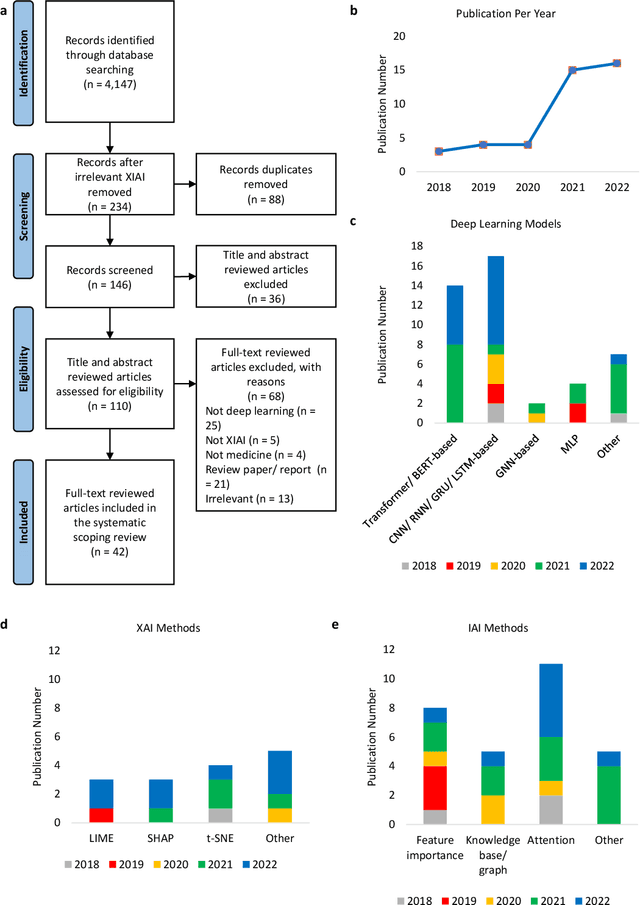

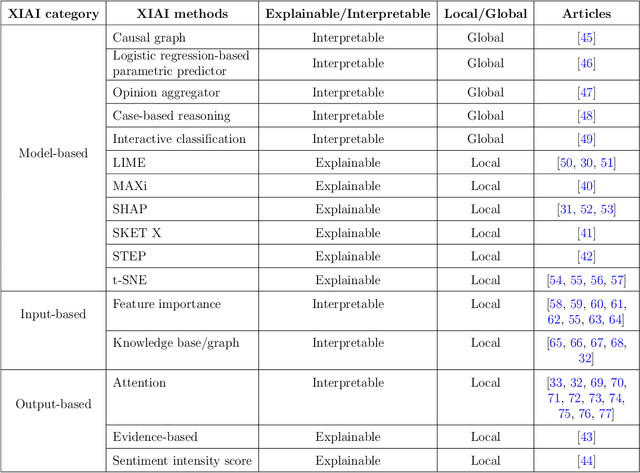

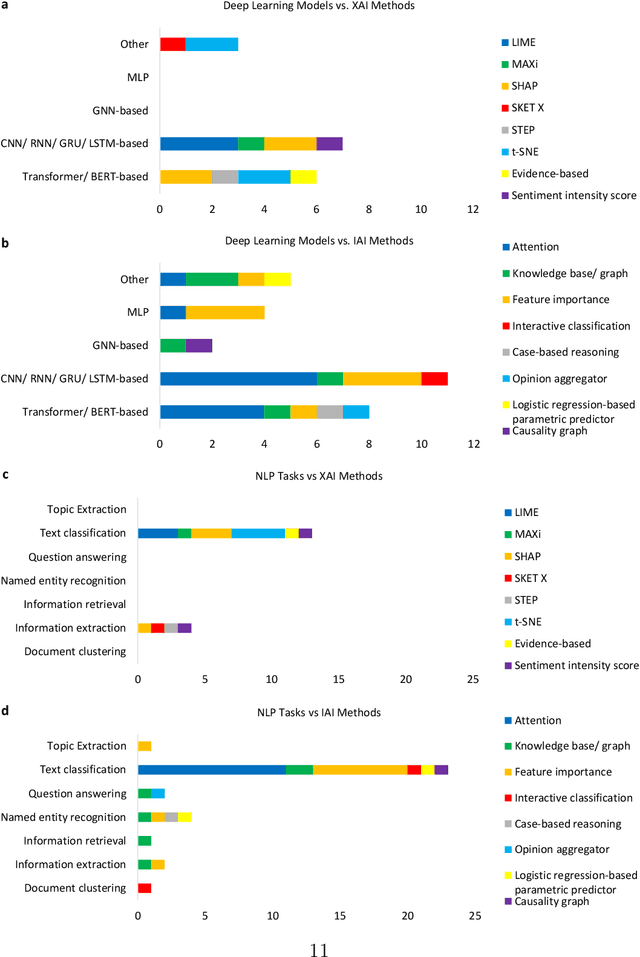

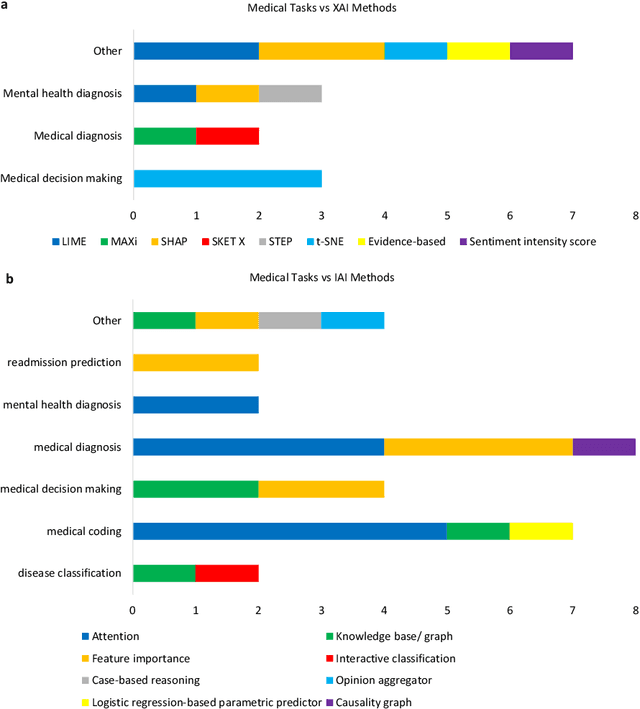

From explainable to interpretable deep learning for natural language processing in healthcare: how far from reality?

Mar 18, 2024

Abstract: Deep learning (DL) has substantially enhanced healthcare research by addressing various natural language processing (NLP) tasks. Yet, the increasing complexity of DL-based NLP methods necessitates transparent model interpretability, or at least explainability, for reliable decision-making. This work presents a thorough scoping review on explainable and interpretable DL in healthcare NLP. The term "XIAI" (eXplainable and Interpretable Artificial Intelligence) was introduced to distinguish XAI from IAI. Methods were further categorized based on their functionality (model-, input-, output-based) and scope (local, global). Our analysis shows that attention mechanisms were the most dominant emerging IAI. Moreover, IAI is increasingly used against XAI. The major challenges identified are that most XIAI do not explore "global" modeling processes, the lack of best practices, and the unmet need for systematic evaluation and benchmarks. Important opportunities were raised, such as using "attention" to enhance multi-modal XIAI for personalized medicine and combining DL with causal reasoning. Our discussion encourages the integration of XIAI in LLMs and domain-specific smaller models. Our review can stimulate further research and benchmarks toward improving inherent IAI and engaging complex NLP in healthcare.

Prompting Explicit and Implicit Knowledge for Multi-hop Question Answering Based on Human Reading Process

Mar 04, 2024

Abstract: Pre-trained language models (PLMs) leverage chains-of-thought (CoT) to simulate human reasoning and inference processes, achieving proficient performance in multi-hop QA. However, a gap persists between PLMs' reasoning abilities and those of humans when tackling complex problems. Psychological studies suggest a vital connection between explicit information in passages and human prior knowledge during reading. Nevertheless, current research has given insufficient attention to linking input passages and PLMs' pre-training-based knowledge from the perspective of human cognition studies. In this study, we introduce a Prompting Explicit and Implicit knowledge (PEI) framework, which uses prompts to connect explicit and implicit knowledge, aligning with the human reading process for multi-hop QA. We consider the input passages as explicit knowledge, employing them to elicit implicit knowledge through unified prompt reasoning. Furthermore, our model incorporates type-specific reasoning via prompts, a form of implicit knowledge. Experimental results show that PEI performs comparably to the state-of-the-art on HotpotQA. Ablation studies confirm the efficacy of our model in bridging and integrating explicit and implicit knowledge.

Label-free timing analysis of modularized nuclear detectors with physics-constrained deep learning

Apr 28, 2023

Abstract: Pulse timing is an important topic in nuclear instrumentation, with far-reaching applications from high energy physics to radiation imaging. While high-speed analog-to-digital converters are becoming increasingly developed and accessible, their potential uses and merits in nuclear detector signal processing are still uncertain, partially because the associated timing algorithms are not fully understood and utilized. In this paper, we propose a novel method based on deep learning for timing analysis of modularized nuclear detectors without an explicit need for labelled event data. By taking advantage of the inner time correlation of individual detectors, a label-free loss function with a specially designed regularizer is formed to supervise the training of neural networks towards a meaningful and accurate mapping function. We mathematically demonstrate the existence of the optimal function desired by the method, and give a systematic algorithm for training and calibration of the model. The proposed method is validated on two experimental datasets. In the toy experiment, the neural network model achieves a single-channel time resolution of 8.8 ps and exhibits robustness against concept drift in the dataset. In the electromagnetic calorimeter experiment, several neural network models (FC, CNN and LSTM) are tested to show their conformance to the underlying physical constraint and to judge their performance against traditional methods. Overall, the proposed method works well under both ideal and noisy experimental conditions and recovers the time information from waveform samples successfully and precisely.
