From explainable to interpretable deep learning for natural language processing in healthcare: how far from reality?

Mar 18, 2024

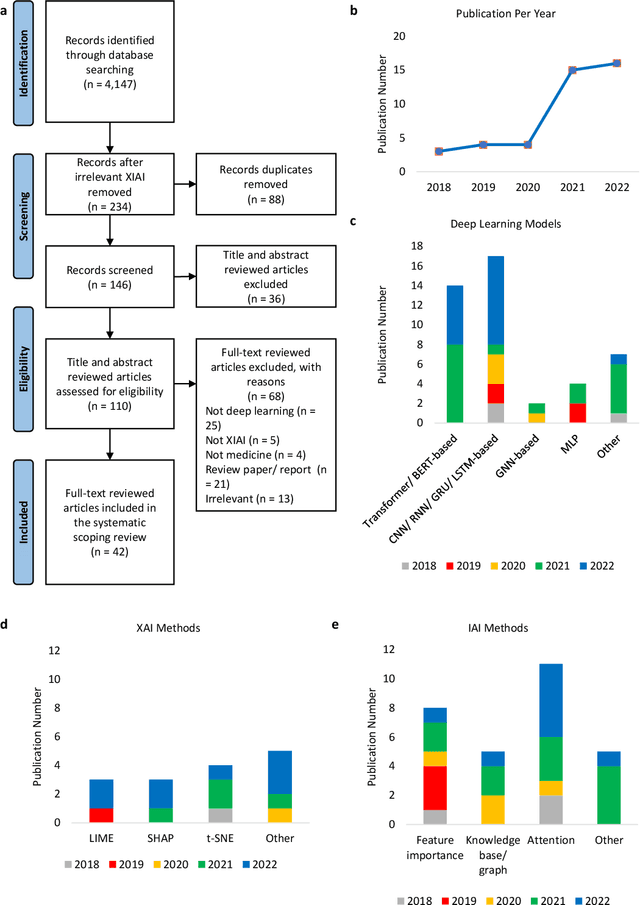

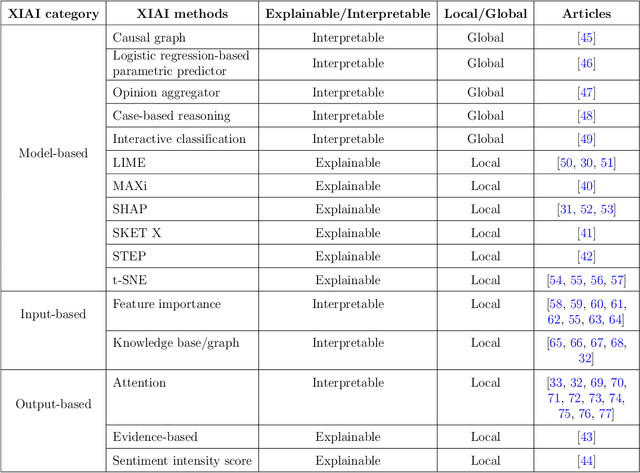

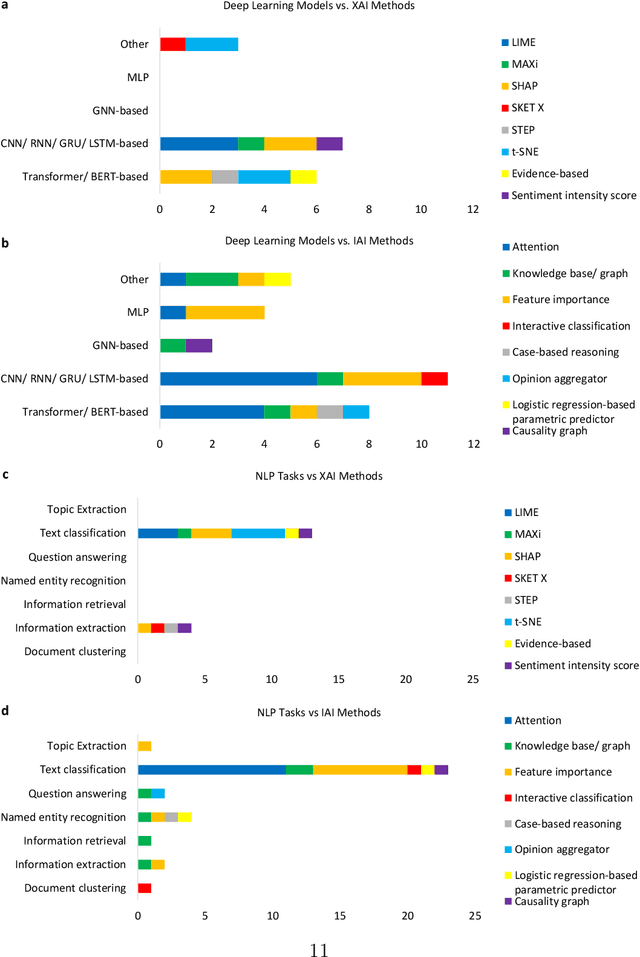

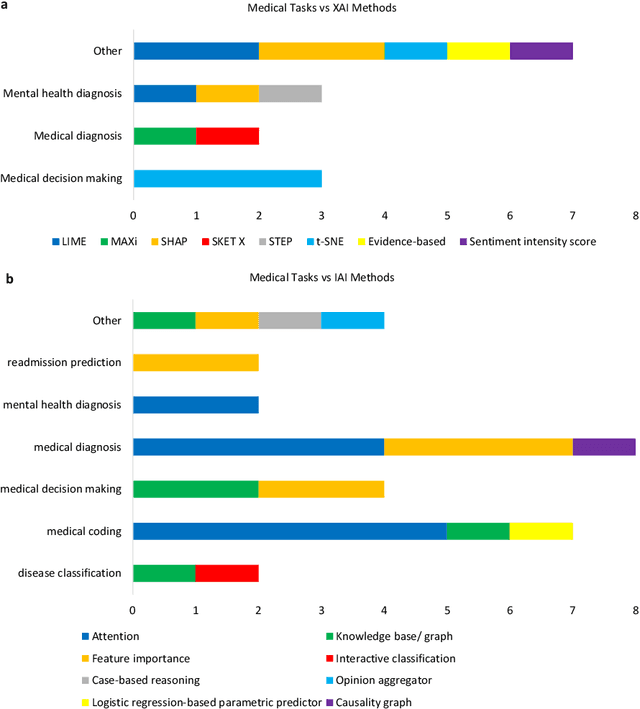

Deep learning (DL) has substantially enhanced healthcare research by addressing various natural language processing (NLP) tasks. Yet the increasing complexity of DL-based NLP methods necessitates transparent model interpretability, or at least explainability, for reliable decision-making. This work presents a thorough scoping review of explainable and interpretable DL in healthcare NLP. The term "XIAI" (eXplainable and Interpretable Artificial Intelligence) is introduced to distinguish XAI from IAI. Methods are further categorized by functionality (model-, input-, or output-based) and scope (local or global). Our analysis shows that attention mechanisms were the most dominant emerging IAI approach. Moreover, IAI is increasingly used relative to XAI. The major challenges identified are that most XIAI methods do not explore "global" modeling processes, that best practices are lacking, and that the need for systematic evaluation and benchmarks remains unmet. Important opportunities were raised, such as using "attention" to enhance multi-modal XIAI for personalized medicine and combining DL with causal reasoning. Our discussion encourages the integration of XIAI in LLMs and domain-specific smaller models. Our review can stimulate further research and benchmarks toward improving inherent IAI and engaging complex NLP in healthcare.
