Grace Tsai

Panoptic Segmentation Forecasting

Apr 08, 2021

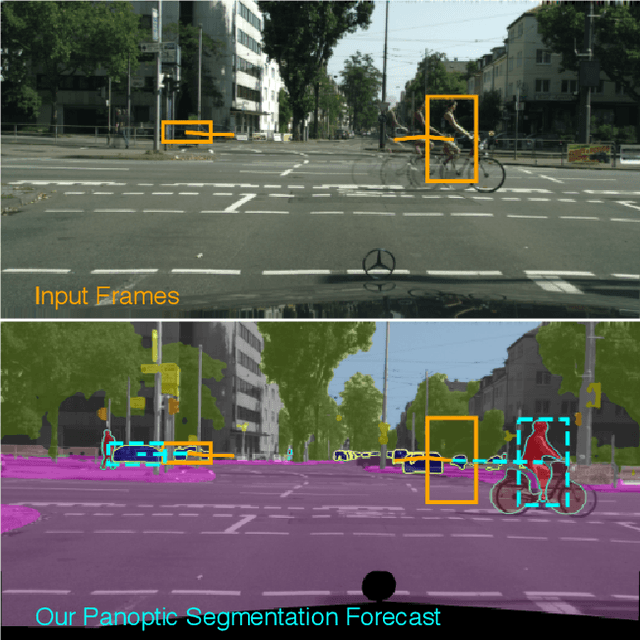

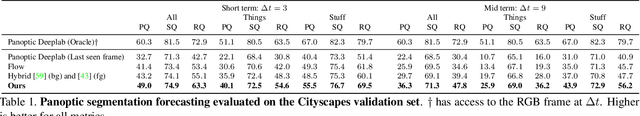

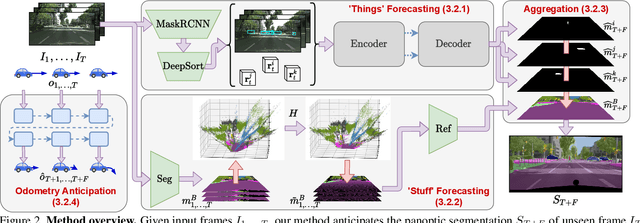

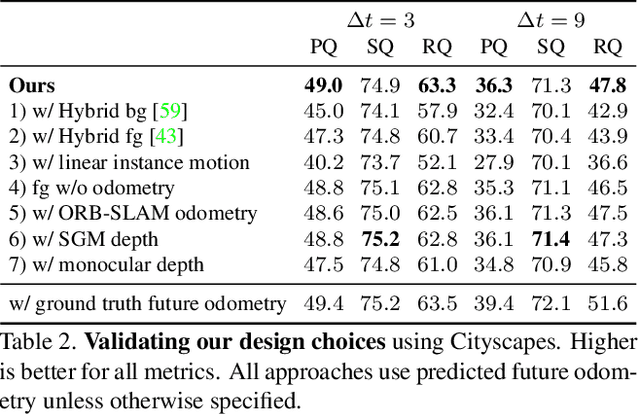

Abstract: Our goal is to forecast the near future given a set of recent observations. We think this ability to forecast, i.e., to anticipate, is integral to the success of autonomous agents, which must not only passively analyze an observation but also react to it in real time. Importantly, accurate forecasting hinges upon the chosen scene decomposition. We think that superior forecasting can be achieved by decomposing a dynamic scene into individual 'things' and background 'stuff'. Background 'stuff' largely moves because of camera motion, while foreground 'things' move because of both camera and individual object motion. Following this decomposition, we introduce panoptic segmentation forecasting. Panoptic segmentation forecasting opens up a middle ground between existing extremes, which either forecast instance trajectories or predict the appearance of future image frames. To address this task, we develop a two-component model: one component learns the dynamics of the background stuff by anticipating odometry, while the other anticipates the dynamics of detected things. We establish a leaderboard for this novel task and validate a state-of-the-art model that outperforms available baselines.
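The claim that background 'stuff' moves mainly because of camera motion can be illustrated with a small geometric sketch. It is not the model from the paper: it assumes an ideal pinhole camera, a known depth for the pixel, and a camera that translates without rotating. The function name and all parameters are hypothetical and chosen only for illustration.

```python
def reproject_background_pixel(u, v, depth, focal, cx, cy, translation):
    """Predict where a static background pixel lands after the camera moves.

    Assumptions (illustrative only): pinhole camera with focal length `focal`
    and principal point (cx, cy), known `depth` along the optical axis, and a
    pure camera translation (tx, ty, tz) expressed in the old camera frame.
    """
    # Back-project the pixel to a 3D point in the old camera frame.
    x = (u - cx) * depth / focal
    y = (v - cy) * depth / focal
    z = depth

    # A static point shifts by the opposite of the camera translation.
    tx, ty, tz = translation
    x_new, y_new, z_new = x - tx, y - ty, z - tz
    if z_new <= 0:
        raise ValueError("point is behind the camera after the motion")

    # Project the point into the new camera view.
    u_new = focal * x_new / z_new + cx
    v_new = focal * y_new / z_new + cy
    return u_new, v_new


# A pixel right of the image center drifts outward as the camera moves forward.
print(reproject_background_pixel(70, 50, depth=10.0, focal=100.0,
                                 cx=50.0, cy=50.0, translation=(0.0, 0.0, 5.0)))
```

Under these assumptions, the future position of a background pixel depends only on its depth and the camera motion, whereas a foreground 'thing' would need an additional per-object motion term.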

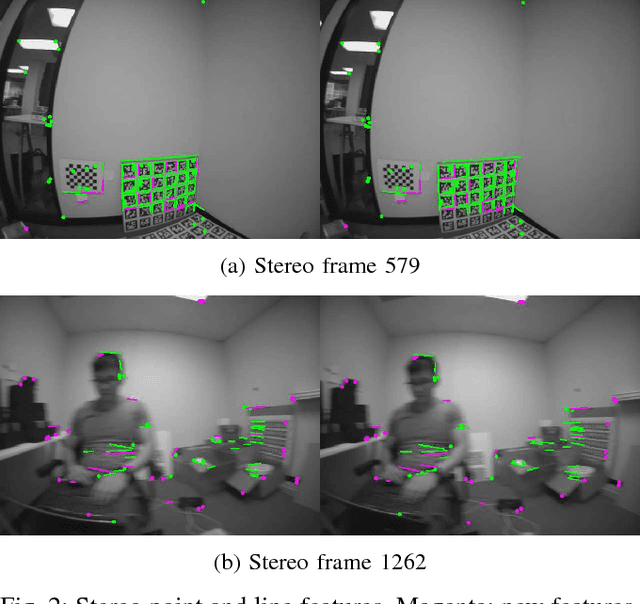

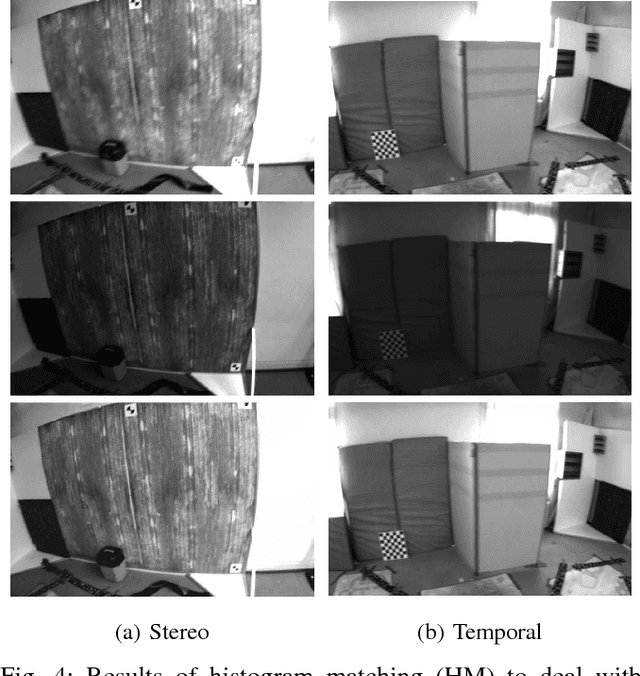

Trifo-VIO: Robust and Efficient Stereo Visual Inertial Odometry using Points and Lines

Sep 18, 2018

Abstract: In this paper, we present the Trifo Visual Inertial Odometry (Trifo-VIO), a tightly-coupled filtering-based stereo VIO system using both points and lines. Line features help improve system robustness in challenging scenarios where point features cannot be reliably detected or tracked, e.g., in low-texture environments or under lighting changes. In addition, we propose a novel lightweight filtering-based loop closing technique to reduce accumulated drift without global bundle adjustment or pose graph optimization. We formulate loop closure as EKF updates to optimally relocate the current sliding window maintained by the filter to past keyframes. We also present the Trifo Ironsides dataset, a new visual-inertial dataset featuring high-quality synchronized stereo camera and IMU data from the Ironsides sensor [3], with various motion types and textures and millimeter-accuracy ground truth. To validate the performance of the proposed system, we conduct an extensive comparison with state-of-the-art approaches (OKVIS, VINS-MONO and S-MSCKF) using both the public EuRoC dataset and the Trifo Ironsides dataset.
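To make the idea of treating a loop closure as a filter update more concrete, the following is a minimal sketch of a generic scalar EKF measurement update. It is not the Trifo-VIO formulation, which operates on a sliding window of poses; here the state is a single drifting position, and the loop-closure "measurement" is a hypothetical position implied by re-observing a past keyframe. All names and numbers are assumptions made for illustration.

```python
def ekf_scalar_update(x, p, z, r, h=lambda s: s, h_jac=lambda s: 1.0):
    """Generic scalar EKF measurement update (illustrative, not Trifo-VIO).

    x, p  : prior state estimate and its variance
    z, r  : measurement and its noise variance
    h     : measurement function, h_jac its derivative at x
    """
    H = h_jac(x)                 # linearize the measurement model at the prior
    innovation = z - h(x)        # disagreement between measurement and prediction
    s = H * p * H + r            # innovation variance
    k = p * H / s                # Kalman gain
    x_new = x + k * innovation   # corrected state
    p_new = (1.0 - k * H) * p    # reduced uncertainty after the update
    return x_new, p_new


# Drifted estimate (10.0 m, variance 4.0) corrected by a hypothetical
# loop-closure measurement (9.0 m, variance 1.0).
x_new, p_new = ekf_scalar_update(x=10.0, p=4.0, z=9.0, r=1.0)
print(x_new, p_new)
```

Because the prior variance is larger than the measurement variance in this example, the update pulls the estimate most of the way toward the loop-closure measurement and shrinks the uncertainty, which is the drift-reduction effect in miniature.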

PIRVS: An Advanced Visual-Inertial SLAM System with Flexible Sensor Fusion and Hardware Co-Design

Oct 02, 2017

Abstract: In this paper, we present the PerceptIn Robotics Vision System (PIRVS), a visual-inertial computing hardware platform with an embedded simultaneous localization and mapping (SLAM) algorithm. The PIRVS hardware is equipped with a multi-core processor, a global-shutter stereo camera, and an IMU with precise hardware synchronization. The PIRVS software features a novel and flexible sensor fusion approach that not only tightly integrates visual measurements with inertial measurements but also loosely couples with additional sensor modalities. It runs in real time on both PC and the PIRVS hardware. We perform a thorough evaluation of the proposed system using multiple public visual-inertial datasets. Experimental results demonstrate that our system achieves accuracy comparable to state-of-the-art visual-inertial algorithms on PC, while being more efficient on the PIRVS hardware.

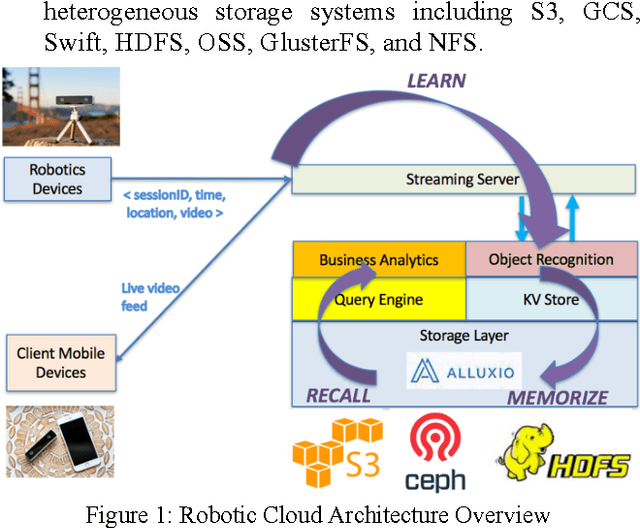

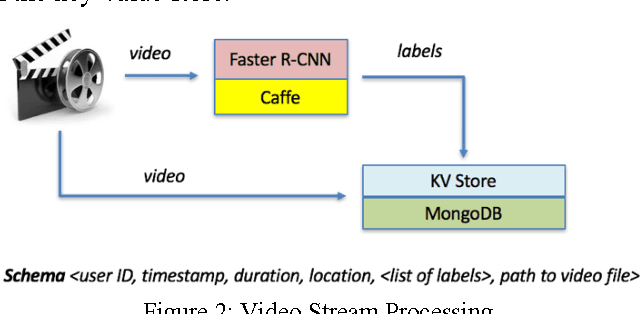

Learn-Memorize-Recall-Reduce: A Robotic Cloud Computing Paradigm

Apr 18, 2017

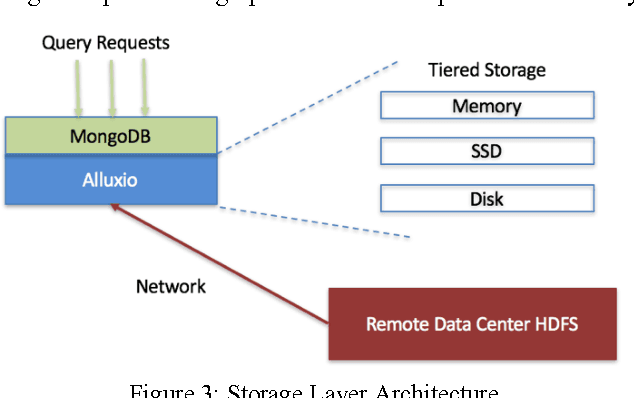

Abstract: The rise of robotic applications has led to the generation of a huge volume of unstructured data, whereas the current cloud infrastructure was designed to process limited amounts of structured data. To address this problem, we propose a learn-memorize-recall-reduce paradigm for robotic cloud computing. The learning stage converts incoming unstructured data into structured data; the memorization stage provides effective storage for the massive amount of data; the recall stage provides an efficient means to retrieve the raw data; and the reduction stage provides a means to make sense of this massive amount of unstructured data with limited computing resources.
