Girik Malik

Towards Experience-Centered AI: A Framework for Integrating Lived Experience in Design and Development

Aug 09, 2025

Abstract:Lived experiences fundamentally shape how individuals interact with AI systems, influencing perceptions of safety, trust, and usability. While prior research has focused on developing techniques to emulate human preferences, and proposed taxonomies to categorize risks (such as psychological harms and algorithmic biases), these efforts have provided limited systematic understanding of lived human experiences or actionable strategies for embedding them meaningfully into the AI development lifecycle. This work proposes a framework for meaningfully integrating lived experience into the design and evaluation of AI systems. We synthesize interdisciplinary literature across lived experience philosophy, human-centered design, and human-AI interaction, arguing that centering lived experience can lead to models that more accurately reflect the retrospective, emotional, and contextual dimensions of human cognition. Drawing from a wide body of work across psychology, education, healthcare, and social policy, we present a targeted taxonomy of lived experiences with specific applicability to AI systems. To ground our framework, we examine three application domains: (i) education, (ii) healthcare, and (iii) cultural alignment, illustrating how lived experience informs user goals, system expectations, and ethical considerations in each context. We further incorporate insights from AI system operators and human-AI partnerships to highlight challenges in responsibility allocation, mental model calibration, and long-term system adaptation. We conclude with actionable recommendations for developing experience-centered AI systems that are not only technically robust but also empathetic, context-aware, and aligned with human realities. This work offers a foundation for future research that bridges technical development with the lived experiences of those impacted by AI systems.

InsTALL: Context-aware Instructional Task Assistance with Multi-modal Large Language Models

Jan 21, 2025

Abstract:The improved competence of generative models can help build multi-modal virtual assistants that leverage modalities beyond language. By observing humans performing multi-step tasks, one can build assistants that have situational awareness of actions and tasks being performed, enabling them to tailor assistance based on this understanding. In this paper, we develop a Context-aware Instructional Task Assistant with Multi-modal Large Language Models (InsTALL) that leverages an online visual stream (e.g. a user's screen share or video recording) and responds in real time to user queries related to the task at hand. To enable useful assistance, InsTALL 1) trains a multi-modal model on task videos and paired textual data, and 2) automatically extracts a task graph from video data and leverages it at training and inference time. We show InsTALL achieves state-of-the-art performance across proposed sub-tasks considered for multimodal activity understanding -- task recognition (TR), action recognition (AR), next action prediction (AP), and plan prediction (PP) -- and outperforms existing baselines on two novel sub-tasks related to automatic error identification.

Tracking objects that change in appearance with phase synchrony

Oct 02, 2024

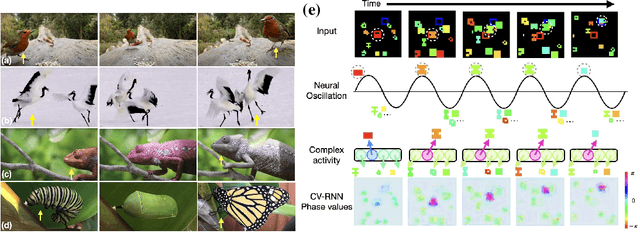

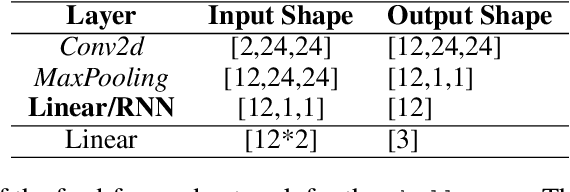

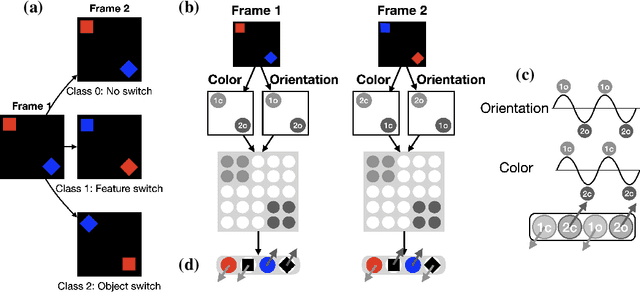

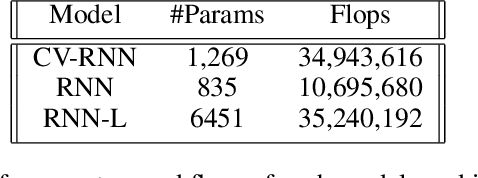

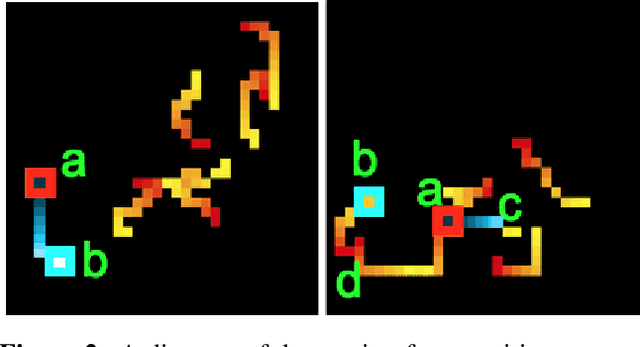

Abstract:Objects we encounter often change appearance as we interact with them. Changes in illumination (shadows), object pose, or movement of nonrigid objects can drastically alter available image features. How do biological visual systems track objects as they change? It may involve specific attentional mechanisms for reasoning about the locations of objects independently of their appearances -- a capability that prominent neuroscientific theories have associated with computing through neural synchrony. We computationally test the hypothesis that the implementation of visual attention through neural synchrony underlies the ability of biological visual systems to track objects that change in appearance over time. We first introduce a novel deep learning circuit that can learn to precisely control attention to features separately from their location in the world through neural synchrony: the complex-valued recurrent neural network (CV-RNN). Next, we compare object tracking in humans, the CV-RNN, and other deep neural networks (DNNs), using FeatureTracker: a large-scale challenge that asks observers to track objects as their locations and appearances change in precisely controlled ways. While humans effortlessly solved FeatureTracker, state-of-the-art DNNs did not. In contrast, our CV-RNN behaved similarly to humans on the challenge, providing a computational proof-of-concept for the role of phase synchronization as a neural substrate for tracking appearance-morphing objects as they move about.

Solving Royal Game of Ur Using Reinforcement Learning

Aug 23, 2022

Abstract:Reinforcement Learning has recently surfaced as a very powerful tool to solve complex problems in the domain of board games, wherein an agent is generally required to learn complex strategies and moves based on its own experiences and rewards received. While RL has outperformed existing state-of-the-art methods used for playing simple video games and popular board games, it is yet to demonstrate its capability on ancient games. Here, we solve one such problem, training our agents with different methods, namely Monte Carlo, Q-learning, and Expected Sarsa, to learn an optimal policy for playing the strategic Royal Game of Ur. The state space for our game is complex and large, but our agents show promising results at playing the game and learning important strategic moves. Although it is hard to conclude which algorithm performs better overall when trained with limited resources, Expected Sarsa shows promising results when it comes to learning fastest.
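
For readers unfamiliar with two of the methods named in this abstract, the sketch below contrasts the textbook one-step targets of Q-learning and Expected Sarsa. It is not taken from the paper: the function names, the epsilon-greedy policy, and the step-size and discount parameters are all illustrative assumptions.

```python
import numpy as np

def epsilon_greedy_probs(q_row, epsilon):
    """Action probabilities of an epsilon-greedy policy (assumed policy, not from the paper)."""
    n = len(q_row)
    probs = np.full(n, epsilon / n)                 # exploration mass spread uniformly
    probs[int(np.argmax(q_row))] += 1.0 - epsilon   # remaining mass on the greedy action
    return probs

def q_learning_target(reward, q_next, gamma):
    """Q-learning bootstraps from the maximum next-state action value."""
    return reward + gamma * float(np.max(q_next))

def expected_sarsa_target(reward, q_next, gamma, epsilon):
    """Expected Sarsa bootstraps from the policy-weighted average of next-state values."""
    probs = epsilon_greedy_probs(q_next, epsilon)
    return reward + gamma * float(np.dot(probs, q_next))

def td_update(q_value, target, alpha):
    """Move the current estimate a fraction alpha toward the target."""
    return q_value + alpha * (target - q_value)

# Hypothetical next-state action values for a two-action state.
q_next = np.array([1.0, 3.0])
ql = q_learning_target(reward=0.0, q_next=q_next, gamma=1.0)
es = expected_sarsa_target(reward=0.0, q_next=q_next, gamma=1.0, epsilon=0.5)
new_q = td_update(q_value=0.0, target=es, alpha=0.1)
```

Averaging over the policy's action probabilities, rather than sampling a single next action, removes one source of variance from the update. That is a commonly cited reason Expected Sarsa can learn quickly, though whether it explains the result reported here is not stated in the abstract.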

Robustness of Humans and Machines on Object Recognition with Extreme Image Transformations

May 09, 2022

Abstract:Recent neural network architectures have claimed to explain data from the human visual cortex. Their demonstrated performance is, however, still limited by the dependence on exploiting low-level features for solving visual tasks. This strategy limits their performance in case of out-of-distribution/adversarial data. Humans, meanwhile, learn abstract concepts and are mostly unaffected by even extreme image distortions. Humans and networks employ strikingly different strategies to solve visual tasks. To probe this, we introduce a novel set of image transforms and evaluate humans and networks on an object recognition task. We found that performance for a few common networks quickly decreases, while humans are able to recognize objects with high accuracy.

The Challenge of Appearance-Free Object Tracking with Feedforward Neural Networks

Sep 30, 2021

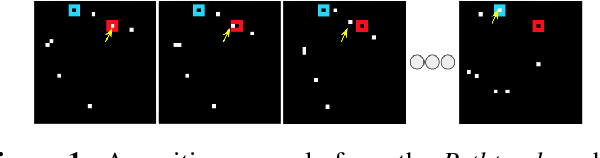

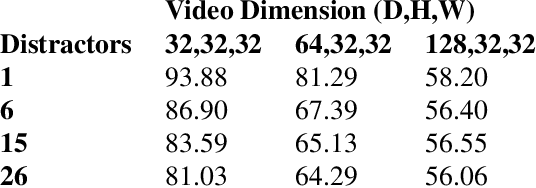

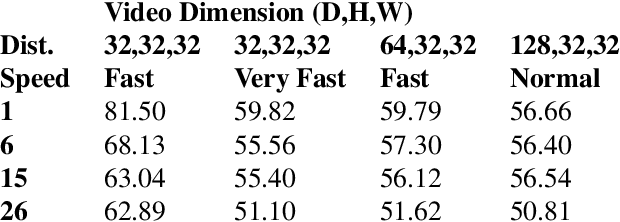

Abstract:Nearly all models for object tracking with artificial neural networks depend on appearance features extracted from a "backbone" architecture, designed for object recognition. Indeed, significant progress on object tracking has been spurred by introducing backbones that are better able to discriminate objects by their appearance. However, extensive neurophysiology and psychophysics evidence suggests that biological visual systems track objects using both appearance and motion features. Here, we introduce $\textit{PathTracker}$, a visual challenge inspired by cognitive psychology, which tests the ability of observers to learn to track objects solely by their motion. We find that standard 3D-convolutional deep network models struggle to solve this task when clutter is introduced into the generated scenes, or when objects travel long distances. This challenge reveals that tracing the path of object motion is a blind spot of feedforward neural networks. We expect that strategies for appearance-free object tracking from biological vision can inspire solutions to these failures of deep neural networks.

Tracking Without Re-recognition in Humans and Machines

Jun 03, 2021

Abstract:Imagine trying to track one particular fruit fly in a swarm of hundreds. Higher biological visual systems have evolved to track moving objects by relying on both appearance and motion features. We investigate if state-of-the-art deep neural networks for visual tracking are capable of the same. For this, we introduce PathTracker, a synthetic visual challenge that asks human observers and machines to track a target object in the midst of identical-looking "distractor" objects. While humans effortlessly learn PathTracker and generalize to systematic variations in task design, state-of-the-art deep networks struggle. To address this limitation, we identify and model circuit mechanisms in biological brains that are implicated in tracking objects based on motion cues. When instantiated as a recurrent network, our circuit model learns to solve PathTracker with a robust visual strategy that rivals human performance and explains a significant proportion of their decision-making on the challenge. We also show that the success of this circuit model extends to object tracking in natural videos. Adding it to a transformer-based architecture for object tracking builds tolerance to visual nuisances that affect object appearance, resulting in a new state-of-the-art performance on the large-scale TrackingNet object tracking challenge. Our work highlights the importance of building artificial vision models that can help us better understand human vision and improve computer vision.

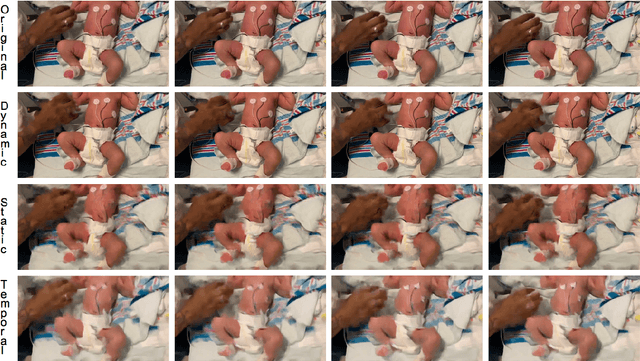

Little Motion, Big Results: Using Motion Magnification to Reveal Subtle Tremors in Infants

Aug 01, 2020

Abstract:Detecting tremors is challenging for both humans and machines. Infants exposed to opioids during pregnancy often show signs and symptoms of withdrawal after birth, which are easy to miss with the human eye. The constellation of clinical features, termed Neonatal Abstinence Syndrome (NAS), includes tremors, seizures, irritability, etc. The current standard of care uses the Finnegan Neonatal Abstinence Syndrome Scoring System (FNASS), based on subjective evaluations. Monitoring with FNASS requires highly skilled nursing staff, making continuous monitoring difficult. In this paper we propose an automated tremor detection system using amplified motion signals. We demonstrate its applicability on bedside video of an infant exhibiting signs of NAS. Further, we test different modes of deep convolutional network-based motion magnification, and identify that the dynamic mode works best in the clinical setting, being invariant to common orientational changes. We propose a strategy for discharge and follow-up for NAS patients, using motion magnification to supplement the existing protocols. Overall, our study suggests methods for bridging the gap in current practices, training, and resource utilization.
