Giorgos Giannopoulos

GLANCE: Global Actions in a Nutshell for Counterfactual Explainability

May 29, 2024

Abstract: Counterfactual explanations have emerged as an important tool to understand, debug, and audit complex machine learning models. To offer global counterfactual explainability, state-of-the-art methods construct summaries of local explanations, offering a trade-off among conciseness, counterfactual effectiveness, and counterfactual cost or burden imposed on instances. In this work, we provide a concise formulation of the problem of identifying global counterfactuals and establish principled criteria for comparing solutions, drawing inspiration from Pareto dominance. We introduce innovative algorithms designed to address the challenge of finding global counterfactuals for either the entire input space or specific partitions, employing clustering and decision trees as key components. Additionally, we conduct a comprehensive experimental evaluation, considering various instances of the problem and comparing our proposed algorithms with state-of-the-art methods. The results highlight the consistent capability of our algorithms to generate meaningful and interpretable global counterfactual explanations.
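
The comparison criterion mentioned in the abstract can be illustrated with textbook Pareto dominance over the three quantities it names (conciseness, effectiveness, cost). This is a minimal sketch, not the paper's exact criteria: the `Summary` type, its field names, and the example numbers are hypothetical.

```python
from typing import List, NamedTuple


class Summary(NamedTuple):
    """Hypothetical description of one global counterfactual summary."""
    size: int             # number of global actions (lower = more concise)
    effectiveness: float  # fraction of instances achieving recourse (higher = better)
    cost: float           # mean cost imposed on instances (lower = better)


def dominates(a: Summary, b: Summary) -> bool:
    """True if `a` is no worse than `b` on every criterion and strictly better on one."""
    no_worse = (a.size <= b.size
                and a.effectiveness >= b.effectiveness
                and a.cost <= b.cost)
    strictly_better = (a.size < b.size
                       or a.effectiveness > b.effectiveness
                       or a.cost < b.cost)
    return no_worse and strictly_better


def pareto_front(candidates: List[Summary]) -> List[Summary]:
    """Keep only the candidates that no other candidate dominates."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]


# Made-up candidates, for illustration only.
candidates = [
    Summary(size=3, effectiveness=0.90, cost=2.0),
    Summary(size=3, effectiveness=0.80, cost=2.5),  # dominated by the first
    Summary(size=5, effectiveness=0.95, cost=1.5),  # less concise, but cheaper and more effective
]
front = pareto_front(candidates)
```

Under these assumptions the second candidate is discarded, while the first and third remain because each is better on at least one criterion.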

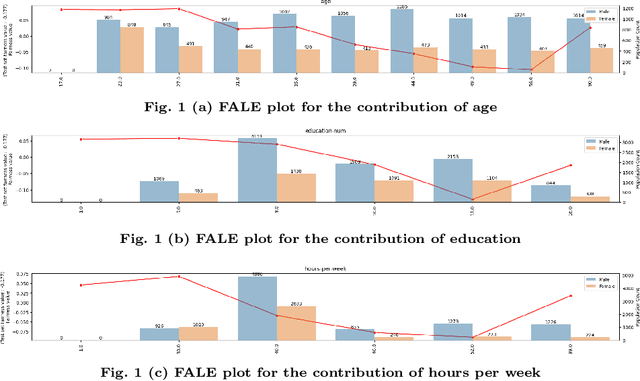

FALE: Fairness-Aware ALE Plots for Auditing Bias in Subgroups

Apr 29, 2024

Abstract: Fairness is steadily becoming a crucial requirement of Machine Learning (ML) systems. A particularly important notion is subgroup fairness, i.e., fairness in subgroups of individuals that are defined by more than one attribute. Identifying bias in subgroups can be both computationally challenging and problematic with respect to the comprehensibility and intuitiveness of the findings for end users. In this work we focus on the latter aspects; we propose an explainability method tailored to identifying potential bias in subgroups and visualizing the findings in a user-friendly manner for end users. In particular, we extend the ALE plots explainability method, proposing FALE (Fairness-aware Accumulated Local Effects) plots, a method for measuring the change in fairness for an affected population corresponding to different values of a feature (attribute). We envision FALE to function as an efficient, user-friendly, comprehensible and reliable first-stage tool for identifying subgroups with potential bias issues.

Next day fire prediction via semantic segmentation

Mar 20, 2024

Abstract: In this paper we present a deep learning pipeline for next day fire prediction. The next day fire prediction task consists in learning models that receive as input the available information for an area up until a certain day, in order to predict the occurrence of fire for the next day. Starting from our previous problem formulation as a binary classification task on instances (daily snapshots of each area) represented by tabular feature vectors, we reformulate the problem as a semantic segmentation task on images; there, each pixel corresponds to a daily snapshot of an area, while its channels represent the formerly tabular training features. We demonstrate that this problem formulation, built within a thorough pipeline, achieves state-of-the-art results.
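
The reformulation from tabular instances to images can be sketched as a rasterization step: each daily snapshot is written into the pixel of its area, with the feature vector as the pixel's channels and the fire label as the segmentation target. The grid coordinates, the `rasterize` helper, and the ignore label below are assumptions for illustration; the abstract does not say how areas map to pixels.

```python
from typing import List, Sequence, Tuple

# One snapshot: (grid row, grid column, feature vector, next-day fire label).
Snapshot = Tuple[int, int, Sequence[float], int]


def rasterize(snapshots: List[Snapshot], height: int, width: int,
              n_features: int, ignore_label: int = -1):
    """Turn one day's tabular snapshots into an image and a label mask.

    image[r][c] holds the feature vector (channels) of the area at (r, c);
    mask[r][c] holds its label. Cells with no snapshot keep zero features
    and `ignore_label`, so a loss function could skip them.
    """
    image = [[[0.0] * n_features for _ in range(width)] for _ in range(height)]
    mask = [[ignore_label] * width for _ in range(height)]
    for row, col, features, label in snapshots:
        if len(features) != n_features:
            raise ValueError("feature vector length does not match n_features")
        image[row][col] = list(features)
        mask[row][col] = label
    return image, mask


# Two areas on a 2x2 grid, each with two made-up features.
day = [(0, 0, [0.1, 0.2], 0), (1, 1, [0.9, 0.8], 1)]
image, mask = rasterize(day, height=2, width=2, n_features=2)
```

A segmentation model would then take `image` as input and be trained against `mask`, predicting all areas of the grid in one pass rather than one row at a time.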

Fairness Aware Counterfactuals for Subgroups

Jun 26, 2023

Abstract: In this work, we present Fairness Aware Counterfactuals for Subgroups (FACTS), a framework for auditing subgroup fairness through counterfactual explanations. We start with revisiting (and generalizing) existing notions and introducing new, more refined notions of subgroup fairness. We aim to (a) formulate different aspects of the difficulty of individuals in certain subgroups to achieve recourse, i.e. receive the desired outcome, either at the micro level, considering members of the subgroup individually, or at the macro level, considering the subgroup as a whole, and (b) introduce notions of subgroup fairness that are robust, if not totally oblivious, to the cost of achieving recourse. We accompany these notions with an efficient, model-agnostic, highly parameterizable, and explainable framework for evaluating subgroup fairness. We demonstrate the advantages, the wide applicability, and the efficiency of our approach through a thorough experimental evaluation of different benchmark datasets.

Auditing for Spatial Fairness

Feb 23, 2023

Abstract: This paper studies algorithmic fairness when the protected attribute is location. To handle protected attributes that are continuous, such as age or income, the standard approach is to discretize the domain into predefined groups, and compare algorithmic outcomes across groups. However, applying this idea to location raises concerns of gerrymandering and may introduce statistical bias. Prior work addresses these concerns but only for regularly spaced locations, while raising other issues, most notably its inability to discern regions that are likely to exhibit spatial unfairness. Similar to established notions of algorithmic fairness, we define spatial fairness as the statistical independence of outcomes from location. This translates into requiring that for each region of space, the distribution of outcomes is identical inside and outside the region. To allow for localized discrepancies in the distribution of outcomes, we compare how well two competing hypotheses explain the observed outcomes. The null hypothesis assumes spatial fairness, while the alternative allows different distributions inside and outside regions. Their goodness of fit is then assessed by a likelihood ratio test. If there is no significant difference in how well the two hypotheses explain the observed outcomes, we conclude that the algorithm is spatially fair.
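
For a single, fixed region and binary outcomes, the two competing hypotheses can be compared with a standard likelihood ratio test. The sketch below is only an illustration under those assumptions: the function names are hypothetical, the p-value uses the usual chi-square approximation with one degree of freedom, and scanning many candidate regions would additionally require a multiple-testing treatment that is not shown here.

```python
import math


def bernoulli_loglik(positives: int, n: int, rate: float) -> float:
    """Log-likelihood of `positives` successes in `n` Bernoulli trials."""
    loglik = 0.0
    if positives > 0:
        loglik += positives * math.log(rate)
    if n - positives > 0:
        loglik += (n - positives) * math.log(1.0 - rate)
    return loglik


def likelihood_ratio_test(pos_in: int, n_in: int, pos_out: int, n_out: int):
    """Compare one shared positive rate (null) with separate rates inside
    and outside the region (alternative). Returns (statistic, p_value)."""
    # Null hypothesis: the same positive rate everywhere (spatial fairness).
    rate_all = (pos_in + pos_out) / (n_in + n_out)
    ll_null = (bernoulli_loglik(pos_in, n_in, rate_all)
               + bernoulli_loglik(pos_out, n_out, rate_all))
    # Alternative: the rate inside the region may differ from the rate outside.
    ll_alt = (bernoulli_loglik(pos_in, n_in, pos_in / n_in)
              + bernoulli_loglik(pos_out, n_out, pos_out / n_out))
    statistic = max(0.0, 2.0 * (ll_alt - ll_null))
    # Survival function of a chi-square variable with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


# Made-up counts: 90 of 100 positive outcomes inside, 500 of 1000 outside.
stat_diff, p_diff = likelihood_ratio_test(90, 100, 500, 1000)
# Identical rates inside and outside: the two hypotheses fit equally well.
stat_same, p_same = likelihood_ratio_test(50, 100, 500, 1000)
```

A small p-value indicates that allowing different distributions inside and outside the region explains the outcomes significantly better, which is evidence against spatial fairness for that region.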

Data-driven soiling detection in PV modules

Jan 30, 2023

Abstract: Soiling is the accumulation of dirt on solar panels, which leads to a decreasing trend in solar energy yield and may be the cause of vast revenue losses. The effect of soiling can be reduced by washing the panels, which is, however, a procedure of non-negligible cost. Moreover, soiling monitoring systems are often unreliable or very costly. We study the problem of estimating the soiling ratio in photovoltaic (PV) modules, i.e., the ratio of the real power output to the power output that would be produced if solar panels were clean. A key advantage of our algorithms is that they estimate soiling without needing to train on labelled data, i.e., periods of explicitly monitoring the soiling in each park, and without relying on generic analytical formulas which do not take into account the peculiarities of each installation. We consider as input a time series comprising a minimum set of measurements that are available to most PV park operators. Our experimental evaluation shows that we significantly outperform current state-of-the-art methods for estimating soiling ratio.
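
The soiling ratio defined above is a simple quotient, shown here as a worked example. This only illustrates the definition: the function name and the numbers are hypothetical, and the clean-panel power is taken as a given input, whereas estimating it without labelled data is the actual problem the paper addresses.

```python
def soiling_ratio(actual_power: float, clean_power: float) -> float:
    """Ratio of the measured power output to the output expected from clean panels."""
    if clean_power <= 0:
        raise ValueError("clean_power must be positive")
    return actual_power / clean_power


# Made-up reading: 92 kW measured where clean panels would produce 100 kW.
ratio = soiling_ratio(92.0, 100.0)
relative_loss = 1.0 - ratio  # share of output lost to soiling
```

A ratio of 1 means no soiling loss; here the ratio is 0.92, i.e. roughly 8% of the output is lost.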

Simplifying Impact Prediction for Scientific Articles

Dec 30, 2020

Abstract: Estimating the expected impact of an article is valuable for various applications (e.g., article/cooperator recommendation). Most existing approaches attempt to predict the exact number of citations each article will receive in the near future; however, this is a difficult regression analysis problem. Moreover, most approaches rely on the existence of rich metadata for each article, a requirement that cannot be adequately fulfilled for a large number of them. In this work, we take advantage of the fact that solving a simpler machine learning problem, that of classifying articles based on their expected impact, is adequate for many real-world applications, and we propose a simplified model that can be trained using minimal article metadata. Finally, we examine various configurations of this model and evaluate their effectiveness in solving the aforementioned classification problem.
