Frederico Santos de Oliveira

FreeSVC: Towards Zero-shot Multilingual Singing Voice Conversion

Jan 09, 2025

Abstract:This work presents FreeSVC, a promising multilingual singing voice conversion approach that leverages an enhanced VITS model with Speaker-invariant Clustering (SPIN) for better content representation and the State-of-the-Art (SOTA) speaker encoder ECAPA2. FreeSVC incorporates trainable language embeddings to handle multiple languages and employs an advanced speaker encoder to disentangle speaker characteristics from linguistic content. Designed for zero-shot learning, FreeSVC enables cross-lingual singing voice conversion without extensive language-specific training. We demonstrate that a multilingual content extractor is crucial for optimal cross-language conversion. Our source code and models are publicly available.

No Saved Kaleidosope: an 100% Jitted Neural Network Coding Language with Pythonic Syntax

Sep 17, 2024

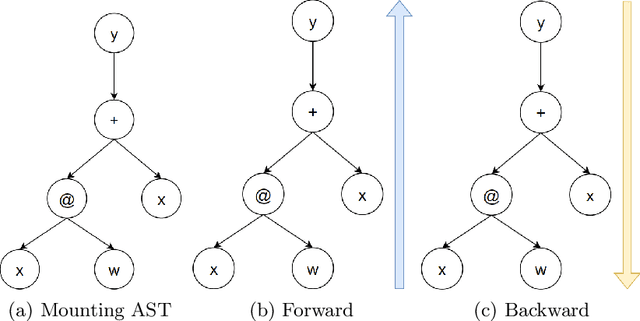

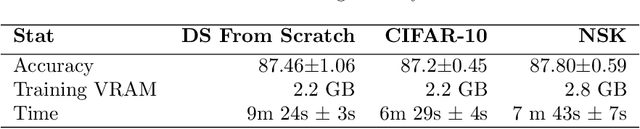

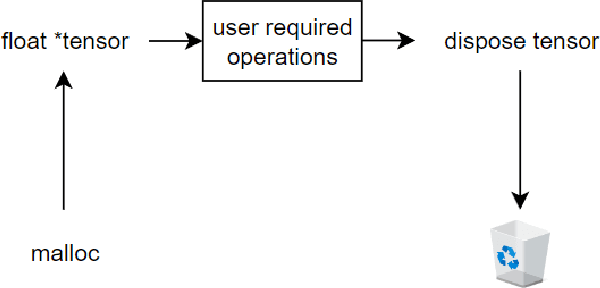

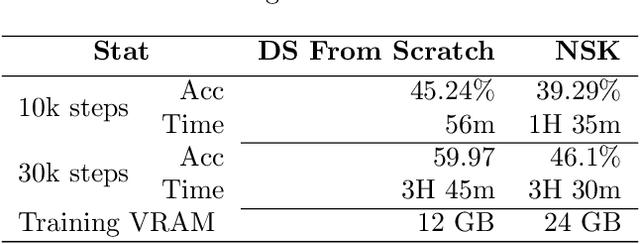

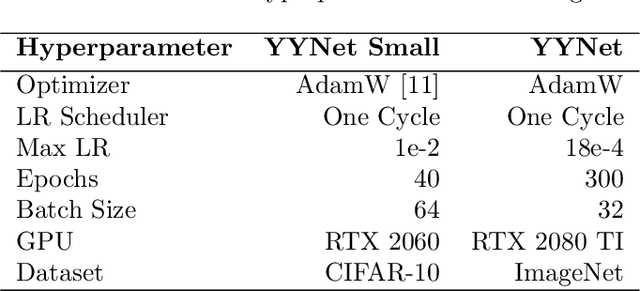

Abstract:We developed a jitted compiler for training Artificial Neural Networks using C++, LLVM and CUDA. It features object-oriented characteristics, strong typing, parallel workers for data pre-processing, pythonic syntax for expressions, PyTorch-like model declaration and Automatic Differentiation. We implement caching and pooling mechanisms to manage VRAM, and use cuBLAS for high-performance matrix multiplication and cuDNN for convolutional layers. In our experiments with Residual Convolutional Neural Networks on ImageNet, we reach similar speed but degraded performance. Also, the GRU network experiments show similar accuracy, but our compiler has degraded speed in that task. However, our compiler demonstrates promising results on the CIFAR-10 benchmark, in which we reach the same performance and about the same speed as PyTorch. We make the code publicly available at: https://github.com/NoSavedDATA/NoSavedKaleidoscope

Yin Yang Convolutional Nets: Image Manifold Extraction by the Analysis of Opposites

Oct 24, 2023

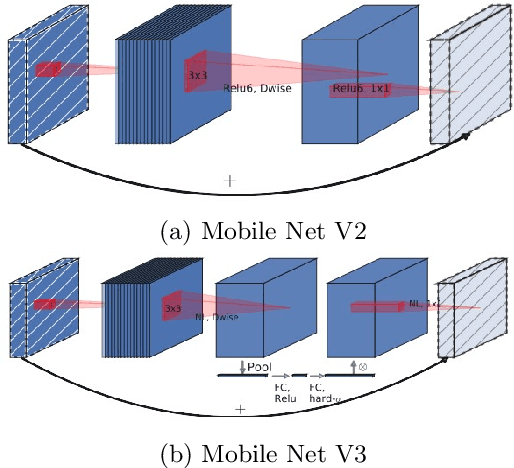

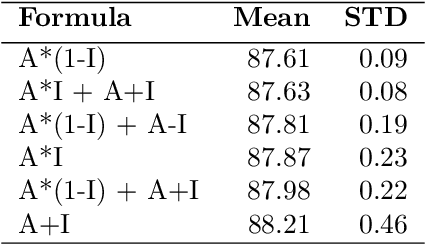

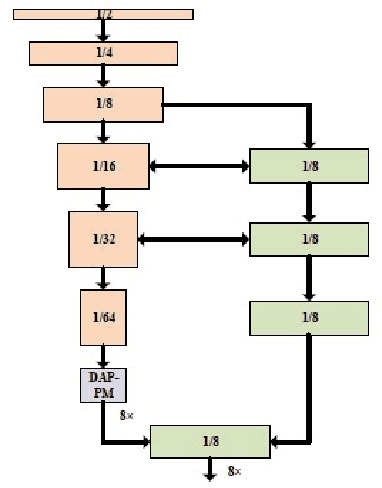

Abstract:Computer vision in general has seen several advances, such as training optimizations and new architectures (pure attention, efficient blocks, vision-language models, and generative models, among others). These have improved performance in several tasks, such as classification. However, the majority of these models focus on modifications that move away from realistic neuroscientific approaches related to the brain. In this work, we adopt a more bio-inspired approach and present the Yin Yang Convolutional Network, an architecture that extracts the visual manifold; its blocks are intended to separate the analysis of colors and forms at its initial layers, simulating the occipital lobe's operations. Our results show that our architecture provides State-of-the-Art efficiency among low-parameter architectures on the CIFAR-10 dataset. Our first model reached 93.32% test accuracy, 0.8% more than the previous SOTA in this category, while having 150k fewer parameters (726k in total). Our second model uses 52k parameters, losing only 3.86% test accuracy. We also performed an analysis on ImageNet, where we reached 66.49% validation accuracy with 1.6M parameters. We make the code publicly available at: https://github.com/NoSavedDATA/YinYang_CNN.

CORAA: a large corpus of spontaneous and prepared speech manually validated for speech recognition in Brazilian Portuguese

Oct 14, 2021

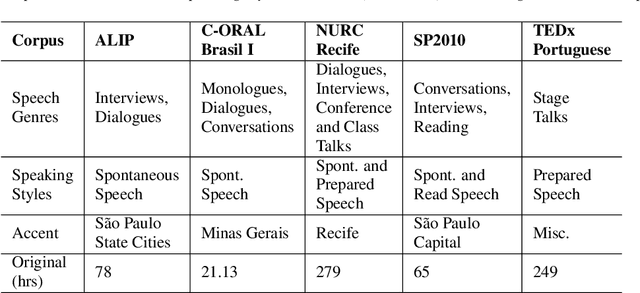

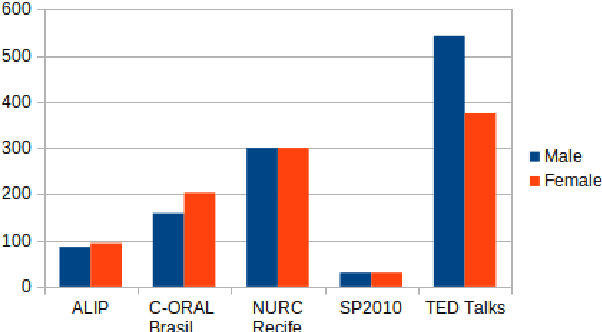

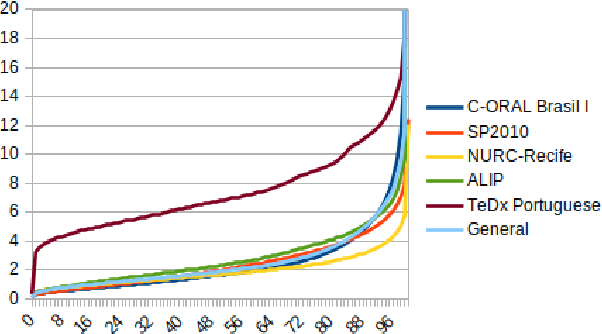

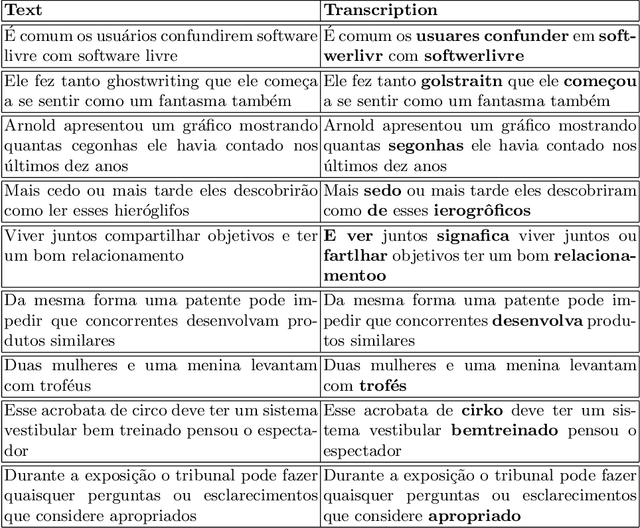

Abstract:Automatic Speech Recognition (ASR) is a complex and challenging task. In recent years, there have been significant advances in the area. In particular, for the Brazilian Portuguese (BP) language, there were about 376 hours publicly available for the ASR task until the second half of 2020. With the release of new datasets in early 2021, this number increased to 574 hours. The existing resources, however, are composed of audios containing only read and prepared speech. There is a lack of datasets including spontaneous speech, which is essential in different ASR applications. This paper presents CORAA (Corpus of Annotated Audios) v1, with 291 hours, a publicly available dataset for ASR in BP containing validated pairs (audio-transcription). CORAA also contains European Portuguese audios (4.69 hours). We also present two public ASR models based on Wav2Vec 2.0 XLSR-53 and fine-tuned over CORAA. Our best model achieved a Word Error Rate of 27.35% on the CORAA test set and 16.01% on the Common Voice test set. When measuring the Character Error Rate, we obtained 14.26% and 5.45% for CORAA and Common Voice, respectively. The CORAA corpora were assembled both to improve ASR models in BP with phenomena from spontaneous speech and to motivate young researchers to start their studies on ASR for Portuguese. All the corpora are publicly available at https://github.com/nilc-nlp/CORAA under the CC BY-NC-ND 4.0 license.
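The abstract above reports results as Word Error Rate (WER) and Character Error Rate (CER). As a rough illustration of how these metrics are conventionally defined (edit distance divided by reference length), here is a minimal sketch. The function names and the example sentences are hypothetical and are not taken from the CORAA evaluation code; the sketch also assumes no text normalization and counts spaces as characters for CER, which may differ from the setup the authors used.

```python
from typing import Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance between two token sequences."""
    # prev[j] holds the distance between the ref prefix seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion of a reference token
                curr[j - 1] + 1,     # insertion of a hypothesis token
                prev[j - 1] + cost,  # substitution or match
            ))
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word Error Rate: word-level edit distance over reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character Error Rate: character-level edit distance over reference length.

    Assumption: spaces are counted as characters.
    """
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(list(reference), list(hypothesis)) / len(reference)


# Hypothetical example: the hypothesis drops one of five reference words.
reference = "o gato subiu no telhado"
hypothesis = "o gato subiu telhado"
print(f"WER: {wer(reference, hypothesis):.2%}")
print(f"CER: {cer(reference, hypothesis):.2%}")
```

Because both metrics divide by the reference length, a single dropped short word weighs more heavily in WER than in CER, which is consistent with CER figures generally being lower than WER figures for the same model.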

Brazilian Portuguese Speech Recognition Using Wav2vec 2.0

Jul 23, 2021

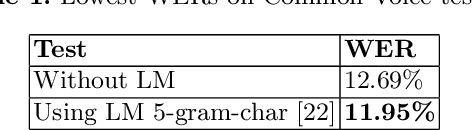

Abstract:Deep learning techniques have been shown to be efficient in various tasks, especially in the development of speech recognition systems, that is, systems that aim to transcribe a spoken sentence into a sequence of words. Despite the progress in the area, speech recognition can still be considered difficult, especially for languages lacking available data, such as Brazilian Portuguese. In this sense, this work presents the development of a public Automatic Speech Recognition system using only openly available audio data, obtained by fine-tuning the Wav2vec 2.0 XLSR-53 model, pre-trained on many languages, over Brazilian Portuguese data. The final model presents a Word Error Rate of 11.95% (Common Voice Dataset). This corresponds to 13% less than the best open Automatic Speech Recognition model for Brazilian Portuguese available, to the best of our knowledge, which is a promising result for the language. In general, this work validates the use of self-supervised learning techniques, in particular the Wav2vec 2.0 architecture, in the development of robust systems, even for languages with little available data.

SC-GlowTTS: an Efficient Zero-Shot Multi-Speaker Text-To-Speech Model

Apr 02, 2021

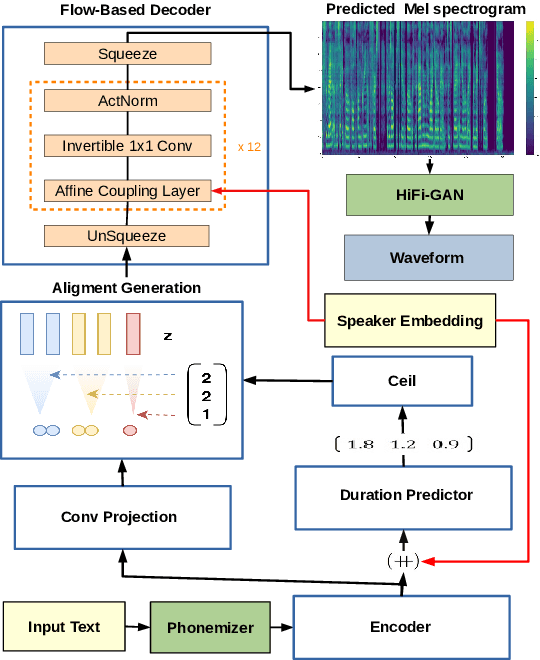

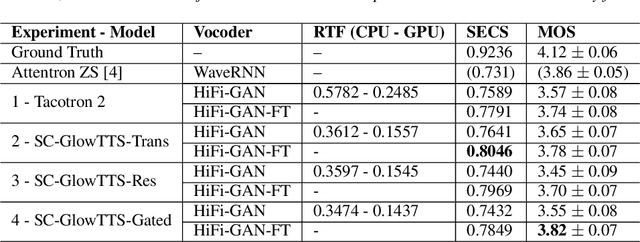

Abstract:In this paper, we propose SC-GlowTTS: an efficient zero-shot multi-speaker text-to-speech model that improves similarity for speakers unseen in training. We propose a speaker-conditional architecture that explores a flow-based decoder that works in a zero-shot scenario. As text encoders, we explore a dilated residual convolutional-based encoder, gated convolutional-based encoder, and transformer-based encoder. Additionally, we have shown that adjusting a GAN-based vocoder for the spectrograms predicted by the TTS model on the training dataset can significantly improve the similarity and speech quality for new speakers. Our model is able to converge in training, using only 11 speakers, reaching state-of-the-art results for similarity with new speakers, as well as high speech quality.

End-To-End Speech Synthesis Applied to Brazilian Portuguese

May 11, 2020

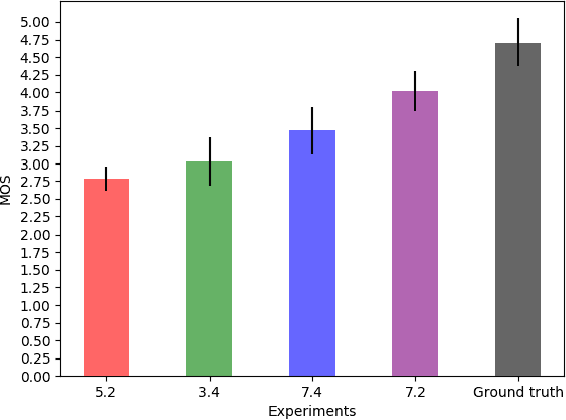

Abstract:Voice synthesis systems are popular in different applications, such as personal assistants, GPS applications, screen readers and accessibility tools. Voice provides a natural way for human-computer interaction. However, not all languages are at the same level in terms of resources and systems for voice synthesis. This work consists of the creation of publicly available resources for the Brazilian Portuguese language in the form of a dataset and deep learning models for end-to-end voice synthesis. The dataset has 10.5 hours from a single speaker. We investigated three different architectures to perform end-to-end speech synthesis: Tacotron 1, DCTTS and Mozilla TTS. We also analysed the performance of models according to different vocoders (RTISI-LA, WaveRNN and Universal WaveRNN), phonetic transcription usage, transfer learning (from English) and denoising. In the proposed scenario, a model based on Mozilla TTS and the RTISI-LA vocoder presented the best performance, achieving a 4.03 MOS value. We also verified that transfer learning, phonetic transcriptions and denoising are useful to train the models over the presented dataset. The obtained results are comparable to related works covering English, even using a smaller dataset.
