Francesco Rundo

SalFoM: Dynamic Saliency Prediction with Video Foundation Models

Apr 03, 2024

Abstract: Recent advancements in video saliency prediction (VSP) have shown promising performance compared to the human visual system, whose emulation is the primary goal of VSP. However, current state-of-the-art models employ spatio-temporal transformers trained on limited amounts of data, hindering generalizability and adaptation to downstream tasks. The benefits of vision foundation models present a potential solution to improve the VSP process. However, adapting image foundation models to the video domain presents significant challenges in modeling scene dynamics and capturing temporal information. To address these challenges, and as the first initiative to design a VSP model based on video foundation models, we introduce SalFoM, a novel encoder-decoder video transformer architecture. Our model employs UnMasked Teacher (UMT) as a feature extractor and presents a heterogeneous decoder that features a locality-aware spatio-temporal transformer and integrates local and global spatio-temporal information from various perspectives to produce the final saliency map. Our qualitative and quantitative experiments on the challenging VSP benchmark datasets of DHF1K, Hollywood-2 and UCF-Sports demonstrate the superiority of our proposed model in comparison with the state-of-the-art methods.

Deep Learning Algorithm for Advanced Level-3 Inverse-Modeling of Silicon-Carbide Power MOSFET Devices

Oct 16, 2023

Abstract: Inverse modelling with deep learning algorithms involves training a deep architecture to predict a device's parameters from its static behaviour. Inverse device modelling is suitable for reconstructing the drifted physical parameters of devices that have degraded over time, or for retrieving their physical configuration. Many variables can influence the performance of an inverse modelling method. In this work the authors propose a deep learning method trained to retrieve the physical parameters of the Level-3 model of a Power Silicon-Carbide MOSFET (SiC Power MOS). SiC devices are used in applications where classical silicon devices fail because of high temperature or high switching demands. The key application of SiC power devices is in the automotive field (i.e., electric vehicles). Due to physiological degradation or a high-stress environment, the SiC Power MOS shows a significant drift of its physical parameters, which can be monitored by using inverse modelling. The aim of this work is to provide a possible deep learning-based solution for retrieving the physical parameters of the SiC Power MOSFET. Preliminary results based on the retrieval of the channel length of the device are reported. The channel length of a power MOSFET is a key parameter involved in the static and dynamic behaviour of the device. The experimental results reported in this work confirm the effectiveness of a multi-layer perceptron designed to retrieve this parameter.

Car-Driver Drowsiness Assessment through 1D Temporal Convolutional Networks

Jul 27, 2023

Abstract: Recently, the scientific progress of Advanced Driver Assistance System (ADAS) solutions has played a key role in enhancing the overall safety of driving. ADAS technology enables active control of vehicles to prevent potentially risky situations. An important aspect that researchers have focused on is the analysis of the driver's attention level, as recent reports confirmed a rising number of accidents caused by drowsiness or lack of attentiveness. To address this issue, various studies have suggested monitoring the driver's physiological state, as there exists a well-established connection between the Autonomic Nervous System (ANS) and the level of attention. For our study, we designed an innovative bio-sensor comprising near-infrared LED emitters and photo-detectors, specifically a Silicon PhotoMultiplier device. This allowed us to assess the driver's physiological status by analyzing the associated PhotoPlethysmography (PPG) signal. Furthermore, we developed an embedded time-domain hyper-filtering technique in conjunction with a 1D Temporal Convolutional architecture that embeds a progressive dilation setup. This integrated system enables near real-time classification of driver drowsiness, yielding accuracy levels of approximately 96%.
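The "progressive dilation setup" mentioned in the abstract above can be illustrated with a minimal sketch. The abstract does not give the kernel size, the dilation schedule, or the layer count, so the values used here (kernel size 3, dilations 1, 2, 4, 8) and the helper names `dilated_conv1d`, `receptive_field`, and `tcn_stack` are assumptions chosen for illustration, not the authors' architecture.

```python
def dilated_conv1d(signal, kernel, dilation):
    """Causal 1D convolution: each output sees the current sample and
    earlier samples spaced `dilation` steps apart (zeros before t=0)."""
    out = []
    for t in range(len(signal)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = t - j * dilation
            if idx >= 0:
                acc += w * signal[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size, dilations):
    """Number of input samples that can influence one output sample."""
    return 1 + (kernel_size - 1) * sum(dilations)


def tcn_stack(signal, kernel, dilations):
    """Stack of dilated causal convolutions, each followed by a ReLU.
    The same kernel is reused in every layer only to keep the sketch short."""
    x = list(signal)
    for d in dilations:
        x = [max(0.0, v) for v in dilated_conv1d(x, kernel, d)]
    return x


# Hypothetical schedule: the dilation doubles at every layer.
DILATIONS = [1, 2, 4, 8]
print(receptive_field(3, DILATIONS))
```

Doubling the dilation at each layer makes the receptive field grow roughly exponentially with depth, which is why such stacks can cover long stretches of a PPG time series with few layers.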

Non-Linear Self Augmentation Deep Pipeline for Cancer Treatment outcome Prediction

Jul 26, 2023

Abstract: Immunotherapy is emerging as a promising approach for treating cancer. Encouraging findings have validated the efficacy of immunotherapy medications in addressing tumors, resulting in prolonged survival rates and notable reductions in toxicity compared to conventional chemotherapy methods. However, the pool of eligible patients for immunotherapy remains relatively small, indicating a lack of comprehensive understanding of the physiological mechanisms responsible for a favorable treatment response in certain individuals while others experience limited benefits. To tackle this issue, the authors present a strategy that combines a non-linear cellular architecture with a deep downstream classifier. This approach aims to select and enhance 2D features extracted from chest-abdomen CT images, thereby improving the prediction of treatment outcomes. The proposed pipeline has been designed to integrate with an advanced embedded Point of Care system. In this context, the authors present a case study focused on Metastatic Urothelial Carcinoma (mUC), a particularly aggressive form of cancer. Performance evaluation of the proposed approach underscores its effectiveness, with an overall accuracy of approximately 93%.

Visual Saliency Detection in Advanced Driver Assistance Systems

Jul 26, 2023

Abstract: Visual saliency refers to the innate human mechanism of focusing on and extracting important features from the observed environment. Recently, there has been a notable surge of interest in the field of automotive research regarding the estimation of visual saliency. While operating a vehicle, drivers naturally direct their attention towards specific objects, employing brain-driven saliency mechanisms that prioritize certain elements over others. In this investigation, we present an intelligent system that combines a drowsiness detection system for drivers with a scene comprehension pipeline based on saliency. To achieve this, we have implemented a specialized 3D deep network for semantic segmentation, which has been pretrained and tailored for processing the frames captured by an automotive-grade external camera. The proposed pipeline was hosted on an embedded platform that uses the STA1295 core, featuring dual ARM A7 cores, and embeds a hardware accelerator. Additionally, we employ an innovative biosensor embedded in the car steering wheel to monitor driver drowsiness by gathering the driver's PhotoPlethysmoGraphy (PPG) signal. A dedicated 1D temporal deep convolutional network has been devised to classify the collected PPG time series, enabling us to assess the driver's level of attentiveness. Ultimately, we compare the determined attention level of the driver with the corresponding saliency-based scene classification to evaluate the overall safety level. The efficacy of the proposed pipeline has been validated through extensive experimental results.

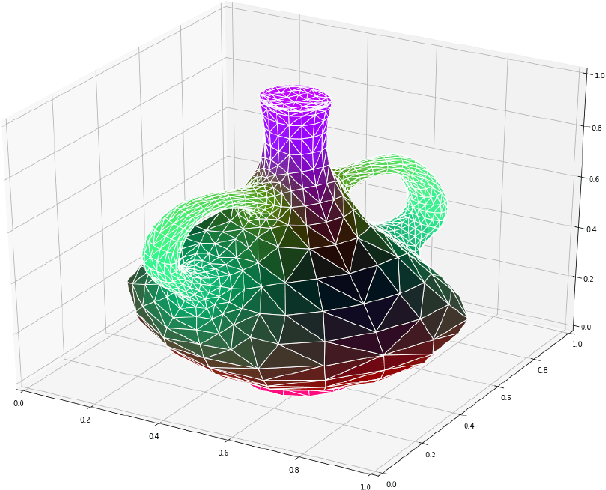

MeT: A Graph Transformer for Semantic Segmentation of 3D Meshes

Jul 03, 2023

Abstract: Polygonal meshes have become the standard for discretely approximating 3D shapes, thanks to their efficiency and high flexibility in capturing non-uniform shapes. This non-uniformity, however, leads to irregularity in the mesh structure, making tasks like segmentation of 3D meshes particularly challenging. Semantic segmentation of 3D meshes has typically been addressed through CNN-based approaches, leading to good accuracy. Recently, transformers have gained momentum in both the NLP and computer vision fields, achieving performance at least on par with CNN models and supporting the long-sought architecture universalism. Following this trend, we propose a transformer-based method for semantic segmentation of 3D meshes, motivated by a better modeling of the graph structure of meshes by means of global attention mechanisms. To address the limitations of standard transformer architectures in modeling relative positions of non-sequential data, as in the case of 3D meshes, as well as in capturing the local context, we perform positional encoding by means of the Laplacian eigenvectors of the adjacency matrix, replacing the traditional sinusoidal positional encodings, and we introduce clustering-based features into the self-attention and cross-attention operators. Experimental results, carried out on three sets of the Shape COSEG Dataset, on the human segmentation dataset proposed in Maron et al., 2017 and on the ShapeNet benchmark, show that the proposed approach yields state-of-the-art performance on semantic segmentation of 3D meshes.
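As a rough illustration of positional encoding from Laplacian eigenvectors, the sketch below builds the combinatorial graph Laplacian L = D - A from an adjacency matrix and keeps the eigenvectors with the smallest non-trivial eigenvalues as per-node position features. The abstract does not say which Laplacian normalization or how many eigenvectors are used, so the unnormalized Laplacian, the value of `k`, and the function name `laplacian_positional_encoding` are assumptions. Eigenvectors are only defined up to sign (and up to rotation within repeated eigenvalues), which a real pipeline would have to handle.

```python
import numpy as np


def laplacian_positional_encoding(adjacency, k):
    """Return the k smallest non-trivial eigenvalues of L = D - A and the
    matching eigenvectors (one row of features per graph node)."""
    a = np.asarray(adjacency, dtype=float)
    laplacian = np.diag(a.sum(axis=1)) - a
    # eigh handles symmetric matrices and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(laplacian)
    # Skip index 0: for a connected graph it is the constant eigenvector.
    return eigvals[1:k + 1], eigvecs[:, 1:k + 1]


# Toy graph: two triangles sharing the edge between vertices 1 and 2.
ADJ = [
    [0, 1, 1, 0],
    [1, 0, 1, 1],
    [1, 1, 0, 1],
    [0, 1, 1, 0],
]
values, encoding = laplacian_positional_encoding(ADJ, k=2)
print(encoding.shape)  # one 2-dimensional encoding per vertex
```

Each row of `encoding` would then be added to, or concatenated with, the corresponding node features before the attention layers, in place of a sinusoidal encoding.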

A baseline on continual learning methods for video action recognition

Apr 26, 2023

Abstract: Continual learning has recently attracted attention from the research community, as it aims to solve long-standing limitations of classic models trained with supervision. However, most research on this subject has tackled continual learning in simple image classification scenarios. In this paper, we present a benchmark of state-of-the-art continual learning methods on video action recognition. Besides the increased complexity due to the temporal dimension, the video setting imposes stronger requirements on computing resources for top-performing rehearsal methods. To counteract the increased memory requirements, we present two method-agnostic variants for rehearsal methods, exploiting measures of either model confidence or data information to select memorable samples. Our experiments show that, as expected from the literature, rehearsal methods outperform other approaches; moreover, the proposed memory-efficient variants are shown to be effective at retaining a certain level of performance with a smaller buffer size.
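A confidence-based selection of rehearsal samples could look like the sketch below. The abstract does not state how confidence is measured or whether the most or the least confident samples are kept, so the score (maximum softmax probability), the `keep_most_confident` switch, and the function name `select_for_buffer` are all assumptions made for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_for_buffer(sample_ids, logits_per_sample, buffer_size,
                      keep_most_confident=True):
    """Rank samples by the model's top softmax probability and keep
    `buffer_size` of them for the rehearsal buffer."""
    scored = [
        (max(softmax(logits)), sid)
        for sid, logits in zip(sample_ids, logits_per_sample)
    ]
    scored.sort(key=lambda pair: pair[0], reverse=keep_most_confident)
    return [sid for _, sid in scored[:buffer_size]]


# Hypothetical clips with the model's logits over three action classes.
ids = ["clip_a", "clip_b", "clip_c"]
logits = [[5.0, 0.0, 0.0], [1.0, 1.0, 1.0], [2.0, 0.0, 0.0]]
print(select_for_buffer(ids, logits, buffer_size=2))
```

Because the selection only needs model outputs, it can sit in front of any rehearsal method, which matches the "method-agnostic" framing in the abstract.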

Early detection of hip periprosthetic joint infections through CNN on Computed Tomography images

Apr 18, 2023

Abstract: Early detection of an infection prior to prosthesis removal (e.g., hips, knees or other areas) would provide significant benefits to patients. Currently, the detection task is carried out only retrospectively, with a limited number of methods relying on biometric or other medical data. The automatic detection of a periprosthetic joint infection from tomography imaging is a task never addressed before. This study introduces a novel method for early detection of hip prosthesis infections by analyzing Computed Tomography images. The proposed solution is based on a novel ResNeSt Convolutional Neural Network architecture trained on samples from more than 100 patients. The solution detected infections with a high experimental level of accuracy and F-score.

Deep Learning Systems for Advanced Driving Assistance

Apr 05, 2023

Abstract: Next-generation cars embed intelligent assessment of driving safety through innovative solutions, often based on artificial intelligence. Driving safety monitoring can be carried out using several methodologies widely treated in the scientific literature. In this context, the author proposes an innovative approach that uses an ad-hoc bio-sensing system suitable for reconstructing the physio-based attentional status of the car driver. To reconstruct the driver's physiological status, the author proposes a bio-sensing probe consisting of LEDs emitting in the near-infrared (NiR) spectrum coupled with a photodetector. Placed on the monitored subject, this probe detects a physiological signal called the PhotoPlethysmoGraphy (PPG) signal. PPG signal formation is governed by changes in the concentration of oxygenated and non-oxygenated hemoglobin in the subject's bloodstream, which are directly connected to cardiac activity, in turn regulated by the Autonomic Nervous System (ANS) that characterizes the subject's attention level. The driver drowsiness monitoring designed in this way will be combined with a further driving safety assessment based on correlated intelligent understanding of the driving scenario.

UniCT DMI Solution for 3rd COV19D Competition on COVID-19 Detection through attention-based CNN for CT Scan

Mar 22, 2023

Abstract: This paper presents our solution for the first challenge of the 3rd Covid-19 competition, which is part of the "AI-enabled Medical Image Analysis Workshop" organized by the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2023. Our proposed solution is based on a ResNet backbone network with the addition of attention mechanisms. The ResNet provides an effective feature extractor for the classification task, while the attention mechanisms improve the model's ability to focus on important regions of interest within the images. We conducted extensive experiments on the provided dataset and achieved promising results. Our proposed approach has the potential to assist in the accurate diagnosis of Covid-19 from chest computed tomography images, which can aid in the early detection and management of the disease.
