Fabio Saggese

Toward ISAC-empowered subnetworks: Cooperative localization and iterative node selection

Nov 15, 2025

Abstract: This paper tackles the sensing-communication trade-off in integrated sensing and communication (ISAC)-empowered subnetworks for mono-static target localization. We propose a low-complexity iterative node selection algorithm that exploits the spatial diversity of subnetwork deployments and dynamically refines the set of sensing subnetworks to maximize localization accuracy under tight resource constraints. Simulation results show that our method achieves sub-7 cm accuracy in additive white Gaussian noise (AWGN) channels within only three iterations, yielding over 97% improvement compared to the best-performing benchmark under the same sensing budget. We further demonstrate that increasing spatial diversity through additional antennas and subnetworks enhances sensing robustness, especially in fading channels. Finally, we quantify the sensing-communication trade-off, showing that reducing sensing iterations and the number of sensing subnetworks improves throughput at the cost of reduced localization precision.
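
A rough illustration of a sense, localize, and reselect loop of the kind this abstract describes follows. It is a minimal Python sketch, not the paper's algorithm. It assumes 2-D geometry, subnetworks at known positions that each measure the target range mono-statically, a hypothetical ranging-noise standard deviation proportional to the squared distance (mono-static radar SNR decays roughly as 1/d^4), and a hypothetical refinement rule that keeps the k subnetworks nearest the current estimate. The names `ls_position` and `select_and_localize` are illustrative, and the sketch does not reproduce the reported accuracy figures.

```python
import numpy as np


def ls_position(anchors: np.ndarray, ranges: np.ndarray) -> np.ndarray:
    """Linearized least-squares 2-D position from range measurements.

    Subtracting ||x - a_0||^2 = r_0^2 from ||x - a_i||^2 = r_i^2 gives the
    linear system 2 (a_i - a_0) . x = r_0^2 - r_i^2 + |a_i|^2 - |a_0|^2.
    """
    a0, r0 = anchors[0], ranges[0]
    A = 2.0 * (anchors[1:] - a0)
    b = r0**2 - ranges[1:] ** 2 + np.sum(anchors[1:] ** 2, axis=1) - np.sum(a0**2)
    pos, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pos


def select_and_localize(nodes, target, k, iters, sigma0, rng):
    """Hypothetical iterative node selection: sense, localize, reselect."""
    selected = rng.choice(len(nodes), size=k, replace=False)  # random initial set
    estimate = None
    for _ in range(iters):
        d = np.linalg.norm(nodes[selected] - target, axis=1)
        # Assumed ranging noise: std = sigma0 * d^2 (radar SNR ~ 1/d^4).
        ranges = d + rng.normal(0.0, sigma0 * d**2)
        estimate = ls_position(nodes[selected], ranges)
        # Assumed refinement rule: keep the k subnetworks nearest the estimate.
        selected = np.argsort(np.linalg.norm(nodes - estimate, axis=1))[:k]
    return estimate, selected


rng = np.random.default_rng(0)
nodes = rng.uniform(0.0, 20.0, size=(30, 2))  # 30 subnetworks in a 20 m x 20 m area
target = np.array([7.0, 12.0])
est, sel = select_and_localize(nodes, target, k=4, iters=3, sigma0=1e-3, rng=rng)
print("error [m]:", np.linalg.norm(est - target))
```

Nearest-to-estimate reselection is simply the easiest way to exploit spatial diversity in this toy; the paper's selection criterion may differ.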

Multi-dimensional Parameter Estimation in RIS-aided MU-MIMO Channels

May 05, 2025

Abstract: We address the channel estimation problem in reconfigurable intelligent surface (RIS) aided broadband systems by proposing a dual-structure and multi-dimensional transformations (DS-MDT) algorithm. The proposed approach leverages the dual-structure features of the channel parameters to assist users experiencing weaker channel conditions, thereby enhancing estimation performance. Moreover, given that the channel parameters are distributed across multiple dimensions of the received tensor, the proposed algorithm employs multi-dimensional transformations to effectively isolate and extract distinct parameters. The numerical results demonstrate that the proposed algorithm reduces the normalized mean square error (NMSE) by up to 10 dB while maintaining lower complexity than state-of-the-art methods.
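
A common definition of the NMSE quoted above is the error energy normalized by the channel energy, ||H_est - H||^2 / ||H||^2, expressed in dB; a 10 dB reduction therefore means the estimation-error energy drops by a factor of 10. The minimal Python helper below assumes the channel is stored as a NumPy array of any shape; the name `nmse_db` and the illustrative tensor shape are hypothetical, and the paper may additionally average over channel realizations.

```python
import numpy as np


def nmse_db(h_true: np.ndarray, h_est: np.ndarray) -> float:
    """Normalized mean square error in dB: ||h_est - h_true||^2 / ||h_true||^2."""
    err = np.sum(np.abs(h_est - h_true) ** 2)
    ref = np.sum(np.abs(h_true) ** 2)
    return float(10.0 * np.log10(err / ref))


rng = np.random.default_rng(0)
shape = (4, 8, 16)  # e.g. antennas x RIS elements x subcarriers (illustrative only)
h = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)
noise = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)
# Scaling the error amplitude by 1/sqrt(10) cuts its energy by 10x, i.e. 10 dB lower NMSE.
print(nmse_db(h, h + 0.3 * noise), nmse_db(h, h + 0.3 / np.sqrt(10) * noise))
```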

Integrated Sensing and Communications for Resource Allocation in Non-Terrestrial Networks

Jul 09, 2024

Abstract: The integration of Non-Terrestrial Networks (NTNs) with Low Earth Orbit (LEO) satellite constellations into 5G and beyond is essential to achieve truly global connectivity. A distinctive characteristic of LEO mega-constellations is that they constitute a global infrastructure with predictable dynamics, which enables pre-planned allocation of radio resources. However, the different bands that can be used for ground-to-satellite communication are affected differently by atmospheric conditions such as precipitation, which introduces uncertainty in the attenuation of the communication links at high frequencies. Based on this, we present a compelling case for applying integrated sensing and communications (ISAC) in heterogeneous and multi-layer LEO satellite constellations over wide areas. Specifically, we present an ISAC framework and frame structure to accurately estimate the precipitation-induced attenuation of the communication links, with the aim of finding the optimal serving satellites and resource allocation for downlink communication with users on the ground. The results show that, by dedicating an adequate amount of resources to sensing and solving the association and resource allocation problems jointly, it is feasible to increase the average throughput by 59% and the fairness by 600% compared with solving these problems separately.

Coexistence of Pull and Push Communication in Wireless Access for IoT Devices

Apr 11, 2024

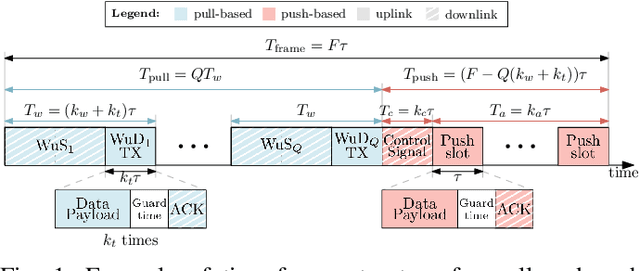

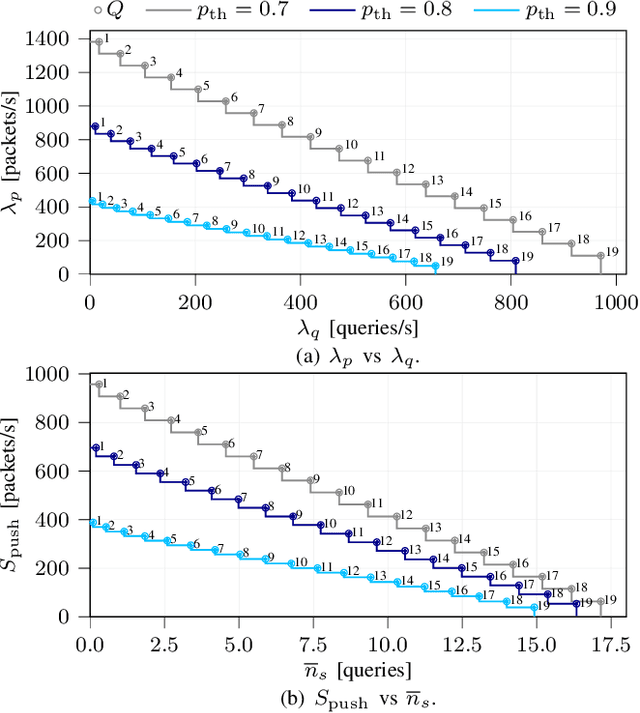

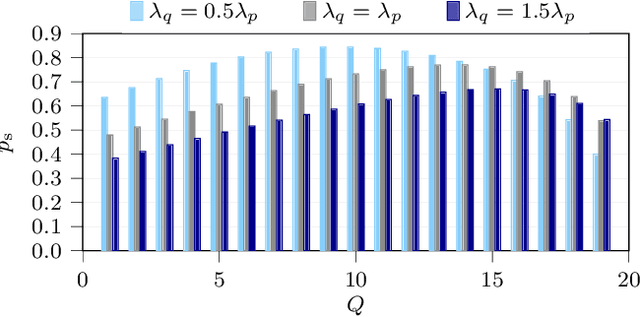

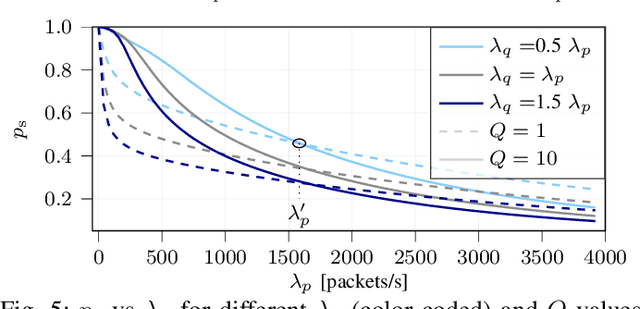

Abstract: We consider an Internet of Things (IoT) setup in which a base station (BS) collects data from nodes that use two different communication modes. The first is pull-based, where the BS retrieves the data from specific nodes through queries. The nodes that apply pull-based communication contain a wake-up receiver: upon a query, the BS sends a wake-up signal (WuS) to activate the corresponding devices equipped with wake-up receivers (WuDs). The second is push-based communication, in which the nodes decide when to transmit to the BS. We consider a time-slotted model in which the time slots of each frame are shared by pull-based and push-based communications. This coexistence scenario gives rise to a new type of problem with fundamental trade-offs in sharing communication resources: the objective of serving as many queries as possible within a specified deadline limits the transmission opportunities of push sensors, and vice versa. This work develops a mathematical model that characterizes these trade-offs, validates them through simulations, and optimizes the frame design to meet the objectives of both pull- and push-based communications.
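
The slot-sharing trade-off can be seen in a deliberately simplified frame model, sketched in Python under assumptions that are not the paper's: each query consumes one pull slot and is served only if a slot is available in the frame, wake-up signalling is ignored, and each of M push nodes is active independently with probability p and picks one of the L remaining slots uniformly, succeeding only if no other node picks the same slot. Under these assumptions the expected number of successful push transmissions is M p (1 - p/L)^(M-1). All function names and parameter values are hypothetical.

```python
def pull_served(num_queries: int, pull_slots: int) -> int:
    """Queries served within the frame, assuming one slot per query."""
    return min(num_queries, pull_slots)


def push_expected_successes(num_push: int, p_active: float, push_slots: int) -> float:
    """Expected collision-free push transmissions (slotted-ALOHA style)."""
    if push_slots == 0:
        return 0.0
    q = p_active / push_slots  # prob. that another node hits a given slot
    return num_push * p_active * (1.0 - q) ** (num_push - 1)


N, Q, M, P = 20, 8, 30, 0.3  # frame length, queries, push nodes, activation prob.
for k in range(0, N + 1, 4):  # k = slots reserved for pull-based queries
    print(k, pull_served(Q, k), round(push_expected_successes(M, P, N - k), 2))
```

Sweeping k makes the trade-off explicit: reserving more pull slots serves more queries but leaves fewer, more congested slots for push nodes.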

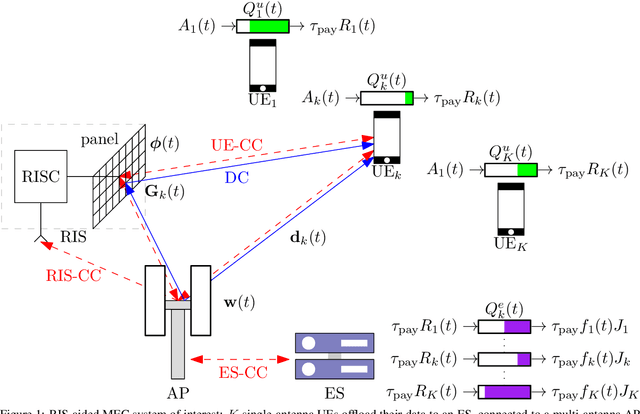

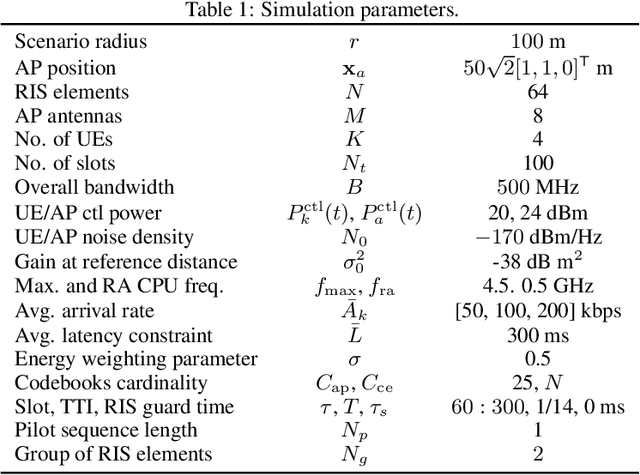

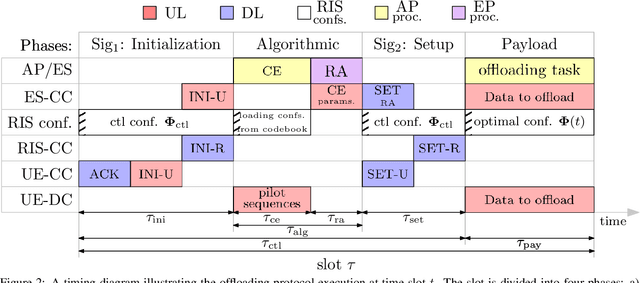

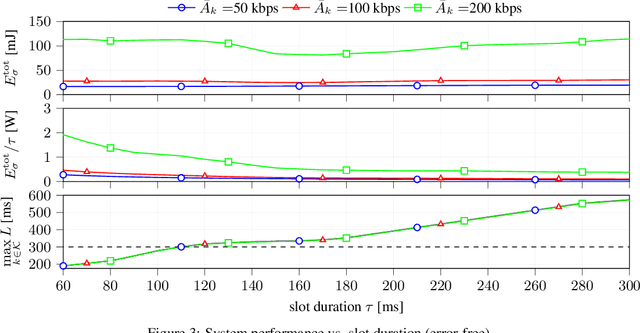

Control Aspects for Using RIS in Latency-Constrained Mobile Edge Computing

Dec 19, 2023

Abstract: This paper investigates the role and impact of control operations in dynamic mobile edge computing (MEC) empowered by Reconfigurable Intelligent Surfaces (RISs), in which multiple devices offload their computation tasks, with the help of the RIS, to an access point (AP) equipped with an edge server (ES). Although usually ignored, the control aspects related to channel estimation (CE), resource allocation (RA), and control signaling play a fundamental role in the user-perceived delay and energy consumption. In general, the more resources devoted to control operations, the more reliable they are; however, this introduces an overhead that reduces the resources available for computation offloading, possibly increasing the overall latency. Conversely, a lower control overhead leaves more resources for computation offloading but degrades CE accuracy and RA flexibility. This paper establishes a basic framework for integrating the impact of control operations into the performance evaluation of the RIS-aided MEC paradigm, clarifying their trade-offs through theoretical analysis and numerical simulations.
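
The control-overhead trade-off can be illustrated with a generic pilot-length toy model, which is not the paper's RIS-aided MEC model: a single link with a unit-variance scalar channel, an LMMSE estimate from tau pilot symbols at per-symbol SNR rho (so MSE = 1/(1 + tau rho)), estimation error treated as additional noise, and latency counted as pilot symbols plus the data symbols needed to offload B bits. More pilots improve the estimate but add overhead, so latency is minimized at an intermediate tau. The function `offload_latency` and all parameter values are hypothetical.

```python
import math


def offload_latency(tau: int, bits: float, snr: float) -> float:
    """Latency in symbols: tau pilot symbols plus data symbols to send `bits`.

    Assumptions: unit-variance scalar channel, LMMSE estimate from `tau >= 1`
    pilots (MSE = 1 / (1 + tau * snr)), estimation error treated as extra noise.
    """
    mse = 1.0 / (1.0 + tau * snr)
    snr_eff = snr * (1.0 - mse) / (1.0 + snr * mse)
    rate = math.log2(1.0 + snr_eff)  # bits per data symbol
    return tau + bits / rate


bits, snr = 2000.0, 10.0
best_tau = min(range(1, 201), key=lambda t: offload_latency(t, bits, snr))
print(best_tau, round(offload_latency(best_tau, bits, snr), 1))
```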

An Orchestration Framework for Open System Models of Reconfigurable Intelligent Surfaces

Apr 21, 2023

Abstract: To obviate the control of reflective intelligent surfaces (RISs) and the related control overhead, recent works envisioned autonomous and self-configuring RISs that do not need explicit control channels. Instead, these devices, named hybrid RISs (HRISs), are equipped with receiving radio-frequency (RF) chains and can perform sensing operations to act independently of, and in parallel with, the other network entities. A natural problem then emerges: as the HRIS operates concurrently with the communication protocols, how should its operation modes be scheduled in time so that it helps the network while minimizing any undesirable effects? In this paper, we propose an orchestration framework that answers this question and reveals an engineering trade-off, called the self-configuring trade-off, that characterizes the applicability of self-configuring HRISs in massive multiple-input multiple-output (mMIMO) networks. We evaluate the proposed framework considering two HRIS hardware architectures, power-based and signal-based HRISs, which differ in their hardware complexity. The numerical results show that the self-configuring HRIS can offer significant performance gains when adopting our framework.

A Framework for Control Channels Applied to Reconfigurable Intelligent Surfaces

Mar 29, 2023

Abstract: Research on Reconfigurable Intelligent Surfaces (RISs) has focused predominantly on physical-layer aspects and on analyses of the achievable adaptation of the propagation environment. By comparison, questions related to link/MAC protocols and the system-level integration of RISs have received much less attention. This paper addresses the problem of designing and analyzing the control/signaling procedures that are necessary to integrate RISs as a new type of network element within the overall wireless infrastructure. We build a general model for designing control channels along two dimensions: i) allocated bandwidth (in-band and out-of-band) and ii) rate selection (multiplexing or diversity). Specifically, the second dimension results in two transmission schemes: one based on channel estimation followed by an adapted RIS configuration, and the other based on sweeping through predefined RIS phase profiles. The paper analyzes the performance of the control channel in multiple communication setups, obtained as combinations of the aforementioned dimensions. While necessarily simplified, our analysis reveals the basic trade-offs in designing control channels and the associated communication algorithms. Perhaps the main value of this work is to serve as a framework for subsequent design and analysis of various system-level aspects related to RIS technology.
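
The two transmission schemes can be contrasted with a small Python sketch under assumed conditions that are not the paper's model: an N-element RIS with Rayleigh cascaded coefficients h_n and no direct path; a CE-based scheme that knows h perfectly, is assumed to spend one pilot slot per element, and then sets each phase to -arg(h_n); and a sweeping scheme that tries C predefined DFT-style phase profiles, one per slot, and keeps the best. By the triangle inequality the CE-based gain (sum |h_n|)^2 upper-bounds every profile, while the sweep trades gain for a configurable number of slots. The codebook, the slot costs, and the function names are hypothetical.

```python
import numpy as np


def ce_based_gain(h: np.ndarray) -> float:
    """Gain when each RIS phase cancels its cascaded channel phase (perfect CE)."""
    return float(np.sum(np.abs(h)) ** 2)


def sweep_gain(h: np.ndarray, num_profiles: int) -> float:
    """Best gain |sum_n h_n e^{j phi_n}|^2 over a DFT-like codebook of profiles."""
    n = np.arange(h.size)
    c = np.arange(num_profiles)[:, None]
    profiles = np.exp(1j * 2 * np.pi * c * n / num_profiles)  # shape (C, N)
    return float(np.max(np.abs(profiles @ h) ** 2))


rng = np.random.default_rng(0)
N = 64
h = (rng.standard_normal(N) + 1j * rng.standard_normal(N)) / np.sqrt(2)
print(f"CE-based ({N} pilot slots, assumed):", round(ce_based_gain(h), 1))
for C in (8, 32, 64):
    print(f"sweep ({C} slots):", round(sweep_gain(h, C), 1))
```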

Localization-based OFDM framework for RIS-aided systems

Mar 22, 2023

Abstract: Efficient integration of reconfigurable intelligent surfaces (RISs) into current wireless network standards is not a trivial task due to the overhead generated by channel estimation (CE) and phase-shift optimization. In this paper, we propose a framework enabling the coexistence of orthogonal frequency-division multiplexing (OFDM) and RIS technologies. Instead of spending communication symbols on CE and optimization, the proposed framework exploits the localization information obtainable from RIS-aided communications to provide a robust allocation strategy for user multiplexing. The results demonstrate the effectiveness of the proposed approach compared with CE-based transmission methods.

Randomized Control of Wireless Temporal Coherence via Reconfigurable Intelligent Surface

Jan 31, 2023

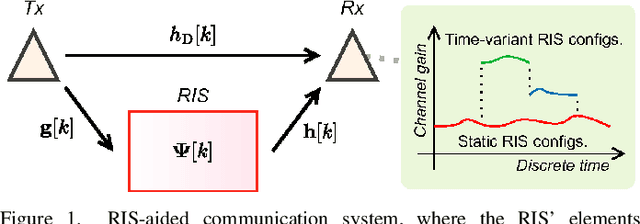

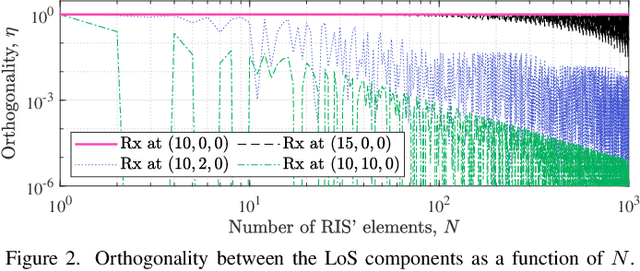

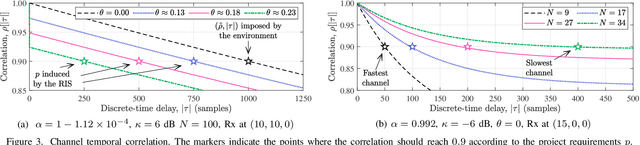

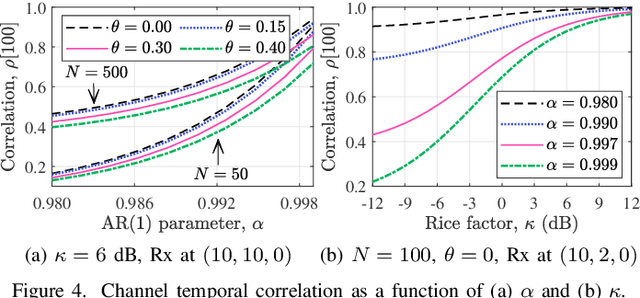

Abstract: A reconfigurable intelligent surface (RIS) can shape the wireless propagation channel by inducing controlled phase-shift variations in the impinging signals. Several works have considered operating an RIS with time-varying configurations of its reflection coefficients. In this work, we use the RIS to control the channel coherence time and introduce a generalized discrete-time-varying channel model for RIS-aided systems. We characterize the temporal variation of the channel correlation by assuming that the configuration of the RIS elements changes at every time step. The analysis leads to a randomized framework that controls the channel coherence time through the number of RIS elements and their phase shifts. The main result is a framework for a flexible block-fading model, in which the number of samples within a coherence block can be dynamically adapted.
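
Consider a simplified model that is an assumption here rather than the paper's generalized one: no direct path, unit-amplitude cascaded gains, and each element's phase independently redrawn uniformly at random with probability q at every time step. Under it, the channel correlation at lag Delta is rho(Delta) = (1 - q)^Delta, because elements never redrawn contribute fully while redrawn ones contribute zero on average (a uniform phase has zero mean). The Python sketch checks this by Monte Carlo and converts it into a coherence time in steps; the function names and the 0.5 threshold are illustrative.

```python
import numpy as np


def empirical_correlation(num_elements, q, lag, trials, rng):
    """Monte Carlo estimate of rho(lag) = E[h_t conj(h_{t+lag})] / E[|h_t|^2]."""
    # Assumed fixed cascaded gains with unit amplitude and random phase.
    g = np.exp(1j * rng.uniform(0, 2 * np.pi, (trials, num_elements)))
    theta0 = rng.uniform(0, 2 * np.pi, (trials, num_elements))  # initial RIS phases
    theta = theta0.copy()
    for _ in range(lag):
        redraw = rng.random((trials, num_elements)) < q  # elements that change
        theta[redraw] = rng.uniform(0, 2 * np.pi, redraw.sum())
    h0 = np.sum(g * np.exp(1j * theta0), axis=1)
    h1 = np.sum(g * np.exp(1j * theta), axis=1)
    return float(np.real(np.mean(h0 * np.conj(h1))) / np.mean(np.abs(h0) ** 2))


def coherence_steps(q, threshold=0.5):
    """Smallest lag at which the analytic correlation (1 - q)^lag is at or below threshold."""
    return int(np.ceil(np.log(threshold) / np.log(1.0 - q)))


rng = np.random.default_rng(0)
q, N = 0.2, 16
for lag in (1, 3, 5):
    print(lag, round(empirical_correlation(N, q, lag, 20000, rng), 3), round((1 - q) ** lag, 3))
print("coherence (rho <= 0.5):", coherence_steps(q), "steps")
```

In this toy, q is the single knob that sets the coherence time; the paper's framework instead works with the number of RIS elements and their phase shifts.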

Random Access Protocol with Channel Oracle Enabled by a Reconfigurable Intelligent Surface

Oct 09, 2022

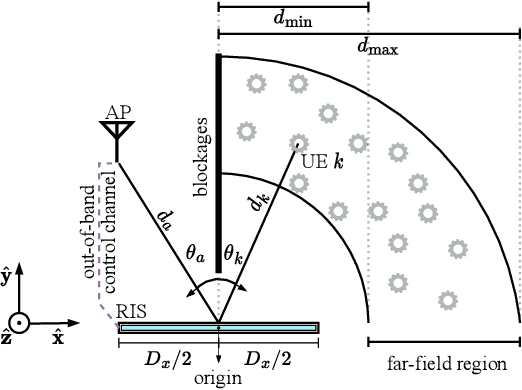

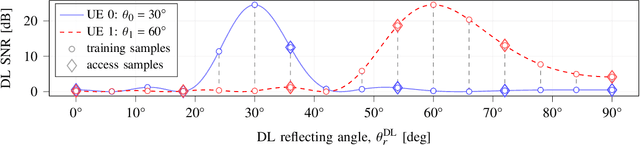

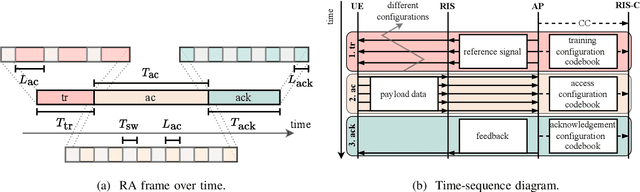

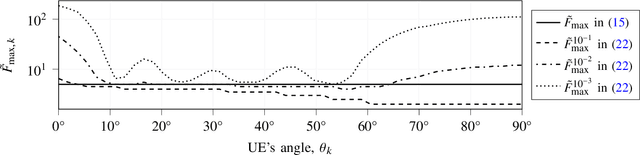

Abstract: The widespread adoption of Reconfigurable Intelligent Surfaces (RISs) in future practical wireless systems critically depends on the design and implementation of efficient access protocols, an issue that has received less attention in the research literature. In this paper, we propose a grant-free random access (RA) protocol for a RIS-assisted wireless communication setting, where a massive number of user equipments (UEs) try to access an access point (AP). The proposed protocol relies on a channel oracle, which enables the UEs to infer the best RIS configurations that provide opportunistic access to UEs. The inference is based on a model created during a training phase with a greatly reduced set of RIS configurations. Specifically, we consider a system whose operation is divided into three blocks: i) a downlink training block, which trains the model used by the oracle; ii) an uplink access block, where the oracle infers the best access slots; and iii) a downlink acknowledgment block, which provides feedback to the UEs that were successfully decoded by the AP during access. Numerical results show that properly integrating the RIS into the protocol design can increase the expected end-to-end throughput by approximately 40% compared with the regular repetition slotted ALOHA protocol.
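
The baseline named in this abstract, regular repetition slotted ALOHA, can be simulated in a few lines; the oracle-based RIS protocol itself is not reproduced here. This Python sketch assumes a collision channel in which a slot is decodable only when exactly one replica remains, every user sends d replicas in distinct uniformly chosen slots of one frame, and perfect successive interference cancellation (SIC) removes all replicas of a decoded user, as in CRDSA-style schemes. The function names and parameters are hypothetical.

```python
import random


def rsa_frame(num_users: int, num_slots: int, degree: int, rng: random.Random) -> int:
    """One frame of regular repetition slotted ALOHA with SIC; returns users decoded."""
    # Each user transmits `degree` replicas in distinct, uniformly chosen slots.
    replicas = [rng.sample(range(num_slots), degree) for _ in range(num_users)]
    slots = [set() for _ in range(num_slots)]
    for user, chosen in enumerate(replicas):
        for s in chosen:
            slots[s].add(user)
    decoded = set()
    progress = True
    while progress:
        progress = False
        for slot in slots:
            if len(slot) == 1:  # singleton slot: the lone replica is decodable
                user = next(iter(slot))
                decoded.add(user)
                for s in replicas[user]:  # SIC: cancel every replica of that user
                    slots[s].discard(user)
                progress = True
    return len(decoded)


def throughput(load: float, num_slots: int, degree: int, frames: int, seed: int = 0) -> float:
    """Average decoded users per slot at normalized load G = users / slots."""
    rng = random.Random(seed)
    users = round(load * num_slots)
    total = sum(rsa_frame(users, num_slots, degree, rng) for _ in range(frames))
    return total / (frames * num_slots)


for g in (0.3, 0.5, 0.7, 0.9):
    print(g, round(throughput(g, 100, 2, 50), 3))
```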