Israel Leyva-Mayorga

Tunable Gaussian Pulse for Delay-Doppler ISAC

Dec 16, 2025

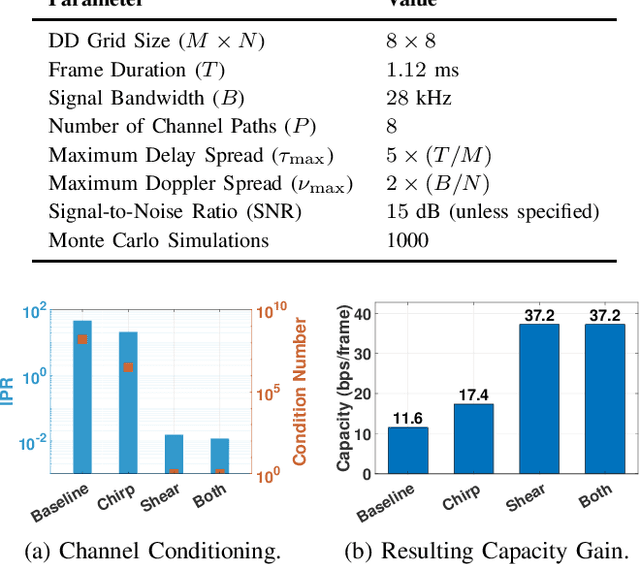

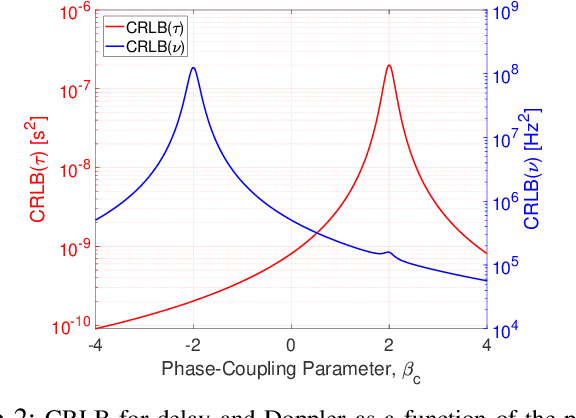

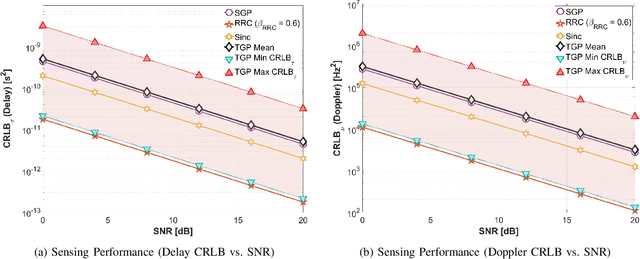

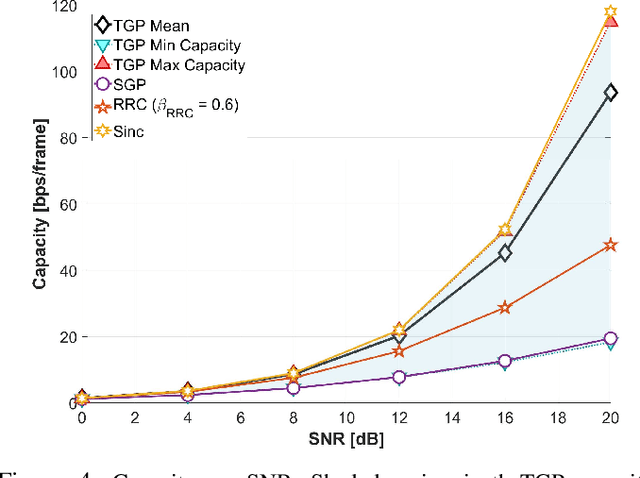

Abstract: Integrated sensing and communication (ISAC) for next-generation networks targets robust operation under high mobility and high Doppler spread, conditions that cause severe inter-carrier interference (ICI) in systems based on orthogonal frequency-division multiplexing (OFDM) waveforms. Delay-Doppler (DD)-domain ISAC offers a more robust foundation under high mobility, but it requires a suitable DD-domain pulse-shaping filter. The prevailing DD pulse designs are either communication-centric or static, which limits adaptation to non-stationary channels and diverse application demands. To address this limitation, this paper introduces the tunable Gaussian pulse (TGP), a DD-native, analytically tunable pulse shape parameterized by its aspect ratio \( \gamma \), chirp rate \( \alpha_c \), and phase coupling \( \beta_c \). On the sensing side, we derive closed-form Cramér-Rao lower bounds (CRLBs) that map \( (\gamma, \alpha_c, \beta_c) \) to fundamental delay and Doppler precision. On the communications side, we show that \( \alpha_c \) and \( \beta_c \) reshape off-diagonal covariance, and thus inter-symbol interference (ISI), without changing received power, isolating capacity effects to interference structure rather than power loss. A comprehensive trade-off analysis demonstrates that the TGP spans a flexible operational region from the high capacity of the Sinc pulse to the high precision of the root raised cosine (RRC) pulse. Notably, TGP attains near-RRC sensing precision while retaining over \( 90\% \) of Sinc's maximum capacity, achieving a balanced operating region that is not attainable by conventional static pulse designs.

Policy Gradient Algorithms for Age-of-Information Cost Minimization

Dec 12, 2025

Abstract: Recent developments in cyber-physical systems have increased the importance of maximizing the freshness of the information about the physical environment. However, optimizing the access policies of Internet of Things devices to maximize the data freshness, measured as a function of the Age-of-Information (AoI) metric, is a challenging task. This work introduces two algorithms to optimize the information update process in cyber-physical systems operating under the generate-at-will model, by finding an online policy without knowing the characteristics of the transmission delay or the age cost function. The optimization seeks to minimize the time-average cost, which integrates the AoI at the receiver and the data transmission cost, making the approach suitable for a broad range of scenarios. Both algorithms employ policy gradient methods within the framework of model-free reinforcement learning (RL) and are specifically designed to handle continuous state and action spaces. Each algorithm minimizes the cost using a distinct strategy for deciding when to send an information update. Moreover, we demonstrate that it is feasible to apply the two strategies simultaneously, leading to an additional reduction in cost. The results demonstrate that the proposed algorithms exhibit good convergence properties and achieve a time-average cost within 3% of the optimal value, when the latter is computable. A comparison with other state-of-the-art methods shows that the proposed algorithms outperform them in one or more of the following aspects: being applicable to a broader range of scenarios, achieving a lower time-average cost, and requiring a computational cost at least one order of magnitude lower.
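To make the objective in this abstract concrete, the following is a minimal sketch of a time-average cost under the generate-at-will model. It is not the paper's policy gradient method: it only evaluates a fixed sequence of waiting times. It assumes a linear age cost and a fixed per-update transmission cost, and the names `time_average_cost`, `delays`, `waits`, and `tx_cost` are hypothetical.

```python
def time_average_cost(delays, waits, tx_cost=0.0):
    """Time-average cost with a linear age cost (illustrative assumption).

    delays[i] is the transmission delay of update i. waits[i] is the time the
    source waits after update i is delivered before generating update i + 1.
    Under generate-at-will, each update is fresh when its transmission starts,
    so the age at the receiver right after delivery equals that update's delay.
    """
    if not waits or len(delays) != len(waits) + 1:
        raise ValueError("need one wait between each pair of consecutive updates")
    total_time = 0.0
    total_cost = 0.0
    for i, wait in enumerate(waits):
        start_age = delays[i]            # age right after update i is delivered
        length = wait + delays[i + 1]    # time until update i + 1 is delivered
        # Area under the linearly growing age over this interval (a trapezoid).
        total_cost += start_age * length + 0.5 * length ** 2
        total_cost += tx_cost            # assumed fixed cost of sending update i + 1
        total_time += length
    return total_cost / total_time


# Unit delays: compare sending immediately with waiting one time unit.
zero_wait = time_average_cost([1.0, 1.0, 1.0], [0.0, 0.0], tx_cost=2.0)
with_wait = time_average_cost([1.0, 1.0, 1.0], [1.0, 1.0], tx_cost=2.0)
```

In this toy setting, waiting lowers the time-average cost because the transmission cost is spread over a longer interval, which is the kind of trade-off an online policy would have to learn without knowing the delay distribution.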

Toward ISAC-empowered subnetworks: Cooperative localization and iterative node selection

Nov 15, 2025

Abstract: This paper tackles the sensing-communication trade-off in integrated sensing and communication (ISAC)-empowered subnetworks for mono-static target localization. We propose a low-complexity iterative node selection algorithm that exploits the spatial diversity of subnetwork deployments and dynamically refines the set of sensing subnetworks to maximize localization accuracy under tight resource constraints. Simulation results show that our method achieves sub-7 cm accuracy in additive white Gaussian noise (AWGN) channels within only three iterations, yielding over 97% improvement compared to the best-performing benchmark under the same sensing budget. We further demonstrate that increasing spatial diversity through additional antennas and subnetworks enhances sensing robustness, especially in fading channels. Finally, we quantify the sensing-communication trade-off, showing that reducing sensing iterations and the number of sensing subnetworks improves throughput at the cost of reduced localization precision.

Reinforcement Learning-Based Policy Optimisation For Heterogeneous Radio Access

Jun 18, 2025

Abstract: Flexible and efficient wireless resource sharing across heterogeneous services is a key objective for future wireless networks. In this context, we investigate the performance of a system where latency-constrained internet-of-things (IoT) devices coexist with a broadband user. The base station adopts a grant-free access framework to manage resource allocation, either through orthogonal radio access network (RAN) slicing or by allowing shared access between services. For the IoT users, we propose a reinforcement learning (RL) approach based on double Q-Learning (QL) to optimise their repetition-based transmission strategy, allowing them to adapt to varying levels of interference and meet a predefined latency target. We evaluate the system's performance in terms of the cumulative distribution function of IoT users' latency, as well as the broadband user's throughput and energy efficiency (EE). Our results show that the proposed RL-based access policies significantly enhance the latency performance of IoT users in both RAN Slicing and RAN Sharing scenarios, while preserving desirable broadband throughput and EE. Furthermore, the proposed policies enable RAN Sharing to be energy-efficient at low IoT traffic levels, and RAN Slicing to be favourable under high IoT traffic.
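For readers unfamiliar with double Q-learning, the sketch below shows the generic tabular update rule that the method builds on: one table selects the greedy next action and the other evaluates it. The state, action, and reward definitions used in the paper are not given in the abstract, so everything here (`double_q_update`, the string states, the integer actions standing in for repetition counts, and the step sizes) is a hypothetical illustration.

```python
from collections import defaultdict


def double_q_update(q_a, q_b, state, action, reward, next_state, actions,
                    alpha=0.1, gamma=0.9, update_a=True):
    """Apply one tabular double Q-learning update and return the new value.

    The table being updated picks the greedy next action, while the other
    table supplies its value estimate, which reduces overestimation bias.
    In practice update_a is chosen at random with probability 0.5.
    """
    learner, evaluator = (q_a, q_b) if update_a else (q_b, q_a)
    best_next = max(actions, key=lambda a: learner[(next_state, a)])
    target = reward + gamma * evaluator[(next_state, best_next)]
    learner[(state, action)] += alpha * (target - learner[(state, action)])
    return learner[(state, action)]


# Hypothetical example: actions 0 and 1 stand for two repetition settings.
q_a = defaultdict(float)
q_b = defaultdict(float)
q_a[("s1", 1)] = 1.0   # table A prefers action 1 in state s1
q_b[("s1", 0)] = 2.0
q_b[("s1", 1)] = 0.5   # table B's estimate for that same action
new_value = double_q_update(q_a, q_b, "s0", 0, reward=1.0,
                            next_state="s1", actions=[0, 1])
```

Here the target uses table B's value for the action that table A prefers (0.5), not table B's own maximum (2.0).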

Scalable Data Transmission Framework for Earth Observation Satellites with Channel Adaptation

Dec 16, 2024

Abstract: The immense volume of data generated by Earth observation (EO) satellites presents significant challenges in transmitting it to Earth over rate-limited satellite-to-ground communication links. This paper presents an efficient downlink framework for multi-spectral satellite images, leveraging adaptive transmission techniques based on pixel importance and link capacity. By integrating semantic communication principles, the framework prioritizes critical information, such as changed multi-spectral pixels, to optimize data transmission. The process involves preprocessing, assessing pixel importance to encode only significant changes, and dynamically adjusting transmissions to match channel conditions. Experimental results on a real dataset and a simulated link demonstrate that the proposed approach ensures high-quality data delivery while significantly reducing the amount of transmitted data, making it highly suitable for satellite-based EO applications.

Coexistence of Radar and Communication with Rate-Splitting Wireless Access

Nov 20, 2024

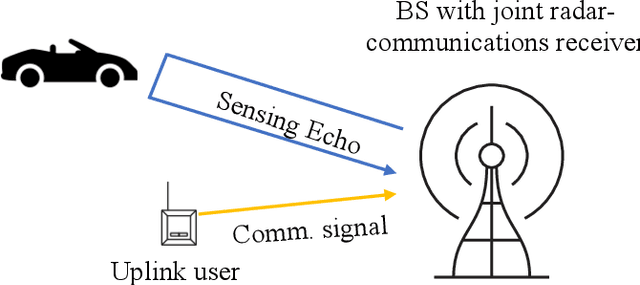

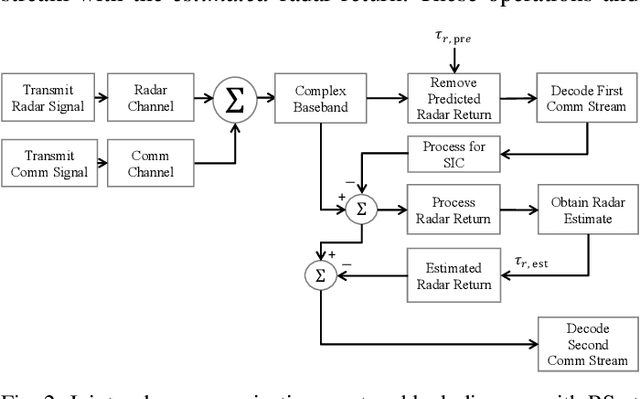

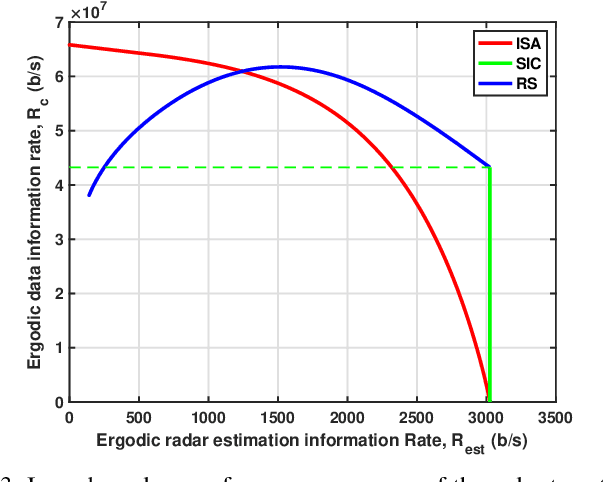

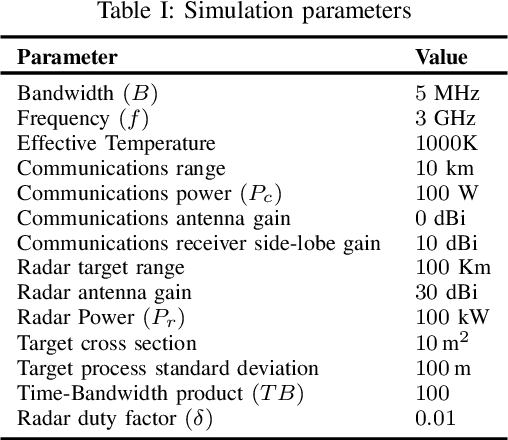

Abstract: This work investigates the coexistence of sensing and communication functionalities in a base station (BS) serving a communication user in the uplink and simultaneously detecting a radar target with the same frequency resources. To address inter-functionality interference, we employ rate-splitting (RS) at the communication user and successive interference cancellation (SIC) at the joint radar-communication receiver at the BS. This approach is motivated by RS's proven effectiveness in mitigating inter-user interference among communication users. Building on the proposed system model based on RS, we derive inner bounds on performance in terms of ergodic data information rate for communication and ergodic radar estimation information rate for sensing. Additionally, we present a closed-form solution for the optimal power split in RS that maximizes the communication user's performance. The bounds achieved with RS are compared to conventional methods, including spectral isolation and full spectral sharing with SIC. We demonstrate that RS offers a superior performance trade-off between sensing and communication functionalities compared to traditional approaches. Pertinently, while the original concept of RS deals only with digital signals, this work brings forward RS as a general method for including non-orthogonal access for sensing signals. As a consequence, the work done in this paper provides a systematic and parametrized way to effectuate non-orthogonal sensing and communication waveforms.

Coexistence of Real-Time Source Reconstruction and Broadband Services Over Wireless Networks

Nov 20, 2024

Abstract: Achieving a flexible and efficient sharing of wireless resources among a wide range of novel applications and services is one of the major goals of the sixth generation of mobile systems (6G). Accordingly, this work investigates the performance of a real-time system that coexists with a broadband service in a frame-based wireless channel. Specifically, we consider real-time remote tracking of an information source, where a device monitors its evolution and sends updates to a base station (BS), which is responsible for real-time source reconstruction and, potentially, remote actuation. To achieve this, the BS employs a grant-free access mechanism to serve the monitoring device together with a broadband user, which share the available wireless resources through orthogonal or non-orthogonal multiple access schemes. We analyse the performance of the system with time-averaged reconstruction error, time-averaged cost of actuation error, and update-delivery cost as performance metrics. Furthermore, we analyse the performance of the broadband user in terms of throughput and energy efficiency. Our results show that an orthogonal resource sharing between the users is beneficial in most cases where the broadband user requires maximum throughput. However, sharing the resources in a non-orthogonal manner leads to a far greater energy efficiency.

Content-based Wake-up for Energy-efficient and Timely Top-k IoT Sensing Data Retrieval

Oct 08, 2024

Abstract: Energy efficiency and information freshness are key requirements for sensor nodes serving Industrial Internet of Things (IIoT) applications, where a sink node collects informative and fresh data before a deadline, e.g., to control an external actuator. Content-based wake-up (CoWu) activates a subset of nodes that hold data relevant for the sink's goal, thereby offering an energy-efficient way to attain objectives related to information freshness. This paper focuses on a scenario where the sink collects fresh information on top-k values, defined as data from the nodes observing the k highest readings at the deadline. We introduce a new metric called top-k Query Age of Information (k-QAoI), which allows us to characterize the performance of CoWu by considering the characteristics of the physical process. Further, we show how to select the CoWu parameters, such as its timing and threshold, to attain both information freshness and energy efficiency. The numerical results reveal the effectiveness of the CoWu approach, which is able to collect top-k data with higher energy efficiency while reducing k-QAoI when compared to round-robin scheduling, especially when the number of nodes is large and the required size of k is small.
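The interplay between the wake-up threshold and the top-k set can be illustrated with a small sketch. It assumes the simplest possible rule, in which a node wakes up when its current reading is at or above a threshold; the paper's actual CoWu signalling, timing, and parameter selection are not described in the abstract. The names `wake_up_set` and `top_k_nodes` and the readings are hypothetical.

```python
def wake_up_set(readings, threshold):
    """Nodes that would wake up under an assumed 'reading >= threshold' rule."""
    return {node for node, value in readings.items() if value >= threshold}


def top_k_nodes(readings, k):
    """Nodes observing the k highest readings."""
    ranked = sorted(readings, key=readings.get, reverse=True)
    return set(ranked[:k])


# Hypothetical sensor readings at the deadline.
readings = {"n1": 21.5, "n2": 30.2, "n3": 18.0, "n4": 27.9, "n5": 25.1}
wanted = top_k_nodes(readings, k=2)

# A low threshold wakes every top-k node plus one extra node (wasted energy).
awake_low = wake_up_set(readings, threshold=25.0)
# A high threshold saves energy but misses part of the top-k set.
awake_high = wake_up_set(readings, threshold=29.0)
```

With these readings, the lower threshold covers the whole top-2 set at the cost of one unnecessary wake-up, while the higher threshold wakes a single node and misses the second-highest reading.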

Integrated Sensing and Communications for Resource Allocation in Non-Terrestrial Networks

Jul 09, 2024

Abstract: The integration of Non-Terrestrial Networks (NTNs) with Low Earth Orbit (LEO) satellite constellations into 5G and Beyond is essential to achieve truly global connectivity. A distinctive characteristic of LEO mega-constellations is that they constitute a global infrastructure with predictable dynamics, which enables the pre-planned allocation of the radio resources. However, the different bands that can be used for ground-to-satellite communication are affected differently by atmospheric conditions such as precipitation, which introduces uncertainty on the attenuation of the communication links at high frequencies. Based on this, we present a compelling case for applying integrated sensing and communications (ISAC) in heterogeneous and multi-layer LEO satellite constellations over wide areas. Specifically, we present an ISAC framework and frame structure to accurately estimate the attenuation in the communication links due to precipitation, with the aim of finding the optimal serving satellites and resource allocation for downlink communication with users on the ground. The results show that, by dedicating an adequate amount of resources for sensing and solving the association and resource allocation problems jointly, it is feasible to increase the average throughput by 59% and the fairness by 600% when compared to solving these problems separately.

Continual Deep Reinforcement Learning for Decentralized Satellite Routing

May 20, 2024

Abstract: This paper introduces a full solution for decentralized routing in Low Earth Orbit satellite constellations based on continual Deep Reinforcement Learning (DRL). This requires addressing multiple challenges, including the partial knowledge at the satellites and their continuous movement, and the time-varying sources of uncertainty in the system, such as traffic, communication links, or communication buffers. We follow a multi-agent approach, where each satellite acts as an independent decision-making agent, while acquiring a limited knowledge of the environment based on the feedback received from the nearby agents. The solution is divided into two phases. First, an offline learning phase relies on decentralized decisions and a global Deep Neural Network (DNN) trained with global experiences. Then, the online phase with local, on-board, and pre-trained DNNs requires continual learning to evolve with the environment, which can be done in two different ways: (1) Model anticipation, where the predictable conditions of the constellation are exploited by each satellite sharing its local model with the next satellite; and (2) Federated Learning (FL), where each agent's model is merged first at the cluster level and then aggregated in a global Parameter Server. The results show that, without high congestion, the proposed Multi-Agent DRL framework achieves the same E2E performance as a shortest-path solution, but the latter assumes intensive communication overhead for real-time network-wise knowledge of the system at a centralized node, whereas ours only requires limited feedback exchange among first neighbour satellites. Importantly, our solution adapts well to congestion conditions and exploits less loaded paths. Moreover, the divergence of models over time is easily tackled by the synergy between anticipation, applied in short-term alignment, and FL, utilized for long-term alignment.
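The two-stage model merging mentioned for the FL option can be sketched as follows. This is only an illustration under assumed rules: models are flat parameter lists, each cluster takes an unweighted mean of its members, and the global step weights each cluster by its number of agents (a FedAvg-style choice). The abstract does not specify the aggregation rule, and the names `average` and `hierarchical_average` are hypothetical.

```python
def average(models, weights):
    """Weighted element-wise average of equally sized parameter lists."""
    total = sum(weights)
    size = len(models[0])
    return [sum(w * m[i] for m, w in zip(models, weights)) / total
            for i in range(size)]


def hierarchical_average(clusters):
    """Merge models per cluster, then aggregate the cluster models globally."""
    # Stage 1: each cluster merges its members' models with equal weights.
    cluster_models = [average(members, [1.0] * len(members))
                      for members in clusters]
    # Stage 2: the global server weights each cluster by its number of agents.
    cluster_sizes = [len(members) for members in clusters]
    return average(cluster_models, cluster_sizes)


# Two hypothetical clusters: one with two satellites, one with a single satellite.
clusters = [
    [[1.0, 0.0], [3.0, 2.0]],
    [[5.0, 4.0]],
]
global_model = hierarchical_average(clusters)
```

With size-proportional weights in the second stage, the result matches a flat average over all three agents, so under these assumptions the cluster level only changes where the communication happens, not the merged model.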
