Anders E. Kalør

Content-based Wake-up for Energy-efficient and Timely Top-k IoT Sensing Data Retrieval

Oct 08, 2024

Abstract:Energy efficiency and information freshness are key requirements for sensor nodes serving Industrial Internet of Things (IIoT) applications, where a sink node collects informative and fresh data before a deadline, e.g., to control an external actuator. Content-based wake-up (CoWu) activates a subset of nodes that hold data relevant for the sink's goal, thereby offering an energy-efficient way to attain objectives related to information freshness. This paper focuses on a scenario where the sink collects fresh information on top-k values, defined as data from the nodes observing the k highest readings at the deadline. We introduce a new metric called top-k Query Age of Information (k-QAoI), which allows us to characterize the performance of CoWu by considering the characteristics of the physical process. Further, we show how to select the CoWu parameters, such as its timing and threshold, to attain both information freshness and energy efficiency. The numerical results reveal the effectiveness of the CoWu approach, which is able to collect top-k data with higher energy efficiency while reducing k-QAoI when compared to round-robin scheduling, especially when the number of nodes is large and the required size of k is small.
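
As a rough illustration of the wake-up idea described in this abstract, the sketch below lets a sink broadcast a threshold so that only nodes whose current reading exceeds it respond, and then checks whether the responders include the true top-k set. The function names, the fixed threshold, and the example readings are hypothetical and are not taken from the paper.

```python
def content_based_wakeup(readings, threshold):
    """Return the ids of nodes whose reading exceeds the wake-up threshold."""
    return [node for node, value in enumerate(readings) if value > threshold]


def top_k_nodes(readings, k):
    """Return the ids of the k nodes with the highest readings."""
    ranked = sorted(range(len(readings)), key=lambda node: readings[node], reverse=True)
    return ranked[:k]


readings = [0.2, 0.9, 0.4, 0.7, 0.1, 0.8]  # hypothetical sensor values
awake = content_based_wakeup(readings, threshold=0.6)
wanted = top_k_nodes(readings, k=2)

# The query succeeds if every top-k node was woken up.
covered = set(wanted) <= set(awake)
print(awake, wanted, covered)
```

In this toy setting, lowering the threshold wakes more nodes (costing more energy) but makes it more likely that the top-k set is covered, while raising it does the opposite.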

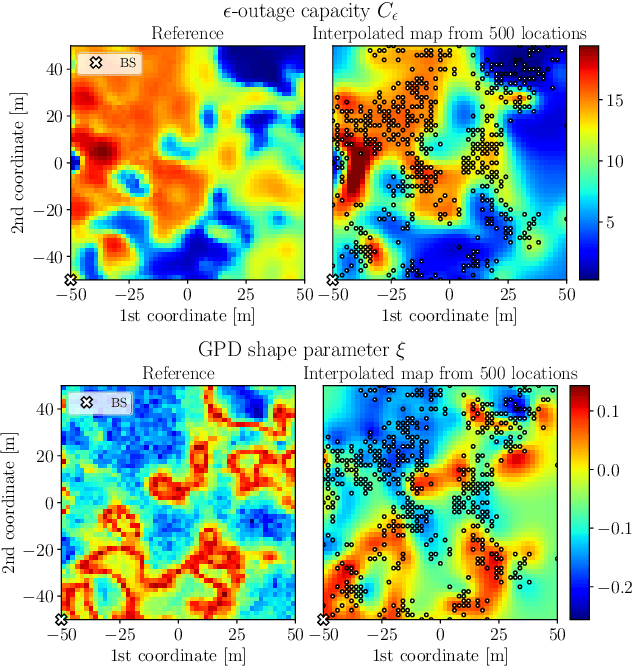

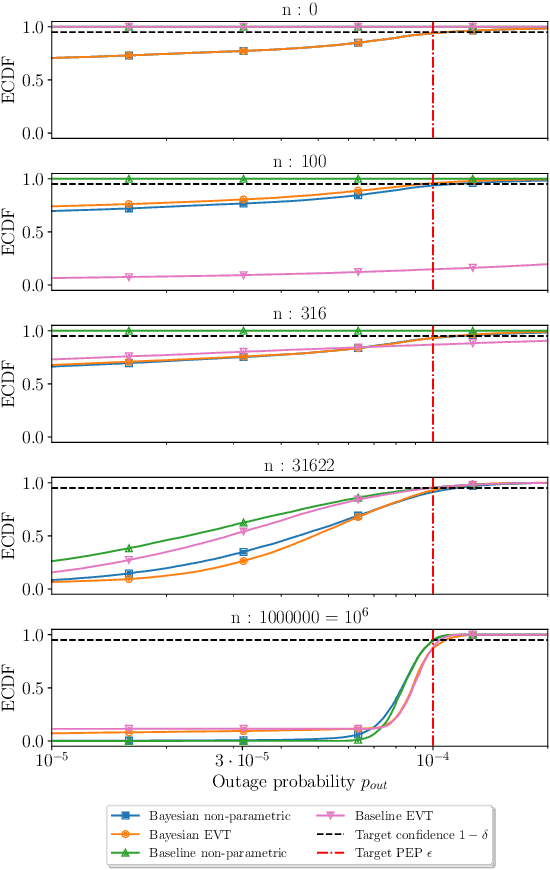

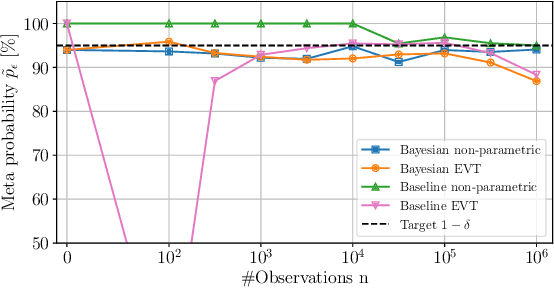

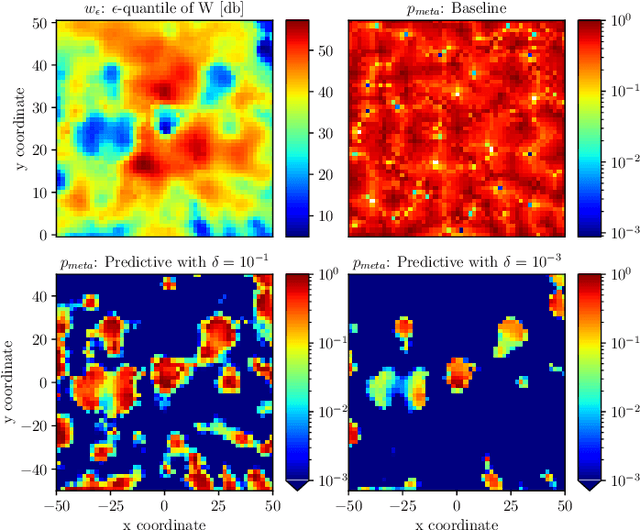

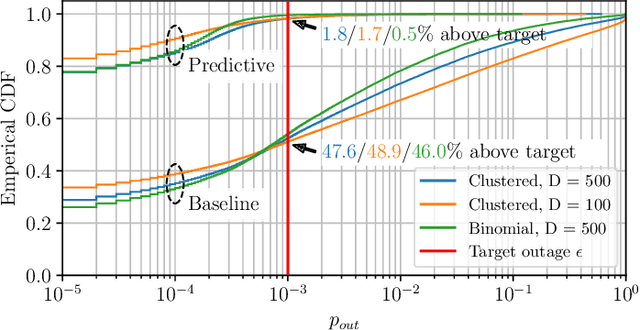

Prediction of Rare Channel Conditions using Bayesian Statistics and Extreme Value Theory

Jul 01, 2024

Abstract:Estimating the probability of rare channel conditions is a central challenge in ultra-reliable wireless communication, where random events, such as deep fades, can cause sudden variations in the channel quality. This paper proposes a sample-efficient framework for predicting the statistics of such events by utilizing spatial dependency between channel measurements acquired from various locations. The proposed framework combines radio maps with non-parametric models and extreme value theory (EVT) to estimate rare-event channel statistics under a Bayesian formulation. The framework can be applied to a wide range of problems in wireless communication and is exemplified by rate selection in ultra-reliable communications. Notably, besides simulated data, the proposed framework is also validated with experimental measurements. The results in both cases show that the Bayesian formulation provides significantly better results in terms of throughput compared to baselines that do not leverage measurements from surrounding locations. It is also observed that the models based on EVT are generally more accurate in predicting rare-event statistics than non-parametric models, especially when only a limited number of channel samples are available. Overall, the proposed methods can significantly reduce the number of measurements required to predict rare channel conditions and guarantee reliability.

Unified Timing Analysis for Closed-Loop Goal-Oriented Wireless Communication

May 25, 2024

Abstract:Goal-oriented communication has become one of the focal concepts in sixth-generation communication systems owing to its potential to provide intelligent, immersive, and real-time mobile services. The emerging paradigms of goal-oriented communication constitute closed loops integrating communication, computation, and sensing. However, challenges arise for closed-loop timing analysis due to multiple random factors that affect the communication/computation latency, as well as the heterogeneity of feedback mechanisms across multi-modal sensing data. To tackle these problems, we aim to provide a unified timing analysis framework for closed-loop goal-oriented communication (CGC) systems over fading channels. The proposed framework is unified as it considers computation, compression, and communication latency in the loop with different configurations. To capture the heterogeneity across multi-modal feedback, we categorize the sensory data into periodic-feedback and event-triggered types. We formulate timing constraints based on average and tail performance, covering timeliness, jitter, and reliability of CGC systems. A method based on saddlepoint approximation is proposed to obtain the distribution of closed-loop latency. The results show that the modified saddlepoint approximation is capable of accurately characterizing the latency distribution of the loop with analytically tractable expressions. This sets the basis for low-complexity co-design of communication and computation.

Experimental Study of Spatial Statistics for Ultra-Reliable Communications

Feb 17, 2024

Abstract:This paper presents an experimental validation of the prediction of rare fading events using channel distribution information (CDI) maps, which predict channel statistics from measurements acquired at surrounding locations using spatial interpolation. Using experimental channel measurements from 127 locations, we demonstrate the use case of providing statistical guarantees for rate selection in ultra-reliable low-latency communication (URLLC) using CDI maps. By using only the user location and the estimated map, we are able to meet the desired outage probability with a probability between 93.6% and 95.6% when targeting 95%. On the other hand, a model-based baseline scheme that assumes Rayleigh fading meets the target outage requirement with a probability of 77.2%. The results demonstrate the practical relevance of CDI maps for resource allocation in URLLC.
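
For context on the Rayleigh-fading baseline mentioned in this abstract, the following sketch uses the textbook outage expression for Rayleigh fading, where the instantaneous SNR is exponentially distributed with mean `avg_snr`, so that P(log2(1 + SNR) < R) = 1 - exp(-(2^R - 1) / avg_snr). It is not the paper's implementation; the function names and the numbers are illustrative assumptions.

```python
import math


def rayleigh_outage_probability(rate, avg_snr):
    """Outage probability of `rate` (bits/s/Hz) under Rayleigh fading."""
    snr_threshold = 2.0 ** rate - 1.0
    return 1.0 - math.exp(-snr_threshold / avg_snr)


def rayleigh_max_rate(target_outage, avg_snr):
    """Largest rate whose outage probability does not exceed the target."""
    # Invert the outage expression: SNR threshold = -avg_snr * ln(1 - target).
    snr_threshold = -avg_snr * math.log(1.0 - target_outage)
    return math.log2(1.0 + snr_threshold)


avg_snr = 100.0  # hypothetical average SNR (20 dB, linear scale)
rate = rayleigh_max_rate(target_outage=1e-3, avg_snr=avg_snr)
print(rate, rayleigh_outage_probability(rate, avg_snr))
```

A baseline of this kind meets the outage target only when the real channel is actually Rayleigh distributed and the average SNR is known accurately.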

Timely and Massive Communication in 6G: Pragmatics, Learning, and Inference

Jun 30, 2023

Abstract:5G has expanded the traditional focus of wireless systems to embrace two new connectivity types: ultra-reliable low latency and massive communication. The technology context at the dawn of 6G is different from the past one for 5G, primarily due to the growing intelligence at the communicating nodes. This has driven the set of relevant communication problems beyond reliable transmission towards semantic and pragmatic communication. This paper puts the evolution of low-latency and massive communication towards 6G in the perspective of these new developments. At first, semantic/pragmatic communication problems are presented by drawing parallels to linguistics. We elaborate upon the relation of semantic communication to the information-theoretic problems of source/channel coding, while generalized real-time communication is put in the context of cyber-physical systems and real-time inference. The evolution of massive access towards massive closed-loop communication is elaborated upon, enabling interactive communication, learning, and cooperation among wireless sensors and actuators.

Goal-Oriented Scheduling in Sensor Networks with Application Timing Awareness

Jun 06, 2023

Abstract:Taking inspiration from linguistics, the communications theoretical community has recently shown significant interest in pragmatic, or goal-oriented, communication. In this paper, we tackle the problem of pragmatic communication with multiple clients with different, and potentially conflicting, objectives. We capture the goal-oriented aspect through the metric of Value of Information (VoI), which considers the estimation of the remote process as well as the timing constraints. However, the most common definition of VoI is simply the Mean Square Error (MSE) of the whole system state, regardless of the relevance for a specific client. Our work aims to overcome this limitation by including different summary statistics, i.e., value functions of the state, for separate clients, and a diversified query process on the client side, expressed through the fact that different applications may request different functions of the process state at different times. A query-aware Deep Reinforcement Learning (DRL) solution based on statically defined VoI can outperform naive approaches by 15-20%.

Over-the-Air Multi-View Pooling for Distributed Sensing

Feb 20, 2023

Abstract:Sensing is envisioned as a key network function of the 6G mobile networks. Artificial intelligence (AI)-empowered sensing fuses features of multiple sensing views from devices distributed in edge networks for the edge server to perform accurate inference. This process, known as multi-view pooling, creates a communication bottleneck due to multi-access by many devices. To alleviate this issue, we propose a task-oriented simultaneous access scheme for distributed sensing called Over-the-Air Pooling (AirPooling). The existing Over-the-Air Computing (AirComp) technique can be directly applied to enable Average-AirPooling by exploiting the waveform superposition property of a multi-access channel. However, despite being most popular in practice, the over-the-air maximization, called Max-AirPooling, is not AirComp realizable as AirComp addresses a limited subset of functions. We tackle the challenge by proposing the novel generalized AirPooling framework that can be configured to support both Max- and Average-AirPooling by controlling a configuration parameter. The former is realized by adding to AirComp the designed pre-processing at devices and post-processing at the server. To characterize the end-to-end sensing performance, the theory of classification margin is applied to relate the classification accuracy and the AirPooling error. Furthermore, the analysis reveals an inherent tradeoff of Max-AirPooling between the accuracy of the pooling-function approximation and the effectiveness of noise suppression. Using the tradeoff, we optimize the configuration parameter of Max-AirPooling, yielding a sub-optimal closed-form method of adaptive parametric control. Experimental results obtained on real-world datasets show that AirPooling provides sensing accuracies close to those achievable by the traditional digital air interface but dramatically reduces the communication latency.
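
One generic way to move between average and max pooling with a single parameter is the generalized (power) mean: each device raises its feature to the power `p`, the results are averaged, and the receiver takes the `p`-th root. Whether this matches the pre-processing and post-processing designed in the paper is an assumption; the sketch below is noise-free, and the function name and feature values are hypothetical.

```python
def power_mean_pooling(features, p):
    """Generalized mean of non-negative features with exponent p >= 1."""
    pre = [x ** p for x in features]   # pre-processing at each device
    averaged = sum(pre) / len(pre)     # averaging step (noise not modeled here)
    return averaged ** (1.0 / p)       # post-processing at the server


features = [0.2, 0.5, 0.9, 0.4]  # hypothetical non-negative feature values
avg_like = power_mean_pooling(features, p=1)   # ordinary average
max_like = power_mean_pooling(features, p=50)  # approaches max(features)
print(avg_like, max_like, max(features))
```

With `p=1` the result is the plain average, and as `p` grows it approaches the maximum from below. This sketch does not model channel noise, so it does not capture the noise-suppression side of the tradeoff described in the abstract.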

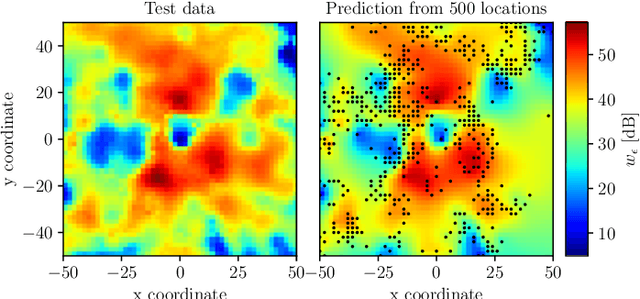

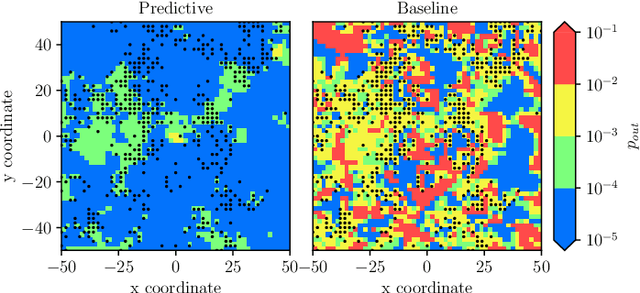

Predictive Rate Selection for Ultra-Reliable Communication using Statistical Radio Maps

May 30, 2022

Abstract:This paper proposes exploiting the spatial correlation of wireless channel statistics beyond the conventional received signal strength maps by constructing statistical radio maps to predict any relevant channel statistics to assist communications. Specifically, from stored channel samples acquired by previous users in the network, we use Gaussian processes (GPs) to estimate quantiles of the channel distribution at a new position using a non-parametric model. This prior information is then used to select the transmission rate for some target level of reliability. The approach is tested with synthetic data, simulated from urban micro-cell environments, highlighting how the proposed solution helps to reduce the training estimation phase, which is especially attractive for the tight latency constraints inherent to ultra-reliable low-latency (URLLC) deployments.

Latency-Constrained Prediction of mmWave/THz Link Blockages through Meta-Learning

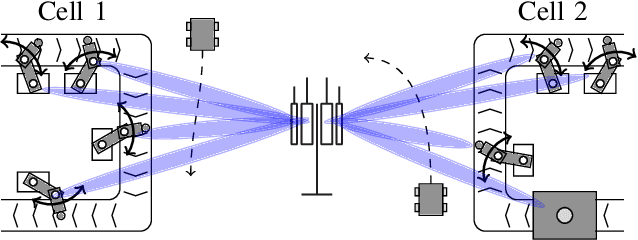

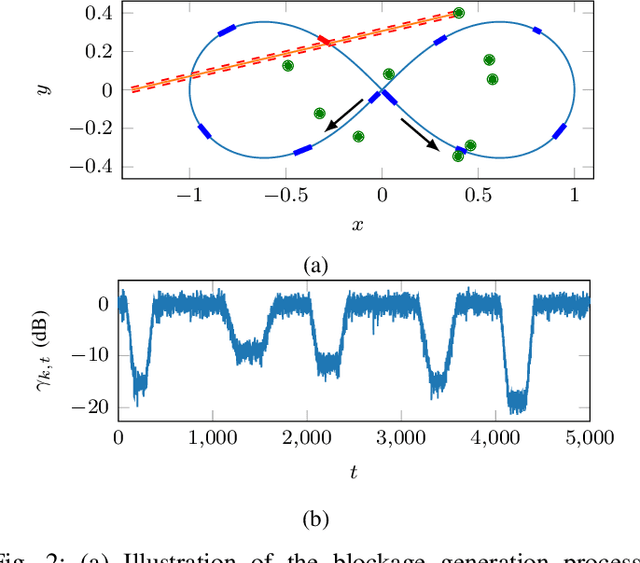

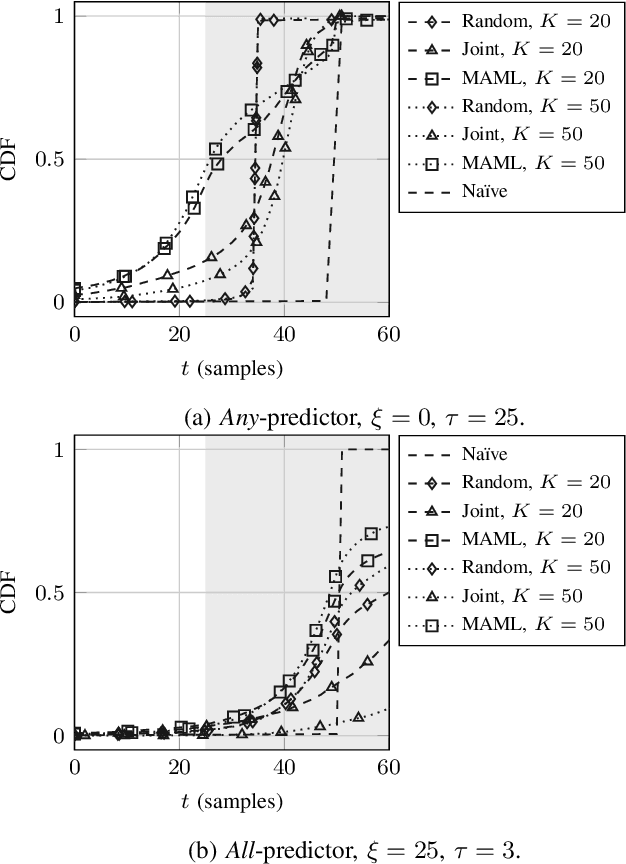

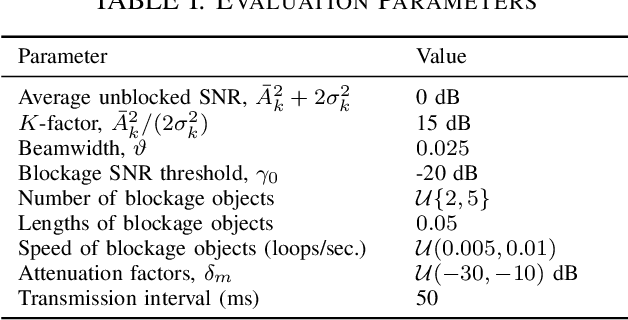

Jun 14, 2021

Abstract:Wireless applications that use high-reliability low-latency links depend critically on the capability of the system to predict link quality. This dependence is especially acute at the high carrier frequencies used by mmWave and THz systems, where the links are susceptible to blockages. Predicting blockages with high reliability requires a large number of data samples to train effective machine learning modules. With the aim of mitigating data requirements, we introduce a framework based on meta-learning, whereby data from distinct deployments are leveraged to optimize a shared initialization that decreases the data set size necessary for any new deployment. Predictors of two different events are studied: (1) at least one blockage occurs in a time window, and (2) the link is blocked for the entire time window. The results show that an RNN-based predictor trained using meta-learning is able to predict blockages after observing fewer samples than predictors trained using standard methods.
