Eungchang Mason Lee

LODESTAR: Degeneracy-Aware LiDAR-Inertial Odometry with Adaptive Schmidt-Kalman Filter and Data Exploitation

Nov 12, 2025

Abstract:LiDAR-inertial odometry (LIO) has been widely used in robotics due to its high accuracy. However, its performance degrades in degenerate environments, such as long corridors and high-altitude flights, where LiDAR measurements are imbalanced or sparse, leading to ill-posed state estimation. In this letter, we present LODESTAR, a novel LIO method that addresses these degeneracies through two key modules: a degeneracy-aware adaptive Schmidt-Kalman filter (DA-ASKF) and degeneracy-aware data exploitation (DA-DE). DA-ASKF employs a sliding window to utilize past states and measurements as additional constraints. Specifically, it introduces degeneracy-aware sliding modes that adaptively classify states as active or fixed based on their degeneracy level. Using the Schmidt-Kalman update, it partially optimizes active states while preserving fixed states. These fixed states influence the update of active states via their covariances, serving as reference anchors, akin to a lodestar. Additionally, DA-DE prunes less-informative measurements from active states and selectively exploits measurements from fixed states, based on their localizability contribution and the condition number of the Jacobian matrix. Consequently, DA-ASKF enables degeneracy-aware constrained optimization and mitigates measurement sparsity, while DA-DE addresses measurement imbalance. Experimental results show that LODESTAR outperforms existing LiDAR-based odometry methods and degeneracy-aware modules in terms of accuracy and robustness under various degenerate conditions.
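To illustrate how a Schmidt-Kalman update can refine active states while leaving fixed states untouched, the following is a minimal scalar sketch of the textbook Schmidt-Kalman (consider) update. It is not the authors' DA-ASKF: the function name, the one-active/one-fixed state layout, and the linear measurement model `z = h_x * x + h_p * p + noise` are assumptions made for illustration only.

```python
def schmidt_kalman_update(x, p, P_xx, P_xp, P_pp, h_x, h_p, r, z):
    """Scalar Schmidt-Kalman update (hypothetical helper, illustration only).

    x, p    : active and fixed state estimates
    P_xx    : variance of the active state
    P_xp    : cross-covariance between active and fixed states
    P_pp    : variance of the fixed state
    h_x, h_p: measurement Jacobian entries for x and p
    r       : measurement noise variance
    z       : scalar measurement
    """
    # The innovation variance uses the full covariance, including the fixed state.
    s = h_x * h_x * P_xx + 2.0 * h_x * h_p * P_xp + h_p * h_p * P_pp + r

    # Gain for the active state only; the fixed state's gain is forced to zero.
    k_x = (P_xx * h_x + P_xp * h_p) / s

    innovation = z - (h_x * x + h_p * p)
    x_new = x + k_x * innovation

    # The fixed state still shapes the result through P_xp and P_pp.
    P_xx_new = P_xx - k_x * s * k_x
    P_xp_new = P_xp - k_x * (h_x * P_xp + h_p * P_pp)

    # p and P_pp are returned unchanged: the fixed state acts as an anchor.
    return x_new, p, P_xx_new, P_xp_new, P_pp


result = schmidt_kalman_update(
    x=0.0, p=1.0, P_xx=1.0, P_xp=0.2, P_pp=0.5,
    h_x=1.0, h_p=1.0, r=0.1, z=1.5,
)
```

In this sketch the fixed state's estimate and variance pass through unchanged, while its uncertainty still enters the innovation variance and the cross-covariance update, which is the sense in which a fixed state influences active states "via their covariances."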

Quatro++: Robust Global Registration Exploiting Ground Segmentation for Loop Closing in LiDAR SLAM

Nov 02, 2023

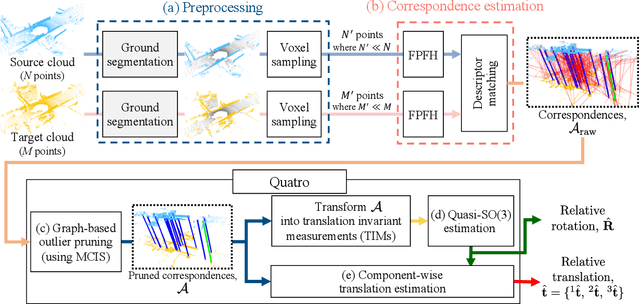

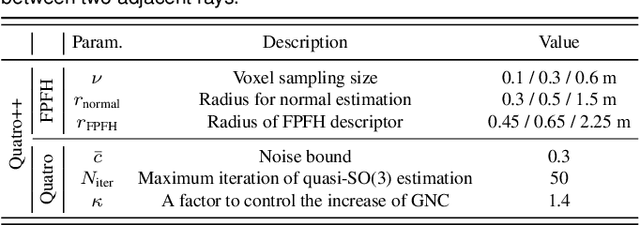

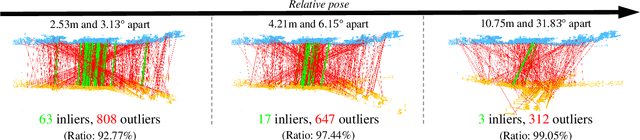

Abstract:Global registration is a fundamental task that estimates the relative pose between two viewpoints of 3D point clouds. However, there are two issues that degrade the performance of global registration in LiDAR SLAM: one is sparsity and the other is degeneracy. The sparsity issue is caused by the sparse characteristics of the 3D point cloud measurements in a mechanically spinning LiDAR sensor. The degeneracy issue sometimes occurs because the outlier-rejection methods reject too many correspondences, leaving fewer than three inliers. These two issues become more severe as the pose discrepancy between the two viewpoints of 3D point clouds grows. To tackle these problems, we propose a robust global registration framework, called Quatro++. Extending our previous work that solely focused on the global registration itself, we address the robust global registration in terms of the loop closing in LiDAR SLAM. To this end, ground segmentation is exploited to achieve robust global registration. Through the experiments, we demonstrate that our proposed method shows a higher success rate than the state-of-the-art global registration methods, overcoming the sparsity and degeneracy issues. In addition, we show that ground segmentation significantly helps to increase the success rate for ground vehicles. Finally, we apply our proposed method to the loop closing module in LiDAR SLAM and confirm that the quality of the loop constraints is improved, showing more precise mapping results. Therefore, the experimental evidence corroborates the suitability of our method as an initial alignment in the loop closing. Our code is available at https://quatro-plusplus.github.io.

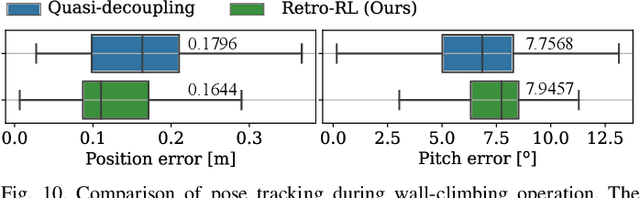

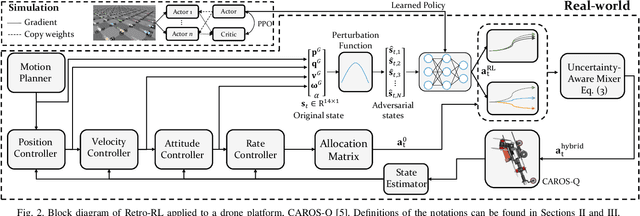

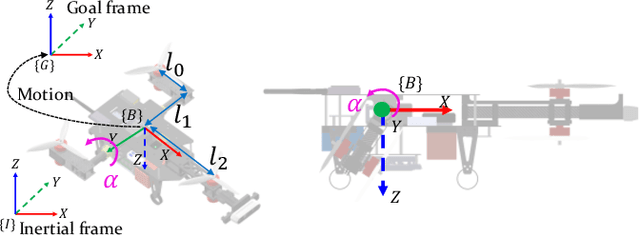

Retro-RL: Reinforcing Nominal Controller With Deep Reinforcement Learning for Tilting-Rotor Drones

Jul 07, 2022

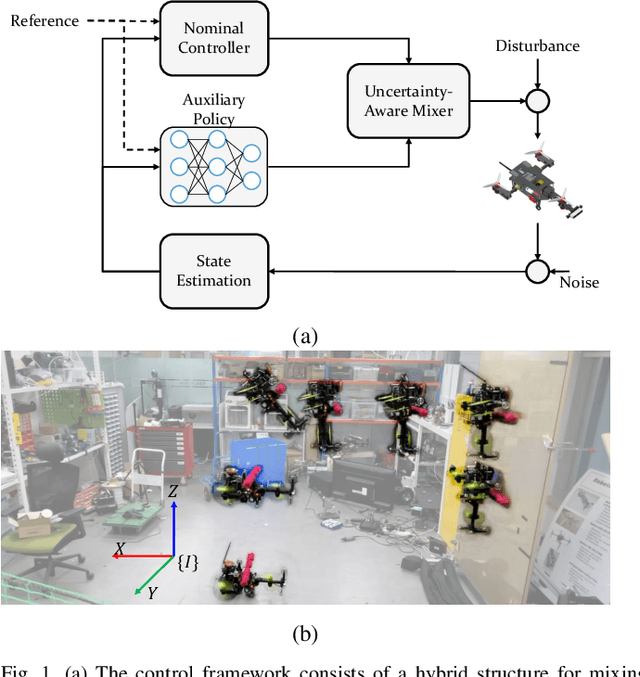

Abstract:Studies that broaden drone applications into complex tasks require a stable control framework. Recently, deep reinforcement learning (RL) algorithms have been exploited in many studies for robot control to accomplish complex tasks. Unfortunately, deep RL algorithms might not be suitable for being deployed directly into a real-world robot platform due to the difficulty in interpreting the learned policy and lack of stability guarantee, especially for a complex task such as a wall-climbing drone. This paper proposes a novel hybrid architecture that reinforces a nominal controller with a robust policy learned using a model-free deep RL algorithm. The proposed architecture employs an uncertainty-aware control mixer to preserve guaranteed stability of a nominal controller while using the extended robust performance of the learned policy. The policy is trained in a simulated environment with thousands of domain randomizations to achieve robust performance over diverse uncertainties. The performance of the proposed method was verified through real-world experiments and then compared with a conventional controller and the state-of-the-art learning-based controller trained with a vanilla deep RL algorithm.
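The abstract does not spell out how the uncertainty-aware control mixer combines the two controllers. As a rough illustration of the general idea only, the sketch below blends a nominal command with a learned-policy command using a weight that shrinks as an uncertainty estimate grows. The linear weighting rule, the `threshold` parameter, and the function name `mix_controls` are all hypothetical and are not taken from the paper.

```python
def mix_controls(u_nominal, u_policy, uncertainty, threshold=1.0):
    """Blend nominal and learned control commands (hypothetical rule).

    The weight on the learned policy falls linearly from 1 to 0 as the
    uncertainty rises from 0 to `threshold`; at or above the threshold
    the output is exactly the nominal controller's command.
    """
    if threshold <= 0.0:
        raise ValueError("threshold must be positive")
    if len(u_nominal) != len(u_policy):
        raise ValueError("command vectors must have the same length")

    # Weight on the learned policy, clamped to [0, 1].
    w = 1.0 - uncertainty / threshold
    w = min(1.0, max(0.0, w))

    return [(1.0 - w) * un + w * up for un, up in zip(u_nominal, u_policy)]


# Half-way uncertainty gives an even blend of the two commands.
blended = mix_controls([1.0, 0.0], [0.0, 2.0], uncertainty=0.5)
```

Under this assumed rule, the mixer reduces to the nominal controller whenever the uncertainty estimate is high, which is one simple way to keep the nominal controller's behavior as a fallback.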

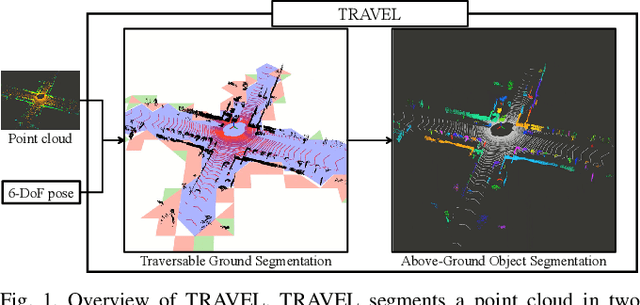

TRAVEL: Traversable Ground and Above-Ground Object Segmentation Using Graph Representation of 3D LiDAR Scans

Jun 07, 2022

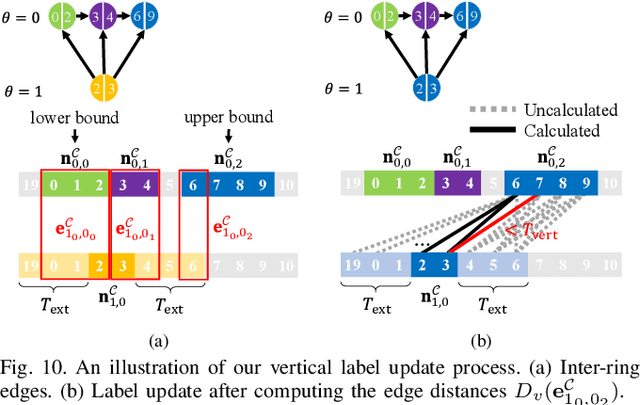

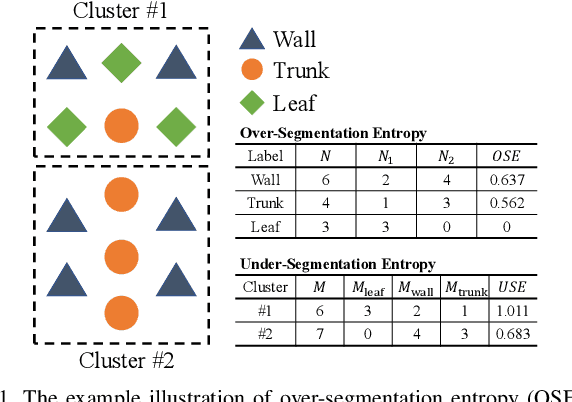

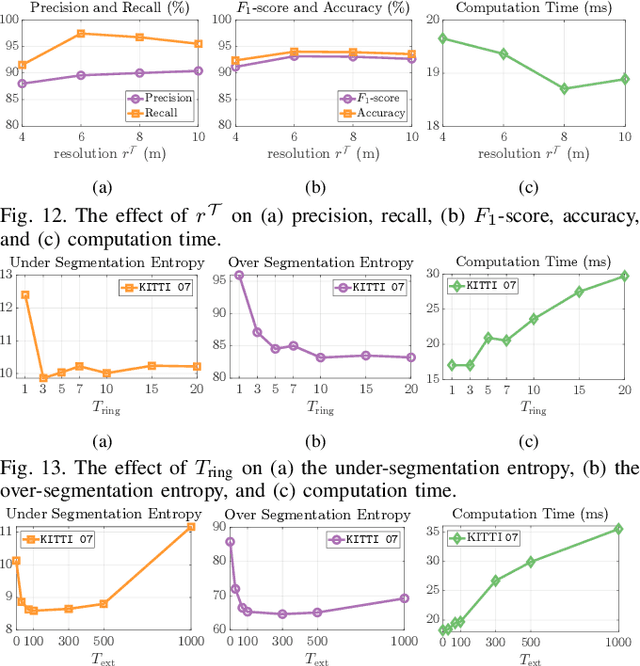

Abstract:Perception of traversable regions and objects of interest from a 3D point cloud is one of the critical tasks in autonomous navigation. A ground vehicle needs to look for traversable terrains that are explorable by wheels. Then, to make safe navigation decisions, the objects positioned on those terrains have to be segmented. However, over-segmentation and under-segmentation can negatively influence such navigation decisions. To that end, we propose TRAVEL, which performs traversable ground detection and object clustering simultaneously using the graph representation of a 3D point cloud. To segment the traversable ground, a point cloud is encoded into a graph structure, the tri-grid field, which treats each tri-grid as a node. Then, the traversable regions are searched and redefined by examining local convexity and concavity of edges that connect nodes. On the other hand, our above-ground object segmentation employs a graph structure by representing a group of horizontally neighboring 3D points in a spherical-projection space as a node and the vertical/horizontal relationship between nodes as an edge. Fully leveraging the node-edge structure, the above-ground segmentation ensures real-time operation and mitigates over-segmentation. Through experiments using simulations, urban scenes, and our own datasets, we have demonstrated that our proposed traversable ground segmentation algorithm outperforms other state-of-the-art methods in terms of the conventional metrics and that our newly proposed evaluation metrics are meaningful for assessing the above-ground segmentation. We will make the code and our own dataset available to the public at https://github.com/url-kaist/TRAVEL.

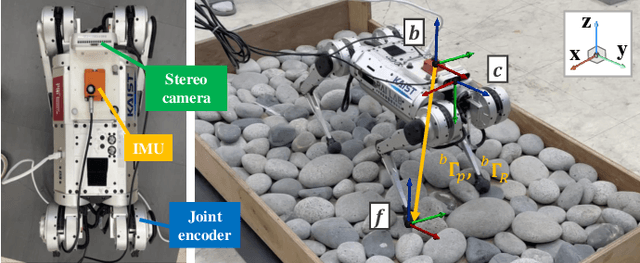

STEP: State Estimator for Legged Robots Using a Preintegrated Foot Velocity Factor

Feb 11, 2022

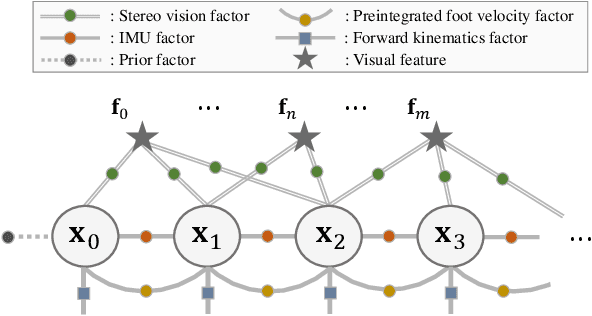

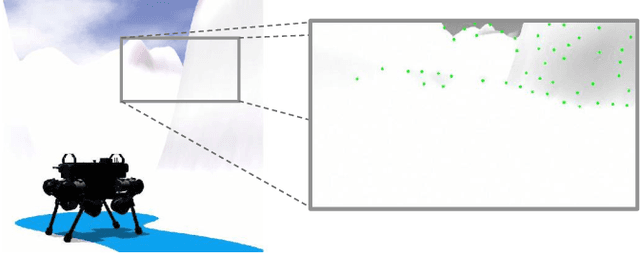

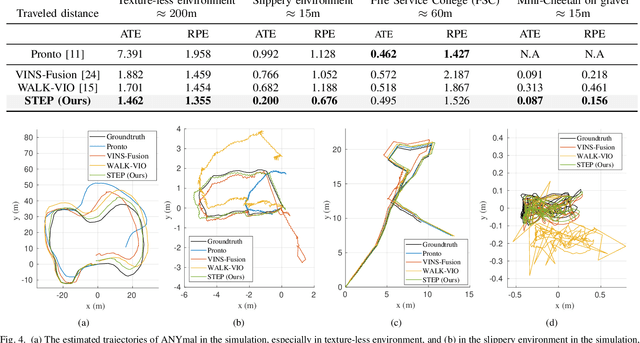

Abstract:We propose a novel state estimator for legged robots, STEP, achieved through a novel preintegrated foot velocity factor. In the preintegrated foot velocity factor, the usual non-slip assumption is not adopted. Instead, the end effector velocity becomes observable by exploiting the body speed obtained from a stereo camera. In other words, the preintegrated end effector's pose can be estimated. Another advantage of our approach is that it eliminates the necessity for a contact detection step, unlike the typical approaches. The proposed method has also been validated in harsh-environment simulations and real-world experiments containing uneven or slippery terrains.

REAL: Rapid Exploration with Active Loop-Closing toward Large-Scale 3D Mapping using UAVs

Aug 05, 2021

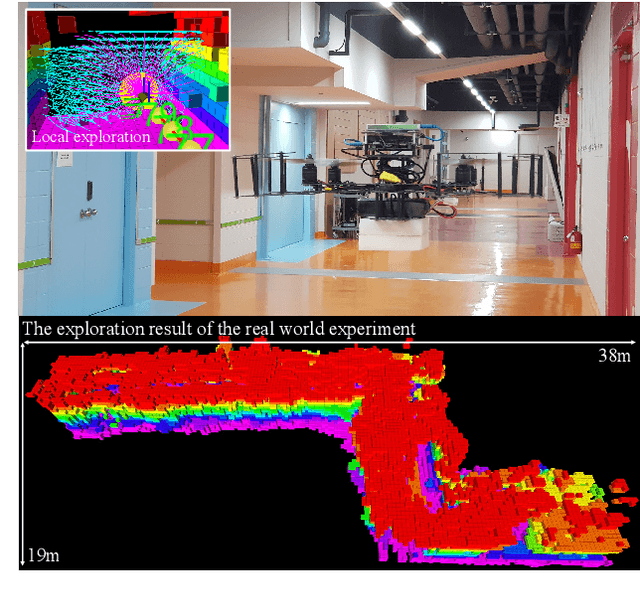

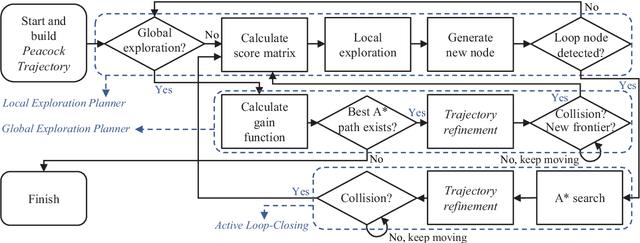

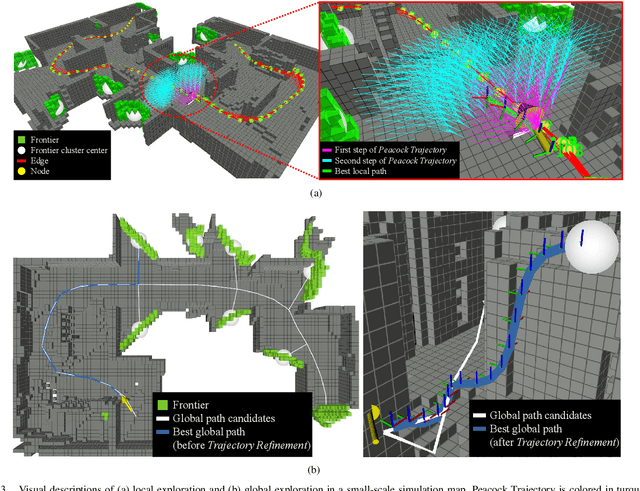

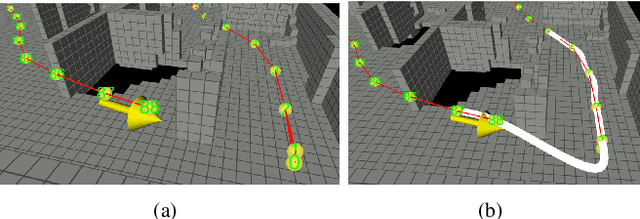

Abstract:Exploring an unknown environment without colliding with obstacles is essential for autonomous vehicles performing diverse missions such as structural inspections, rescues, deliveries, and so forth. Therefore, unmanned aerial vehicles (UAVs), which are fast, agile, and have high degrees of freedom, have been widely used. However, previous approaches have two limitations. First, they may not be appropriate for exploring large-scale environments because they mainly depend on random sampling-based path planning that causes unnecessary movements. Second, they assume the pose estimation is accurate enough, which is the most critical factor in obtaining an accurate map. In this paper, to explore and map unknown large-scale environments rapidly and accurately, we propose a novel exploration method that combines the pre-calculated Peacock Trajectory with graph-based global exploration and active loop-closing. Because the two-step trajectory that considers the kinodynamics of UAVs is used, obstacle avoidance is guaranteed in a receding-horizon manner. In addition, local exploration that considers the frontier and global exploration based on the graph maximize the speed of exploration by minimizing unnecessary revisiting. Furthermore, by actively closing the loop based on the likelihood, pose estimation performance is improved. The proposed method's performance is verified by exploring 3D simulation environments in comparison with the state-of-the-art methods. Finally, the proposed approach is validated in a real-world experiment.
