Ethan Chang

FrontierScience: Evaluating AI's Ability to Perform Expert-Level Scientific Tasks

Jan 29, 2026

Abstract:We introduce FrontierScience, a benchmark evaluating expert-level scientific reasoning in frontier language models. Recent model progress has nearly saturated existing science benchmarks, which often rely on multiple-choice knowledge questions or already published information. FrontierScience addresses this gap through two complementary tracks: (1) Olympiad, consisting of international olympiad problems at the level of IPhO, IChO, and IBO, and (2) Research, consisting of PhD-level, open-ended problems representative of sub-tasks in scientific research. FrontierScience contains several hundred questions (including 160 in the open-sourced gold set) covering subfields across physics, chemistry, and biology, from quantum electrodynamics to synthetic organic chemistry. All Olympiad problems are originally produced by international Olympiad medalists and national team coaches to ensure standards of difficulty, originality, and factuality. All Research problems are research sub-tasks written and verified by PhD scientists (doctoral candidates, postdoctoral researchers, or professors). For Research, we introduce a granular rubric-based evaluation framework to assess model capabilities throughout the process of solving a research task, rather than judging only a standalone final answer.

Knowledge Tagging with Large Language Model based Multi-Agent System

Sep 12, 2024

Abstract:Knowledge tagging for questions is vital in modern intelligent educational applications, including learning progress diagnosis, practice question recommendations, and course content organization. Traditionally, these annotations have been performed by pedagogical experts, as the task demands not only a deep semantic understanding of question stems and knowledge definitions but also a strong ability to link problem-solving logic with relevant knowledge concepts. With the advent of advanced natural language processing (NLP) algorithms, such as pre-trained language models and large language models (LLMs), pioneering studies have explored automating the knowledge tagging process using various machine learning models. In this paper, we investigate the use of a multi-agent system to address the limitations of previous algorithms, particularly in handling complex cases involving intricate knowledge definitions and strict numerical constraints. By demonstrating its superior performance on the publicly available math question knowledge tagging dataset, MathKnowCT, we highlight the significant potential of an LLM-based multi-agent system in overcoming the challenges that previous methods have encountered. Finally, through an in-depth discussion of the implications of automating knowledge tagging, we underscore the promising results of deploying LLM-based algorithms in educational contexts.

Graph Learning Augmented Heterogeneous Graph Neural Network for Social Recommendation

Sep 24, 2021

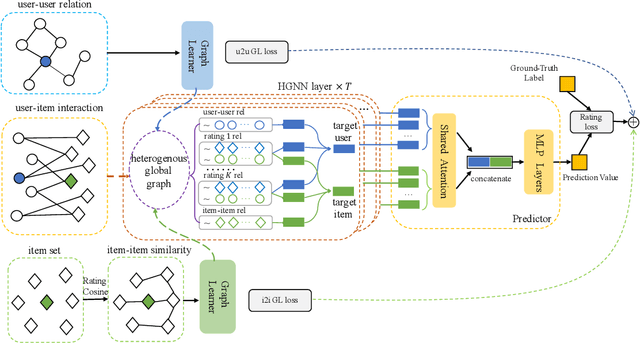

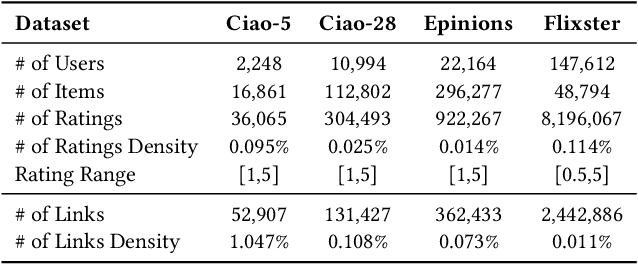

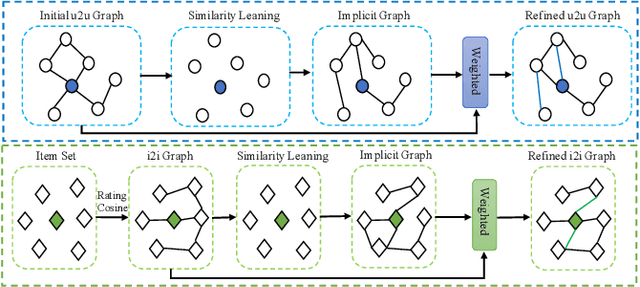

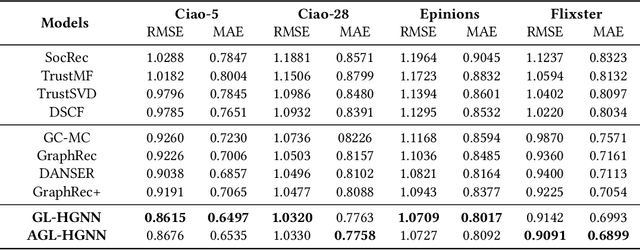

Abstract:Social recommendation based on social networks has achieved great success in improving the performance of recommender systems. Since social networks (user-user relations) and user-item interactions are both naturally represented as graph-structured data, Graph Neural Networks (GNNs) have been widely applied to social recommendation. In this work, we propose an end-to-end heterogeneous global graph learning framework, namely Graph Learning Augmented Heterogeneous Graph Neural Network (GL-HGNN), for social recommendation. GL-HGNN aims to learn a heterogeneous global graph that makes full use of user-user relations, user-item interactions and item-item similarities in a unified perspective. To this end, we design a Graph Learner (GL) method to learn and optimize user-user and item-item connections separately. Moreover, we employ a Heterogeneous Graph Neural Network (HGNN) to capture the high-order complex semantic relations from our learned heterogeneous global graph. To scale up the computation of graph learning, we further present the Anchor-based Graph Learner (AGL) to reduce computational complexity. Extensive experiments on four real-world datasets demonstrate the effectiveness of our model.
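The abstract does not spell out how the Anchor-based Graph Learner works, so the following is only a rough sketch of one common anchor-based idea, not the paper's method: instead of scoring all n × n node pairs, each node is scored against a small set of s anchors, and information flows node → anchor → node. The function names (`anchor_affinity`, `propagate`), the cosine similarity, and the random anchor choice are all assumptions made for illustration.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def anchor_affinity(embeddings, num_anchors, seed=0):
    """Score every node against a few randomly chosen anchor nodes.

    Returns an n x s affinity matrix (negative similarities clipped to 0)
    and the anchor indices. Random anchor selection is an assumption.
    """
    rng = random.Random(seed)
    anchor_ids = rng.sample(range(len(embeddings)), num_anchors)
    affinity = [
        [max(cosine(e, embeddings[a]), 0.0) for a in anchor_ids]
        for e in embeddings
    ]
    return affinity, anchor_ids


def propagate(affinity, features):
    """Two-step message passing through anchors, never forming an n x n graph."""
    n, s, d = len(affinity), len(affinity[0]), len(features[0])
    # Step 1: each anchor takes a weighted average of node features.
    anchor_msg = []
    for j in range(s):
        weight = sum(affinity[i][j] for i in range(n)) or 1.0
        anchor_msg.append(
            [sum(affinity[i][j] * features[i][k] for i in range(n)) / weight
             for k in range(d)]
        )
    # Step 2: each node takes a weighted average of anchor messages.
    out = []
    for i in range(n):
        weight = sum(affinity[i]) or 1.0
        out.append(
            [sum(affinity[i][j] * anchor_msg[j][k] for j in range(s)) / weight
             for k in range(d)]
        )
    return out
```

Under these assumptions the cost of one propagation step grows with n · s · d rather than n² · d, which is the kind of saving an anchor-based learner is typically after when s is much smaller than n.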

Heterogeneous Global Graph Neural Networks for Personalized Session-based Recommendation

Jul 08, 2021

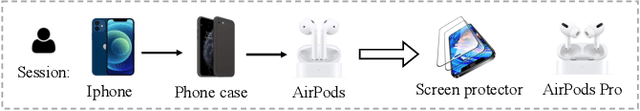

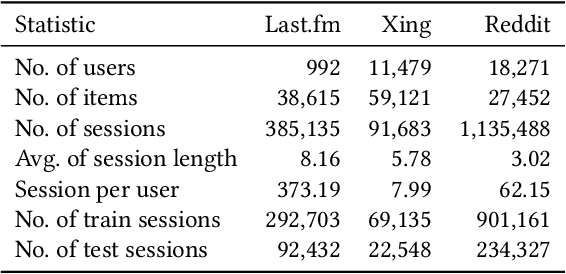

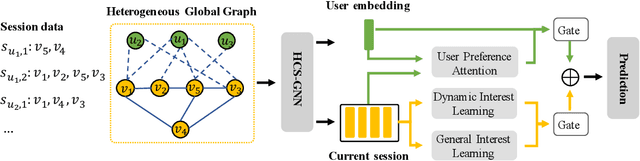

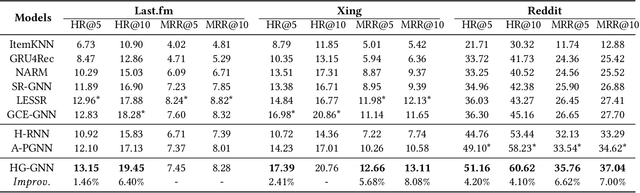

Abstract:Predicting the next interaction of a short-term interaction session is a challenging task in session-based recommendation. Almost all existing works rely on item transition patterns, and neglect the impact of user historical sessions while modeling user preference, which often leads to non-personalized recommendation. Additionally, existing personalized session-based recommenders capture user preference based only on the sessions of the current user, but ignore the useful item-transition patterns from other users' historical sessions. To address these issues, we propose novel Heterogeneous Global Graph Neural Networks (HG-GNN) to exploit the item transitions over all sessions in a subtle manner for better inferring user preference from the current and historical sessions. To effectively exploit the item transitions over all sessions from users, we propose a novel heterogeneous global graph that contains item transitions of sessions, user-item interactions and global co-occurrence items. Moreover, to capture user preference from sessions comprehensively, we propose to learn two levels of user representations from the global graph via two graph augmented preference encoders. Specifically, we design a novel heterogeneous graph neural network (HGNN) on the heterogeneous global graph to learn the long-term user preference and item representations with rich semantics. Based on the HGNN, we propose the Current Preference Encoder and the Historical Preference Encoder to capture the different levels of user preference from the current and historical sessions, respectively. To achieve personalized recommendation, we integrate the representations of the user's current preference and historical interests to generate the final user preference representation. Extensive experimental results on three real-world datasets show that our model outperforms other state-of-the-art methods.
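As a rough illustration of the three edge types the abstract names (item transitions, user-item interactions, global co-occurrence), the sketch below collects them from raw session logs. It is a minimal sketch under stated assumptions, not the paper's construction: the function name `build_global_graph`, the count-based weights, and the `top_k` co-occurrence cutoff are hypothetical choices made here.

```python
from collections import Counter
from itertools import combinations


def build_global_graph(sessions, top_k=2):
    """Collect edges of a heterogeneous global graph from session logs.

    sessions: list of (user_id, [item_id, ...]) with items in click order.
    Item ids are assumed to be mutually comparable (e.g. all ints).
    """
    transitions = Counter()   # directed item -> next-item counts
    interactions = Counter()  # (user, item) interaction counts
    cooccur = Counter()       # unordered item-pair counts within a session

    for user, items in sessions:
        for a, b in zip(items, items[1:]):
            if a != b:  # skip self-loops from repeated clicks
                transitions[(a, b)] += 1
        for item in items:
            interactions[(user, item)] += 1
        for a, b in combinations(sorted(set(items)), 2):
            cooccur[(a, b)] += 1

    # Keep only the top_k most frequent co-occurring neighbours per item
    # (hypothetical pruning rule; ties broken by item id).
    neighbours = {}
    for (a, b), count in cooccur.items():
        neighbours.setdefault(a, []).append((count, b))
        neighbours.setdefault(b, []).append((count, a))
    similar = {
        item: [n for _, n in sorted(pairs, key=lambda p: (-p[0], p[1]))[:top_k]]
        for item, pairs in neighbours.items()
    }

    return {
        "transitions": dict(transitions),
        "interactions": dict(interactions),
        "similar": similar,
    }
```

For example, `build_global_graph([("u1", [1, 2, 3]), ("u2", [2, 3])])` yields transition edges pooled across both users, which is the point of a global graph: item-transition evidence from other users' sessions becomes available when modeling any one user.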
