Erik Bodin

Linear combinations of latents in diffusion models: interpolation and beyond

Aug 16, 2024

Abstract: Generative models are crucial for applications like data synthesis and augmentation. Diffusion, Flow Matching and Continuous Normalizing Flows have shown effectiveness across various modalities, and rely on Gaussian latent variables for generation. As any generated object is directly associated with a particular latent variable, we can manipulate the variables to exert control over the generation process. However, standard approaches for combining latent variables, such as spherical interpolation, only apply or work well in special cases. Moreover, current methods for obtaining low-dimensional representations of the data, important for e.g. surrogate models for search and creative applications, are network and data modality specific. In this work we show that the standard methods to combine variables do not yield intermediates following the distribution the models are trained to expect. We propose Combination of Gaussian variables (COG), a novel interpolation method that addresses this and is easy to implement, yet matches or improves upon current methods. COG addresses linear combinations in general and, as we demonstrate, also supports other operations, including defining subspaces of the latent space. This simplifies the creation of expressive low-dimensional spaces of high-dimensional objects using generative models based on Gaussian latents.
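The distribution mismatch mentioned in the abstract can be illustrated with a small numerical sketch. It assumes the latents are independent draws from a standard Gaussian, in which case a weighted sum with weights w has per-coordinate variance equal to the sum of squared weights, so a plain average of two latents has variance 0.5 rather than 1. Dividing by the square root of that sum restores unit variance. The function names below are hypothetical, and this rescaling is only an illustration of the general idea, not a reproduction of the COG method.

```python
import numpy as np

def linear_combination(latents, weights):
    """Plain weighted sum of latent vectors (one latent per row)."""
    return np.asarray(weights, dtype=float) @ np.asarray(latents, dtype=float)

def variance_preserving_combination(latents, weights):
    """Weighted sum rescaled by 1 / sqrt(sum of squared weights).

    Assumption: the rows of `latents` are independent N(0, I) draws, so the
    rescaled result is again N(0, I). Illustrative only; not the COG method.
    """
    w = np.asarray(weights, dtype=float)
    return linear_combination(latents, w) / np.sqrt(np.sum(w ** 2))

rng = np.random.default_rng(0)
dim = 10_000
z = rng.standard_normal((2, dim))   # two independent Gaussian latents
w = [0.5, 0.5]                      # midpoint of a linear interpolation

naive_var = linear_combination(z, w).var()                    # close to 0.5
rescaled_var = variance_preserving_combination(z, w).var()    # close to 1.0
```

The naive midpoint has roughly half the variance the model would see during training, which is one way to understand why intermediates from straightforward linear interpolation can look degraded.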

Making Differentiable Architecture Search less local

Apr 21, 2021

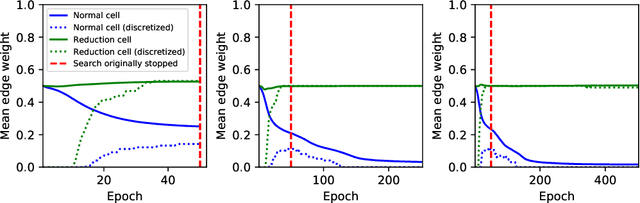

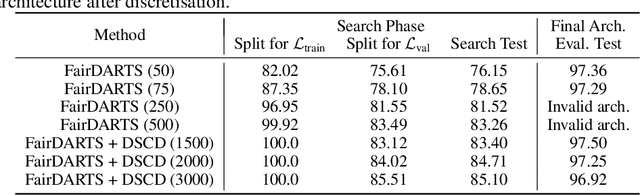

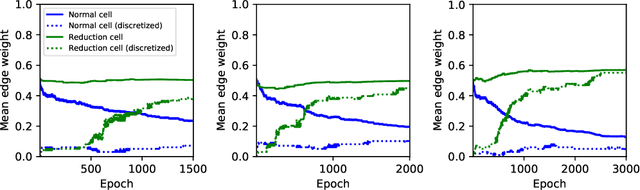

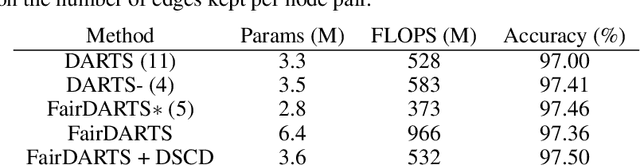

Abstract: Neural architecture search (NAS) is a recent methodology for automating the design of neural network architectures. Differentiable neural architecture search (DARTS) is a promising NAS approach that dramatically increases search efficiency. However, it has been shown to suffer from performance collapse, where the search often leads to detrimental architectures. Many recent works try to address this issue of DARTS by identifying indicators for early stopping, regularising the search objective to reduce the dominance of some operations, or changing the parameterisation of the search problem. In this work, we hypothesise that performance collapses can arise from poor local optima around typical initial architectures and weights. We address this issue by developing a more global optimisation scheme that is able to better explore the space without changing the DARTS problem formulation. Our experiments show that our changes in the search algorithm allow the discovery of architectures with both better test performance and fewer parameters.

Black-box density function estimation using recursive partitioning

Oct 26, 2020

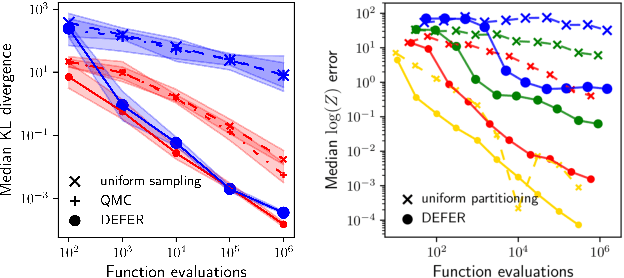

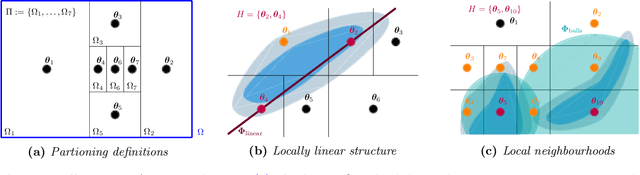

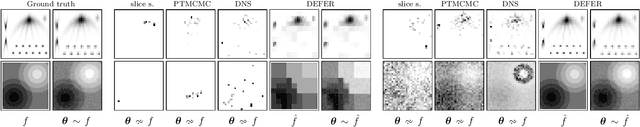

Abstract: We present a novel approach to Bayesian inference and general Bayesian computation that is defined through a recursive partitioning of the sample space. It does not rely on gradients, does not require any problem-specific tuning, and is asymptotically exact for any density function with a bounded domain. The output is an approximation to the whole density function, including the normalization constant, via partitions organized in efficient data structures. This allows for evidence estimation, as well as approximate posteriors that allow for fast sampling and fast evaluations of the density. It performs competitively with recent state-of-the-art methods on synthetic and real-world problem examples, including parameter inference for gravitational-wave physics.
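To make the idea of estimating a normalization constant from a partition concrete, here is a deliberately simple one-dimensional sketch. It is an assumption-laden toy, not the algorithm from the paper: it repeatedly bisects the cell with the largest estimated mass (width times density at the midpoint) and sums the cell masses. The function name `partition_mass` and the greedy splitting rule are hypothetical choices made for illustration.

```python
import heapq
import math

def partition_mass(density, lo, hi, n_splits=200):
    """Estimate the integral of `density` over [lo, hi] by greedy bisection.

    Toy illustration only: each cell's mass is width * density(midpoint),
    and the cell with the largest estimated mass is split next.
    """
    def cell_mass(a, b):
        return (b - a) * density(0.5 * (a + b))

    # Max-heap on mass, implemented by storing negated masses.
    heap = [(-cell_mass(lo, hi), lo, hi)]
    for _ in range(n_splits):
        _, a, b = heapq.heappop(heap)
        mid = 0.5 * (a + b)
        heapq.heappush(heap, (-cell_mass(a, mid), a, mid))
        heapq.heappush(heap, (-cell_mass(mid, b), mid, b))

    cells = [(a, b, -neg_mass) for neg_mass, a, b in heap]
    return sum(mass for _, _, mass in cells), cells

def unnormalised_gaussian(x):
    """Gaussian density without its 1 / sqrt(2 * pi) factor."""
    return math.exp(-0.5 * x * x)

estimate, cells = partition_mass(unnormalised_gaussian, -5.0, 5.0)
reference = math.sqrt(2.0 * math.pi)  # exact integral over the whole real line
```

The list of cells doubles as a piecewise-constant approximation of the density, which can be sampled from by picking a cell in proportion to its mass. One limitation of this greedy rule is that wide, low-mass tail cells are never refined, so the estimate tends to undershoot slightly.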

Compositional uncertainty in deep Gaussian processes

Sep 17, 2019

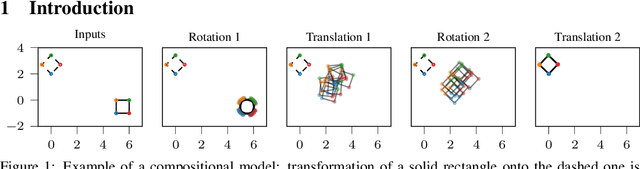

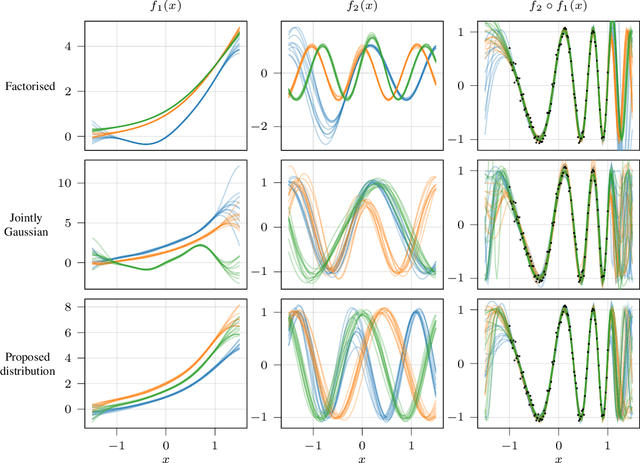

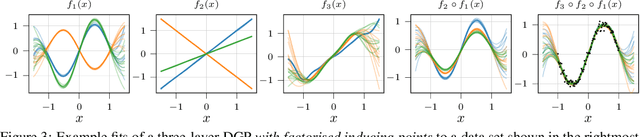

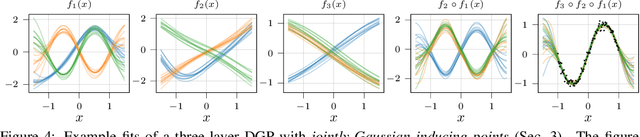

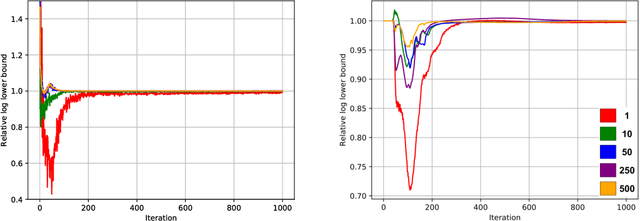

Abstract: Gaussian processes (GPs) are nonparametric priors over functions, and fitting a GP to the data implies computing the posterior distribution of the functions consistent with the observed data. Similarly, deep Gaussian processes (DGPs) [Damianou:2013] should allow us to compute the posterior distribution of compositions of multiple functions giving rise to the observations. However, exact Bayesian inference is usually intractable for DGPs, motivating the use of various approximations. We show that the simplifying assumptions for a common type of variational inference approximation imply that all but one layer of a DGP collapse to a deterministic transformation. We argue that such an inference scheme is suboptimal, as it does not take advantage of the potential of the model to discover the compositional structure in the data, and propose possible modifications addressing this issue.

Modulated Bayesian Optimization using Latent Gaussian Process Models

Jun 26, 2019

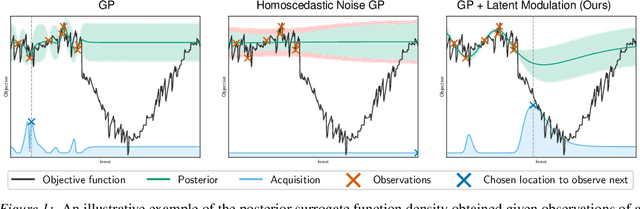

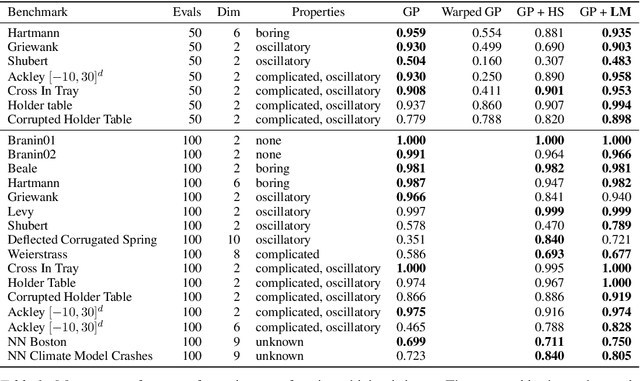

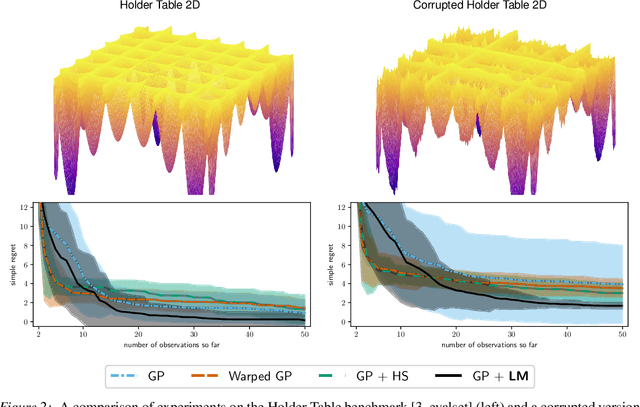

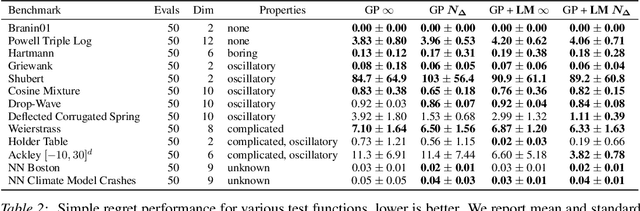

Abstract: We present an approach to Bayesian Optimization that allows for robust search strategies over a large class of challenging functions. Our method is motivated by the belief that the trends useful to exploit in search of the optimum typically are a subset of the characteristics of the true objective function. At the core of our approach is the use of a Latent Gaussian Process Regression model that allows us to modulate the input domain with an orthogonal latent space. Using this latent space we can encapsulate local information about each observed data point that can be used to guide the search problem. We show experimentally that our method can be used to significantly improve performance on challenging benchmarks.

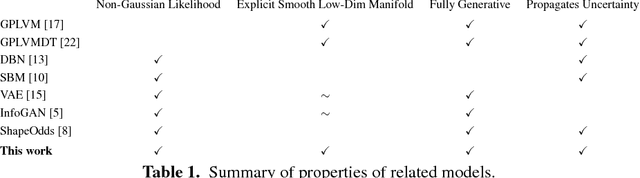

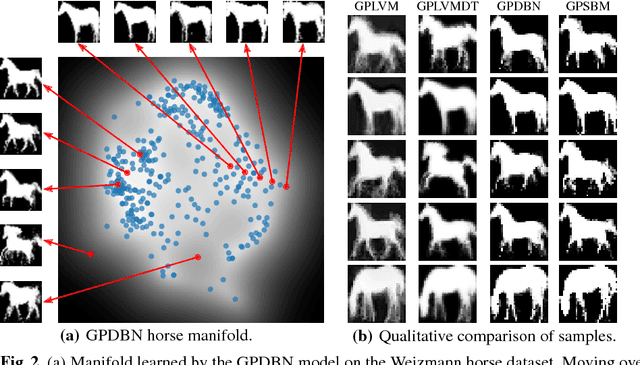

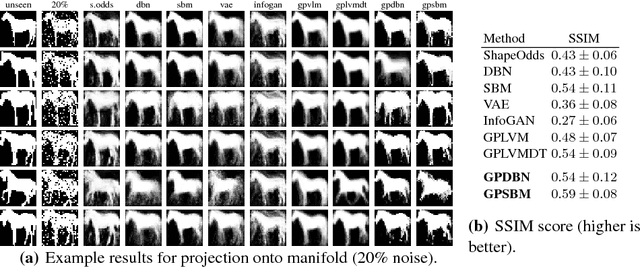

Gaussian Process Deep Belief Networks: A Smooth Generative Model of Shape with Uncertainty Propagation

Dec 13, 2018

Abstract: The shape of an object is an important characteristic for many vision problems such as segmentation, detection and tracking. Being independent of appearance, it is possible to generalize to a large range of objects from only small amounts of data. However, shapes represented as silhouette images are challenging to model due to complicated likelihood functions leading to intractable posteriors. In this paper we present a generative model of shapes which provides a low-dimensional latent encoding that, importantly, resides on a smooth manifold with respect to the silhouette images. The proposed model propagates uncertainty in a principled manner, allowing it to learn from small amounts of data and to provide predictions with associated uncertainty. We provide experiments that show how our proposed model provides favorable quantitative results compared with the state-of-the-art while simultaneously providing a representation that resides on a low-dimensional interpretable manifold.

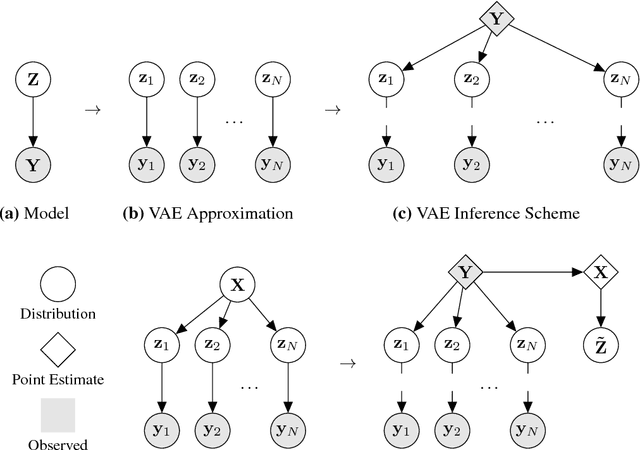

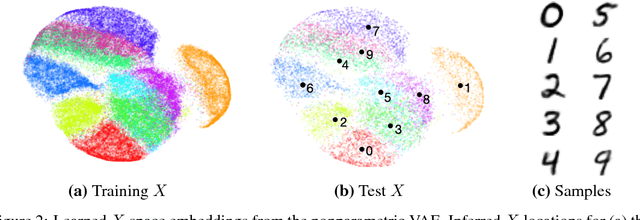

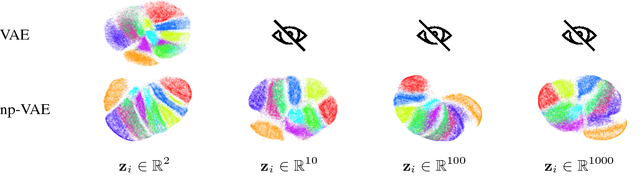

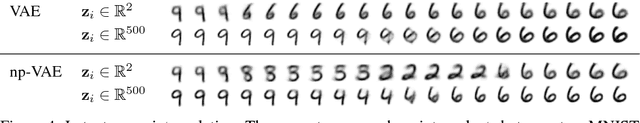

Nonparametric Inference for Auto-Encoding Variational Bayes

Dec 18, 2017

Abstract: We would like to learn latent representations that are low-dimensional and highly interpretable. A model that has these characteristics is the Gaussian Process Latent Variable Model. The benefits and drawbacks of the GP-LVM are complementary to those of the Variational Autoencoder: the former provides interpretable low-dimensional latent representations, while the latter is able to handle large amounts of data and can use non-Gaussian likelihoods. Our inspiration for this paper is to marry these two approaches and reap the benefits of both. In order to do so we introduce a novel approximate inference scheme inspired by the GP-LVM and the VAE. We show experimentally that the approximation allows the capacity of the generative bottleneck (Z) of the VAE to be arbitrarily large without losing a highly interpretable representation. Reconstruction quality is therefore not limited by Z, while a low-dimensional space remains available both for ancestral sampling and as a means to reason about the embedded data.

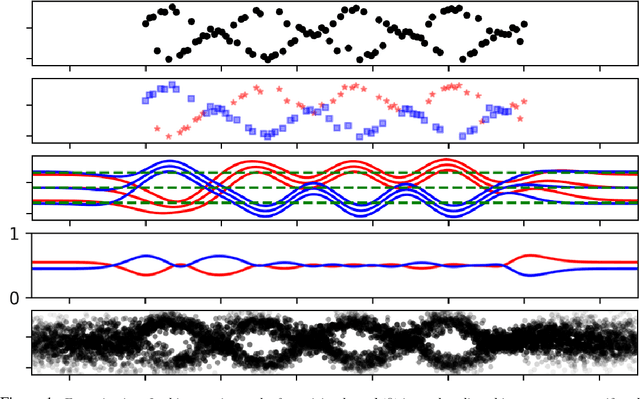

Latent Gaussian Process Regression

Sep 16, 2017

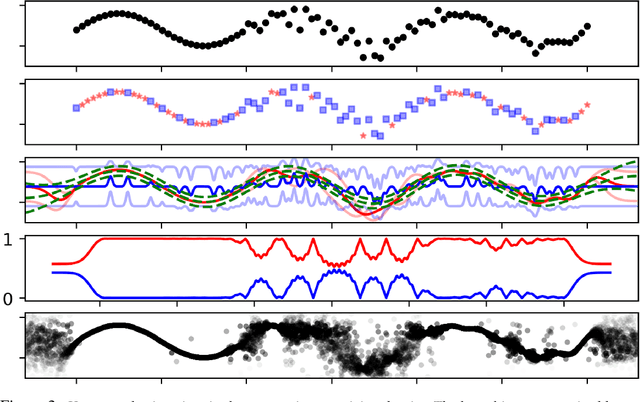

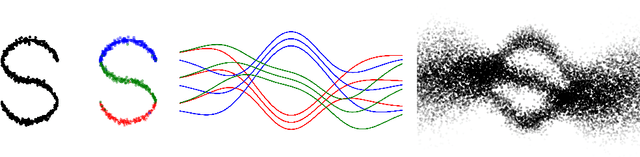

Abstract: We introduce Latent Gaussian Process Regression, a latent variable extension that allows modelling of non-stationary multi-modal processes using GPs. The approach is built on extending the input space of a regression problem with a latent variable that is used to modulate the covariance function over the training data. We show how our approach can be used to model multi-modal and non-stationary processes. We exemplify the approach on a set of synthetic data and provide results on real data from motion capture and geostatistics.
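The idea of extending the input space with a latent variable that modulates the covariance can be sketched as follows. The abstract does not state the exact covariance construction, so this example makes an assumption: it uses a product of two RBF kernels, one over the observed inputs x and one over a per-point latent coordinate z. The names `rbf` and `augmented_kernel` are hypothetical.

```python
import numpy as np

def rbf(a, b, lengthscale=1.0):
    """Squared-exponential kernel between the rows of a and the rows of b."""
    a = np.asarray(a, dtype=float)[:, None, :]
    b = np.asarray(b, dtype=float)[None, :, :]
    sq_dist = np.sum((a - b) ** 2, axis=-1)
    return np.exp(-0.5 * sq_dist / lengthscale ** 2)

def augmented_kernel(x, z, lengthscale_x=1.0, lengthscale_z=1.0):
    """Covariance over inputs x, modulated by latent coordinates z.

    Assumed form (illustrative): k((x, z), (x', z')) = k_x(x, x') * k_z(z, z').
    """
    return rbf(x, x, lengthscale_x) * rbf(z, z, lengthscale_z)

# Three training points: the first two share the same input x but have
# different latent values, so their covariance is reduced.
x = np.array([[0.0], [0.0], [1.0]])
z = np.array([[0.0], [2.0], [0.0]])
K = augmented_kernel(x, z)
```

Under this assumed form, two observations at the same input location can be decorrelated by placing them far apart in the latent space, which is one way a GP could represent multi-modal data.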
