Elizabeth A. Barnes

Colorado State University, Fort Collins, USA

Forecasting the Future with Yesterday's Climate: Temperature Bias in AI Weather and Climate Models

Sep 26, 2025

Abstract:AI-based climate and weather models have rapidly gained popularity, providing faster forecasts with skill that can match or even surpass that of traditional dynamical models. Despite this success, these models face a key challenge: predicting future climates while being trained only with historical data. In this study, we investigate this issue by analyzing boreal winter land temperature biases in AI weather and climate models. We examine two weather models, FourCastNet V2 Small (FourCastNet) and Pangu Weather (Pangu), evaluating their predictions for 2020-2025 and Ai2 Climate Emulator version 2 (ACE2) for 1996-2010. These time periods lie outside of the respective models' training sets and are significantly more recent than the bulk of their training data, allowing us to assess how well the models generalize to new, i.e. more modern, conditions. We find that all three models produce cold-biased mean temperatures, resembling climates from 15-20 years earlier than the period they are predicting. In some regions, like the Eastern U.S., the predictions resemble climates from as much as 20-30 years earlier. Further analysis shows that FourCastNet's and Pangu's cold bias is strongest in the hottest predicted temperatures, indicating limited training exposure to modern extreme heat events. In contrast, ACE2's bias is more evenly distributed but largest in regions, seasons, and parts of the temperature distribution where climate change has been most pronounced. These findings underscore the challenge of training AI models exclusively on historical data and highlight the need to account for such biases when applying them to future climate prediction.

Turning Up the Heat: Assessing 2-m Temperature Forecast Errors in AI Weather Prediction Models During Heat Waves

Apr 29, 2025

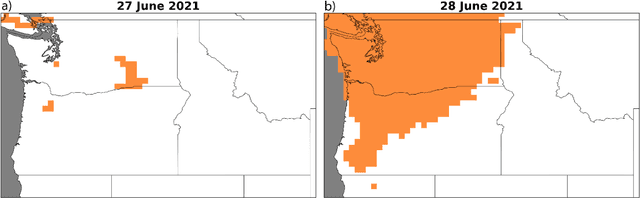

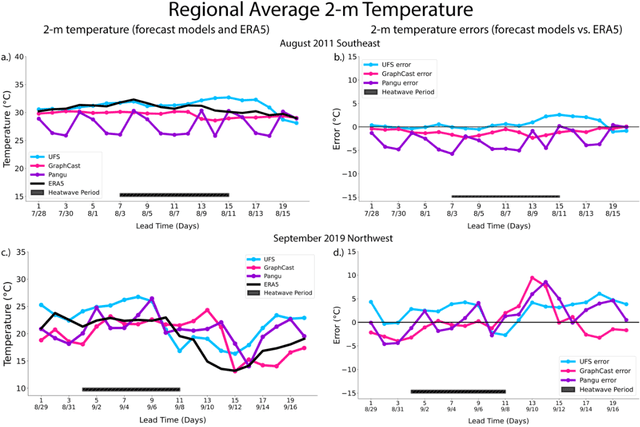

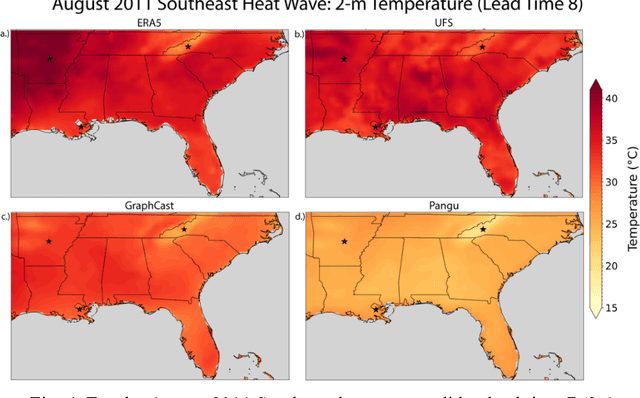

Abstract:Extreme heat is the deadliest weather-related hazard in the United States. Furthermore, it is increasing in intensity, frequency, and duration, making skillful forecasts vital to protecting life and property. Traditional numerical weather prediction (NWP) models struggle with extreme heat for medium-range and subseasonal-to-seasonal (S2S) timescales. Meanwhile, artificial intelligence-based weather prediction (AIWP) models are progressing rapidly. However, it is largely unknown how well AIWP models forecast extremes, especially for medium-range and S2S timescales. This study investigates 2-m temperature forecasts for 60 heat waves across the four boreal seasons and over four CONUS regions at lead times up to 20 days, using two AIWP models (Google GraphCast and Pangu-Weather) and one traditional NWP model (NOAA Unified Forecast System Global Ensemble Forecast System (UFS GEFS)). First, case study analyses show that both AIWP models and the UFS GEFS exhibit consistent cold biases on regional scales in the 5-10 days of lead time before heat wave onset. GraphCast is the more skillful AIWP model, outperforming UFS GEFS and Pangu-Weather in most locations. Next, the two AIWP models are isolated and analyzed across all heat waves and seasons, with events split among the models' testing (2018-2023) and training (1979-2017) periods. There are cold biases before and during the heat waves in both models and all seasons, except Pangu-Weather in winter, which exhibits a mean warm bias before heat wave onset. Overall, results offer encouragement that AIWP models may be useful for medium-range and S2S predictability of extreme heat.

Predicting Tropical Cyclone Track Forecast Errors using a Probabilistic Neural Network

Mar 12, 2025

Abstract:A new method for estimating tropical cyclone track uncertainty is presented and tested. This method uses a neural network to predict a bivariate normal distribution, which serves as an estimate for track uncertainty. We train the network and make predictions on forecasts from the National Hurricane Center (NHC), which currently uses static error distributions based on forecasts from the past five years for most applications. The neural network-based method produces uncertainty estimates that are dynamic and probabilistic. Further, the neural network-based method allows for probabilistic statements about tropical cyclone trajectories, including landfall probability, which we highlight. We show that our predictions are well calibrated using multiple metrics, that our method produces better uncertainty estimates than current NHC approaches, and that our method achieves similar performance to the Global Ensemble Forecast System. Once trained, the computational cost of predictions using this method is negligible, making it a strong candidate to improve the NHC's operational estimations of tropical cyclone track uncertainty.
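To make the idea of a network that outputs a bivariate normal distribution more concrete, the following is a minimal sketch of the negative log-likelihood that such a network could be trained to minimize. It is not taken from the paper: the function name, the parameterization (two means, two standard deviations, and a correlation), and the interpretation of `dx` and `dy` as east-west and north-south track errors are all assumptions made for illustration.

```python
import math


def bivariate_normal_nll(dx, dy, mu_x, mu_y, sigma_x, sigma_y, rho):
    """Negative log-likelihood of an observed 2-D error (dx, dy) under a
    bivariate normal with the given means, standard deviations, and
    correlation. All parameter names are hypothetical."""
    if sigma_x <= 0 or sigma_y <= 0:
        raise ValueError("standard deviations must be positive")
    if not -1.0 < rho < 1.0:
        raise ValueError("correlation must lie strictly between -1 and 1")

    # Standardized departures from the predicted mean.
    zx = (dx - mu_x) / sigma_x
    zy = (dy - mu_y) / sigma_y
    one_minus_rho2 = 1.0 - rho * rho

    # Quadratic form in the exponent of the bivariate normal density.
    quad = (zx * zx - 2.0 * rho * zx * zy + zy * zy) / one_minus_rho2

    # Log of the normalizing constant 2*pi*sigma_x*sigma_y*sqrt(1 - rho^2).
    log_norm = math.log(2.0 * math.pi * sigma_x * sigma_y) + 0.5 * math.log(one_minus_rho2)

    return log_norm + 0.5 * quad


# An error at the predicted mean scores better (lower) than a distant one.
nll_center = bivariate_normal_nll(0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 0.0)
nll_far = bivariate_normal_nll(3.0, -2.0, 0.0, 0.0, 1.0, 1.0, 0.0)
```

Because the five distribution parameters can differ for every forecast, a loss of this kind rewards uncertainty estimates that are dynamic rather than static, which is the contrast the abstract draws with fixed historical error distributions.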

Multi-Year-to-Decadal Temperature Prediction using a Machine Learning Model-Analog Framework

Feb 24, 2025

Abstract:Multi-year-to-decadal climate prediction is a key tool in understanding the range of potential regional and global climate futures. Here, we present a framework that combines machine learning and analog forecasting for predictions on these timescales. A neural network is used to learn a mask, specific to a region and lead time, with global weights based on relative importance as precursors to the evolution of that prediction target. A library of mask-weighted model states, or potential analogs, is then compared to a single mask-weighted observational state. The known futures of the best matching potential analogs serve as the prediction for the future of the observational state. We match and predict 2-meter temperature using the Berkeley Earth Surface Temperature dataset for observations, and a set of CMIP6 models as the analog library. We find improved performance over traditional analog methods and initialized decadal predictions.
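The matching step described in this abstract can be sketched in a few lines. The example below is illustrative only and assumes several details the abstract does not specify: states are flattened to plain lists, the mask is given rather than learned, similarity is a mean squared difference of mask-weighted states, and the forecast is the simple average of the futures of the closest analogs. The names `weighted_distance` and `analog_forecast` are hypothetical.

```python
def weighted_distance(state_a, state_b, mask):
    """Mean squared difference between two states after each is multiplied
    by the mask (the distance metric is an assumption)."""
    return sum((w * a - w * b) ** 2 for a, b, w in zip(state_a, state_b, mask)) / len(mask)


def analog_forecast(obs_state, library_states, library_futures, mask, n_analogs=2):
    """Average the known futures of the n_analogs library states that are
    closest to the observed state under the mask weighting."""
    distances = [weighted_distance(obs_state, s, mask) for s in library_states]
    ranked = sorted(range(len(library_states)), key=lambda i: distances[i])
    best = ranked[:n_analogs]
    return sum(library_futures[i] for i in best) / len(best)


# Toy example: the third grid point has zero mask weight, so it is ignored
# when matching even though the library states differ strongly there.
mask = [1.0, 1.0, 0.0]
obs_state = [1.0, 0.0, 5.0]
library_states = [
    [1.0, 0.1, -9.0],  # close match where the mask is nonzero
    [3.0, 2.0, 5.0],   # poor match where the mask is nonzero
    [0.9, 0.0, 0.0],   # close match where the mask is nonzero
]
library_futures = [0.5, 2.0, 0.7]  # known future value of the target for each state

prediction = analog_forecast(obs_state, library_states, library_futures, mask)
```

In this toy setup the second library state agrees with the observation at the masked-out point but is rejected, which shows how the mask focuses the match on the regions treated as important precursors.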

Recommendations for Comprehensive and Independent Evaluation of Machine Learning-Based Earth System Models

Oct 24, 2024

Abstract:Machine learning (ML) is a revolutionary technology with demonstrable applications across multiple disciplines. Within the Earth science community, ML has been most visible for weather forecasting, producing forecasts that rival modern physics-based models. Given the importance of deepening our understanding and improving predictions of the Earth system on all time scales, efforts are now underway to develop forecasting models into Earth-system models (ESMs), capable of representing all components of the coupled Earth system (or their aggregated behavior) and their response to external changes. Modeling the Earth system is a much more difficult problem than weather forecasting, not least because the model must represent the alternate (e.g., future) coupled states of the system for which there are no historical observations. Given that the physical principles that enable predictions about the response of the Earth system are often not explicitly coded in these ML-based models, demonstrating the credibility of ML-based ESMs thus requires us to build evidence of their consistency with the physical system. To this end, this paper puts forward five recommendations to enhance comprehensive, standardized, and independent evaluation of ML-based ESMs to strengthen their credibility and promote their wider use.

ClimSim: An open large-scale dataset for training high-resolution physics emulators in hybrid multi-scale climate simulators

Jun 16, 2023

Abstract:Modern climate projections lack adequate spatial and temporal resolution due to computational constraints. A consequence is inaccurate and imprecise prediction of critical processes such as storms. Hybrid methods that combine physics with machine learning (ML) have introduced a new generation of higher fidelity climate simulators that can sidestep Moore's Law by outsourcing compute-hungry, short, high-resolution simulations to ML emulators. However, this hybrid ML-physics simulation approach requires domain-specific treatment and has been inaccessible to ML experts because of lack of training data and relevant, easy-to-use workflows. We present ClimSim, the largest-ever dataset designed for hybrid ML-physics research. It comprises multi-scale climate simulations, developed by a consortium of climate scientists and ML researchers. It consists of 5.7 billion pairs of multivariate input and output vectors that isolate the influence of locally-nested, high-resolution, high-fidelity physics on a host climate simulator's macro-scale physical state. The dataset is global in coverage, spans multiple years at high sampling frequency, and is designed such that resulting emulators are compatible with downstream coupling into operational climate simulators. We implement a range of deterministic and stochastic regression baselines to highlight the ML challenges and their scoring. The data (https://huggingface.co/datasets/LEAP/ClimSim_high-res) and code (https://leap-stc.github.io/ClimSim) are released openly to support the development of hybrid ML-physics and high-fidelity climate simulations for the benefit of science and society.

Carefully choose the baseline: Lessons learned from applying XAI attribution methods for regression tasks in geoscience

Aug 19, 2022

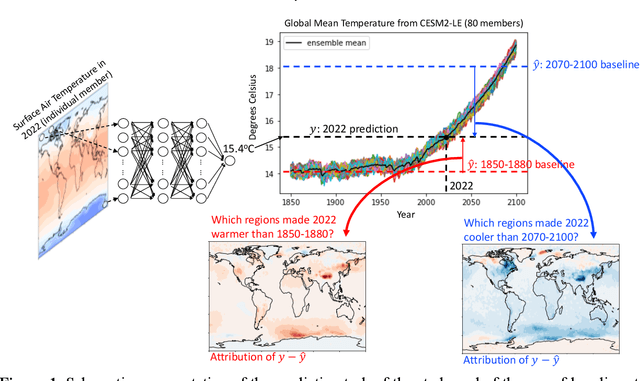

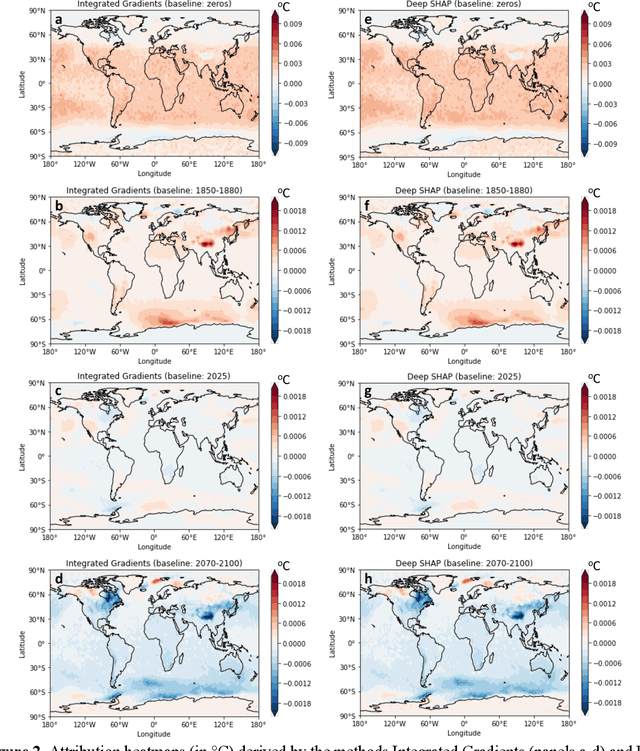

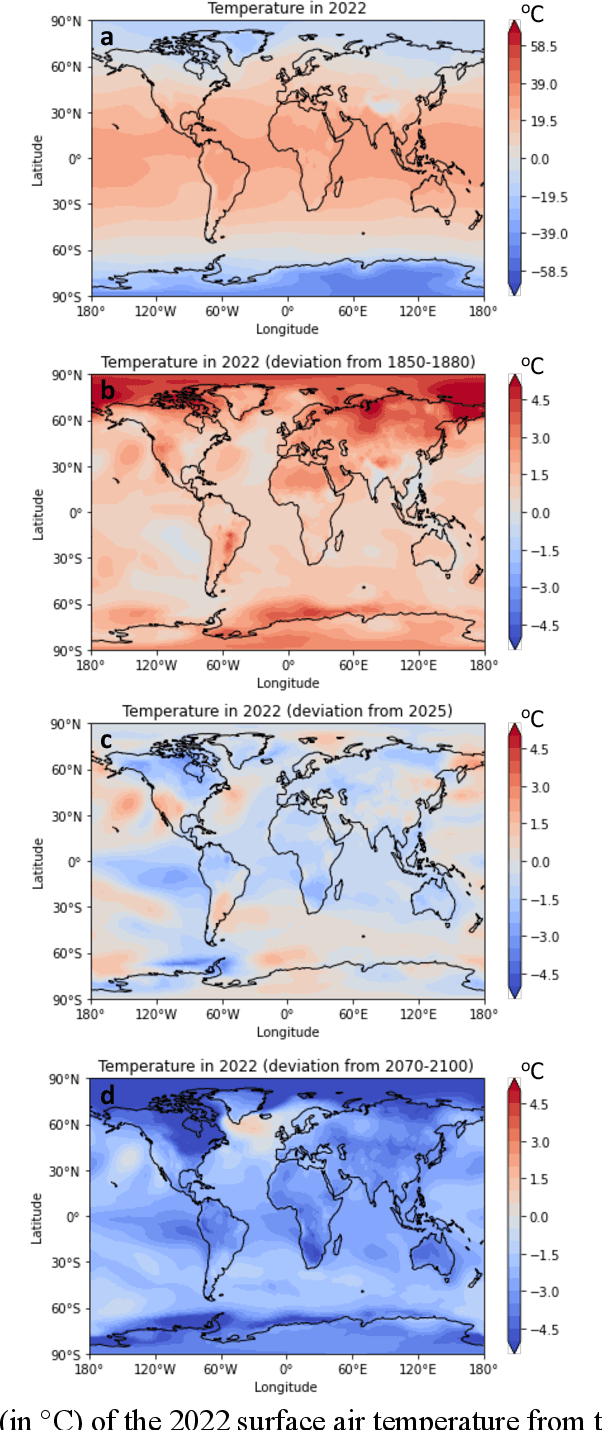

Abstract:Methods of eXplainable Artificial Intelligence (XAI) are used in geoscientific applications to gain insights into the decision-making strategy of Neural Networks (NNs), highlighting which features in the input contribute the most to a NN prediction. Here, we discuss a lesson we have learned: the task of attributing a prediction to the input does not have a single solution. Instead, the attribution results and their interpretation depend greatly on the considered baseline (sometimes referred to as reference point) that the XAI method utilizes, a fact that has been overlooked so far in the literature. This baseline can be chosen by the user or it is set by construction in the method's algorithm, often without the user being aware of that choice. We highlight that different baselines can lead to different insights for different science questions and, thus, should be chosen accordingly. To illustrate the impact of the baseline, we use a large ensemble of historical and future climate simulations forced with the SSP3-7.0 scenario and train a fully connected NN to predict the ensemble- and global-mean temperature (i.e., the forced global warming signal) given an annual temperature map from an individual ensemble member. We then use various XAI methods and different baselines to attribute the network predictions to the input. We show that attributions differ substantially when considering different baselines, as they correspond to answering different science questions. We conclude by discussing some important implications and considerations about the use of baselines in XAI research.
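The dependence on the baseline can be seen even in a toy setting. The sketch below swaps the paper's neural network for a simple linear model, which is an assumption made purely for illustration. For a linear model, a baseline-aware attribution method such as Integrated Gradients reduces exactly to weight times (input minus baseline), so the effect of the baseline can be computed by hand. The weights, inputs, and baselines are invented numbers, and the function names are hypothetical.

```python
def linear_model(weights, x):
    """Toy stand-in for the network: a weighted sum of the inputs."""
    return sum(w * xi for w, xi in zip(weights, x))


def linear_attribution(weights, x, baseline):
    """Attribution of each input relative to a baseline. For a linear model
    this equals what Integrated Gradients would return."""
    return [w * (xi - bi) for w, xi, bi in zip(weights, x, baseline)]


weights = [0.5, 0.5]
x = [15.0, 1.0]              # e.g. temperatures at two grid points (invented)

zero_baseline = [0.0, 0.0]   # asks: what does each input contribute relative to zero?
mean_baseline = [14.0, 0.0]  # asks: what does each input contribute relative to a
                             # hypothetical climatological mean state?

attr_zero = linear_attribution(weights, x, zero_baseline)
attr_mean = linear_attribution(weights, x, mean_baseline)

# With either baseline, the attributions sum to the difference between the
# model output at the input and at the baseline.
gap_zero = linear_model(weights, x) - linear_model(weights, zero_baseline)
gap_mean = linear_model(weights, x) - linear_model(weights, mean_baseline)
```

Against the zero baseline the first grid point dominates the attribution, while against the mean-state baseline both grid points contribute equally. Neither answer is wrong; each corresponds to a different question, which is the point the abstract makes.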

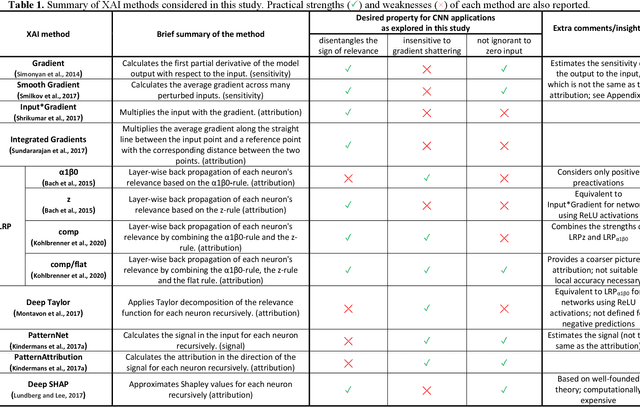

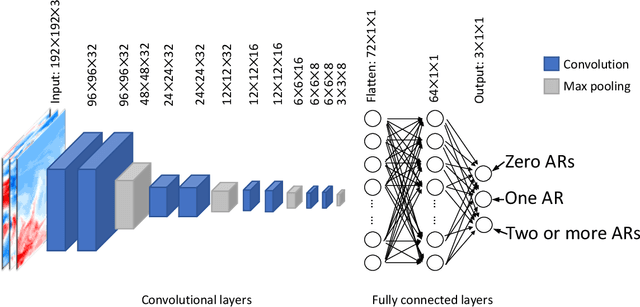

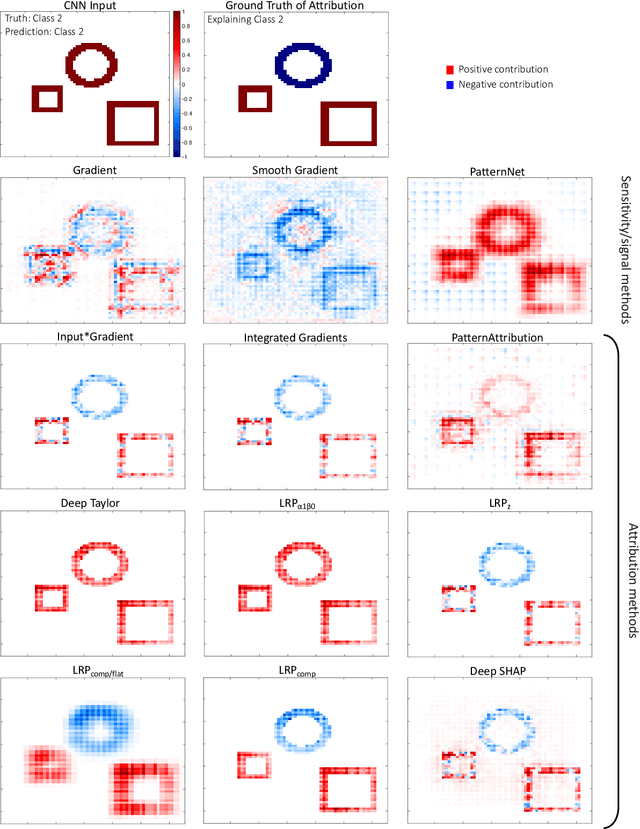

Investigating the fidelity of explainable artificial intelligence methods for applications of convolutional neural networks in geoscience

Feb 07, 2022

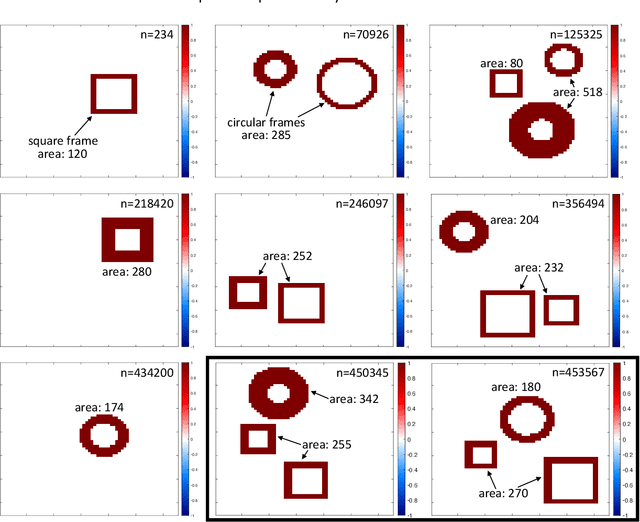

Abstract:Convolutional neural networks (CNNs) have recently attracted great attention in geoscience due to their ability to capture non-linear system behavior and extract predictive spatiotemporal patterns. Given their black-box nature however, and the importance of prediction explainability, methods of explainable artificial intelligence (XAI) are gaining popularity as a means to explain the CNN decision-making strategy. Here, we establish an intercomparison of some of the most popular XAI methods and investigate their fidelity in explaining CNN decisions for geoscientific applications. Our goal is to raise awareness of the theoretical limitations of these methods and gain insight into the relative strengths and weaknesses to help guide best practices. The considered XAI methods are first applied to an idealized attribution benchmark, where the ground truth of explanation of the network is known a priori, to help objectively assess their performance. Secondly, we apply XAI to a climate-related prediction setting, namely to explain a CNN that is trained to predict the number of atmospheric rivers in daily snapshots of climate simulations. Our results highlight several important issues of XAI methods (e.g., gradient shattering, inability to distinguish the sign of attribution, ignorance to zero input) that have previously been overlooked in our field and, if not considered cautiously, may lead to a distorted picture of the CNN decision-making strategy. We envision that our analysis will motivate further investigation into XAI fidelity and will help towards a cautious implementation of XAI in geoscience, which can lead to further exploitation of CNNs and deep learning for prediction problems.

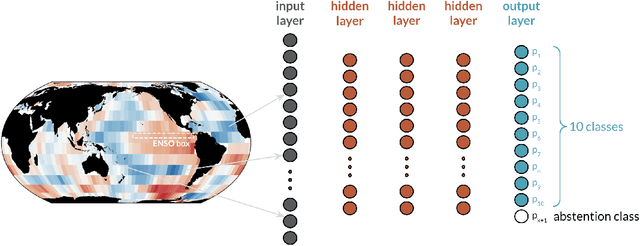

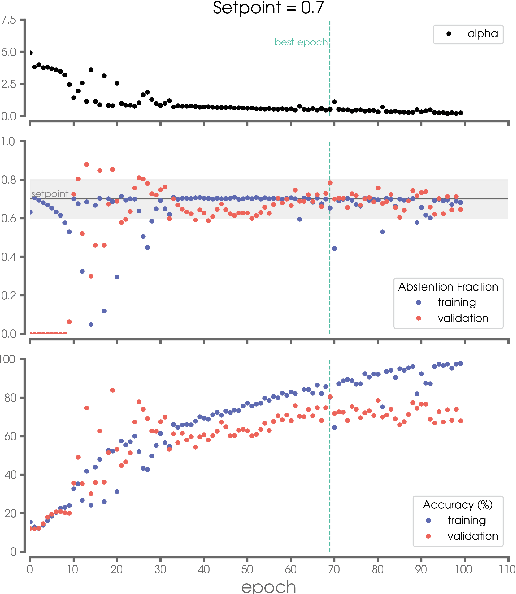

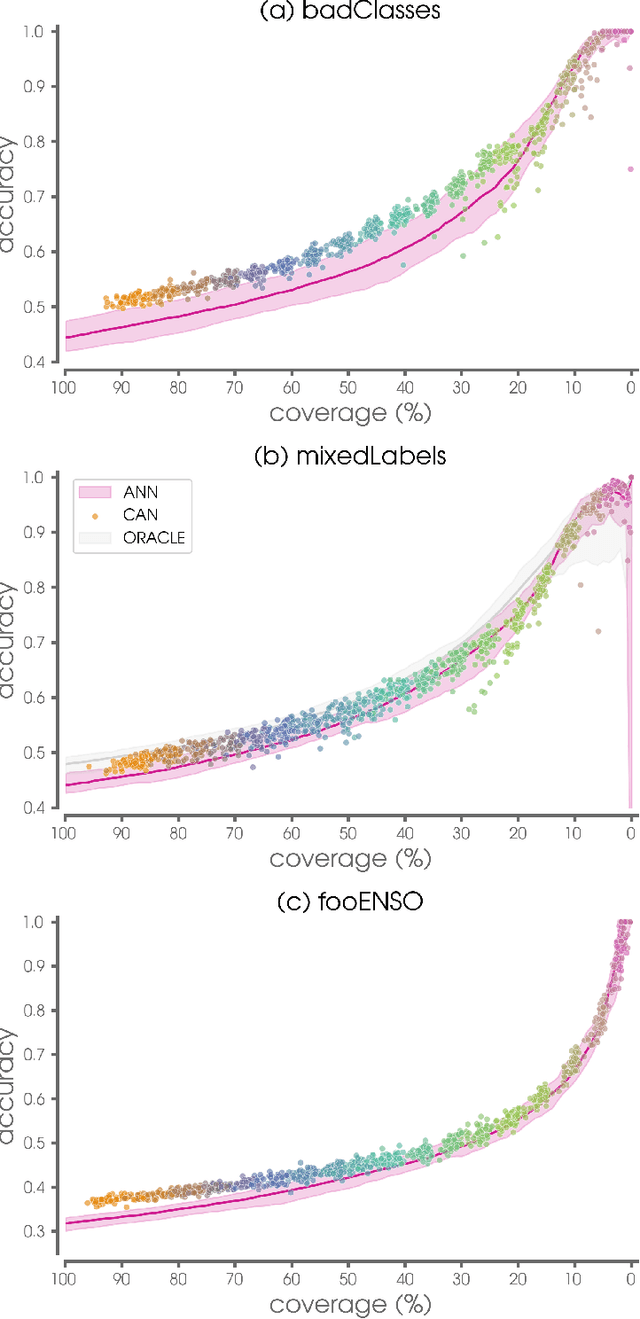

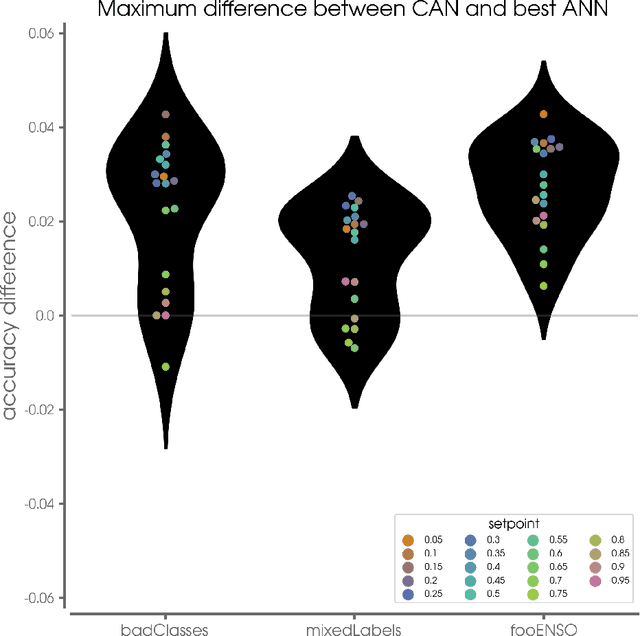

Controlled abstention neural networks for identifying skillful predictions for classification problems

Apr 16, 2021

Abstract:The earth system is exceedingly complex and often chaotic in nature, making prediction incredibly challenging: we cannot expect to make perfect predictions all of the time. Instead, we look for specific states of the system that lead to more predictable behavior than others, often termed "forecasts of opportunity." When these opportunities are not present, scientists need prediction systems that are capable of saying "I don't know." We introduce a novel loss function, termed the "NotWrong loss", that allows neural networks to identify forecasts of opportunity for classification problems. The NotWrong loss introduces an abstention class that allows the network to identify the more confident samples and abstain (say "I don't know") on the less confident samples. The abstention loss is designed to abstain on a user-defined fraction of the samples via a PID controller. Unlike many machine learning methods used to reject samples post-training, the NotWrong loss is applied during training to preferentially learn from the more confident samples. We show that the NotWrong loss outperforms other existing loss functions for multiple climate use cases. The implementation of the proposed loss function is straightforward in most network architectures designed for classification as it only requires the addition of an abstention class to the output layer and modification of the loss function.

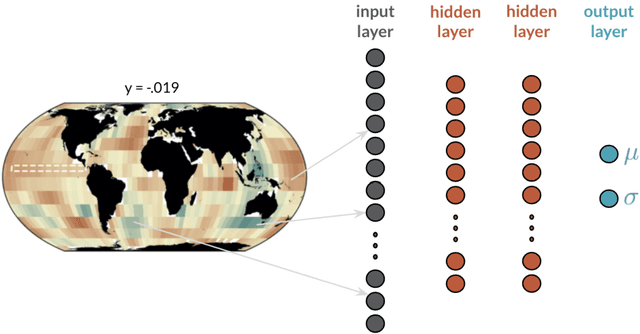

Controlled abstention neural networks for identifying skillful predictions for regression problems

Apr 16, 2021

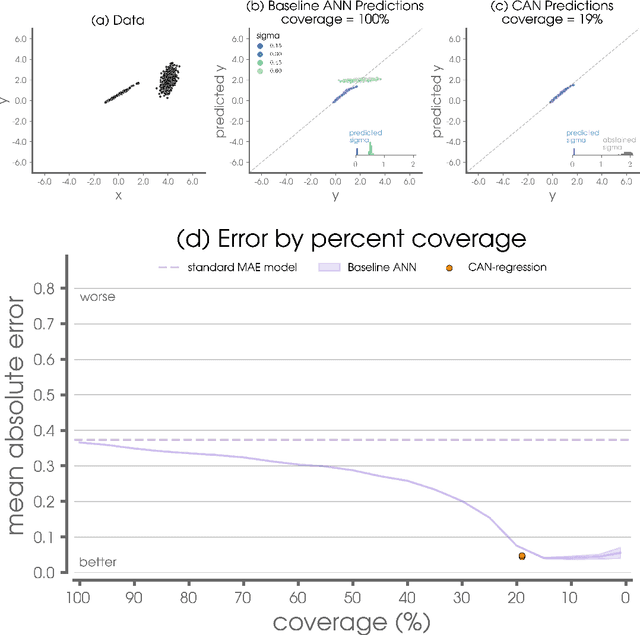

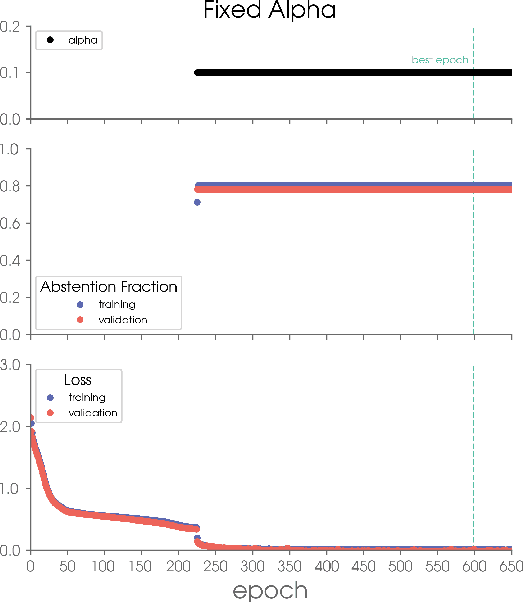

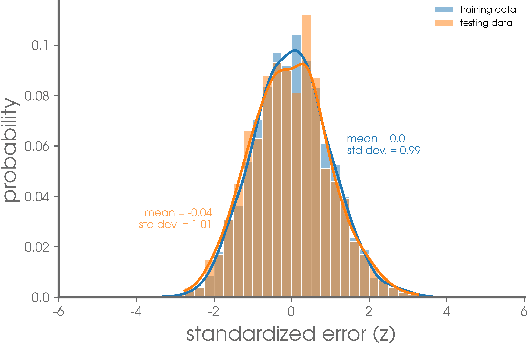

Abstract:The earth system is exceedingly complex and often chaotic in nature, making prediction incredibly challenging: we cannot expect to make perfect predictions all of the time. Instead, we look for specific states of the system that lead to more predictable behavior than others, often termed "forecasts of opportunity". When these opportunities are not present, scientists need prediction systems that are capable of saying "I don't know." We introduce a novel loss function, termed "abstention loss", that allows neural networks to identify forecasts of opportunity for regression problems. The abstention loss works by incorporating uncertainty in the network's prediction to identify the more confident samples and abstain (say "I don't know") on the less confident samples. The abstention loss is designed to determine the optimal abstention fraction, or abstain on a user-defined fraction via a PID controller. Unlike many methods for attaching uncertainty to neural network predictions post-training, the abstention loss is applied during training to preferentially learn from the more confident samples. The abstention loss is built upon a standard computer science method. While the standard approach is itself a simple yet powerful tool for incorporating uncertainty in regression problems, we demonstrate that the abstention loss outperforms this more standard method for the synthetic climate use cases explored here. The implementation of the proposed loss function is straightforward in most network architectures designed for regression, as it only requires modification of the output layer and loss function.
