Elijah Stanger-Jones

Tailoring Solution Accuracy for Fast Whole-body Model Predictive Control of Legged Robots

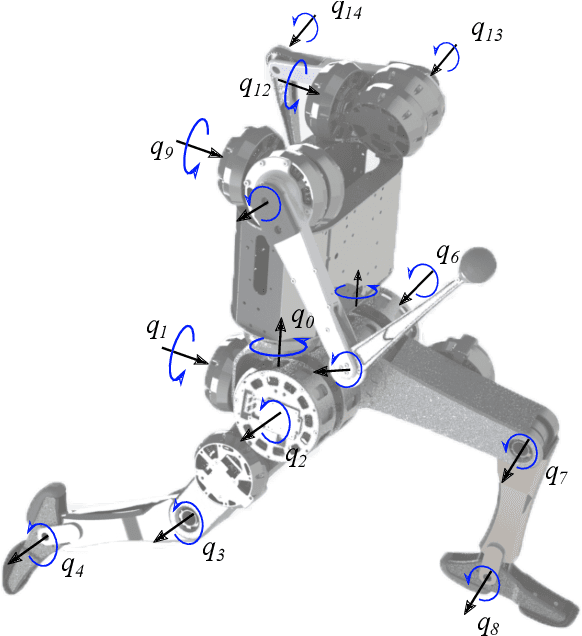

Jul 15, 2024

Abstract:Thanks to recent advancements in accelerating non-linear model predictive control (NMPC), it is now feasible to deploy whole-body NMPC at real-time rates for humanoid robots. However, enforcing inequality constraints in real time for such high-dimensional systems remains challenging due to the need for additional iterations. This paper presents an implementation of whole-body NMPC for legged robots that provides low-accuracy solutions to NMPC with general equality and inequality constraints. Instead of aiming for highly accurate optimal solutions, we leverage the alternating direction method of multipliers to rapidly provide low-accuracy solutions to quadratic programming subproblems. Our extensive simulation results indicate that real robots often cannot benefit from highly accurate solutions due to dynamics discretization errors, inertial modeling errors and delays. We incorporate control barrier functions (CBFs) at the initial timestep of the NMPC for the self-collision constraints, resulting in up to a 26-fold reduction in the number of self-collisions without adding computational burden. The controller is reliably deployed on hardware at 90 Hz for a problem involving 32 timesteps, 2004 variables, and 3768 constraints. The NMPC delivers sufficiently accurate solutions, enabling the MIT Humanoid to plan complex crossed-leg and arm motions that enhance stability when walking and recovering from significant disturbances.
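The trade-off between solution accuracy and iteration count can be illustrated with a small sketch. This is not the paper's solver: it is a textbook ADMM loop for a box-constrained quadratic program, written in plain Python, and the function names (`solve_linear`, `admm_box_qp`), the penalty `rho`, and the tolerances are all assumptions chosen for illustration. The whole-body problem in the abstract has general equality and inequality constraints and is far larger.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def admm_box_qp(P, q, lo, hi, rho=1.0, tol=1e-2, max_iter=500):
    """Minimize 0.5 x'Px + q'x subject to lo <= x <= hi (hypothetical helper).

    Returns the feasible iterate z and the number of iterations used.
    A loose `tol` stops early with a low-accuracy solution.
    """
    n = len(q)
    # The x-update always solves with the same matrix P + rho * I.
    K = [[P[i][j] + (rho if i == j else 0.0) for j in range(n)] for i in range(n)]
    z = [0.0] * n  # copy of x that is projected onto the box
    u = [0.0] * n  # scaled dual variable
    it = 0
    for it in range(1, max_iter + 1):
        rhs = [rho * (z[i] - u[i]) - q[i] for i in range(n)]
        x = solve_linear(K, rhs)                       # unconstrained QP step
        z_prev = z
        z = [min(max(x[i] + u[i], lo[i]), hi[i]) for i in range(n)]  # projection
        u = [u[i] + x[i] - z[i] for i in range(n)]     # dual ascent step
        primal = max(abs(x[i] - z[i]) for i in range(n))
        dual = rho * max(abs(z[i] - z_prev[i]) for i in range(n))
        if primal <= tol and dual <= tol:
            break
    return z, it


if __name__ == "__main__":
    P = [[2.0, 0.0], [0.0, 2.0]]
    q = [-2.0, -5.0]
    lo, hi = [0.0, 0.0], [1.0, 2.0]
    print(admm_box_qp(P, q, lo, hi, tol=1e-2))  # loose tolerance, few iterations
    print(admm_box_qp(P, q, lo, hi, tol=1e-9))  # tight tolerance, more iterations
```

In this toy problem the projected iterate `z` is feasible at every iteration, so stopping early costs optimality rather than constraint satisfaction. That property is specific to the box-constrained form assumed here.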

FLD: Fourier Latent Dynamics for Structured Motion Representation and Learning

Feb 21, 2024

Abstract:Motion trajectories offer reliable references for physics-based motion learning but suffer from sparsity, particularly in regions that lack sufficient data coverage. To address this challenge, we introduce a self-supervised, structured representation and generation method that extracts spatial-temporal relationships in periodic or quasi-periodic motions. The motion dynamics in a continuously parameterized latent space enable our method to enhance the interpolation and generalization capabilities of motion learning algorithms. The motion learning controller, informed by the motion parameterization, performs online tracking of a wide range of motions, including targets unseen during training. With a fallback mechanism, the controller dynamically adapts its tracking strategy and automatically resorts to safe action execution when a potentially risky target is proposed. By leveraging the identified spatial-temporal structure, our work opens new possibilities for future advancements in general motion representation and learning algorithms.

Design of a Multimodal Fingertip Sensor for Dynamic Manipulation

Sep 23, 2022

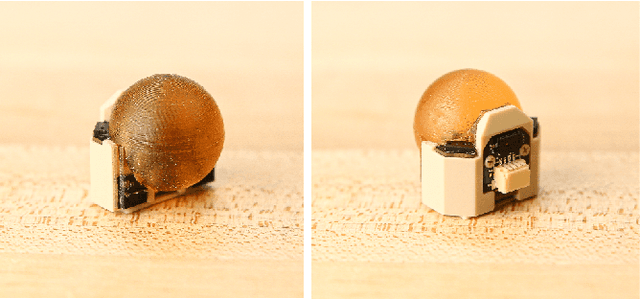

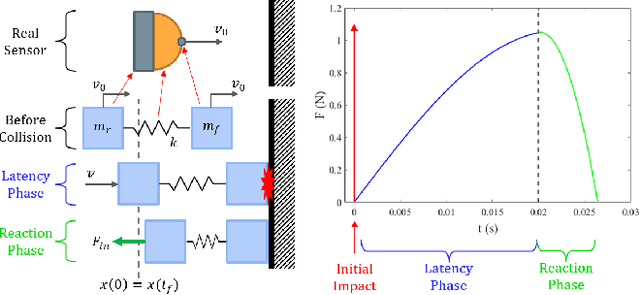

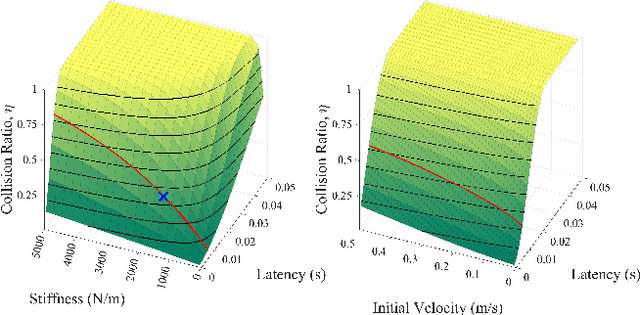

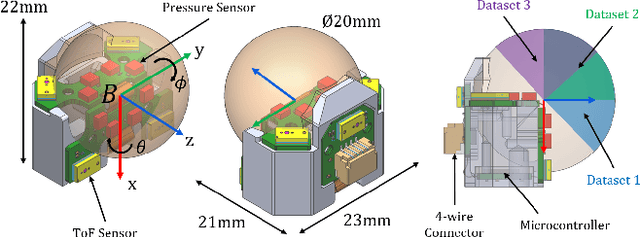

Abstract:We introduce a spherical fingertip sensor for dynamic manipulation. It is based on barometric pressure and time-of-flight proximity sensors and is low-latency, compact, and physically robust. The sensor uses a trained neural network to estimate the contact location and three-axis contact forces based on data from the pressure sensors, which are embedded within the sensor's sphere of polyurethane rubber. The time-of-flight sensors face in three different outward directions, and an integrated microcontroller samples each of the individual sensors at up to 200 Hz. To quantify the effect of system latency on dynamic manipulation performance, we develop and analyze a metric called the collision impulse ratio and characterize the end-to-end latency of our new sensor. We also present experimental demonstrations with the sensor, including measuring contact transitions, performing coarse mapping, maintaining a contact force with a moving object, and reacting to avoid collisions.

Towards Robust Autonomous Grasping with Reflexes Using High-Bandwidth Sensing and Actuation

Sep 23, 2022

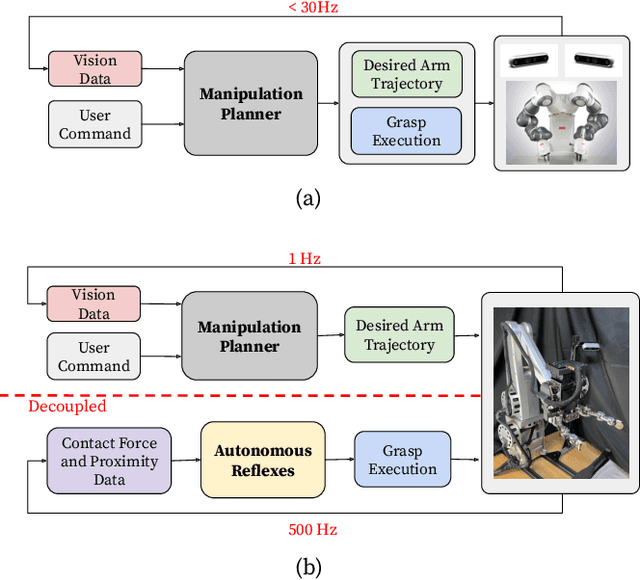

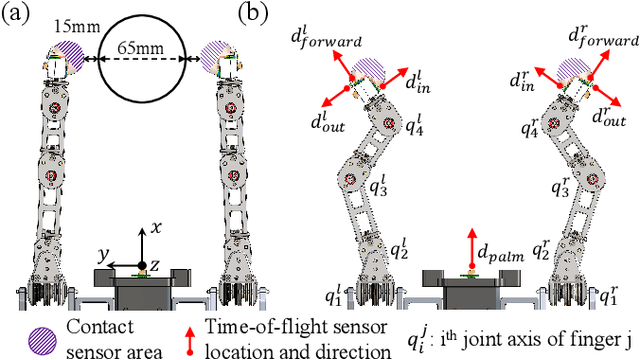

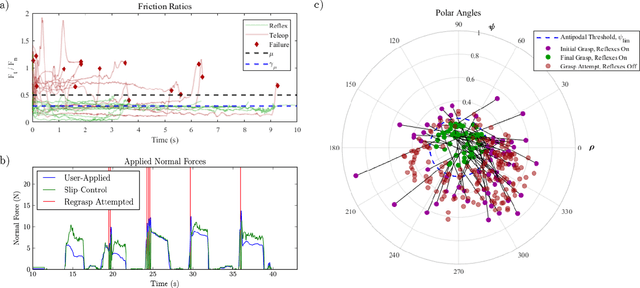

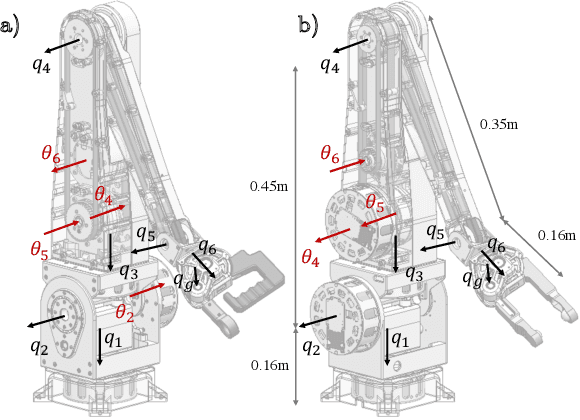

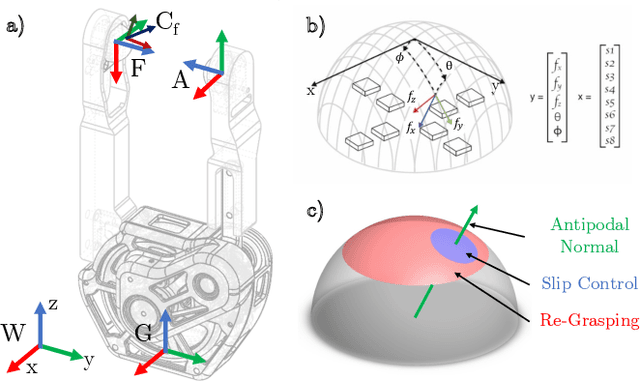

Abstract:Modern robotic manipulation systems fall short of human manipulation skills partly because they rely on closing feedback loops exclusively around vision data, which reduces system bandwidth and speed. By developing autonomous grasping reflexes that rely on high-bandwidth force, contact, and proximity data, the overall system speed and robustness can be increased while reducing reliance on vision data. We are developing a new system built around a low-inertia, high-speed arm with nimble fingers that combines a high-level trajectory planner operating at less than 1 Hz with low-level autonomous reflex controllers running upwards of 300 Hz. We characterize the reflex system by comparing the volume of the set of successful grasps for a naive baseline controller and variations of our reflexive grasping controller, finding that our controller expands the set of successful grasps by 55% relative to the baseline. We also deploy our reflexive grasping controller with a simple vision-based planner in an autonomous clutter clearing task, achieving a grasp success rate above 90% while clearing over 100 items.

Fast Reflexive Grasping with a Proprioceptive Teleoperation Platform

Aug 09, 2022

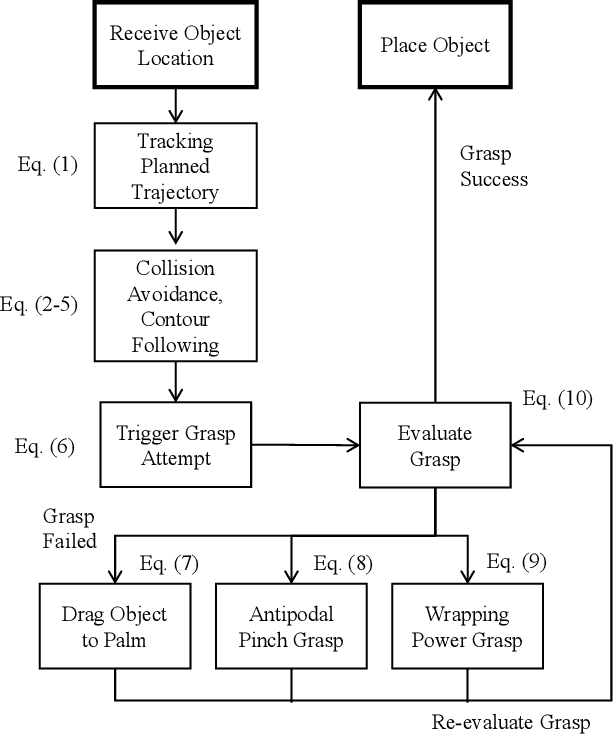

Abstract:We present a proprioceptive teleoperation system that uses a reflexive grasping algorithm to enhance the speed and robustness of pick-and-place tasks. The system consists of two manipulators that use quasi-direct-drive actuation to provide highly transparent force feedback. The end-effector has bimodal force sensors that measure 3-axis force information and 2-dimensional contact location. This information is used for anti-slip and re-grasping reflexes. When the user makes contact with the desired object, the re-grasping reflex aligns the gripper fingers with antipodal points on the object to maximize the grasp stability. The reflex takes only 150 ms to correct for inaccurate grasps chosen by the user, so the user's motion is only minimally disturbed by the execution of the re-grasp. Once antipodal contact is established, the anti-slip reflex ensures that the gripper applies enough normal force to prevent the object from slipping out of the grasp. The combination of proprioceptive manipulators and reflexive grasping allows the user to complete teleoperated tasks with precision at high speed.

The MIT Humanoid Robot: Design, Motion Planning, and Control For Acrobatic Behaviors

Apr 19, 2021

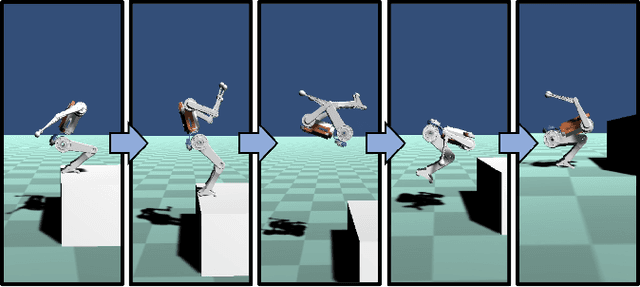

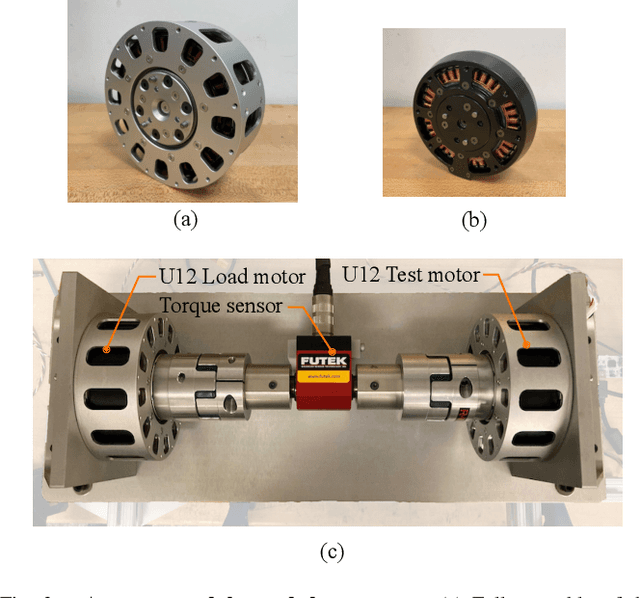

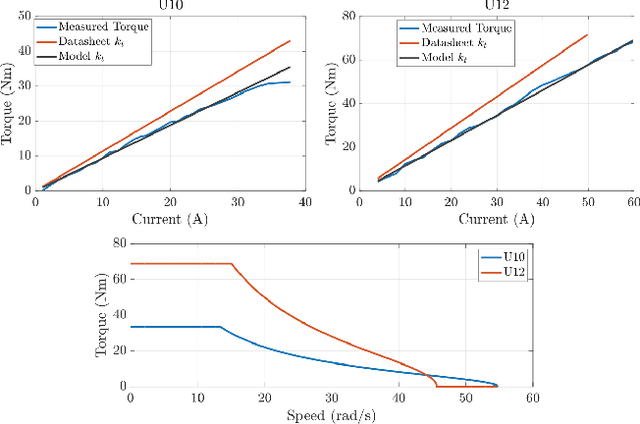

Abstract:Demonstrating acrobatic behavior of a humanoid robot such as flips and spinning jumps requires systematic approaches across hardware design, motion planning, and control. In this paper, we present a new humanoid robot design, an actuator-aware kino-dynamic motion planner, and a landing controller as part of a practical system design for highly dynamic motion control of the humanoid robot. To achieve the impulsive motions, we develop two new proprioceptive actuators and experimentally evaluate their performance using our custom-designed dynamometer. The actuators' torque, velocity, and power limits are reflected in our kino-dynamic motion planner by approximating the configuration-dependent reaction force limits and in our dynamics simulator by including actuator dynamics along with the robot's full-body dynamics. For the landing control, we effectively integrate model-predictive control and whole-body impulse control by connecting them in a dynamically consistent way to accomplish both the long-time horizon optimal control and high-bandwidth full-body dynamics-based feedback. The actuators' torque output over the entire motion is validated based on the velocity-torque model including battery voltage droop and back-EMF voltage. With the carefully designed hardware and control framework, we successfully demonstrate dynamic behaviors such as back flips, front flips, and spinning jumps in our realistic dynamics simulation.
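To give a sense of how battery voltage droop and back-EMF shrink the available torque at speed, here is a minimal sketch based on a simplified brushed-DC-style motor model. The paper's actual velocity-torque model is not given in the abstract, so the functions `drooped_bus_voltage` and `max_torque`, the model itself, and every numeric parameter below are assumptions for illustration only.

```python
def drooped_bus_voltage(v_open, r_batt, i_batt):
    """Bus voltage after droop across an assumed battery internal resistance."""
    return v_open - r_batt * i_batt


def max_torque(omega, v_bus, kt, ke, r_phase, i_max):
    """Upper torque bound at motor speed omega (rad/s) for the assumed model.

    Current is limited both by the driver (i_max) and by the voltage left
    over once back-EMF (ke * omega) is subtracted from the bus voltage.
    """
    i_voltage_limited = (v_bus - ke * omega) / r_phase
    current = max(0.0, min(i_max, i_voltage_limited))
    return kt * current


if __name__ == "__main__":
    # Hypothetical parameters, not taken from the paper.
    kt = ke = 0.1          # N*m/A and V*s/rad
    r_phase = 0.5          # ohm
    i_max = 30.0           # A
    v_open = 40.0          # V, open-circuit battery voltage
    v_loaded = drooped_bus_voltage(v_open, r_batt=0.05, i_batt=100.0)
    for omega in (0.0, 300.0, 400.0):
        print(omega,
              max_torque(omega, v_open, kt, ke, r_phase, i_max),
              max_torque(omega, v_loaded, kt, ke, r_phase, i_max))
```

At low speed the current limit dominates, so droop has no effect in this model; near the no-load speed the voltage limit dominates and the drooped bus cuts the torque bound substantially.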
