Hongmin Kim

Design of a Multimodal Fingertip Sensor for Dynamic Manipulation

Sep 23, 2022

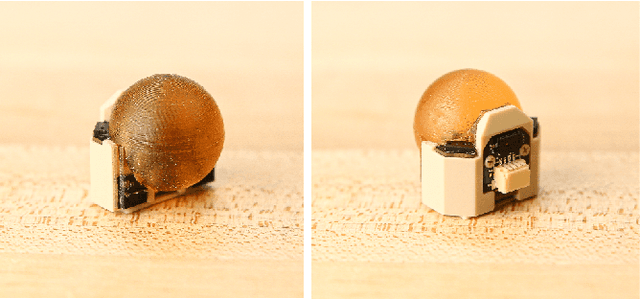

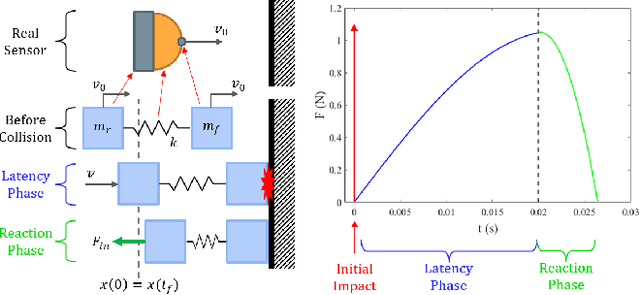

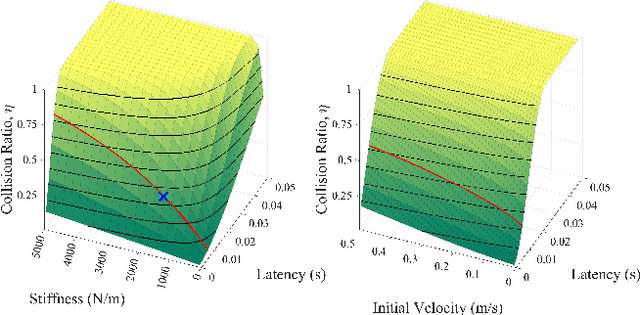

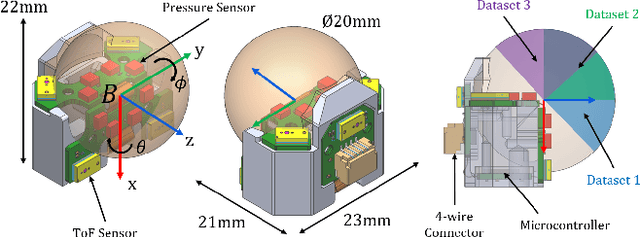

Abstract: We introduce a spherical fingertip sensor for dynamic manipulation. It is based on barometric pressure and time-of-flight proximity sensors and is low-latency, compact, and physically robust. The sensor uses a trained neural network to estimate the contact location and three-axis contact forces based on data from the pressure sensors, which are embedded within the sensor's sphere of polyurethane rubber. The time-of-flight sensors face in three different outward directions, and an integrated microcontroller samples each of the individual sensors at up to 200 Hz. To quantify the effect of system latency on dynamic manipulation performance, we develop and analyze a metric called the collision impulse ratio and characterize the end-to-end latency of our new sensor. We also present experimental demonstrations with the sensor, including measuring contact transitions, performing coarse mapping, maintaining a contact force with a moving object, and reacting to avoid collisions.

Towards Robust Autonomous Grasping with Reflexes Using High-Bandwidth Sensing and Actuation

Sep 23, 2022

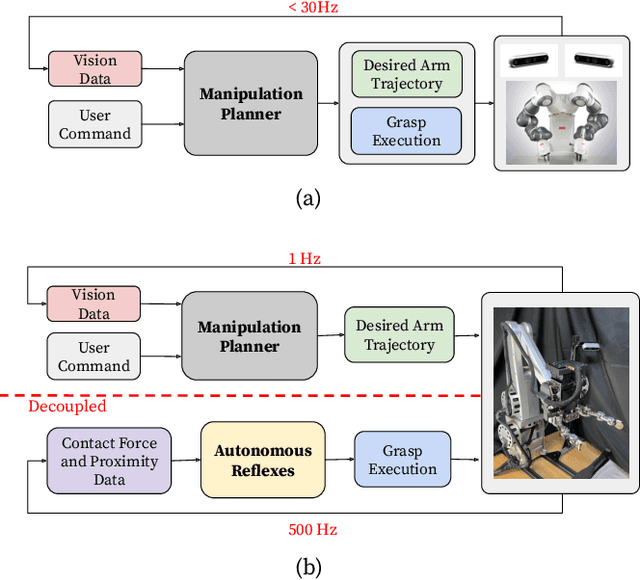

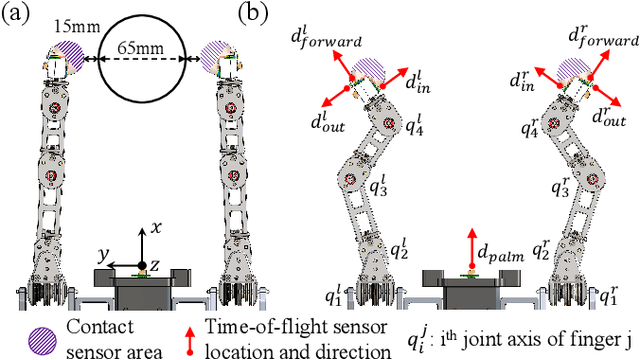

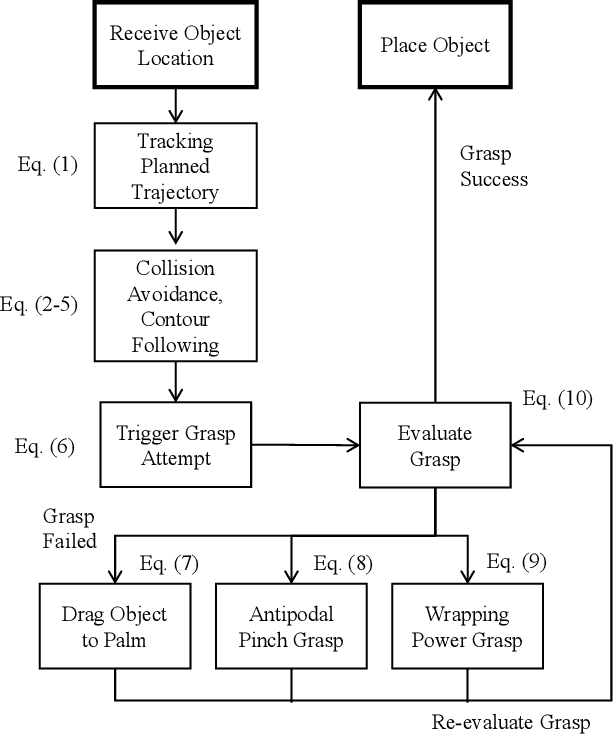

Abstract: Modern robotic manipulation systems fall short of human manipulation skills partly because they rely on closing feedback loops exclusively around vision data, which reduces system bandwidth and speed. By developing autonomous grasping reflexes that rely on high-bandwidth force, contact, and proximity data, the overall system speed and robustness can be increased while reducing reliance on vision data. We are developing a new system built around a low-inertia, high-speed arm with nimble fingers that combines a high-level trajectory planner operating at less than 1 Hz with low-level autonomous reflex controllers running upwards of 300 Hz. We characterize the reflex system by comparing the volume of the set of successful grasps for a naive baseline controller and variations of our reflexive grasping controller, finding that our controller expands the set of successful grasps by 55% relative to the baseline. We also deploy our reflexive grasping controller with a simple vision-based planner in an autonomous clutter clearing task, achieving a grasp success rate above 90% while clearing over 100 items.

The 1st Data Science for Pavements Challenge

Jun 10, 2022

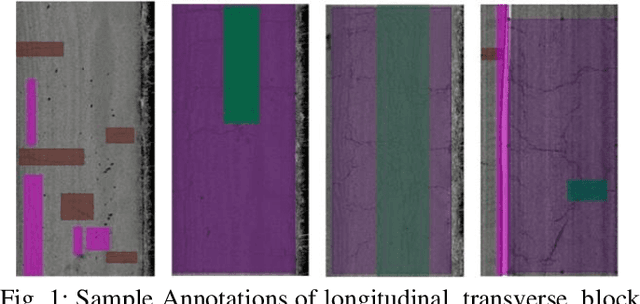

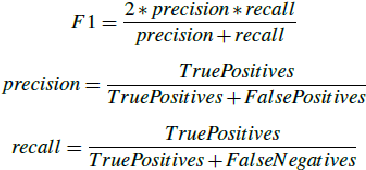

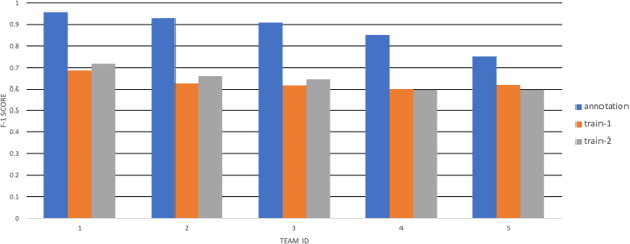

Abstract: The Data Science for Pavements Challenge (DSPC) seeks to accelerate the research and development of automated vision systems for pavement condition monitoring and evaluation by providing a platform with benchmarked datasets and code for teams to innovate and develop machine learning algorithms that are practice-ready for use by industry. The first edition of the competition attracted 22 teams from 8 countries. Participants were required to automatically detect and classify different types of pavement distresses present in images captured from multiple sources and under different conditions. The competition was data-centric: teams were tasked to increase the accuracy of a predefined model architecture by utilizing various data modification methods such as cleaning, labeling, and augmentation. A real-time, online evaluation system was developed to rank teams based on the F1 score. Leaderboard results showed the promise and challenges of machine learning for advancing automation in pavement monitoring and evaluation. This paper summarizes the solutions from the top 5 teams. These teams proposed innovations in the areas of data cleaning, annotation, augmentation, and detection parameter tuning. The F1 score for the top-ranked team was approximately 0.9. The paper concludes with a review of different experiments that worked well for the current challenge and those that did not yield any significant improvement in model accuracy.
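For readers unfamiliar with the ranking metric, the following is a minimal sketch of how an F1 score can be computed from detection counts and used to order a leaderboard. The team names and counts are hypothetical, and the abstract does not say how the challenge matched predictions to ground truth (for example, which overlap threshold it used), so this only illustrates the standard formula.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Standard F1: harmonic mean of precision and recall.

    Equivalent closed form: 2*TP / (2*TP + FP + FN).
    Returns 0.0 when there are no predictions and no ground-truth objects.
    """
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical (true positives, false positives, false negatives) per team.
teams = {
    "team_a": (90, 10, 10),
    "team_b": (70, 20, 40),
}

# Rank teams from highest to lowest F1, as a leaderboard would.
ranking = sorted(teams, key=lambda name: f1_score(*teams[name]), reverse=True)

for name in ranking:
    print(f"{name}: F1 = {f1_score(*teams[name]):.2f}")
```

Because F1 penalizes both false positives and false negatives, a team cannot climb such a leaderboard by simply predicting more boxes or fewer boxes.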
