Eduardo Ros

Multi-objective tuning for torque PD controllers of cobots

Sep 16, 2023

Abstract:Collaborative robotics is a new and challenging field in the realm of motion control and human-robot interaction. The safety measures needed for a reliable interaction between the robot and its environment hinder the use of classical control methods, pushing researchers to try new techniques such as machine learning (ML). In this context, reinforcement learning has been adopted as the primary way to create intelligent controllers for collaborative robots; however, supervised learning shows great promise for developing data-driven, model-based ML controllers in a faster and safer way. In this work we study several aspects of the methodology needed to create a dataset for learning the dynamics of a robot. To this end, we tune several PD controllers for several trajectories using a multi-objective genetic algorithm (GA) that takes into account not only their accuracy but also their safety. We demonstrate the need to tune the controllers individually for each trajectory, and we empirically explore the best population size for the GA and how the speed of the trajectory affects the tuning and the dynamics of the robot.
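As a rough illustration of the idea in this abstract, the sketch below pairs a textbook single-joint PD torque law with a Pareto-dominance check of the kind a multi-objective GA relies on. The function names and the choice of two minimized objectives (for example a tracking error and a safety proxy such as peak torque) are assumptions made for illustration; the abstract does not specify how accuracy and safety are measured.

```python
from typing import List, Sequence, Tuple


def pd_torque(kp: float, kd: float, q_ref: float, q: float,
              dq_ref: float, dq: float) -> float:
    """Textbook PD torque law for one joint: gains times position and velocity error."""
    return kp * (q_ref - q) + kd * (dq_ref - dq)


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b in every objective and strictly better in one.

    All objectives are assumed to be minimized.
    """
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(scores: List[Tuple[float, float]]) -> List[int]:
    """Indices of the candidates that no other candidate dominates."""
    return [
        i for i, s in enumerate(scores)
        if not any(dominates(t, s) for j, t in enumerate(scores) if j != i)
    ]


if __name__ == "__main__":
    # Hypothetical (error, safety-proxy) scores for four candidate gain sets.
    candidates = [(1.0, 5.0), (2.0, 2.0), (3.0, 3.0), (4.0, 1.0)]
    print(pareto_front(candidates))
```

A GA would keep the non-dominated gain sets as the trade-off front between the two objectives instead of collapsing them into a single weighted score.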

Event-based Vision for Early Prediction of Manipulation Actions

Jul 26, 2023

Abstract:Neuromorphic visual sensors are artificial retinas that output sequences of asynchronous events when brightness changes occur in the scene. These sensors offer many advantages including very high temporal resolution, no motion blur and smart data compression ideal for real-time processing. In this study, we introduce an event-based dataset on fine-grained manipulation actions and perform an experimental study on the use of transformers for action prediction with events. There is enormous interest in the fields of cognitive robotics and human-robot interaction on understanding and predicting human actions as early as possible. Early prediction allows anticipating complex stages for planning, enabling effective and real-time interaction. Our Transformer network uses events to predict manipulation actions as they occur, using online inference. The model succeeds at predicting actions early on, building up confidence over time and achieving state-of-the-art classification. Moreover, the attention-based transformer architecture allows us to study the role of the spatio-temporal patterns selected by the model. Our experiments show that the Transformer network captures action dynamic features outperforming video-based approaches and succeeding with scenarios where the differences between actions lie in very subtle cues. Finally, we release the new event dataset, which is the first in the literature for manipulation action recognition. Code will be available at https://github.com/DaniDeniz/EventVisionTransformer.

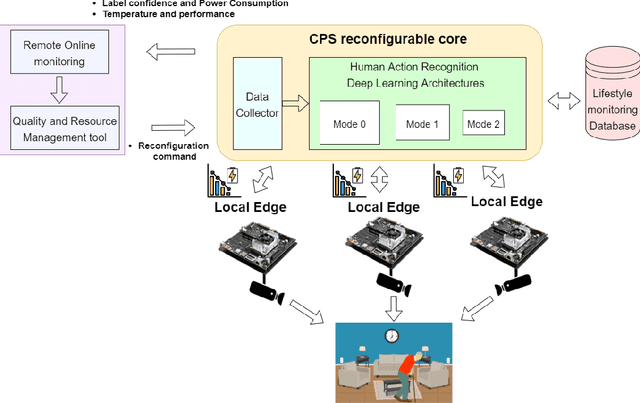

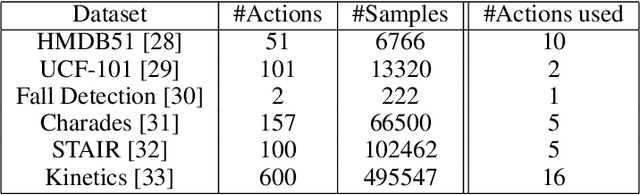

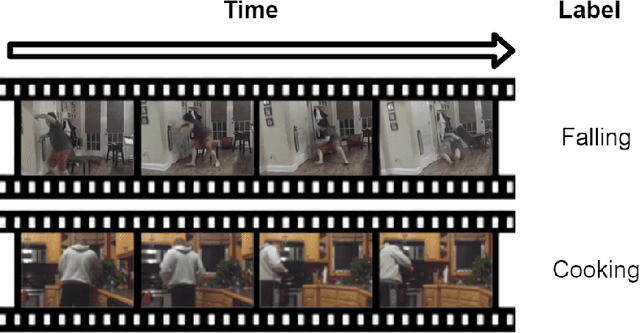

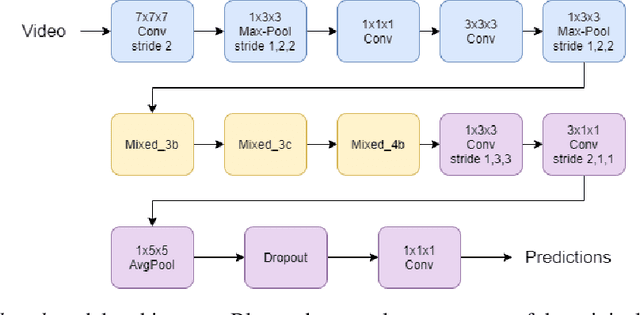

Reconfigurable Cyber-Physical System for Lifestyle Video-Monitoring via Deep Learning

Oct 07, 2020

Abstract:Indoor monitoring of people at their homes has become a popular application in Smart Health. With the advances in Machine Learning and hardware for embedded devices, new distributed approaches for Cyber-Physical Systems (CPSs) are enabled. Also, changing environments and the need for cost reduction motivate novel reconfigurable CPS architectures. In this work, we propose an indoor monitoring reconfigurable CPS that uses embedded local nodes (Nvidia Jetson TX2). We embed Deep Learning architectures to address Human Action Recognition. Local processing at these nodes lets us tackle some common issues: reduction of data bandwidth usage and preservation of privacy (no raw images are transmitted). Real-time processing is also facilitated, since each optimized node processes only its local video feed. Regarding the reconfiguration, a remote platform monitors CPS qualities and a Quality and Resource Management (QRM) tool sends commands to the CPS core to trigger its reconfiguration. Our proposal is an energy-aware system that triggers reconfiguration based on energy consumption for battery-powered nodes. Reconfiguration reduces the local nodes' energy consumption by up to 22%, extending the device operating time while preserving accuracy similar to that of the alternative with no reconfiguration.

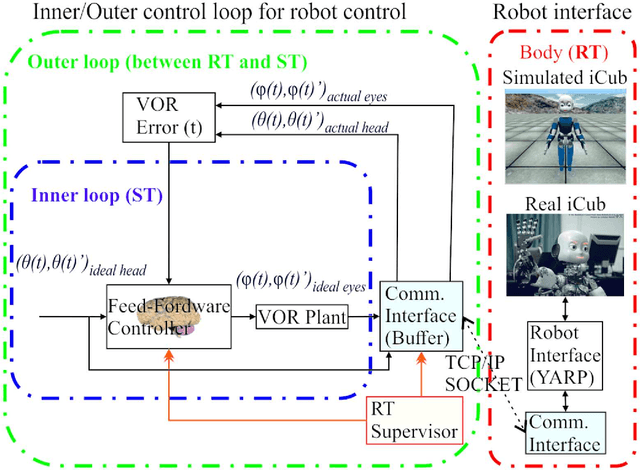

VOR Adaptation on a Humanoid iCub Robot Using a Spiking Cerebellar Model

Mar 31, 2020

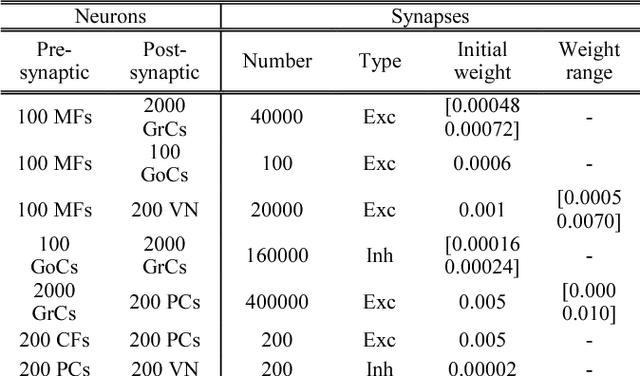

Abstract:We embed a spiking cerebellar model within an adaptive real-time (RT) control loop that is able to operate a real robotic body (iCub) when performing different vestibulo-ocular reflex (VOR) tasks. The spiking neural network computation, including event- and time-driven neural dynamics, neural activity, and spike-timing dependent plasticity (STDP) mechanisms, leads to a nondeterministic computation time caused by the neural activity volleys encountered during cerebellar simulation. This nondeterministic computation time motivates the integration of an RT supervisor module that is able to ensure a well-orchestrated neural computation time and robot operation. In fact, our neurorobotic experimental setup (VOR) benefits from the biological sensory motor delay between the cerebellum and the body to buffer the computational overloads as well as providing flexibility in adjusting the neural computation time and RT operation. The RT supervisor module provides incremental countermeasures that dynamically slow down or speed up the cerebellar simulation by either halting the simulation or disabling certain neural computation features (i.e., STDP mechanisms, spike propagation, and neural updates) to cope with the RT constraints imposed by the real robot operation. This neurorobotic experimental setup is applied to different horizontal and vertical VOR adaptive tasks that are widely used by the neuroscientific community to address cerebellar functioning. We aim to elucidate the manner in which the combination of the cerebellar neural substrate and the distributed plasticity shapes the cerebellar neural activity to mediate motor adaptation. This paper underlines the need for a two-stage learning process to facilitate VOR acquisition.

On robot compliance. A cerebellar control approach

Mar 31, 2020

Abstract:The work presented here is a novel biological approach for the compliant control of a robotic arm in real time (RT). We integrate a spiking cerebellar network at the core of a feedback control loop performing torque-driven control. The spiking cerebellar controller provides torque commands allowing for accurate and coordinated arm movements. To compute these output motor commands, the spiking cerebellar controller receives the robot's sensorial signals, the robot's goal behavior, and an instructive signal. These input signals are translated into a set of evolving spiking patterns that univocally represent a specific system state at every point in time. Spike-timing-dependent plasticity (STDP) is then supported, allowing for building adaptive control. The spiking cerebellar controller continuously adapts the torque commands provided to the robot from experience as STDP is deployed. Adaptive torque commands, in turn, help the spiking cerebellar controller to cope with built-in elastic elements within the robot's actuators, which mimic human muscles (inherently elastic). We propose a natural integration of a bio-inspired control scheme, based on the cerebellum, with a compliant robot. We prove that our compliant approach outperforms the accuracy of the default factory-installed position control in a set of tasks used for addressing cerebellar motor behavior: controlling six degrees of freedom (DoF) in smooth movements, fast ballistic movements, and unstructured scenario compliant movements.

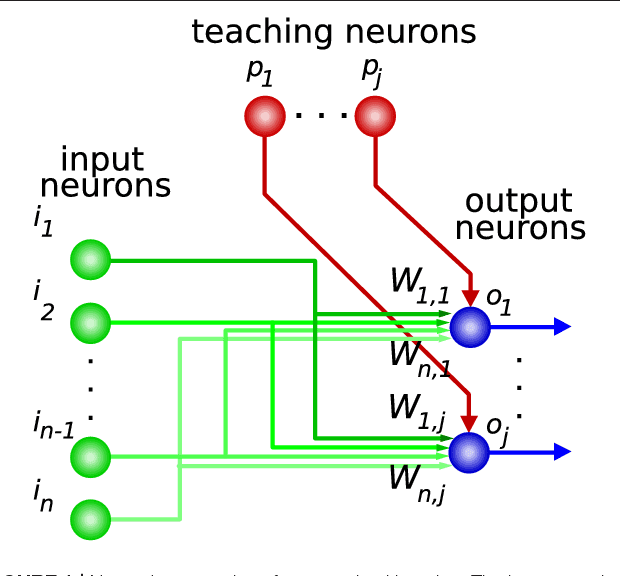

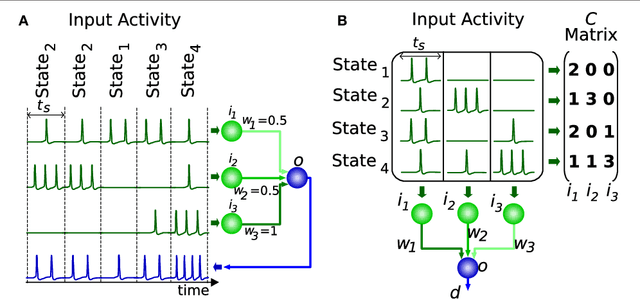

A Metric for Evaluating Neural Input Representation in Supervised Learning Networks

Mar 03, 2020

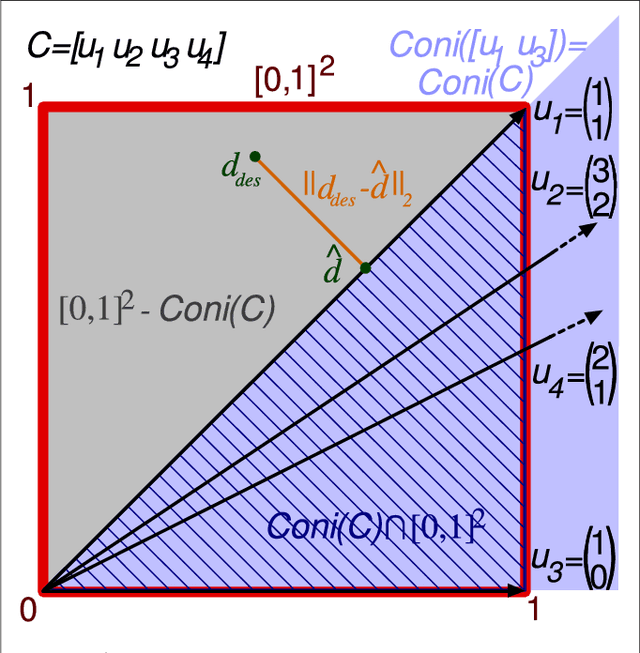

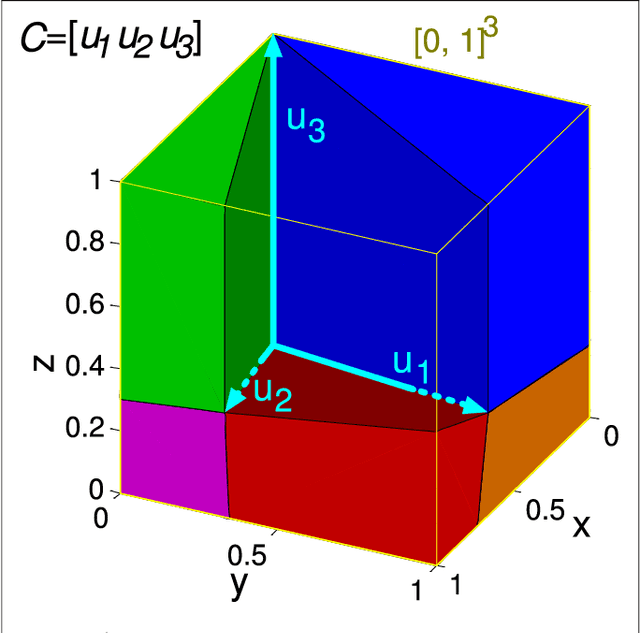

Abstract:Supervised learning has long been attributed to several feed-forward neural circuits within the brain, with attention being paid to the cerebellar granular layer. The focus of this study is to evaluate the input activity representation of these feed-forward neural networks. The activity of cerebellar granule cells is conveyed by parallel fibers and translated into Purkinje cell activity, the sole output of the cerebellar cortex. The learning process at this parallel-fiber-to-Purkinje-cell connection makes each Purkinje cell sensitive to a set of specific cerebellar states, determined by the granule-cell activity during a certain time window. A Purkinje cell becomes sensitive to each neural input state and, consequently, the network operates as a function able to generate a desired output for each provided input by means of supervised learning. However, not all sets of Purkinje cell responses can be assigned to any set of input states due to the network's own limitations (inherent to the network neurobiological substrate); that is, not all input-output mappings can be learned. A limiting factor is the representation of the input states through granule-cell activity. The quality of this representation will determine the capacity of the network to learn a varied set of outputs. In this study we present an algorithm for quantitatively evaluating the level of compatibility/interference amongst a set of given cerebellar states according to their representation (granule-cell activation patterns) without the need for actually conducting simulations and network training. The algorithm input consists of a real-number matrix that codifies the activity level of every considered granule cell in each state. The capability of this representation to generate a varied set of outputs is evaluated geometrically, thus resulting in a real number that assesses the goodness of the representation.

Exploring vestibulo-ocular adaptation in a closed-loop neuro-robotic experiment using STDP. A simulation study

Mar 03, 2020

Abstract:Studying and understanding the computational primitives of our neural system requires a diverse and complementary set of techniques. In this work, we use the Neuro-robotic Platform (NRP) to evaluate the vestibulo-ocular cerebellar adaptation (vestibulo-ocular reflex, VOR) mediated by two STDP mechanisms located at the cerebellar molecular layer and the vestibular nuclei, respectively. This simulation study adopts an experimental setup (rotatory VOR) widely used by neuroscientists to better understand the contribution of certain specific cerebellar properties (i.e., distributed STDP, neural properties, coding cerebellar topology, etc.) to r-VOR adaptation. The work proposes and describes an embodiment solution in which we endow a simulated humanoid robot (iCub) with a spiking cerebellar model by means of the NRP, and we confront the humanoid with an r-VOR task. The results validate the adaptive capabilities of the spiking cerebellar model (with STDP) in a perception-action closed loop (r-VOR), causing the simulated iCub robot to mimic human behavior.

Real-time clustering and multi-target tracking using event-based sensors

Jul 08, 2018

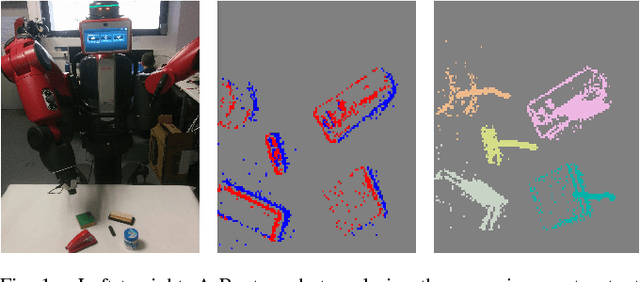

Abstract:Clustering is crucial for many computer vision applications such as robust tracking, object detection and segmentation. This work presents a real-time clustering technique that takes advantage of the unique properties of event-based vision sensors. Since event-based sensors trigger events only when the intensity changes, the data is sparse, with low redundancy. Thus, our approach redefines the well-known mean-shift clustering method using asynchronous events instead of conventional frames. The potential of our approach is demonstrated in a multi-target tracking application using Kalman filters to smooth the trajectories. We evaluated our method on an existing dataset with patterns of different shapes and speeds, and on a new dataset that we collected. The sensor was attached to the Baxter robot in an eye-in-hand setup monitoring real-world objects in an action manipulation task. Clustering accuracy achieved an F-measure of 0.95, reducing the computational cost by 88% compared to the frame-based method. The average tracking error was 2.5 pixels, and the clustering achieved a consistent number of clusters over time.
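For readers unfamiliar with mean shift, the following is a minimal sketch of the classic flat-kernel iteration applied to a buffer of event coordinates. It is not the event-driven reformulation the abstract refers to, whose details are not given here; the function names, the fixed `bandwidth` radius, and the use of a plain list of `(x, y)` event positions are all assumptions made for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def mean_shift_step(center: Point, events: List[Point], bandwidth: float) -> Point:
    """Move the center to the mean of all events within `bandwidth` (flat kernel)."""
    cx, cy = center
    near = [(x, y) for x, y in events
            if (x - cx) ** 2 + (y - cy) ** 2 <= bandwidth ** 2]
    if not near:
        # No events in the window: leave the center where it is.
        return center
    n = len(near)
    return (sum(x for x, _ in near) / n, sum(y for _, y in near) / n)


def mean_shift(start: Point, events: List[Point], bandwidth: float,
               tol: float = 1e-6, max_iter: int = 100) -> Point:
    """Iterate mean-shift steps until the center moves less than `tol`."""
    center = start
    for _ in range(max_iter):
        new = mean_shift_step(center, events, bandwidth)
        if (new[0] - center[0]) ** 2 + (new[1] - center[1]) ** 2 < tol ** 2:
            return new
        center = new
    return center


if __name__ == "__main__":
    # Two hypothetical blobs of event coordinates (pixels).
    events = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0),
              (10.0, 10.0), (11.0, 10.0), (10.0, 11.0), (11.0, 11.0)]
    print(mean_shift((0.0, 0.0), events, bandwidth=2.0))
    print(mean_shift((11.0, 11.0), events, bandwidth=2.0))
```

Each start point climbs to the local density mode of the events around it, so seeds that converge to the same mode can be grouped into one cluster.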

Joint direct estimation of 3D geometry and 3D motion using spatio temporal gradients

May 17, 2018

Abstract:Conventional image motion based structure from motion methods first compute optical flow, then solve for the 3D motion parameters based on the epipolar constraint, and finally recover the 3D geometry of the scene. However, errors in optical flow due to regularization can lead to large errors in 3D motion and structure. This paper investigates whether performance and consistency can be improved by avoiding optical flow estimation in the early stages of the structure from motion pipeline, and it proposes a new direct method based on image gradients (normal flow) only. The main idea lies in a reformulation of the positive-depth constraint, which allows the use of well-known minimization techniques to solve for 3D motion. The 3D motion estimate is then refined and the structure estimated by adding a regularization based on depth. Experimental comparisons on standard synthetic datasets and the real-world driving benchmark dataset KITTI using three different optical flow algorithms show that the method achieves better accuracy in all but one case. Furthermore, it outperforms existing normal flow based 3D motion estimation techniques. Finally, the recovered 3D geometry is also shown to be very accurate.
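For context, normal flow can be written directly in terms of the spatio-temporal image gradients. The block below states the standard textbook definition, derived from the brightness-constancy assumption; it is not the reformulated positive-depth constraint proposed in the paper, and the symbols ($I_x$, $I_y$, $I_t$ for the image derivatives, $(u, v)$ for the image motion) are chosen here for illustration.

```latex
% Brightness constancy: image intensity is preserved along the motion (u, v)
I_x u + I_y v + I_t = 0

% Only the flow component along the gradient direction is constrained.
% This component is the normal flow:
\mathbf{u}_n = -\frac{I_t}{\lVert \nabla I \rVert^{2}} \, \nabla I ,
\qquad \nabla I = (I_x, I_y)^{\top}
```

Because $\mathbf{u}_n$ needs only local derivatives, it can be computed without the regularization step that full optical flow requires.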

Scalability in Neural Control of Musculoskeletal Robots

Jan 19, 2016

Abstract:Anthropomimetic robots are robots that sense, behave, interact and feel like humans. By this definition, anthropomimetic robots require human-like physical hardware and actuation, but also brain-like control and sensing. The most self-evident realization to meet those requirements would be a human-like musculoskeletal robot with a brain-like neural controller. While both musculoskeletal robotic hardware and neural control software have existed for decades, a scalable approach that could be used to build and control an anthropomimetic human-scale robot has not been demonstrated yet. Combining Myorobotics, a framework for musculoskeletal robot development, with SpiNNaker, a neuromorphic computing platform, we present the proof-of-principle of a system that can scale to dozens of neurally-controlled, physically compliant joints. At its core, it implements a closed-loop cerebellar model which provides real-time low-level neural control at minimal power consumption and maximal extensibility: higher-order (e.g., cortical) neural networks and neuromorphic sensors like silicon-retinae or -cochleae can naturally be incorporated.
