Daniel Deniz

Overview of the TalentCLEF 2025: Skill and Job Title Intelligence for Human Capital Management

Jul 17, 2025

Abstract: Advances in natural language processing and large language models are driving a major transformation in Human Capital Management, with a growing interest in building smart systems based on language technologies for talent acquisition, upskilling strategies, and workforce planning. However, the adoption and progress of these technologies critically depend on the development of reliable and fair models, properly evaluated on public data and open benchmarks, which have so far been unavailable in this domain. To address this gap, we present TalentCLEF 2025, the first evaluation campaign focused on skill and job title intelligence. The lab consists of two tasks: Task A - Multilingual Job Title Matching, covering English, Spanish, German, and Chinese; and Task B - Job Title-Based Skill Prediction, in English. Both corpora were built from real job applications, carefully anonymized, and manually annotated to reflect the complexity and diversity of real-world labor market data, including linguistic variability and gender-marked expressions. The evaluations included monolingual and cross-lingual scenarios and covered the evaluation of gender bias. TalentCLEF attracted 76 registered teams with more than 280 submissions. Most systems relied on information retrieval techniques built with multilingual encoder-based models fine-tuned with contrastive learning, and several of them incorporated large language models for data augmentation or re-ranking. The results show that the training strategies have a larger effect than the size of the model alone. TalentCLEF provides the first public benchmark in this field and encourages the development of robust, fair, and transferable language technologies for the labor market.

MELO: An Evaluation Benchmark for Multilingual Entity Linking of Occupations

Oct 10, 2024

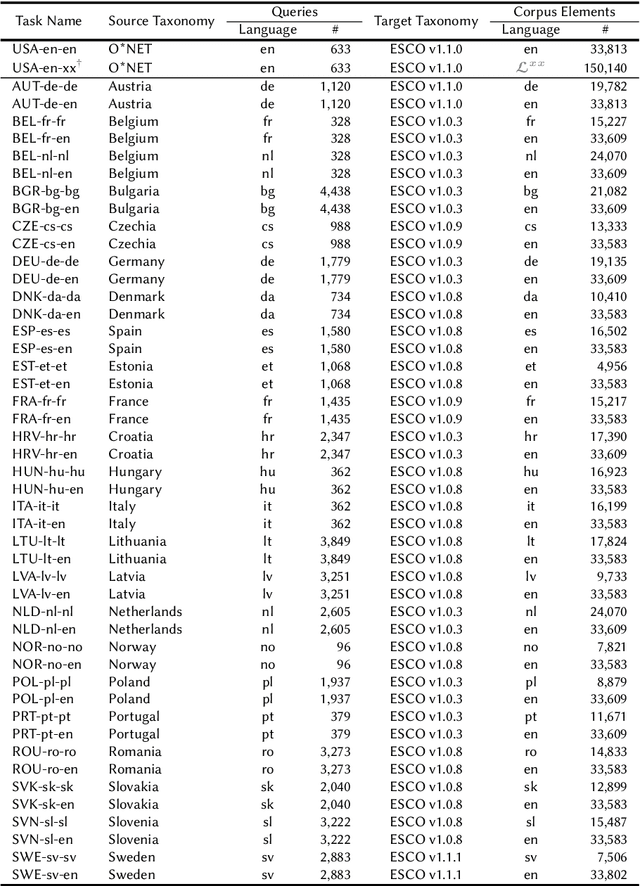

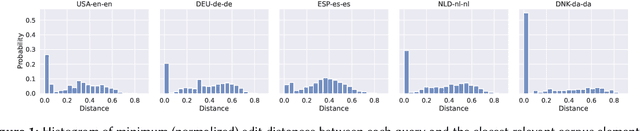

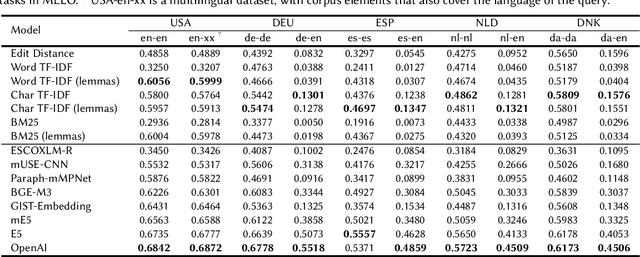

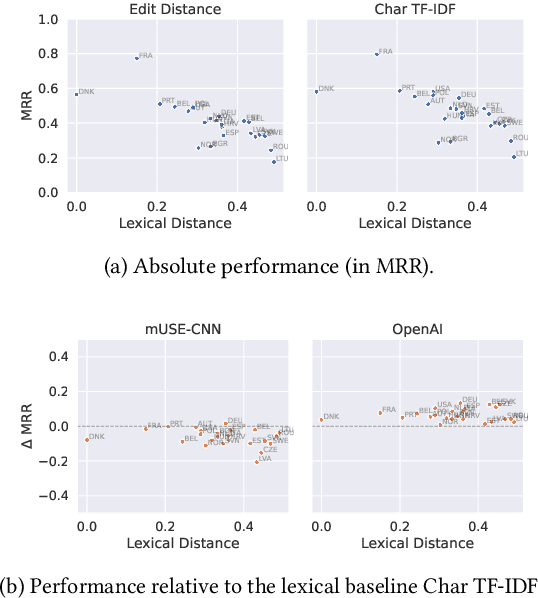

Abstract: We present the Multilingual Entity Linking of Occupations (MELO) Benchmark, a new collection of 48 datasets for evaluating the linking of entity mentions in 21 languages to the ESCO Occupations multilingual taxonomy. MELO was built using high-quality, pre-existing human annotations. We conduct experiments with simple lexical models and general-purpose sentence encoders, evaluated as bi-encoders in a zero-shot setup, to establish baselines for future research. The datasets and source code for standardized evaluation are publicly available at https://github.com/Avature/melo-benchmark
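To make the idea of a "simple lexical model" for linking a mention to a taxonomy entry concrete, here is a minimal sketch that ranks candidate occupation names by character n-gram cosine similarity. It is not the MELO baseline code: the function names, the trigram choice, and the toy occupation list are all assumptions made for illustration.

```python
from collections import Counter
from math import sqrt


def char_ngrams(text, n=3):
    """Count character n-grams of a lowercased, space-padded string."""
    padded = f" {text.lower().strip()} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def cosine(a, b):
    """Cosine similarity between two n-gram count vectors."""
    dot = sum(a[g] * b[g] for g in a.keys() & b.keys())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_candidates(mention, candidates, n=3):
    """Return the candidates sorted from most to least similar to the mention."""
    query = char_ngrams(mention, n)
    scored = [(cosine(query, char_ngrams(c, n)), c) for c in candidates]
    return [c for _, c in sorted(scored, key=lambda pair: pair[0], reverse=True)]


# Hypothetical toy taxonomy; a real setup would use ESCO occupation names.
occupations = ["software developer", "registered nurse", "civil engineer"]
ranking = rank_candidates("Senior Software Dev", occupations)
```

A bi-encoder setup would follow the same ranking pattern, with the n-gram vectors replaced by sentence-encoder embeddings of the mention and of each taxonomy entry.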

Event-based Vision for Early Prediction of Manipulation Actions

Jul 26, 2023

Abstract: Neuromorphic visual sensors are artificial retinas that output sequences of asynchronous events when brightness changes occur in the scene. These sensors offer many advantages, including very high temporal resolution, no motion blur, and smart data compression ideal for real-time processing. In this study, we introduce an event-based dataset on fine-grained manipulation actions and perform an experimental study on the use of transformers for action prediction with events. There is enormous interest in the fields of cognitive robotics and human-robot interaction in understanding and predicting human actions as early as possible. Early prediction allows anticipating complex stages for planning, enabling effective and real-time interaction. Our Transformer network uses events to predict manipulation actions as they occur, using online inference. The model succeeds at predicting actions early on, building up confidence over time and achieving state-of-the-art classification. Moreover, the attention-based transformer architecture allows us to study the role of the spatio-temporal patterns selected by the model. Our experiments show that the Transformer network captures the dynamic features of actions, outperforming video-based approaches and succeeding in scenarios where the differences between actions lie in very subtle cues. Finally, we release the new event dataset, which is the first in the literature for manipulation action recognition. Code will be available at https://github.com/DaniDeniz/EventVisionTransformer.

Reconfigurable Cyber-Physical System for Critical Infrastructure Protection in Smart Cities via Smart Video-Surveillance

Nov 29, 2020

Abstract: Automated surveillance is essential for the protection of Critical Infrastructures (CIs) in future Smart Cities. The dynamic environments and bandwidth requirements demand systems that adapt themselves to react when events of interest occur. We present a reconfigurable Cyber-Physical System for the protection of CIs using distributed cloud-edge smart video surveillance. Our local edge nodes perform people detection via Deep Learning. Processing is embedded in high-performance SoCs (Systems-on-Chip) and achieves real-time performance (approximately 100 frames per second, fps), which makes it possible to efficiently manage video streams from more camera sources at lower frame rates. A cloud server gathers results from the nodes to carry out biometric facial identification, tracking, and perimeter monitoring. A Quality and Resource Management module monitors data bandwidth and triggers reconfiguration by adapting the transmitted video resolution. This also enables flexible use of the network by multiple cameras while maintaining the accuracy of biometric identification. A real-world example shows a reduction of approximately 75% in bandwidth use with respect to the no-reconfiguration scenario.

* 13 pages, 8 figures and 5 tables
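The abstract above describes a module that monitors bandwidth and adapts the transmitted video resolution. As a rough illustration of that kind of policy, the sketch below picks the highest resolution whose estimated bitrate fits an equal per-camera share of the available bandwidth. The resolution ladder, the bitrate figures, and the function name are hypothetical; the abstract does not specify the actual decision rule.

```python
# Hypothetical resolution ladder: (label, estimated Mbit/s per stream),
# ordered from highest to lowest quality. Values are illustrative only.
RESOLUTIONS = [
    ("1080p", 8.0),
    ("720p", 4.0),
    ("480p", 2.0),
    ("240p", 0.8),
]


def select_resolution(available_mbps, num_cameras, ladder=RESOLUTIONS):
    """Pick the best resolution that fits an equal per-camera bandwidth share.

    Falls back to the lowest rung when even that exceeds the budget.
    """
    if num_cameras <= 0:
        raise ValueError("num_cameras must be positive")
    budget = available_mbps / num_cameras
    for label, cost_mbps in ladder:
        if cost_mbps <= budget:
            return label
    return ladder[-1][0]


# Four cameras sharing a link whose measured capacity drops over time.
plenty = select_resolution(40.0, 4)     # 10 Mbit/s per camera
congested = select_resolution(10.0, 4)  # 2.5 Mbit/s per camera
starved = select_resolution(1.0, 4)     # 0.25 Mbit/s per camera
```

An equal split is the simplest choice; a system that must preserve identification accuracy on specific cameras would more plausibly weight the shares by priority.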

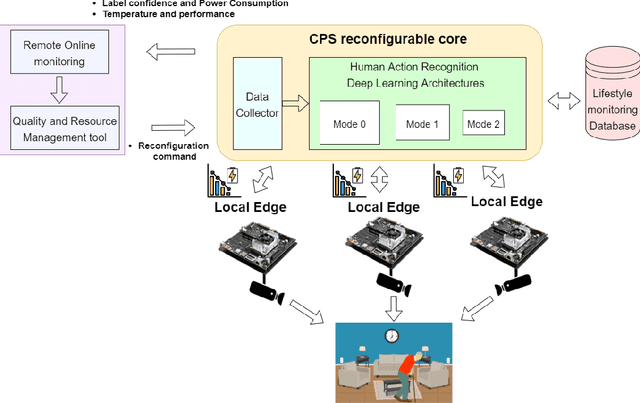

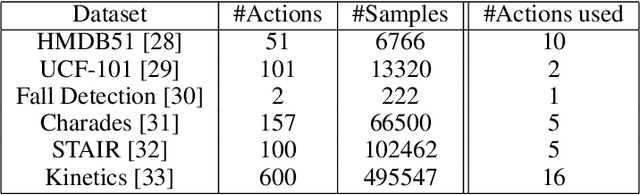

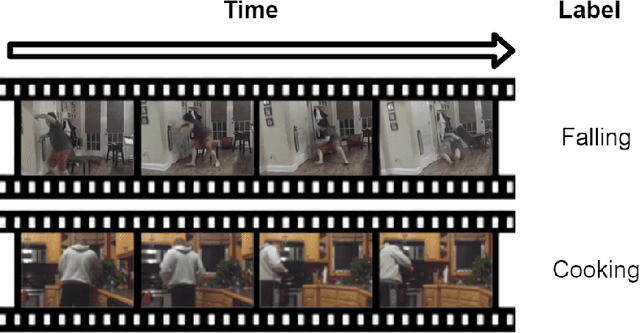

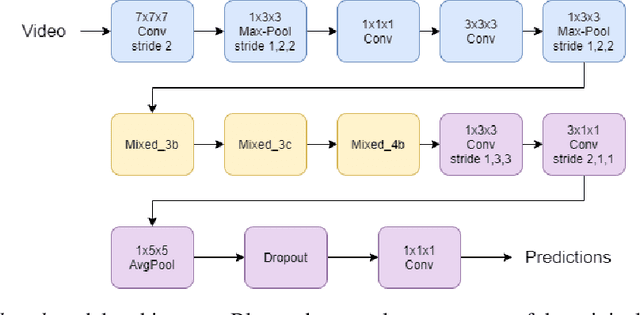

Reconfigurable Cyber-Physical System for Lifestyle Video-Monitoring via Deep Learning

Oct 07, 2020

Abstract: Indoor monitoring of people in their homes has become a popular application in Smart Health. Advances in Machine Learning and in hardware for embedded devices enable new distributed approaches for Cyber-Physical Systems (CPSs). In addition, changing environments and the need for cost reduction motivate novel reconfigurable CPS architectures. In this work, we propose a reconfigurable CPS for indoor monitoring that uses embedded local nodes (Nvidia Jetson TX2). We embed Deep Learning architectures to address Human Action Recognition. Local processing at these nodes lets us tackle some common issues: reduction of data bandwidth usage and preservation of privacy (no raw images are transmitted). Real-time processing is also facilitated, since each optimized node processes only its own local video feed. Regarding reconfiguration, a remote platform monitors CPS qualities, and a Quality and Resource Management (QRM) tool sends commands to the CPS core to trigger its reconfiguration. Our proposal is an energy-aware system that triggers reconfiguration based on energy consumption for battery-powered nodes. Reconfiguration reduces the energy consumption of the local nodes by up to 22%, extending the device operating time while preserving accuracy similar to that of the alternative with no reconfiguration.
