Dongmin Choi

Value Portrait: Understanding Values of LLMs with Human-aligned Benchmark

May 02, 2025

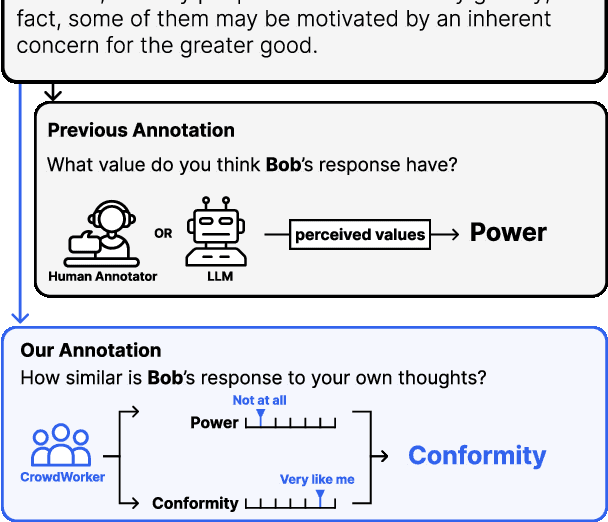

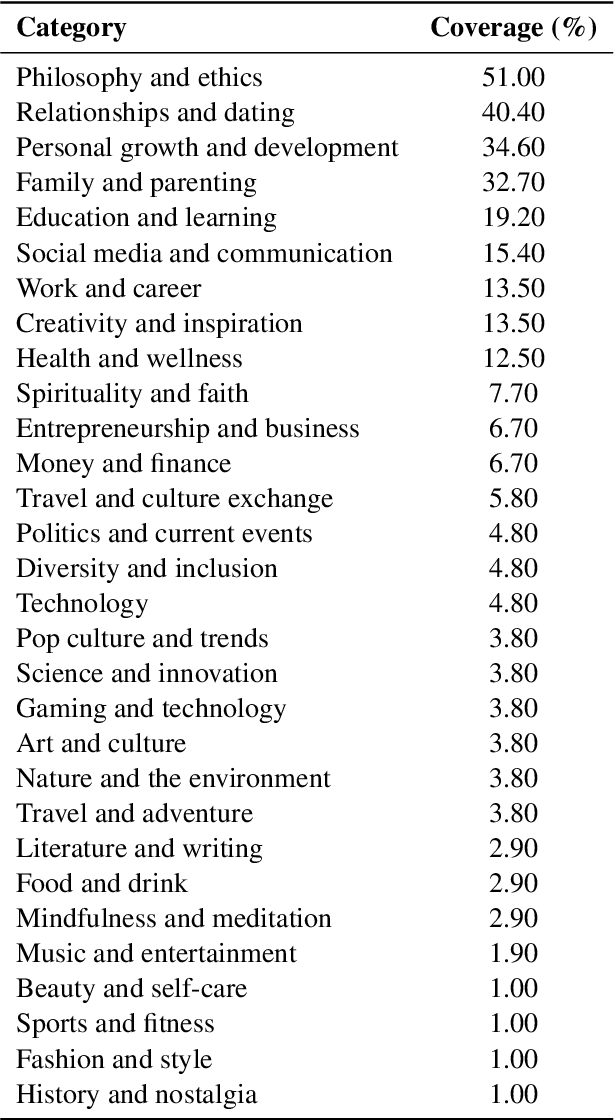

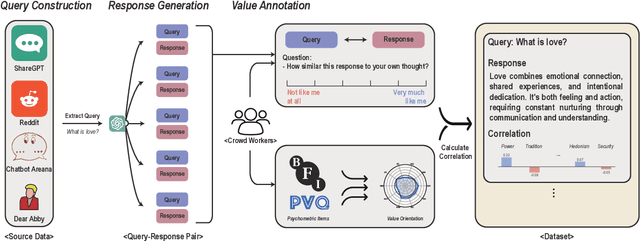

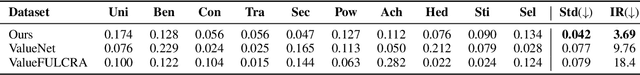

Abstract: Benchmarks for assessing the values of language models have become increasingly important due to the growing need for more authentic, human-aligned responses. However, existing benchmarks rely on human or machine annotations that are vulnerable to value-related biases. Furthermore, the tested scenarios often diverge from the real-world contexts in which models are commonly used to generate text and express values. To address these issues, we propose the Value Portrait benchmark, a reliable framework for evaluating LLMs' value orientations with two key characteristics. First, the benchmark consists of items that capture real-life user-LLM interactions, enhancing the relevance of assessment results to real-world LLM usage and thus their ecological validity. Second, each item is rated by human subjects based on its similarity to their own thoughts, and correlations between these ratings and the subjects' actual value scores are derived. This psychometrically validated approach ensures that items strongly correlated with specific values serve as reliable items for assessing those values. By evaluating 27 LLMs with our benchmark, we find that these models prioritize Benevolence, Security, and Self-Direction values while placing less emphasis on Tradition, Power, and Achievement values. Our analysis also reveals biases in how LLMs perceive various demographic groups, deviating from real human data.
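The item-validation step described in this abstract (correlating each item's similarity ratings with the raters' own value scores, then keeping strongly correlated items) can be illustrated with a minimal sketch. The abstract does not specify the correlation statistic, the cutoff, or the data layout, so the use of Pearson's r, the `threshold=0.3` cutoff, the function names, and the toy data below are all assumptions for illustration, not the authors' implementation.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def select_items(item_ratings, value_scores, threshold=0.3):
    """Keep items whose ratings correlate with the value scores at or above threshold.

    item_ratings: {item_id: [rating from subject 0, subject 1, ...]}
    value_scores: [value score of subject 0, subject 1, ...]
    threshold:    hypothetical cutoff, not taken from the paper
    """
    selected = {}
    for item_id, ratings in item_ratings.items():
        r = pearson(ratings, value_scores)
        if r >= threshold:
            selected[item_id] = r
    return selected


# Toy data (made up): five subjects' scores on one value dimension,
# and their similarity ratings for three candidate items.
benevolence_scores = [1.0, 2.0, 3.0, 4.0, 5.0]
item_ratings = {
    "item_a": [1, 2, 3, 4, 5],  # rises with the value score
    "item_b": [5, 4, 3, 2, 1],  # falls as the value score rises
    "item_c": [3, 3, 3, 3, 3],  # constant, carries no signal
}

selected = select_items(item_ratings, benevolence_scores)
print(selected)
```

In this toy setup only `item_a` is retained as an indicator of the value dimension; a real pipeline would repeat the selection per value and would likely also check statistical significance.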

iDet3D: Towards Efficient Interactive Object Detection for LiDAR Point Clouds

Dec 24, 2023

Abstract: Accurately annotating multiple 3D objects in LiDAR scenes is laborious and challenging. While a few previous studies have attempted to leverage semi-automatic methods for cost-effective bounding box annotation, such methods are limited in how efficiently they handle numerous multi-class objects. To effectively accelerate 3D annotation pipelines, we propose iDet3D, an efficient interactive 3D object detector. Supporting a user-friendly 2D interface, which eases the cognitive burden of exploring 3D space to provide click interactions, iDet3D enables users to annotate all objects in each scene with minimal interactions. Taking the sparse nature of 3D point clouds into account, we design a negative click simulation (NCS) to improve accuracy by reducing false-positive predictions. In addition, iDet3D incorporates two click propagation techniques to take full advantage of user interactions: (1) dense click guidance (DCG) for keeping user-provided information throughout the network and (2) spatial click propagation (SCP) for detecting other instances of the same class based on the user-specified objects. Through extensive experiments, we show that our method can construct precise annotations in a few clicks, demonstrating its practicality as an efficient annotation tool for 3D object detection.

ENInst: Enhancing Weakly-supervised Low-shot Instance Segmentation

Feb 20, 2023

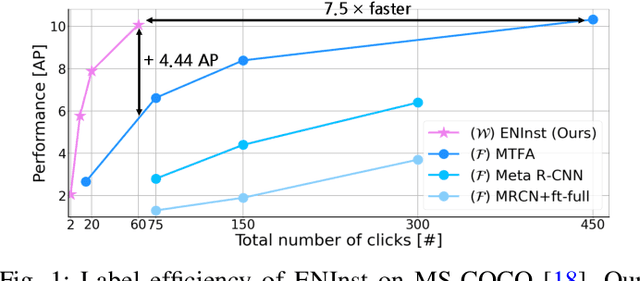

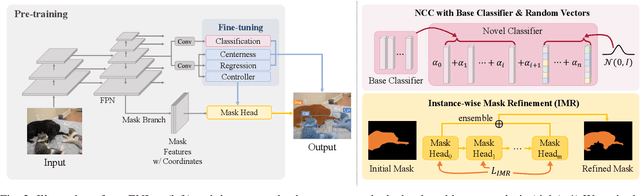

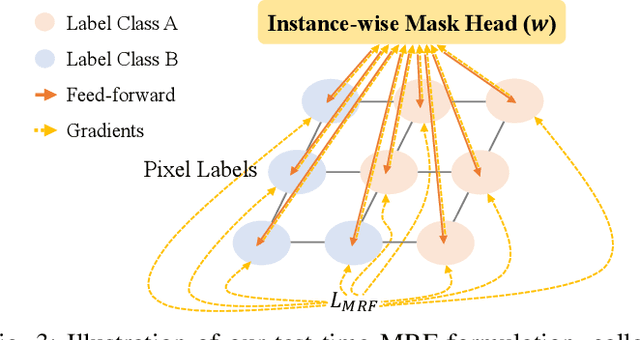

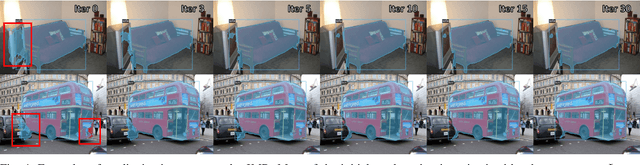

Abstract: We address weakly-supervised low-shot instance segmentation, an annotation-efficient training setting for dealing with novel classes effectively. Since it is an under-explored problem, we first investigate its difficulty and identify the performance bottleneck by conducting systematic analyses of model components and individual sub-tasks with a simple baseline model. Based on these analyses, we propose ENInst with sub-task enhancement methods: instance-wise mask refinement for enhancing pixel localization quality and novel classifier composition for improving classification accuracy. Our proposed method lifts the overall performance by enhancing the performance of each sub-task. We demonstrate that ENInst is 7.5 times more efficient in achieving performance comparable to existing fully-supervised few-shot models and even outperforms them at times.
