Donghoon Baek

FSL-LVLM: Friction-Aware Safety Locomotion using Large Vision Language Model in Wheeled Robots

Sep 15, 2024

Abstract: Wheeled-legged robots offer significant mobility and versatility but face substantial challenges when operating on slippery terrains. Traditional model-based controllers for these robots assume no slipping. While reinforcement learning (RL) helps quadruped robots adapt to different surfaces, recovering from slips remains challenging, especially for systems with few contact points. Estimating the ground friction coefficient is another open challenge. In this paper, we propose a novel friction-aware safety locomotion framework that integrates Large Vision Language Models (LVLMs) with an RL policy. Our approach explicitly incorporates the estimated friction coefficient into the RL policy, enabling the robot to adapt its behavior to the surface type before reaching it. We introduce a Friction-From-Vision (FFV) module, which leverages LVLMs to estimate ground friction coefficients, eliminating the need for large datasets and extensive training. The framework was validated on a customized wheeled inverted pendulum, and experimental results demonstrate that it increases the success rate in completing driving tasks by adjusting speed according to terrain type, while achieving better tracking performance than baseline methods. Our framework can be readily integrated with any other RL policy.

Real-to-Sim Adaptation via High-Fidelity Simulation to Control a Wheeled-Humanoid Robot with Unknown Dynamics

Mar 16, 2024

Abstract: Model-based control using a model linearized around the system's equilibrium point is a common approach to controlling a wheeled humanoid because of its lower computational load and ease of stability analysis. However, controlling a wheeled humanoid robot while it lifts an unknown object presents significant challenges, primarily due to the lack of knowledge of the object's dynamics. This paper presents a framework designed to explicitly predict the new equilibrium point in order to control a wheeled-legged robot with unknown dynamics. We estimated the total mass and center of mass of the system from its response to initially unknown dynamics, then calculated the new equilibrium point accordingly. To avoid using additional sensors (e.g., a force/torque sensor) and to reduce the effort of obtaining expensive real data, a data-driven approach is used with a novel real-to-sim adaptation. A more accurate nonlinear dynamics model, offering a closer representation of real-world physics, is injected into a rigid-body simulation for real-to-sim adaptation. The nonlinear dynamics model parameters were optimized using Particle Swarm Optimization. The efficacy of this framework was validated on a physical wheeled inverted pendulum, a simplified model of a wheeled-legged robot. The experimental results indicate that employing a more precise analytical model with optimized parameters significantly reduces the gap between simulation and reality, thus improving the efficiency of a model-based controller in controlling a wheeled robot with unknown dynamics.
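As a rough illustration of the "new equilibrium point" idea in the abstract above, the sketch below computes the static balancing pitch of a wheeled inverted pendulum once the total mass and center of mass are known. It is not the paper's estimator: the point-mass model, the body frame (origin at the wheel axle, x forward, z up), the sign convention for pitch, and all numeric values are assumptions made for illustration.

```python
import math

def combined_com(masses, positions):
    """Mass-weighted center of mass of point masses.

    positions are (x, z) pairs in an assumed body frame whose origin is
    the wheel axle, with x pointing forward and z pointing up the body.
    """
    total = sum(masses)
    x = sum(m * p[0] for m, p in zip(masses, positions)) / total
    z = sum(m * p[1] for m, p in zip(masses, positions)) / total
    return total, (x, z)

def equilibrium_pitch(com):
    """Pitch (rad) that places the CoM vertically above the wheel axle.

    Assumed convention: positive pitch leans the body forward.
    """
    x, z = com
    return math.atan2(-x, z)

def com_world_x(com, pitch):
    """Horizontal offset of the CoM from the axle after pitching the body."""
    x, z = com
    return x * math.cos(pitch) + z * math.sin(pitch)

# Hypothetical numbers: a 10 kg body and a 2 kg object held in front of it.
total_mass, com = combined_com([10.0, 2.0], [(0.0, 0.3), (0.25, 0.5)])
theta_eq = equilibrium_pitch(com)
print(f"total mass = {total_mass:.1f} kg, equilibrium pitch = {theta_eq:.4f} rad")
```

With the object held in front of the body, the resulting pitch is negative under this convention, meaning the robot leans back so that the combined center of mass sits over the axle. A linearized controller could then be re-centered on that pitch.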

Learning Inertial Parameter Identification of Unknown Object with Humanoid Robot using Sim-to-Real Adaptation

Sep 18, 2023

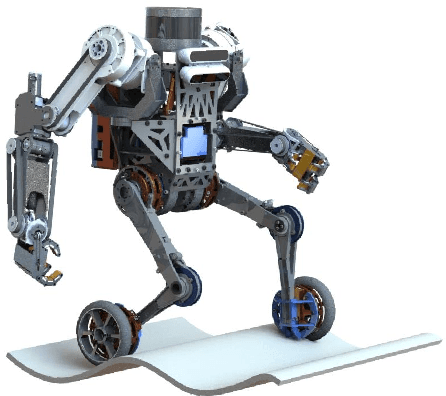

Abstract: Understanding the dynamics of unknown objects is crucial for collaborative robots, including humanoids, to interact with humans more safely and accurately. Most of the relevant literature relies on a force/torque sensor, prior knowledge of the object, a vision system, and a long-horizon trajectory, which are often impractical. Moreover, these methods often entail solving a nonlinear optimization problem, sometimes yielding physically inconsistent results. In this work, we propose fast learning-based inertial parameter estimation as a more practical approach. We acquire a reliable dataset in a high-fidelity simulation and train a time-series data-driven regression model (e.g., LSTM) to estimate the inertial parameters of unknown objects. We also introduce a novel sim-to-real adaptation method combining Robot System Identification and Gaussian Processes to directly transfer the trained model to real-world application. We demonstrate our method with a 4-DOF single manipulator of the physical wheeled humanoid robot SATYRR. Results show that our method can identify the inertial parameters of various unknown objects faster and more accurately than conventional methods.

Hybrid LMC: Hybrid Learning and Model-based Control for Wheeled Humanoid Robot via Ensemble Deep Reinforcement Learning

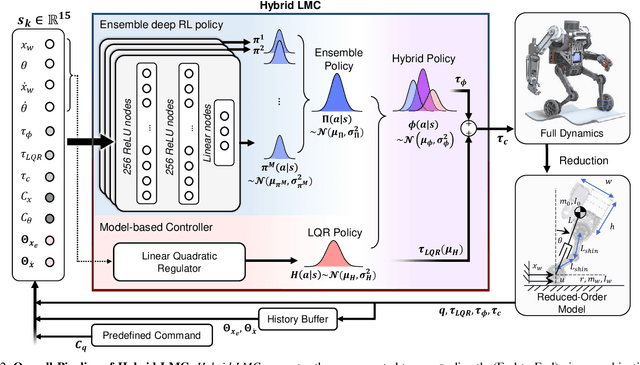

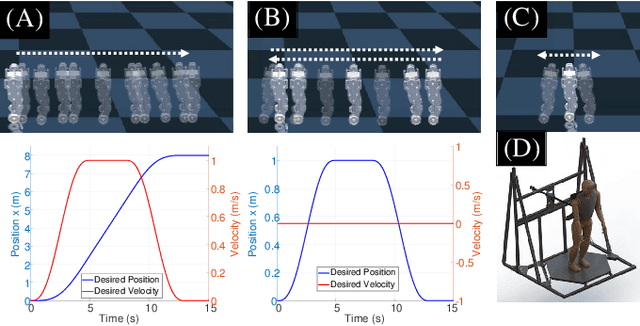

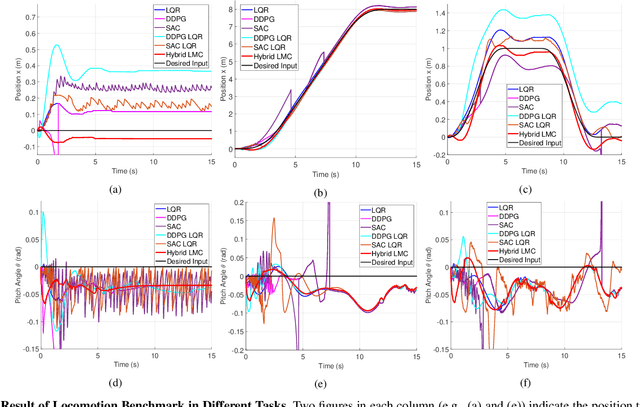

Apr 07, 2022

Abstract: Control of wheeled humanoid locomotion is a challenging problem due to the nonlinear dynamics and under-actuated characteristics of these robots. Traditionally, feedback controllers have been utilized for stabilization and locomotion. However, these methods are often limited by the fidelity of the underlying model used, the choice of controller, and the environmental variables considered (surface type, ground inclination, etc.). Recent advances in reinforcement learning (RL) offer promising methods to tackle some of these conventional feedback controller issues, but require large amounts of interaction data to learn. Here, we propose a hybrid learning and model-based controller, Hybrid LMC, that combines the strengths of a classical linear quadratic regulator (LQR) and ensemble deep reinforcement learning. The ensemble is composed of multiple Soft Actor-Critic (SAC) agents and is used to reduce the variance of the RL networks. By using a feedback controller in tandem, the network exhibits stable performance in the early stages of training. As a preliminary step, we explore the viability of Hybrid LMC in controlling wheeled locomotion of a humanoid robot over a set of different physical parameters in the MuJoCo simulator. Our results show that Hybrid LMC achieves better performance than other existing techniques and has increased sample efficiency.
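One way to picture a feedback controller working in tandem with an ensemble of learned policies is sketched below. The abstract does not say how Hybrid LMC combines the LQR and SAC outputs, so the additive combination, the averaging of ensemble actions, the gain matrix `K`, the stub policies, and the torque limit are all assumptions chosen for illustration.

```python
import numpy as np

def lqr_action(K, state):
    """State-feedback term u = -K x for a given (assumed) LQR gain K."""
    return -K @ state

def ensemble_action(policies, state):
    """Average the actions proposed by each ensemble member.

    Averaging is one simple way to reduce variance across members; it is
    an assumption here, not the paper's stated aggregation rule.
    """
    actions = np.stack([policy(state) for policy in policies])
    return actions.mean(axis=0)

def hybrid_action(K, policies, state, limit):
    """Sum the feedback and learned terms, then clip to actuator limits."""
    u = lqr_action(K, state) + ensemble_action(policies, state)
    return np.clip(u, -limit, limit)

# Hypothetical 4-state, 1-input system with three stub "policies" that
# stand in for trained SAC actors.
K = np.array([[1.0, 2.0, 0.5, 0.1]])
state = np.array([0.1, 0.0, -0.2, 0.0])
policies = [
    lambda s: np.array([0.2]),
    lambda s: np.array([0.4]),
    lambda s: np.array([0.6]),
]
u = hybrid_action(K, policies, state, limit=1.0)
print(u)
```

Under this arrangement the feedback term keeps the system near its equilibrium even while the learned term is still poor, which is consistent with the stable early-training behavior the abstract describes.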

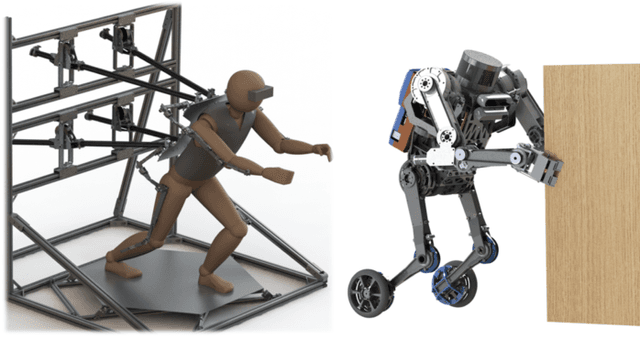

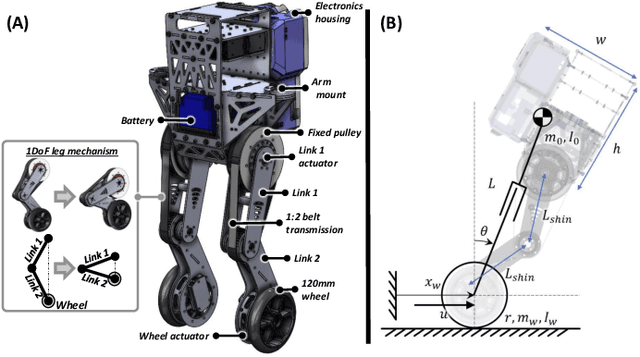

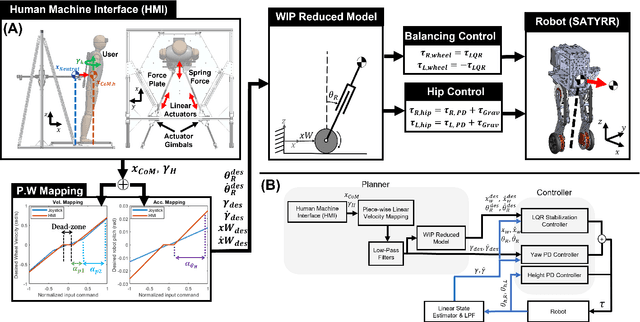

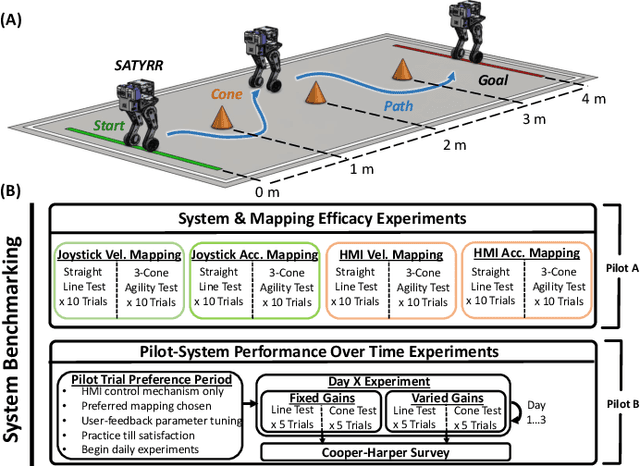

Hands-free Telelocomotion of a Wheeled Humanoid toward Dynamic Mobile Manipulation via Teleoperation

Mar 07, 2022

Abstract: Robotic systems that can dynamically combine manipulation and locomotion could take over dangerous or physically demanding labor. For instance, firefighter humanoid robots could leverage their body by leaning against collapsed building rubble to push it aside. Here we introduce a teleoperation system that targets the realization of these tasks using human whole-body motor skills. We describe a new wheeled humanoid platform, SATYRR, and a novel hands-free teleoperation architecture using a whole-body Human Machine Interface (HMI). This system enables telelocomotion of the humanoid robot using the operator's body motion, freeing their arms for manipulation tasks. In this study, we evaluate the efficacy of the proposed system on hardware and explore the control of SATYRR using two teleoperation mappings that map the operator's body pitch and twist to the robot's velocity or acceleration. Through experiments and user feedback, we showcase our preliminary findings on the pilot-system response. Results suggest that the HMI is capable of effectively telelocomoting SATYRR, that pilot preferences should dictate the appropriate motion mapping and gains, and that the pilot can learn to control the system better over time. This study represents a fundamental step towards the realization of combined manipulation and locomotion via teleoperation.
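The difference between the two teleoperation mappings mentioned above can be sketched for a single axis as follows. This is only an illustration: the class names, the linear gains, and the sample values are hypothetical, and the abstract does not specify the actual mapping functions used on SATYRR.

```python
from dataclasses import dataclass

@dataclass
class VelocityMapping:
    """Operator lean angle sets the commanded velocity directly."""
    gain: float

    def step(self, lean: float, dt: float) -> float:
        # dt is unused here; it is kept so both mappings share one interface.
        return self.gain * lean

@dataclass
class AccelerationMapping:
    """Operator lean angle sets an acceleration that is integrated over time."""
    gain: float
    velocity: float = 0.0

    def step(self, lean: float, dt: float) -> float:
        self.velocity += self.gain * lean * dt
        return self.velocity

# Hypothetical session: the operator holds a 0.1 rad lean for 10 control ticks.
vel_map = VelocityMapping(gain=2.0)
acc_map = AccelerationMapping(gain=2.0)
for _ in range(10):
    v_cmd = vel_map.step(0.1, dt=0.01)
    a_cmd = acc_map.step(0.1, dt=0.01)
print(v_cmd, a_cmd)
```

With the velocity mapping, returning to an upright posture stops the robot. With the acceleration mapping, the robot keeps its current speed until the operator leans the other way, which is one reason pilot preference could plausibly differ between the two.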
