Domenick Poster

Synthesis-Guided Feature Learning for Cross-Spectral Periocular Recognition

Nov 16, 2021

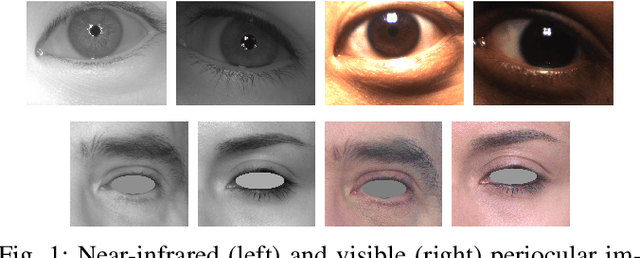

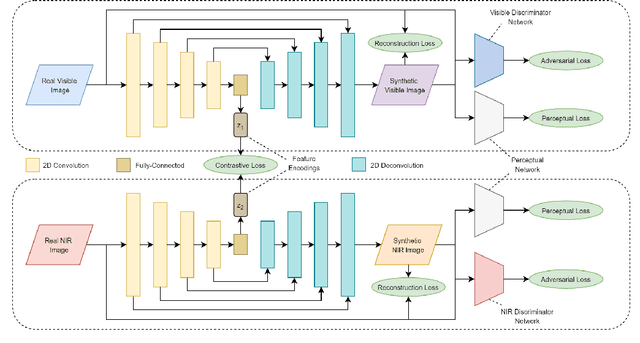

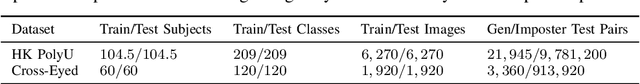

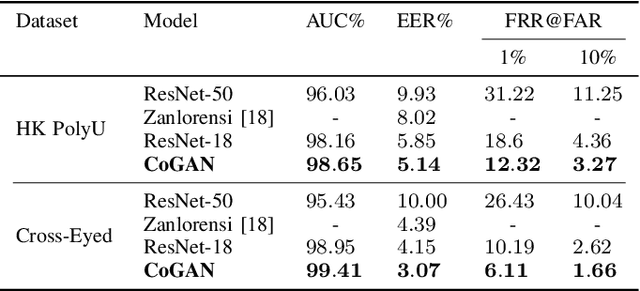

Abstract: A common yet challenging scenario in periocular biometrics is cross-spectral matching, in particular the matching of visible-wavelength against near-infrared (NIR) periocular images. We propose a novel approach to cross-spectral periocular verification that primarily focuses on learning a mapping from visible and NIR periocular images to a shared latent representational subspace, and supports this effort by simultaneously learning intra-spectral image reconstruction. We show that the auxiliary image reconstruction task (and in particular the reconstruction of high-level, semantic features) results in learning a more discriminative, domain-invariant subspace compared to the baseline while incurring no additional computational or memory costs at test time. The proposed Coupled Conditional Generative Adversarial Network (CoGAN) architecture uses paired generator networks (one operating on visible images and the other on NIR) composed of U-Nets with ResNet-18 encoders trained for feature learning via contrastive loss and for intra-spectral image reconstruction with adversarial, pixel-based, and perceptual reconstruction losses. Moreover, the proposed CoGAN model outperforms the current state of the art (SotA) in cross-spectral periocular recognition. On the Hong Kong PolyU benchmark dataset, we achieve 98.65% AUC and 5.14% EER compared to the SotA EER of 8.02%. On the Cross-Eyed dataset, we achieve 99.31% AUC and 3.99% EER versus the SotA EER of 4.39%.
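The abstract names contrastive loss as the feature-learning objective but does not give its exact form. As a rough illustration only, the sketch below uses the standard margin-based pairwise formulation: matching visible/NIR pairs are pulled together, and non-matching pairs are pushed apart until they are at least `margin` away. The function names, the Euclidean distance, and the default margin are assumptions for illustration, not details taken from the paper.

```python
import math


def euclidean_distance(a, b):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(emb_vis, emb_nir, same_identity, margin=1.0):
    """Margin-based contrastive loss for one visible/NIR embedding pair.

    Assumed formulation (not confirmed by the abstract):
      - same identity:      loss = d^2
      - different identity: loss = max(0, margin - d)^2
    where d is the Euclidean distance in the shared latent subspace.
    """
    d = euclidean_distance(emb_vis, emb_nir)
    if same_identity:
        # Genuine pair: any remaining distance is penalized.
        return d ** 2
    # Impostor pair: penalized only while closer than the margin.
    return max(0.0, margin - d) ** 2


if __name__ == "__main__":
    vis = [0.0, 0.0]
    nir = [3.0, 4.0]  # distance 5 from vis
    print(contrastive_loss(vis, nir, same_identity=True))
    print(contrastive_loss(vis, nir, same_identity=False, margin=1.0))
```

Under this formulation, an impostor pair that is already farther apart than the margin contributes nothing to the loss, so training effort concentrates on hard impostor pairs and on genuine pairs that remain far apart.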

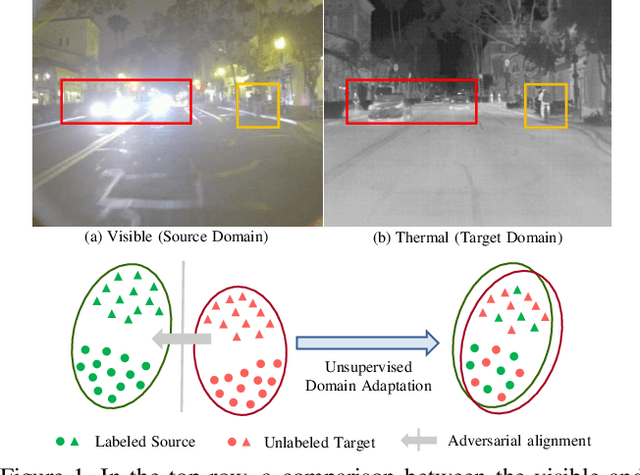

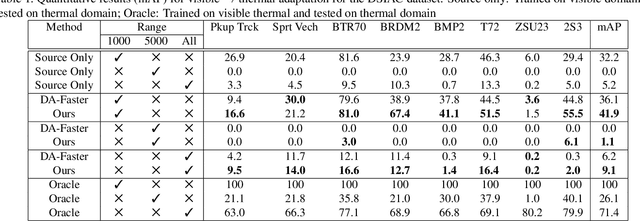

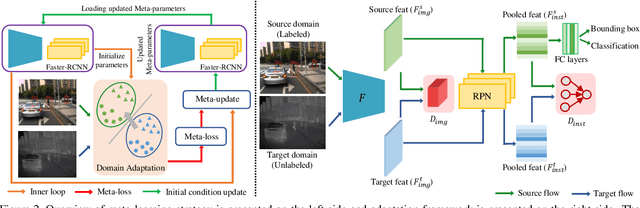

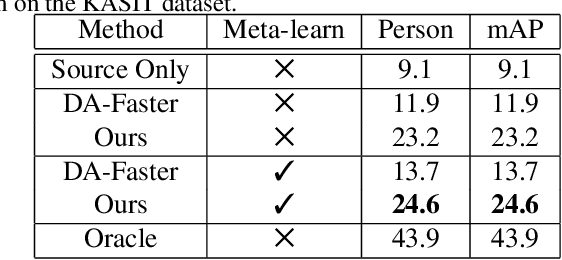

Meta-UDA: Unsupervised Domain Adaptive Thermal Object Detection using Meta-Learning

Oct 07, 2021

Abstract: Object detectors trained on large-scale RGB datasets are being extensively employed in real-world applications. However, these RGB-trained models suffer a performance drop under adverse illumination and lighting conditions. Infrared (IR) cameras are robust under such conditions and can be helpful in real-world applications. Though thermal cameras are widely used for military applications and increasingly for commercial applications, there is a lack of robust algorithms that exploit thermal imagery, owing to the limited availability of labeled thermal data. In this work, we aim to enhance object detection performance in the thermal domain by leveraging labeled visible-domain data in an Unsupervised Domain Adaptation (UDA) setting. We propose an algorithm-agnostic meta-learning framework to improve existing UDA methods instead of proposing a new UDA strategy. We achieve this by meta-learning the initial condition of the detector, which facilitates the adaptation process with fine updates without overfitting or getting stuck at local optima. However, meta-learning the initial condition for the detection scenario is computationally heavy due to long and intractable computation graphs. Therefore, we propose an online meta-learning paradigm which performs online updates, resulting in a short and tractable computation graph. We demonstrate the superiority of our method over many baselines in the UDA setting, producing a state-of-the-art thermal detector for the KAIST and DSIAC datasets.

A Large-Scale, Time-Synchronized Visible and Thermal Face Dataset

Jan 07, 2021

Abstract: Thermal face imagery, which captures the naturally emitted heat from the face, is limited in availability compared to face imagery in the visible spectrum. To help address this scarcity of thermal face imagery for research and algorithm development, we present the DEVCOM Army Research Laboratory Visible-Thermal Face Dataset (ARL-VTF). With over 500,000 images from 395 subjects, the ARL-VTF dataset represents, to the best of our knowledge, the largest collection of paired visible and thermal face images to date. The data was captured using a modern long-wave infrared (LWIR) camera mounted alongside a stereo setup of three visible-spectrum cameras. Variability in expressions, pose, and eyewear has been systematically recorded. The dataset has been curated with extensive annotations, metadata, and standardized protocols for evaluation. Furthermore, this paper presents extensive benchmark results and analysis on thermal face landmark detection and thermal-to-visible face verification by evaluating state-of-the-art models on the ARL-VTF dataset.