Dimitrije Antić

InteractVLM: 3D Interaction Reasoning from 2D Foundational Models

Apr 07, 2025

Abstract: We introduce InteractVLM, a novel method to estimate 3D contact points on human bodies and objects from single in-the-wild images, enabling accurate human-object joint reconstruction in 3D. This is challenging due to occlusions, depth ambiguities, and widely varying object shapes. Existing methods rely on 3D contact annotations collected via expensive motion-capture systems or tedious manual labeling, limiting scalability and generalization. To overcome this, InteractVLM harnesses the broad visual knowledge of large Vision-Language Models (VLMs), fine-tuned with limited 3D contact data. However, directly applying these models is non-trivial, as they reason only in 2D, while human-object contact is inherently 3D. Thus we introduce a novel Render-Localize-Lift module that: (1) embeds 3D body and object surfaces in 2D space via multi-view rendering, (2) trains a novel multi-view localization model (MV-Loc) to infer contacts in 2D, and (3) lifts these to 3D. Additionally, we propose a new task called Semantic Human Contact estimation, where human contact predictions are conditioned explicitly on object semantics, enabling richer interaction modeling. InteractVLM outperforms existing work on contact estimation and also facilitates 3D reconstruction from an in-the-wild image. Code and models are available at https://interactvlm.is.tue.mpg.de.
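The "lift" step of a render-localize-lift pipeline can be illustrated with a minimal sketch. It is not the InteractVLM implementation: the orthographic cameras, the tiny binary masks, the averaging rule, and the names `project_orthographic` and `lift_contacts` are all assumptions made for illustration, and visibility (occlusion) is ignored.

```python
def project_orthographic(vertex, drop_axis, resolution):
    """Map a vertex in [-1, 1]^3 to a pixel (row, col) by dropping one axis.

    Hypothetical camera model: a real pipeline would use the same camera
    that rendered the view.
    """
    kept = [c for i, c in enumerate(vertex) if i != drop_axis]

    def to_pixel(c):
        # Scale [-1, 1] to [0, resolution) and clamp to the image bounds.
        return min(resolution - 1, max(0, int((c + 1.0) / 2.0 * resolution)))

    return to_pixel(kept[1]), to_pixel(kept[0])


def lift_contacts(vertices, views, threshold=0.5):
    """Average per-view 2D contact scores at each vertex's projection.

    `views` is a list of (drop_axis, mask) pairs, where `mask` is a square
    grid of contact scores in [0, 1]. Occlusion is ignored (an assumption).
    """
    labels = []
    for vertex in vertices:
        scores = []
        for drop_axis, mask in views:
            row, col = project_orthographic(vertex, drop_axis, len(mask))
            scores.append(mask[row][col])
        labels.append(sum(scores) / len(scores) >= threshold)
    return labels


# Two toy 2x2 "predicted" contact masks: one view along z, one along x.
toy_views = [
    (2, [[0.0, 0.0], [0.0, 1.0]]),
    (0, [[0.0, 0.0], [0.0, 1.0]]),
]
toy_vertices = [(0.5, 0.5, 0.5), (-0.5, -0.5, -0.5)]
toy_labels = lift_contacts(toy_vertices, toy_views)
```

Averaging across views is only one possible aggregation rule; a visibility-weighted vote would be a natural alternative.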

SDFit: 3D Object Pose and Shape by Fitting a Morphable SDF to a Single Image

Sep 24, 2024

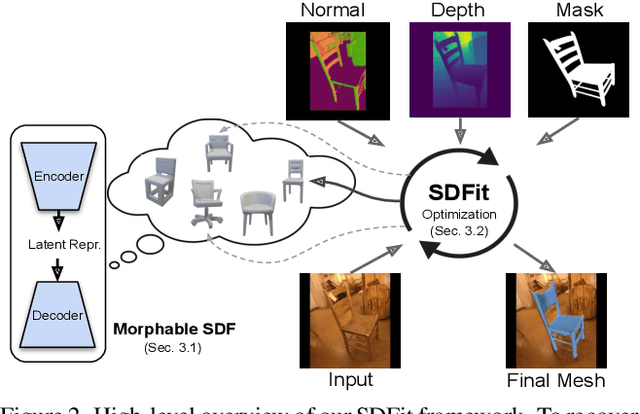

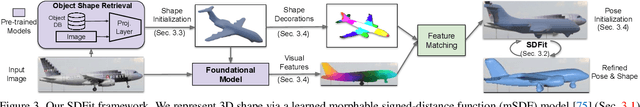

Abstract: We focus on recovering 3D object pose and shape from single images. This is highly challenging due to strong (self-)occlusions, depth ambiguities, the enormous shape variance, and lack of 3D ground truth for natural images. Recent work relies mostly on learning from finite datasets, so it struggles to generalize, while it focuses mostly on the shape itself, largely ignoring the alignment with pixels. Moreover, it performs feed-forward inference, so it cannot refine estimates. We tackle these limitations with a novel framework, called SDFit. To this end, we make three key observations: (1) Learned signed-distance-function (SDF) models act as a strong morphable shape prior. (2) Foundational models embed 2D images and 3D shapes in a joint space, and (3) also infer rich features from images. SDFit exploits these as follows. First, it uses a category-level morphable SDF (mSDF) model, called DIT, to generate 3D shape hypotheses. This mSDF is initialized by querying OpenShape's latent space conditioned on the input image. Then, it computes 2D-to-3D correspondences, by extracting and matching features from the image and mSDF. Last, it fits the mSDF to the image in a render-and-compare fashion, to iteratively refine estimates. We evaluate SDFit on the Pix3D and Pascal3D+ datasets of real-world images. SDFit performs roughly on par with state-of-the-art learned methods, but, uniquely, requires no re-training. Thus, SDFit is promising for generalizing in the wild, paving the way for future research. Code will be released.
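The idea of refining pose and shape iteratively, rather than in one feed-forward pass, can be sketched with a toy stand-in. This is not SDFit: there is no rendering, no learned mSDF, and no image features. A 2D circle SDF plays the role of the shape model (its radius is the "shape", its center the "pose"), and plain gradient descent minimizes the squared SDF at observed boundary points. All names and hyperparameters are assumptions chosen for illustration.

```python
import math


def circle_sdf(point, center, radius):
    """Signed distance from a 2D point to a circle (negative inside)."""
    return math.hypot(point[0] - center[0], point[1] - center[1]) - radius


def fit_circle_sdf(points, center, radius, lr=0.1, steps=500):
    """Gradient descent on the mean squared SDF at the observed points."""
    cx, cy = center
    n = len(points)
    for _ in range(steps):
        g_cx = g_cy = g_r = 0.0
        for px, py in points:
            dist = math.hypot(px - cx, py - cy)
            if dist < 1e-12:
                continue  # gradient is undefined exactly at the center
            d = dist - radius  # SDF value at this point
            # Analytic gradients of d**2 with respect to center and radius.
            g_cx += 2.0 * d * (-(px - cx) / dist) / n
            g_cy += 2.0 * d * (-(py - cy) / dist) / n
            g_r += 2.0 * d * (-1.0) / n
        cx -= lr * g_cx
        cy -= lr * g_cy
        radius -= lr * g_r
    return (cx, cy), radius


# Synthetic "observations": 16 points on a circle with known parameters.
true_center, true_radius = (2.0, 1.0), 1.5
observed = [
    (true_center[0] + true_radius * math.cos(2.0 * math.pi * k / 16),
     true_center[1] + true_radius * math.sin(2.0 * math.pi * k / 16))
    for k in range(16)
]
fitted_center, fitted_radius = fit_circle_sdf(observed, (1.5, 0.5), 1.0)
```

The initial guess is deliberately close to the target, mirroring the role a good initialization plays in any fitting-based approach.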

3D Whole-body Grasp Synthesis with Directional Controllability

Aug 29, 2024

Abstract: Synthesizing 3D whole-bodies that realistically grasp objects is useful for animation, mixed reality, and robotics. This is challenging, because the hands and body need to look natural w.r.t. each other, the grasped object, as well as the local scene (i.e., a receptacle supporting the object). Only recent work tackles this, with a divide-and-conquer approach; it first generates a "guiding" right-hand grasp, and then searches for bodies that match this. However, the guiding-hand synthesis lacks controllability and receptacle awareness, so it likely has an implausible direction (i.e., a body can't match this without penetrating the receptacle) and needs corrections through major post-processing. Moreover, the body search needs exhaustive sampling and is expensive. These are strong limitations. We tackle these with a novel method called CWGrasp. Our key idea is that performing geometry-based reasoning "early on," instead of "too late," provides rich "control" signals for inference. To this end, CWGrasp first samples a plausible reaching-direction vector (used later for both the arm and hand) from a probabilistic model built via raycasting from the object and collision checking. Then, it generates a reaching body with a desired arm direction, as well as a "guiding" grasping hand with a desired palm direction that complies with that of the arm. Finally, CWGrasp refines the body to match the "guiding" hand, while plausibly contacting the scene. Notably, generating already-compatible "parts" greatly simplifies the "whole." Moreover, CWGrasp uniquely tackles both right- and left-hand grasps. We evaluate on the GRAB and ReplicaGrasp datasets. CWGrasp outperforms baselines, at lower runtime and budget, while all components help performance. Code and models will be released.
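Sampling a reaching direction from a model built by raycasting and collision checking can be sketched in 2D as follows. This is a simplified illustration, not the CWGrasp model: the receptacle is a single axis-aligned box, rays are cast at a few evenly spaced angles, and every collision-free direction gets equal probability. The function names and all numeric values are assumptions.

```python
import math
import random


def ray_hits_box(origin, direction, box_min, box_max, max_dist):
    """Slab test: does the ray hit the axis-aligned box within max_dist?"""
    t_near, t_far = 0.0, max_dist
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            # Ray is parallel to this slab: it misses if it starts outside.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def direction_distribution(origin, box_min, box_max, n_rays=8, max_dist=1.0):
    """Cast rays from the object; free directions share probability equally."""
    directions = [
        (math.cos(2.0 * math.pi * k / n_rays), math.sin(2.0 * math.pi * k / n_rays))
        for k in range(n_rays)
    ]
    free = [not ray_hits_box(origin, d, box_min, box_max, max_dist) for d in directions]
    n_free = sum(free)
    probs = [1.0 / n_free if is_free else 0.0 for is_free in free]
    return directions, probs


# Object resting just above a table whose top surface lies at y = 0.
obj_pos = (0.0, 0.05)
table_min, table_max = (-1.0, -1.0), (1.0, 0.0)
ray_dirs, ray_probs = direction_distribution(obj_pos, table_min, table_max)

rng = random.Random(0)  # fixed seed so the sketch is repeatable
reach_dir = rng.choices(ray_dirs, weights=ray_probs, k=1)[0]
```

In this setup the three downward rays hit the table and receive zero probability, so any sampled direction approaches the object from the side or from above.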

CloSe: A 3D Clothing Segmentation Dataset and Model

Jan 22, 2024

Abstract: 3D clothing modeling and datasets play a crucial role in the entertainment, animation, and digital fashion industries. Existing work often lacks detailed semantic understanding or uses synthetic datasets, lacking realism and personalization. To address this, we first introduce CloSe-D: a novel large-scale dataset containing 3D clothing segmentation of 3167 scans, covering a range of 18 distinct clothing classes. Additionally, we propose CloSe-Net, the first learning-based 3D clothing segmentation model for fine-grained segmentation from colored point clouds. CloSe-Net uses local point features, body-clothing correlation, and a garment-class and point features-based attention module, improving performance over baselines and prior work. The proposed attention module enables our model to learn appearance and geometry-dependent clothing prior from data. We further validate the efficacy of our approach by successfully segmenting publicly available datasets of people in clothing. We also introduce CloSe-T, a 3D interactive tool for refining segmentation labels. Combining the tool with CloSe-Net in a continual learning setup demonstrates improved generalization on real-world data. Dataset, model, and tool can be found at https://virtualhumans.mpi-inf.mpg.de/close3dv24/.
