Dieter Pfoser

Near-real time fires detection using satellite imagery in Sudan conflict

Dec 08, 2025

Abstract: The challenges of the ongoing war in Sudan highlight the need for rapid monitoring and analysis of such conflicts. Advances in deep learning and readily available satellite remote sensing imagery allow for near real-time monitoring. This paper uses 4-band imagery from Planet Labs with a deep learning model to show that fire damage in armed conflicts can be monitored with minimal delay. We demonstrate the effectiveness of our approach using five case studies in Sudan. We show that, compared to a baseline, the automated method captures active fires and charred areas more accurately. Our results indicate that using 8-band imagery or time series of such imagery yields only marginal gains.

Extracting the U.S. building types from OpenStreetMap data

Sep 09, 2024

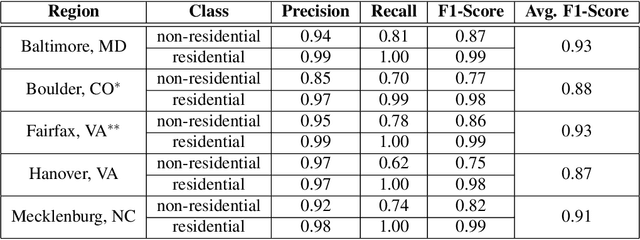

Abstract: Building type information is crucial for population estimation, traffic planning, urban planning, and emergency response applications. Although essential, such data is often not readily available. To alleviate this problem, this work creates a comprehensive dataset providing residential/non-residential building classification for the entire United States. We propose and utilize an unsupervised machine learning method to classify building types based on building footprints and available OpenStreetMap (OSM) information. The classification result is validated using authoritative ground truth data for select counties in the U.S. The validation shows high precision for non-residential building classification and high recall for residential buildings. We identified various approaches to improving the quality of the classification, such as removing sheds and garages from the dataset. Furthermore, analyzing the misclassifications revealed that they are mainly due to missing and scarce metadata in OSM. A major result of this work is a dataset classifying 67,705,475 buildings. We hope that this data is of value to the scientific community, including urban and transportation planners.

Are Large Language Models Geospatially Knowledgeable?

Oct 09, 2023

Abstract: Despite the impressive performance of Large Language Models (LLMs) on various natural language processing tasks, little is known about their comprehension of geographic data and their related ability to facilitate informed geospatial decision-making. This paper investigates the extent of geospatial knowledge, awareness, and reasoning abilities encoded within such pretrained LLMs. With a focus on autoregressive language models, we devise experimental approaches related to (i) probing LLMs for geo-coordinates to assess geospatial knowledge, (ii) using geospatial and non-geospatial prepositions to gauge their geospatial awareness, and (iii) utilizing a multidimensional scaling (MDS) experiment to assess the models' geospatial reasoning capabilities and to determine locations of cities based on prompting. Our results confirm that it takes not only larger but also more sophisticated LLMs to synthesize geospatial knowledge from textual information. As such, this research contributes to understanding the potential and limitations of LLMs in dealing with geospatial information.
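One plausible way to score experiment (i) is to measure the great-circle distance between a coordinate returned by a model and the reference coordinate of the city. The sketch below is only an illustration under that assumption: the abstract does not state which error metric the paper uses, and the function name `haversine_km` and the spherical Earth radius of 6371 km are choices made here.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; a spherical approximation (assumption)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    # Clamp to guard against rounding slightly above 1 for antipodal points.
    a = min(1.0, a)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# Hypothetical usage: compare a model's answer against a reference coordinate.
predicted = (38.9, -77.0)          # illustrative value, not a result from the paper
reference = (38.9072, -77.0369)    # approximate coordinates of Washington, D.C.
error_km = haversine_km(*predicted, *reference)
```

Averaging such errors over many cities would give a single number per model, which is one simple way to compare models of different sizes.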

Disentangled Dynamic Graph Deep Generation

Oct 14, 2020

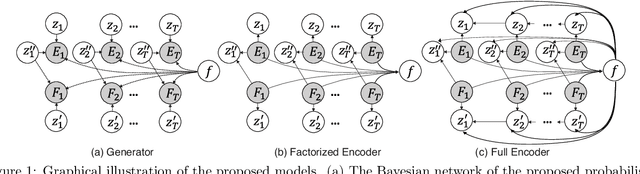

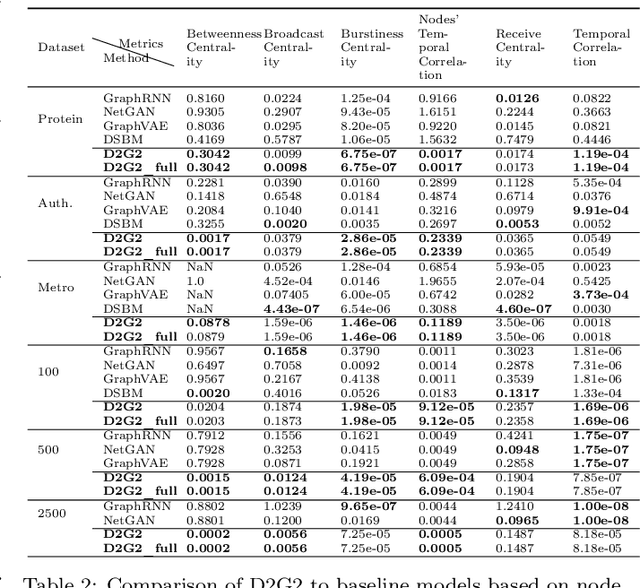

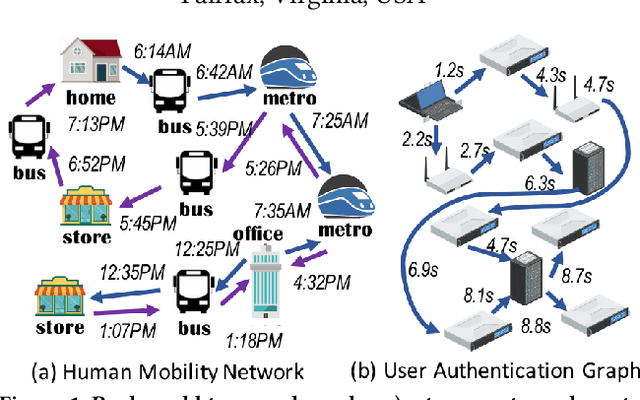

Abstract: Deep generative models for graphs have exhibited promising performance in ever-increasing domains such as the design of molecules (i.e., graphs of atoms) and structure prediction of proteins (i.e., graphs of amino acids). Existing work typically focuses on static rather than dynamic graphs, which are very important in applications such as protein folding, molecule reactions, and human mobility. Extending existing deep generative models from static to dynamic graphs is a challenging task, which requires handling the factorization of static and dynamic characteristics as well as mutual interactions among node and edge patterns. This paper proposes a novel framework of factorized deep generative models to achieve interpretable dynamic graph generation. Various generative models are proposed to characterize conditional independence among node, edge, static, and dynamic factors. Then, variational optimization strategies as well as dynamic graph decoders are proposed based on newly designed factorized variational autoencoders and recurrent graph deconvolutions. Extensive experiments on multiple datasets demonstrate the effectiveness of the proposed models.

Factorized Deep Generative Models for Trajectory Generation with Spatiotemporal-Validity Constraints

Sep 20, 2020

Abstract: Trajectory data generation is an important domain that characterizes the generative process of mobility data. Traditional methods rely heavily on predefined heuristics and distributions and are weak in learning unknown mechanisms. Inspired by the success of deep generative neural networks for images and texts, a fast-developing research topic is deep generative models for trajectory data, which can learn expressive, explanatory models of sophisticated latent patterns. This is a nascent yet promising domain for many applications. We first propose novel deep generative models factorizing time-variant and time-invariant latent variables that characterize global and local semantics, respectively. We then develop new inference strategies based on variational inference and constrained optimization to encapsulate spatiotemporal validity. New deep neural network architectures have been developed to implement the inference and generation models with newly generalized latent variable priors. The proposed methods achieved significant improvements in quantitative and qualitative evaluations in extensive experiments.

TG-GAN: Continuous-time Temporal Graph Generation with Deep Generative Models

Jun 09, 2020

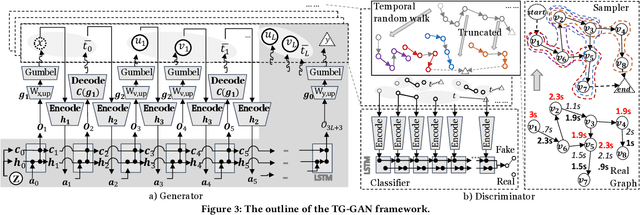

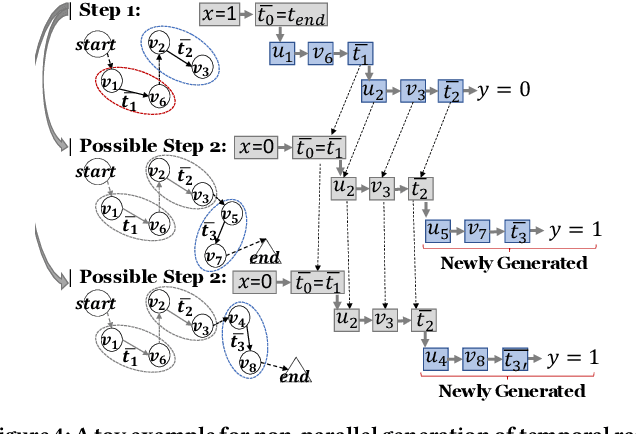

Abstract: Recent deep generative models for static graphs, which are being actively developed, have achieved significant success in areas such as molecule design. However, many real-world problems involve temporal graphs whose topology and attribute values evolve dynamically over time, including important applications such as protein folding, human mobility networks, and social network growth. Deep generative models for temporal graphs are not yet well understood, and existing techniques for static graphs are not adequate for temporal graphs since they cannot 1) encode and decode continuously-varying graph topology chronologically, 2) enforce validity via temporal constraints, or 3) ensure efficiency for information-lossless temporal resolution. To address these challenges, we propose a new model, called "Temporal Graph Generative Adversarial Network" (TG-GAN), for continuous-time temporal graph generation, by modeling the deep generative process for truncated temporal random walks and their compositions. Specifically, we first propose a novel temporal graph generator that jointly models truncated edge sequences, time budgets, and node attributes, with novel activation functions that enforce temporal validity constraints under a recurrent architecture. In addition, a new temporal graph discriminator is proposed, which combines time and node encoding operations over a recurrent architecture to distinguish the generated sequences from the real ones sampled by a newly developed truncated temporal random walk sampler. Extensive experiments on both synthetic and real-world datasets demonstrate that TG-GAN significantly outperforms the comparison methods in efficiency and effectiveness.
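To make the notion of a truncated temporal random walk concrete, the following is a minimal sketch, not the sampler from the paper. It assumes a temporal graph given as a list of `(source, target, time)` edges, starts from a uniformly chosen edge, and repeatedly follows an outgoing edge with a strictly later timestamp until a maximum length is reached or no valid edge remains. The function name, the uniform choice among candidates, and the strict time ordering are all assumptions made for illustration.

```python
import random
from collections import defaultdict


def sample_temporal_walk(edges, max_len, rng):
    """Sample a time-respecting walk of at most max_len edges.

    edges: non-empty list of (u, v, t) tuples.
    rng:   a random.Random instance, passed in for reproducibility.
    """
    # Index outgoing edges by source node for quick lookup.
    outgoing = defaultdict(list)
    for u, v, t in edges:
        outgoing[u].append((u, v, t))

    walk = [rng.choice(edges)]
    while len(walk) < max_len:
        _, node, now = walk[-1]
        # Temporal validity: the next edge must leave the current node
        # and occur strictly after the current edge.
        candidates = [e for e in outgoing.get(node, []) if e[2] > now]
        if not candidates:
            break  # walk ends early when no valid continuation exists
        walk.append(rng.choice(candidates))
    return walk


# Hypothetical toy graph; node names and times are illustrative only.
toy_edges = [("a", "b", 1.0), ("b", "c", 2.0), ("c", "a", 3.0), ("a", "c", 0.5)]
walk = sample_temporal_walk(toy_edges, max_len=3, rng=random.Random(0))
```

Truncating at `max_len` keeps every sampled sequence bounded, which is the property a recurrent generator or discriminator would rely on when processing walks in batches.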

Station-to-User Transfer Learning: Towards Explainable User Clustering Through Latent Trip Signatures Using Tidal-Regularized Non-Negative Matrix Factorization

Apr 27, 2020

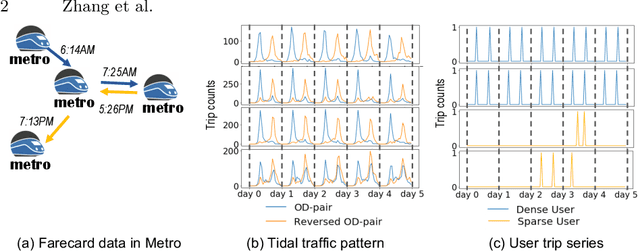

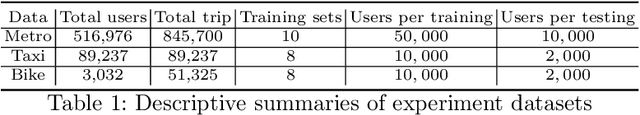

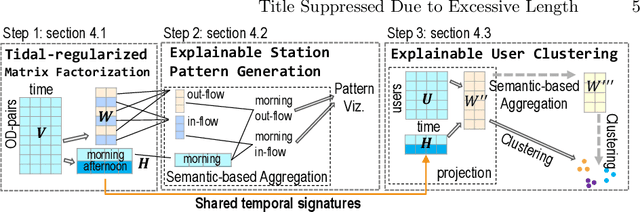

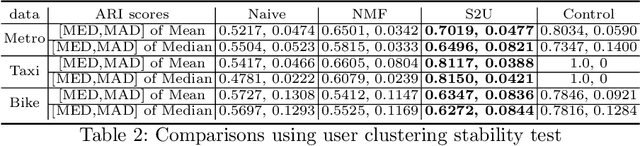

Abstract: Urban areas provide us with a treasure trove of available data capturing almost every aspect of a population's life. This work focuses on mobility data and how it can help improve our understanding of urban mobility patterns. Readily available and sizable farecard data captures trips in a public transportation network. However, such data typically lacks temporal modalities, and as such the task of inferring trip semantics, station function, and user profile is quite challenging. As existing approaches focus on either station-level or user-level signals, they are prone to overfitting and generate less credible and insightful results. To properly learn such characteristics from trip data, we propose a Collective Learning Framework through Latent Representation, which augments user-level learning with collective patterns learned from station-level signals. This framework uses a novel, so-called Tidal-Regularized Non-negative Matrix Factorization method, which incorporates domain knowledge in the form of temporal passenger flow patterns into generic Non-negative Matrix Factorization. To evaluate our model performance, a user stability test based on the classical Rand Index is introduced as a metric to benchmark different unsupervised learning models. We provide a qualitative analysis of the station functions and user profiles for the Washington D.C. metro and show how our method supports spatiotemporal intra-city mobility exploration.
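For readers unfamiliar with the Rand Index mentioned above, here is a minimal sketch of the classical definition: the fraction of item pairs on which two clusterings agree (both place the pair together, or both place it apart). How the paper builds its user stability test on top of this is not specified in the abstract, so the snippet shows only the index itself; the function name `rand_index` is chosen here.

```python
from itertools import combinations


def rand_index(labels_a, labels_b):
    """Classical Rand Index between two clusterings of the same items."""
    if len(labels_a) != len(labels_b):
        raise ValueError("label lists must have the same length")
    n = len(labels_a)
    if n < 2:
        raise ValueError("need at least two items to form a pair")

    agreements = 0
    total = 0
    for i, j in combinations(range(n), 2):
        same_a = labels_a[i] == labels_a[j]
        same_b = labels_b[i] == labels_b[j]
        # A pair counts as an agreement when both clusterings treat it alike.
        agreements += same_a == same_b
        total += 1
    return agreements / total


# Cluster ids are arbitrary: relabeling does not change the score.
identical_up_to_names = rand_index([0, 0, 1, 1], [1, 1, 0, 0])
crossed = rand_index([0, 0, 1, 1], [0, 1, 0, 1])
```

One possible stability check, stated here as an assumption rather than the paper's procedure, would be to cluster the same users on two different time windows and compare the resulting label lists with this function.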
