Dewald Swart

High Speed Neuromorphic Vision-Based Inspection of Countersinks in Automated Manufacturing Processes

Apr 08, 2023

Abstract: Countersink inspection is crucial in various automated assembly lines, especially in the aerospace and automotive sectors. Advancements in machine vision introduced automated robotic inspection of countersinks using laser scanners and monocular cameras. Nevertheless, these sensing pipelines require the robot to pause on each hole for inspection because of high latency and measurement uncertainty under motion, which prolongs the execution time of the inspection task. The neuromorphic vision sensor, on the other hand, has the potential to expedite the countersink inspection process, but its unconventional output precludes the use of traditional image processing techniques. Therefore, novel event-based perception algorithms need to be introduced. We propose a countersink detection approach based on event-based motion compensation and the mean-shift clustering principle. In addition, our framework presents a robust event-based circle detection algorithm to precisely estimate the depth of the countersink specimens. The proposed approach expedites the inspection process by a factor of 10 compared to conventional countersink inspection methods. The approach was validated in over 50 trials on three countersink workpiece variants. The experimental results show that our method provides a precision of 0.025 mm for countersink depth inspection despite the low resolution of commercially available neuromorphic cameras.
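The abstract does not say how the circle detection step works. As a rough illustration of the general idea of fitting a circle to edge events, the following minimal sketch uses a standard algebraic least-squares circle fit on pixel coordinates. This is a generic textbook technique swapped in for illustration, not the authors' algorithm; the function name `fit_circle` and the sample centre and radius are hypothetical.

```python
import math


def fit_circle(points):
    """Algebraic least-squares circle fit (hypothetical helper, not from the paper).

    points: iterable of (x, y) pixel coordinates, e.g. event locations on a hole edge.
    Returns (cx, cy, r).
    """
    points = list(points)
    n = len(points)
    if n < 3:
        raise ValueError("need at least three points")

    # Work in coordinates centred on the centroid to simplify the normal equations.
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n

    suu = suv = svv = suuu = svvv = suvv = svuu = 0.0
    for x, y in points:
        u, v = x - mx, y - my
        suu += u * u
        suv += u * v
        svv += v * v
        suuu += u * u * u
        svvv += v * v * v
        suvv += u * v * v
        svuu += v * u * u

    # Solve the 2x2 system [[suu, suv], [suv, svv]] @ [uc, vc] = [bu, bv].
    det = suu * svv - suv * suv
    if abs(det) < 1e-12:
        raise ValueError("points are collinear or degenerate")
    bu = 0.5 * (suuu + suvv)
    bv = 0.5 * (svvv + svuu)
    uc = (bu * svv - bv * suv) / det
    vc = (suu * bv - suv * bu) / det

    r = math.sqrt(uc * uc + vc * vc + (suu + svv) / n)
    return mx + uc, my + vc, r


# Usage with synthetic edge points on a circle (assumed values, for illustration only).
edge = [(120 + 30 * math.cos(2 * math.pi * k / 12),
         85 + 30 * math.sin(2 * math.pi * k / 12)) for k in range(12)]
cx, cy, r = fit_circle(edge)
```

An algebraic fit is cheap enough to run per event batch, but it is sensitive to outliers, so a practical pipeline would likely pair it with some form of outlier rejection.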

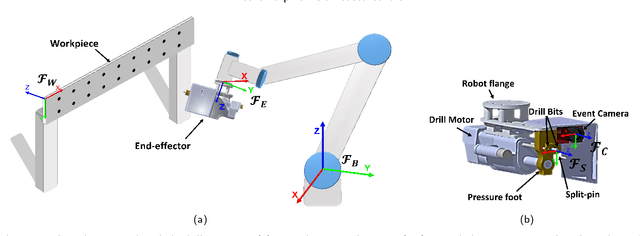

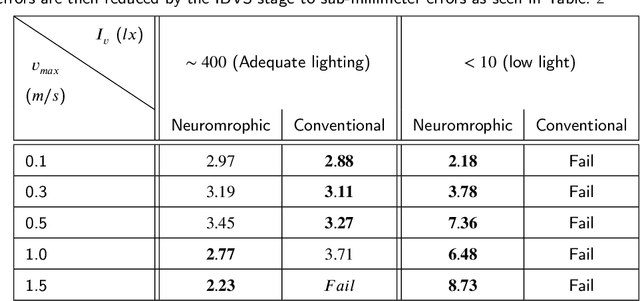

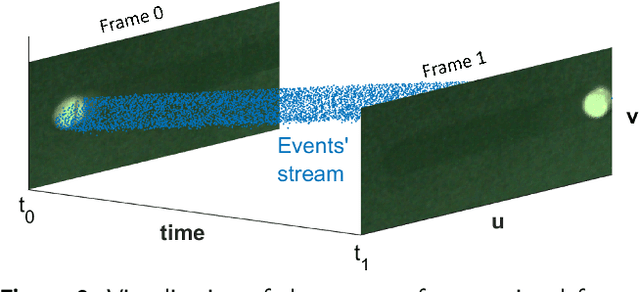

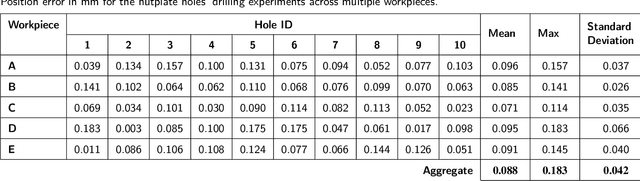

Neuromorphic Vision Based Control for the Precise Positioning of Robotic Drilling Systems

Jan 05, 2022

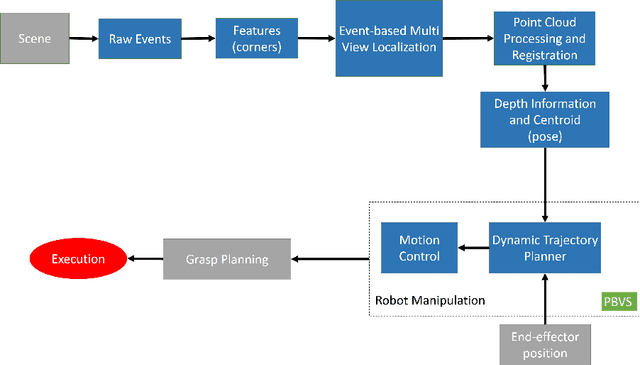

Abstract: The manufacturing industry is currently witnessing a paradigm shift with the unprecedented adoption of industrial robots, and machine vision is a key perception technology that enables these robots to perform precise operations in unstructured environments. However, the sensitivity of conventional vision sensors to lighting conditions and high-speed motion limits the reliability and work rate of production lines. Neuromorphic vision is a recent technology with the potential to address the challenges of conventional vision through its high temporal resolution, low latency, and wide dynamic range. In this paper, and for the first time, we propose a novel neuromorphic vision-based controller for faster and more reliable machining operations, and present a complete robotic system capable of performing drilling tasks with sub-millimeter accuracy. Our proposed system localizes the target workpiece in 3D using two perception stages that we developed specifically for the asynchronous output of neuromorphic cameras. The first stage performs multi-view reconstruction for an initial estimate of the workpiece's pose, and the second stage refines this estimate for a local region of the workpiece using circular hole detection. The robot then precisely positions the drilling end-effector and drills the target holes on the workpiece using a combined position-based and image-based visual servoing approach. The proposed solution is validated experimentally for drilling nutplate holes on workpieces placed arbitrarily in an unstructured environment with uncontrolled lighting. Experimental results demonstrate the effectiveness of our solution, with an average positional error of less than 0.1 mm, and show that the use of neuromorphic vision overcomes the lighting and speed limitations of conventional cameras.
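To give a feel for the image-based half of the visual servoing step, here is a minimal sketch of the classical proportional image-based law restricted to planar camera translation at a fixed, known depth. It is a simplified textbook case under stated assumptions, not the controller described in the paper; the names `ibvs_step` and `simulate`, and the depth, gain, and time-step values, are hypothetical.

```python
def ibvs_step(s, s_star, depth, gain):
    """One proportional IBVS command for a single point feature.

    s, s_star: current and desired feature positions in normalized image coordinates.
    depth: assumed constant distance Z from the camera to the feature plane (metres).
    With translation in x and y only, the interaction matrix is L = -(1/Z) * I,
    so v = -gain * inverse(L) * e reduces to v = gain * Z * e.
    """
    ex, ey = s[0] - s_star[0], s[1] - s_star[1]
    return gain * depth * ex, gain * depth * ey


def simulate(s0, s_star, depth=0.3, gain=2.0, dt=0.01, steps=500):
    """Integrate the feature motion induced by the commanded camera velocity."""
    s = list(s0)
    for _ in range(steps):
        vx, vy = ibvs_step(s, s_star, depth, gain)
        # Feature kinematics for this simplified case: s_dot = L v = -(1/Z) v.
        s[0] += dt * (-vx / depth)
        s[1] += dt * (-vy / depth)
    return tuple(s)


# Usage: drive a detected hole centre toward the image centre (assumed values).
final = simulate(s0=(0.10, -0.05), s_star=(0.0, 0.0))
```

Under this law the feature error decays exponentially at a rate set by the gain. A full six-degree-of-freedom controller would use the complete interaction matrix and its pseudo-inverse.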

Real-Time Grasping Strategies Using Event Camera

Jul 15, 2021

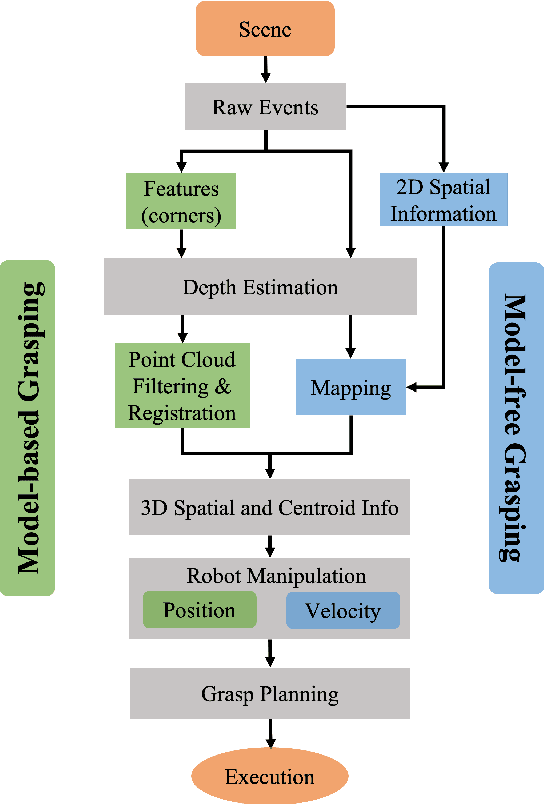

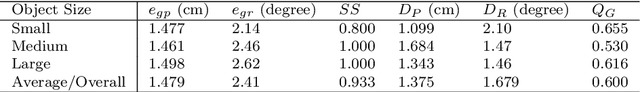

Abstract: Robotic vision plays a key role in perceiving the environment in grasping applications. However, conventional frame-based robotic vision suffers from motion blur and a low sampling rate, and may not meet the automation needs of evolving industrial requirements. This paper, for the first time, proposes an event-based robotic grasping framework for multiple known and unknown objects in a cluttered scene. Compared with standard frame-based vision, neuromorphic vision offers a microsecond-level sampling rate and no motion blur. Building on that, model-based and model-free approaches are developed for grasping known and unknown objects, respectively. In the model-based approach, an event-based multi-view method is used to localize the objects in the scene, and point cloud processing then clusters and registers the objects. In contrast, the proposed model-free approach uses the developed event-based object segmentation, visual servoing, and grasp planning to localize, align to, and grasp the target object. The proposed approaches are experimentally validated with objects of different sizes, using a UR10 robot with an eye-in-hand neuromorphic camera and a Barrett hand gripper. Moreover, the robustness of the two proposed event-based grasping approaches is validated in a low-light environment. This low-light operating ability is a clear advantage over grasping with standard frame-based vision. Furthermore, the developed model-free approach has the advantage of handling unknown objects without prior knowledge, unlike the proposed model-based approach.
