David R. Johnson

InfoGain Wavelets: Furthering the Design of Diffusion Wavelets for Graph-Structured Data

Apr 08, 2025

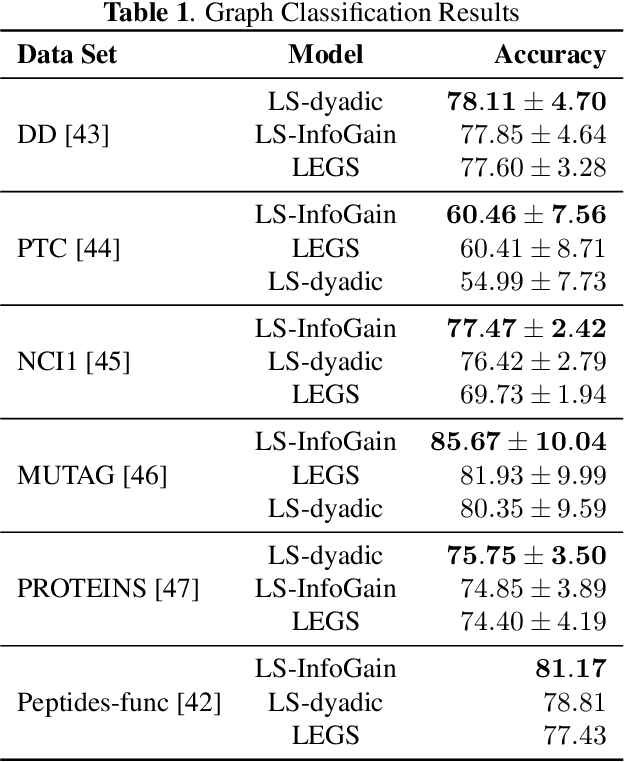

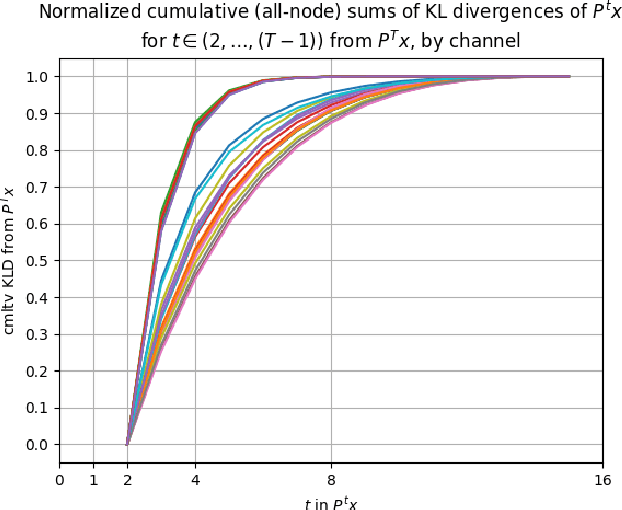

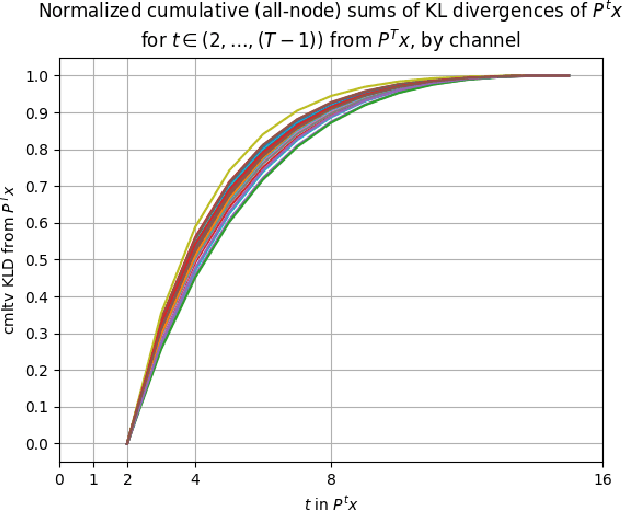

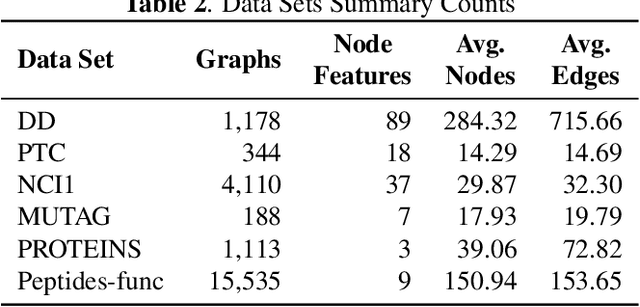

Abstract: Diffusion wavelets extract information from graph signals at different scales of resolution by utilizing graph diffusion operators raised to various powers, known as diffusion scales. Traditionally, the diffusion scales are chosen to be dyadic integers, $2^j$. Here, we propose a novel, unsupervised method for selecting the diffusion scales based on ideas from information theory. We then show that our method can be incorporated into wavelet-based GNNs via graph classification experiments.
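
For readers unfamiliar with the traditional dyadic construction that the abstract refers to, the following is a minimal sketch, not the paper's method. It assumes a lazy random walk operator $P = \frac{1}{2}(I + AD^{-1})$ and wavelets $\Psi_0 = I - P$, $\Psi_j = P^{2^{j-1}} - P^{2^j}$; the abstract does not specify the operator, and the function names and the 6-node cycle graph are hypothetical choices made for illustration.

```python
import numpy as np

def lazy_random_walk(adj):
    """Assumed diffusion operator P = 0.5 * (I + A D^{-1}); needs no isolated nodes."""
    deg = adj.sum(axis=0)
    # Dividing by deg broadcasts over columns, i.e. computes A D^{-1}.
    return 0.5 * (np.eye(adj.shape[0]) + adj / deg)

def dyadic_wavelets(P, J):
    """Return [Psi_0, ..., Psi_J] and the low-pass operator P^(2^J)."""
    n = P.shape[0]
    wavelets = [np.eye(n) - P]          # Psi_0 = I - P
    current = P                          # holds P^(2^(j-1)) at step j
    for _ in range(1, J + 1):
        nxt = current @ current          # squaring doubles the diffusion scale
        wavelets.append(current - nxt)   # Psi_j = P^(2^(j-1)) - P^(2^j)
        current = nxt
    return wavelets, current

# Hypothetical example: a 6-node cycle graph with one signal on its nodes.
n = 6
adj = np.zeros((n, n))
for i in range(n):
    adj[i, (i + 1) % n] = adj[(i + 1) % n, i] = 1.0

P = lazy_random_walk(adj)
wavelets, lowpass = dyadic_wavelets(P, J=3)
x = np.arange(n, dtype=float)
coeffs = np.stack([psi @ x for psi in wavelets])  # shape (J + 1, n)
```

Under these assumptions the wavelets and the low-pass operator telescope to the identity, so no information in the signal is discarded. The method described in the abstract replaces the fixed scales $2^j$ with scales selected from the data.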

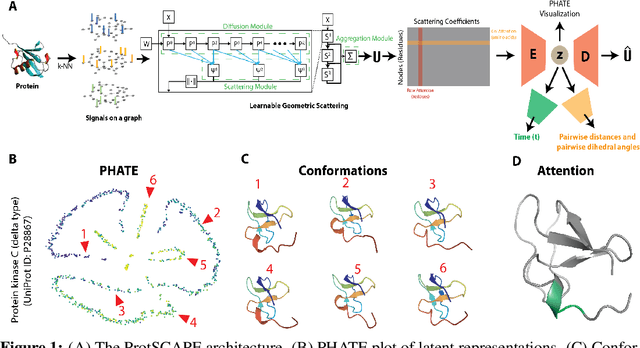

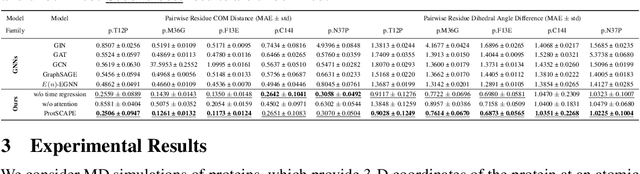

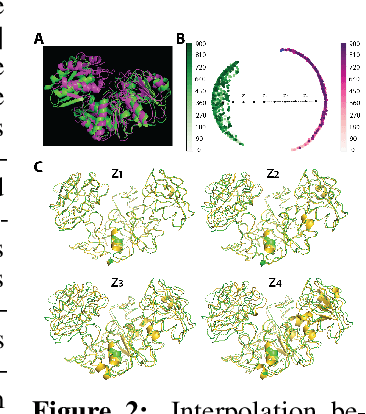

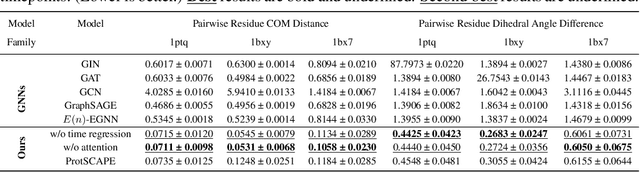

ProtSCAPE: Mapping the landscape of protein conformations in molecular dynamics

Oct 27, 2024

Abstract: Understanding the dynamic nature of protein structures is essential for comprehending their biological functions. While significant progress has been made in predicting static folded structures, modeling protein motions on microsecond to millisecond scales remains challenging. To address these challenges, we introduce a novel deep learning architecture, Protein Transformer with Scattering, Attention, and Positional Embedding (ProtSCAPE), which leverages the geometric scattering transform alongside transformer-based attention mechanisms to capture protein dynamics from molecular dynamics (MD) simulations. ProtSCAPE utilizes the multi-scale nature of the geometric scattering transform to extract features from protein structures conceptualized as graphs and integrates these features with dual attention structures that focus on residues and amino acid signals, generating latent representations of protein trajectories. Furthermore, ProtSCAPE incorporates a regression head to enforce temporally coherent latent representations.

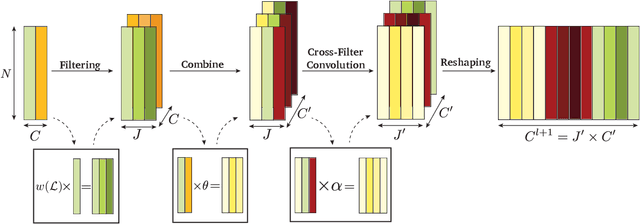

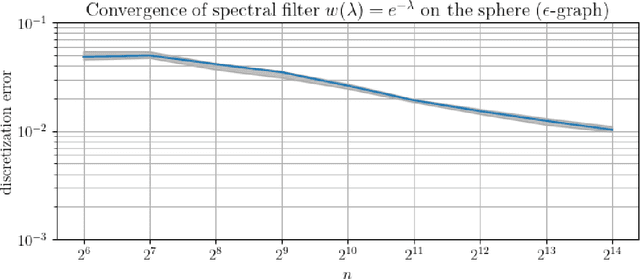

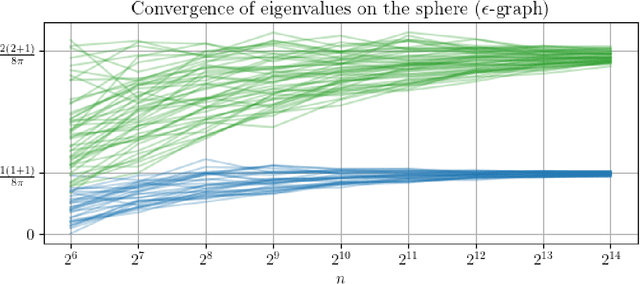

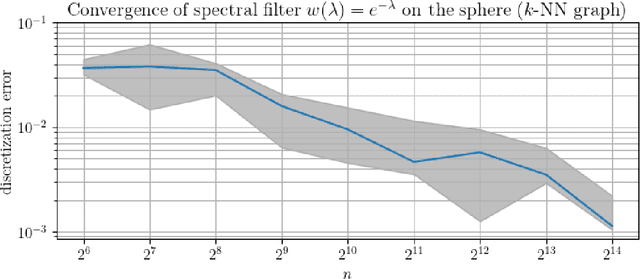

Convergence of Manifold Filter-Combine Networks

Oct 18, 2024

Abstract: In order to better understand manifold neural networks (MNNs), we introduce Manifold Filter-Combine Networks (MFCNs). The filter-combine framework parallels the popular aggregate-combine paradigm for graph neural networks (GNNs) and naturally suggests many interesting families of MNNs which can be interpreted as the manifold analog of various popular GNNs. We then propose a method for implementing MFCNs on high-dimensional point clouds that relies on approximating the manifold by a sparse graph. We prove that our method is consistent in the sense that it converges to a continuum limit as the number of data points tends to infinity.
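
The filter-then-combine idea on a point cloud can be illustrated with a rough sketch. Everything concrete here is an assumption for illustration rather than the paper's construction: a symmetrized k-nearest-neighbor graph as the sparse approximation, an unnormalized graph Laplacian, a heat-kernel spectral filter $e^{-tL}$, a random combine matrix `theta`, and a ReLU nonlinearity. The helper names are hypothetical.

```python
import numpy as np

def knn_graph(points, k):
    """Symmetrized, unweighted k-nearest-neighbor adjacency matrix (assumed graph)."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(-1)
    np.fill_diagonal(d2, np.inf)               # exclude self-neighbors
    idx = np.argsort(d2, axis=1)[:, :k]        # k closest points per row
    n = len(points)
    W = np.zeros((n, n))
    W[np.repeat(np.arange(n), k), idx.ravel()] = 1.0
    return np.maximum(W, W.T)                  # make the graph undirected

def filter_combine_layer(W, X, theta, t=1.0):
    """Filter each feature channel with exp(-t L), then combine channels with theta."""
    L = np.diag(W.sum(axis=1)) - W             # unnormalized graph Laplacian
    evals, evecs = np.linalg.eigh(L)
    H = evecs @ np.diag(np.exp(-t * evals)) @ evecs.T   # spectral filter
    return np.maximum(H @ X @ theta, 0.0)      # combine, then ReLU

# Hypothetical data: 200 points sampled from the unit sphere in R^3.
rng = np.random.default_rng(0)
pts = rng.normal(size=(200, 3))
pts /= np.linalg.norm(pts, axis=1, keepdims=True)

W = knn_graph(pts, k=8)
X = pts.copy()                                 # coordinates as input features
theta = rng.normal(size=(3, 4))                # combine matrix: 3 -> 4 channels
out = filter_combine_layer(W, X, theta)
```

The consistency result in the abstract concerns how such graph-based approximations behave as the number of sampled points grows; this sketch only shows the shape of a single layer on a fixed sample.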
