David Broniatowski

Detecting East Asian Prejudice on Social Media

May 08, 2020

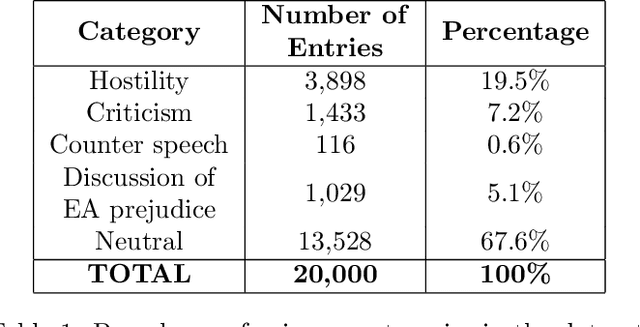

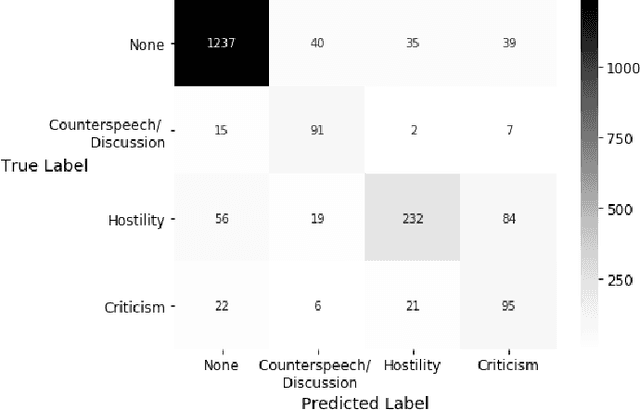

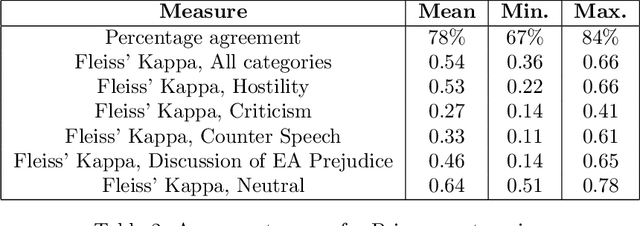

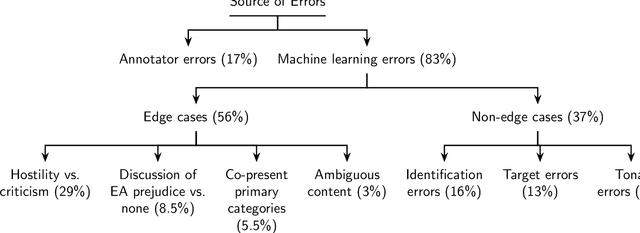

Abstract: The outbreak of COVID-19 has transformed societies across the world as governments tackle the health, economic and social costs of the pandemic. It has also raised concerns about the spread of hateful language and prejudice online, especially hostility directed against East Asia. In this paper we report on the creation of a classifier that detects and categorizes social media posts from Twitter into four classes: Hostility against East Asia, Criticism of East Asia, Meta-discussions of East Asian prejudice and a neutral class. The classifier achieves an F1 score of 0.83 across all four classes. We provide our final model (coded in Python), as well as a new 20,000-tweet training dataset used to make the classifier, two analyses of hashtags associated with East Asian prejudice and the annotation codebook. The classifier can be implemented by other researchers, assisting with both online content moderation processes and further research into the dynamics, prevalence and impact of East Asian prejudice online during this global pandemic.
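For readers unfamiliar with the metric, the following is a minimal sketch of how an F1 score over four classes can be computed. It is not the authors' evaluation code: the abstract does not say whether the reported 0.83 is macro-, micro- or weighted-averaged, so the macro average used here is an assumption, and the short label names (`hostility`, `criticism`, `meta`, `neutral`) are hypothetical stand-ins for the four classes.

```python
from collections import Counter

# Hypothetical short names for the four classes named in the abstract.
LABELS = ["hostility", "criticism", "meta", "neutral"]


def per_class_f1(y_true, y_pred, labels=LABELS):
    """Return a dict mapping each label to its F1 score."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted this class, but it was wrong
            fn[truth] += 1  # missed the true class
    scores = {}
    for label in labels:
        # F1 = 2TP / (2TP + FP + FN); defined as 0.0 when the class never occurs.
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores[label] = 2 * tp[label] / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred, labels=LABELS):
    """Unweighted mean of per-class F1 (assumed averaging scheme)."""
    scores = per_class_f1(y_true, y_pred, labels)
    return sum(scores.values()) / len(labels)
```

Calling `macro_f1(gold_labels, predicted_labels)` on two equal-length lists of label strings returns a value between 0 and 1. A macro average weights all four classes equally, which matters when the neutral class dominates the data.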

Pro-Russian Biases in Anti-Chinese Tweets about the Novel Coronavirus

Apr 18, 2020

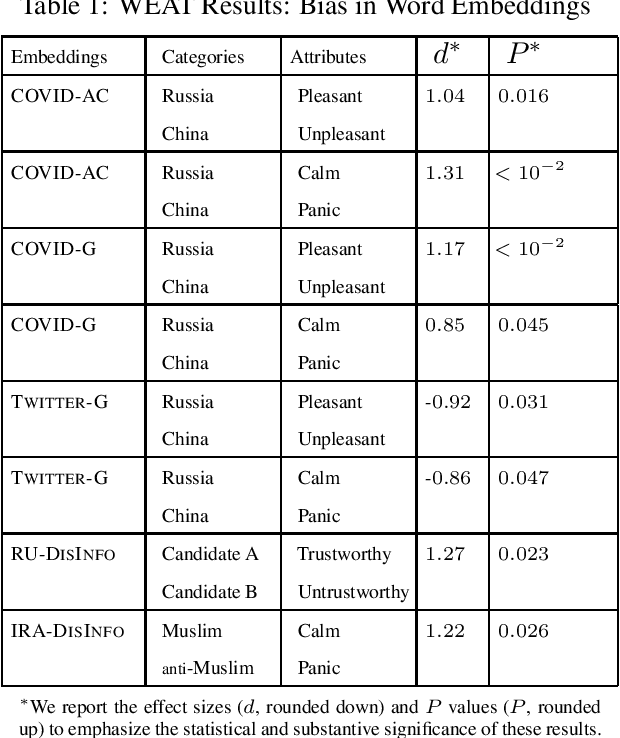

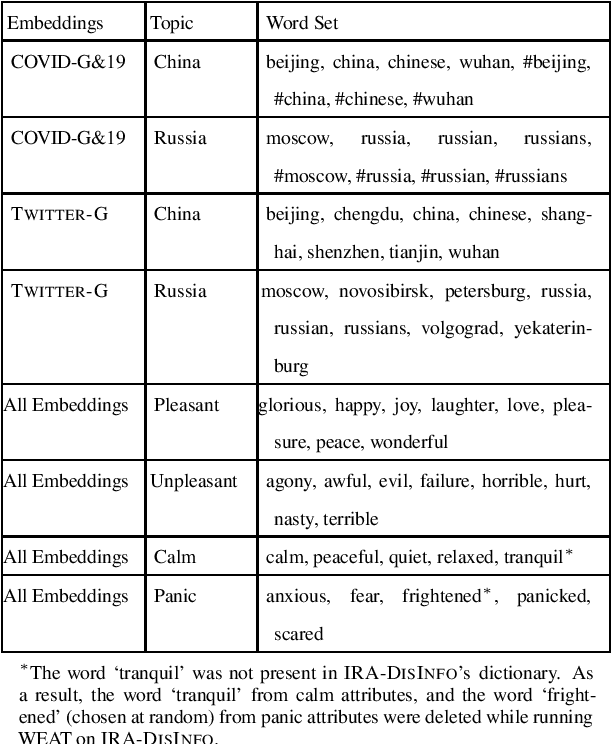

Abstract: The recent COVID-19 pandemic, which was first detected in Wuhan, China, has been linked to increased anti-Chinese sentiment in the United States. Recently, Broniatowski et al. found that foreign powers, and especially Russia, were implicated in information operations using public health crises to promote discord -- including racial conflict -- in American society (Broniatowski, 2018). This brief considers the problem of using artificial intelligence to automatically detect changes in overall attitudes that may be associated with emerging information operations. Accurate analysis of these emerging topics usually requires laborious manual work, with experts annotating millions of tweets to identify biases in new topics. We introduce extensions of the Word Embedding Association Test from Caliskan et al. to a new domain (Caliskan, 2017). This practical and unsupervised method is applied to quantify biases being promoted in information operations. Analyzing historical information operations from Russia's interference in the 2016 U.S. presidential elections, we quantify biased attitudes toward presidential candidates and sentiment toward Muslim groups. We next apply this method to a corpus of tweets containing anti-Chinese hashtags. We find that roughly 1% of tweets in our corpus reference Russian-funded news sources and use anti-Chinese hashtags. Beyond the expected anti-Chinese attitudes, we find that this corpus as a whole contains pro-Russian attitudes, which are not present in a control Twitter corpus containing general tweets. Additionally, 4% of the users in this corpus were suspended within a week. These findings may indicate the presence of abusive account activity associated with rapid changes in attitudes around the COVID-19 public health crisis, suggesting potential information operations.
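As background, the following is a minimal sketch of the standard Word Embedding Association Test effect size from Caliskan et al. It illustrates the base test only, not the extensions this paper introduces, which the abstract does not detail. The function names and the toy 2-D vectors are hypothetical, and the use of the sample standard deviation is an assumption; real use would pass embeddings trained on the tweet corpus.

```python
import math
from statistics import mean, stdev


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(w, attrs_a, attrs_b):
    """s(w, A, B): mean similarity of w to attribute set A minus that to B."""
    return mean(cosine(w, a) for a in attrs_a) - mean(cosine(w, b) for b in attrs_b)


def weat_effect_size(targets_x, targets_y, attrs_a, attrs_b):
    """Difference in mean association of X and Y, scaled by the
    standard deviation of associations over all target words."""
    s_x = [association(x, attrs_a, attrs_b) for x in targets_x]
    s_y = [association(y, attrs_a, attrs_b) for y in targets_y]
    # Sample standard deviation (ddof=1) is an assumption of this sketch.
    return (mean(s_x) - mean(s_y)) / stdev(s_x + s_y)


# Toy embeddings (hypothetical): X lies near attribute A, Y near attribute B.
X = [[1.0, 0.1], [0.9, 0.2]]
Y = [[0.1, 1.0], [0.2, 0.9]]
A = [[1.0, 0.0]]
B = [[0.0, 1.0]]
effect = weat_effect_size(X, Y, A, B)  # positive: X is closer to A than Y is
```

A positive effect size indicates that the target words in `X` are more strongly associated with attribute set `A` than those in `Y` are; swapping `X` and `Y` flips the sign.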
