Autumn Toney

A Large-Scale, Automated Study of Language Surrounding Artificial Intelligence

Feb 24, 2021

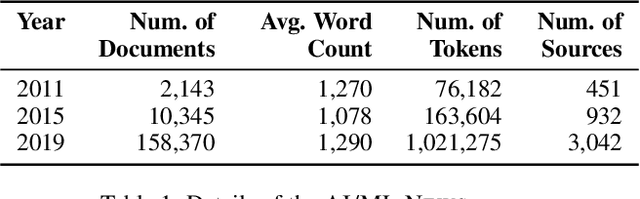

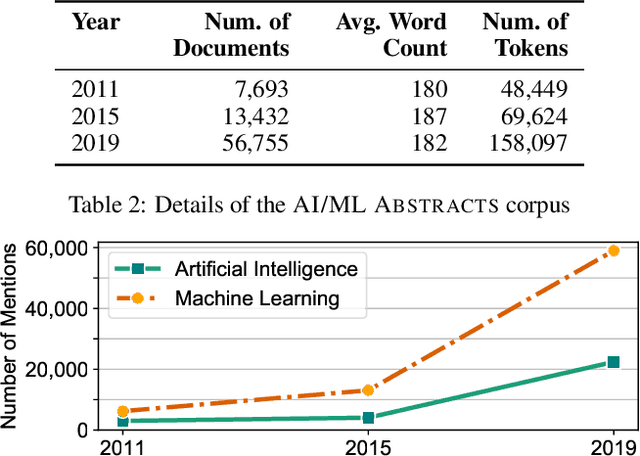

Abstract: This work presents a large-scale analysis of artificial intelligence (AI) and machine learning (ML) references within news articles and scientific publications between 2011 and 2019. We implement word association measurements that automatically identify shifts in language co-occurring with AI/ML and quantify the strength of these word associations. Our results highlight the evolution of perceptions and definitions around AI/ML and detect emerging application areas, models, and systems (e.g., blockchain and cybersecurity). Recent small-scale, manual studies have explored AI/ML discourse within the general public, the policymaker community, and the researcher community, but they are limited in their scalability and longevity. Our methods provide new views into public perceptions and subject-area expert discussions of AI/ML and greatly exceed the explanatory power of prior work.

ValNorm: A New Word Embedding Intrinsic Evaluation Method Reveals Valence Biases are Consistent Across Languages and Over Decades

Jun 18, 2020

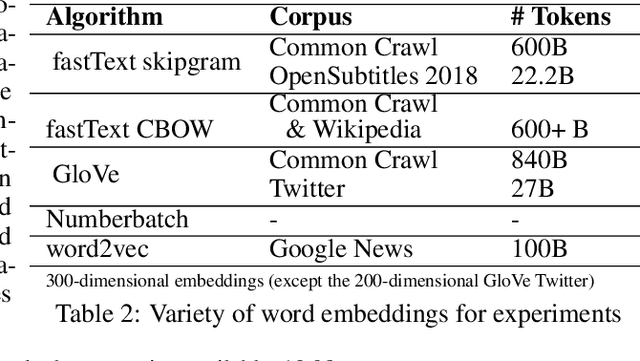

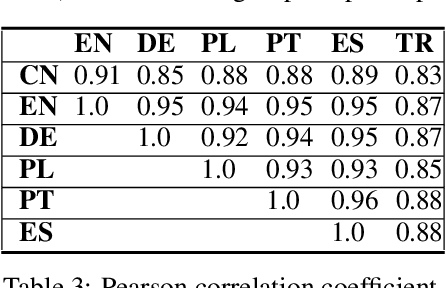

Abstract: Word embeddings learn implicit biases from linguistic regularities captured by word co-occurrence information. As a result, statistical methods can detect and quantify social biases, as well as widely shared associations, absorbed from the corpus the word embeddings are trained on. By extending methods that quantify human-like biases in word embeddings, we introduce ValNorm, a new word embedding intrinsic evaluation task and the first unsupervised method that estimates the affective meaning of valence in words with high accuracy. The correlation between ValNorm scores and human valence scores for 399 words, collected to establish pleasantness norms in English, is r = 0.88. These 399 words, obtained from social psychology literature, are used to measure biases that are non-discriminatory among social groups. We hypothesize that the valence associations for these words are widely shared across languages and consistent over time. We estimate valence associations of these words using word embeddings from six languages representing various language structures and from historical text covering 200 years. Our method achieves consistently high accuracy, suggesting that the valence associations for these words are widely shared. In contrast, we measure gender stereotypes using the same set of word embeddings and find that social biases vary across languages. Our results signal that valence associations of this word set represent widely shared associations and consequently an intrinsic quality of words.
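
The scoring idea in this abstract can be illustrated with a minimal sketch. It assumes that a word's valence score is a WEAT-style effect size computed for a single word against pleasant and unpleasant attribute sets, and that evaluation is a Pearson correlation against human ratings; the abstract does not spell out the exact formula, so this is an assumption. The 2-D vectors, the word list, and the human ratings below are hypothetical toy values, not data from the paper.

```python
import math
from statistics import mean, stdev

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def valence_effect_size(w, pleasant, unpleasant):
    """Single-word association effect size (assumed form, not confirmed by the abstract):
    difference of mean similarities, scaled by the sample std over all attribute words."""
    sims_p = [cosine(w, a) for a in pleasant]
    sims_u = [cosine(w, b) for b in unpleasant]
    return (mean(sims_p) - mean(sims_u)) / stdev(sims_p + sims_u)

def pearson_r(x, y):
    """Pearson correlation coefficient, written out to avoid version-specific helpers."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical toy embeddings and ratings, for illustration only.
pleasant = [[1.0, 0.1], [0.9, 0.2]]
unpleasant = [[-1.0, 0.1], [-0.9, 0.3]]
words = {"joy": [0.8, 0.3], "table": [0.0, 1.0], "grief": [-0.7, 0.4]}
human_valence = {"joy": 8.2, "table": 5.2, "grief": 1.9}

scores = [valence_effect_size(vec, pleasant, unpleasant) for vec in words.values()]
r = pearson_r(scores, [human_valence[w] for w in words])
print({w: round(s, 2) for w, s in zip(words, scores)}, round(r, 2))
```

With real embeddings, the same two functions would be applied to each word in the human-rated vocabulary, and the resulting correlation would serve as the intrinsic evaluation score.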

Pro-Russian Biases in Anti-Chinese Tweets about the Novel Coronavirus

Apr 18, 2020

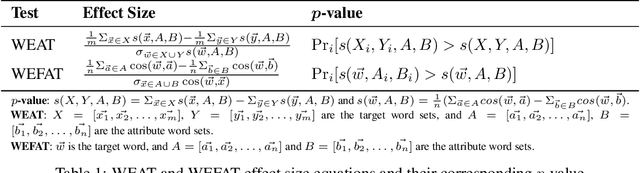

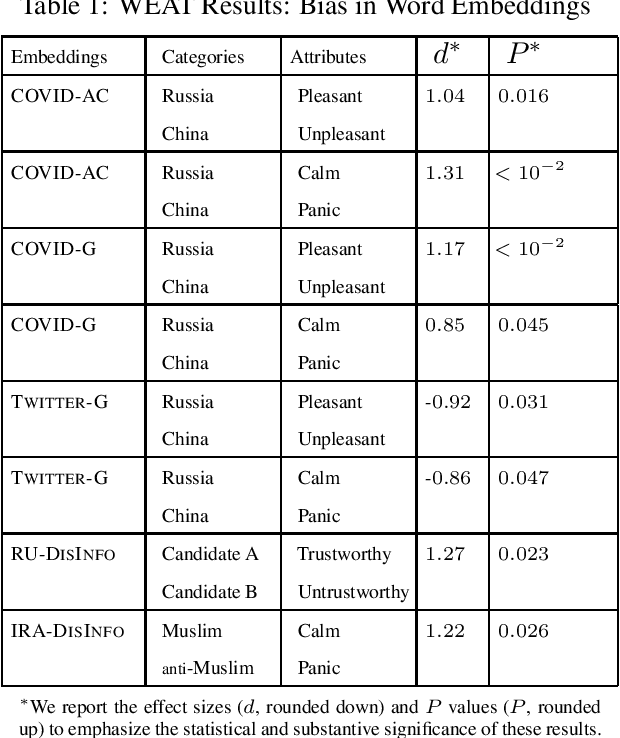

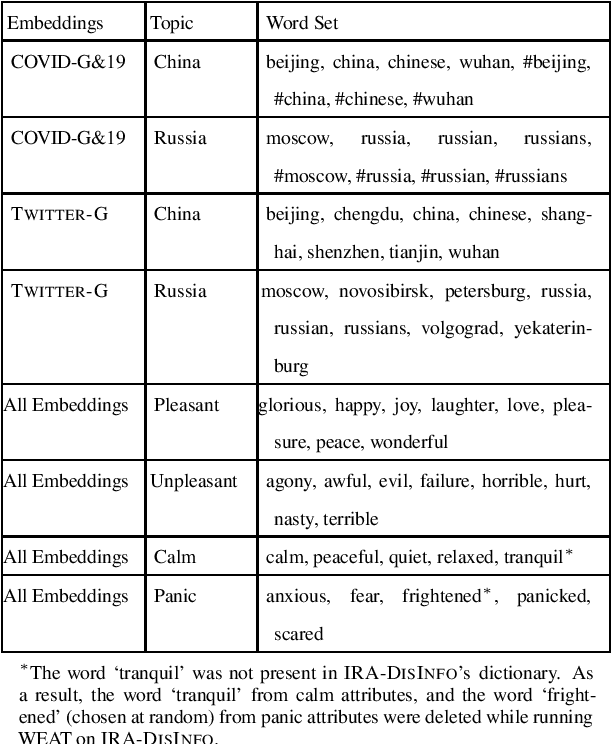

Abstract: The recent COVID-19 pandemic, which was first detected in Wuhan, China, has been linked to increased anti-Chinese sentiment in the United States. Recently, Broniatowski et al. found that foreign powers, and especially Russia, were implicated in information operations using public health crises to promote discord -- including racial conflict -- in American society (Broniatowski, 2018). This brief considers the problem of using artificial intelligence to automatically detect changes in overall attitudes that may be associated with emerging information operations. Accurate analysis of these emerging topics usually requires laborious, manual analysis by experts to annotate millions of tweets to identify biases in new topics. We introduce extensions of the Word Embedding Association Test from Caliskan et al. to a new domain (Caliskan, 2017). This practical and unsupervised method is applied to quantify biases being promoted in information operations. Analyzing historical information operations from Russia's interference in the 2016 U.S. presidential elections, we quantify biased attitudes toward presidential candidates and sentiment toward Muslim groups. We next apply this method to a corpus of tweets containing anti-Chinese hashtags. We find that roughly 1% of tweets in our corpus reference Russian-funded news sources and use anti-Chinese hashtags. Beyond the expected anti-Chinese attitudes, we find that this corpus as a whole contains pro-Russian attitudes, which are not present in a control Twitter corpus containing general tweets. Additionally, 4% of the users in this corpus were suspended within a week. These findings may indicate the presence of abusive account activity associated with rapid changes in attitudes around the COVID-19 public health crisis, suggesting potential information operations.
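
For readers unfamiliar with the Word Embedding Association Test mentioned above, here is a minimal sketch of the standard two-target test from Caliskan et al. (2017): an effect size plus an exact one-sided permutation p-value. It does not reproduce the extensions this abstract introduces, which are not described here. The target and attribute vectors are hypothetical 2-D toy values; a real analysis would use embeddings trained on the tweet corpus.

```python
import math
from itertools import combinations
from statistics import mean, stdev

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def association(w, A, B):
    """s(w, A, B): mean similarity of w to attribute set A minus mean similarity to B."""
    return mean(cosine(w, a) for a in A) - mean(cosine(w, b) for b in B)

def weat_effect_size(X, Y, A, B):
    """Difference of mean target associations, scaled by the sample std over X and Y."""
    sx = [association(x, A, B) for x in X]
    sy = [association(y, A, B) for y in Y]
    return (mean(sx) - mean(sy)) / stdev(sx + sy)

def weat_p_value(X, Y, A, B):
    """Share of equal-size re-partitions of the pooled targets whose test statistic
    is at least the observed one (exact enumeration, so small target sets only)."""
    assoc = [association(w, A, B) for w in X + Y]
    n, total = len(X), sum(assoc)
    observed = 2 * sum(assoc[:n]) - total
    hits = count = 0
    for subset in combinations(range(len(assoc)), n):
        stat = 2 * sum(assoc[i] for i in subset) - total
        count += 1
        if stat >= observed - 1e-12:  # tolerance guards against float round-off
            hits += 1
    return hits / count

# Hypothetical toy vectors, for illustration only.
A = [[1.0, 0.0], [0.9, 0.1]]        # e.g. pleasant attribute words
B = [[-1.0, 0.0], [-0.9, 0.1]]      # e.g. unpleasant attribute words
X = [[0.8, 0.2], [0.7, 0.3], [0.9, 0.1]]     # first target concept
Y = [[-0.8, 0.2], [-0.7, 0.3], [-0.9, 0.1]]  # second target concept

effect = weat_effect_size(X, Y, A, B)
p = weat_p_value(X, Y, A, B)
print(round(effect, 2), p)
```

Exact enumeration grows combinatorially with the number of target words, so larger sets would typically rely on random sampling of partitions instead.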
