Danwei Li

AdaTT: Adaptive Task-to-Task Fusion Network for Multitask Learning in Recommendations

Apr 11, 2023

Abstract: Multi-task learning (MTL) aims to enhance the performance and efficiency of machine learning models by training them on multiple tasks simultaneously. However, MTL research faces two challenges: 1) modeling the relationships between tasks to effectively share knowledge between them, and 2) jointly learning task-specific and shared knowledge. In this paper, we present a novel model, Adaptive Task-to-Task Fusion Network (AdaTT), to address both challenges. AdaTT is a deep fusion network built with task-specific and optional shared fusion units at multiple levels. By leveraging a residual mechanism and a gating mechanism for task-to-task fusion, these units adaptively learn shared knowledge and task-specific knowledge. To evaluate the performance of AdaTT, we conduct experiments on a public benchmark and an industrial recommendation dataset using various task groups. Results demonstrate that AdaTT can significantly outperform existing state-of-the-art baselines.
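As a rough illustration of what gated task-to-task fusion with a residual connection can look like, here is a minimal sketch. It is not the AdaTT architecture itself: the function names, the fixed `gate_logits` (a real model would compute them from the input with a learned layer), and the single fusion level are all assumptions made for this example.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(task_outputs, gate_logits):
    """Hypothetical single fusion level.

    task_outputs: one feature vector per task (all the same length).
    gate_logits:  gate_logits[t][s] scores how much task t draws from task s.
                  Fixed here for illustration; assumed to be learned in practice.
    """
    fused = []
    for t, own in enumerate(task_outputs):
        weights = softmax(gate_logits[t])
        # Gated mixture of every task's features, as seen by task t.
        mixed = [
            sum(w * src[i] for w, src in zip(weights, task_outputs))
            for i in range(len(own))
        ]
        # Residual connection: keep the task's own features alongside the mixture.
        fused.append([o + m for o, m in zip(own, mixed)])
    return fused


outputs = [[1.0, 0.0], [0.0, 1.0]]
logits = [[0.0, 0.0], [0.0, 0.0]]  # equal logits give uniform gate weights
result = fuse(outputs, logits)
```

With uniform gates, each task receives the average of all task features added to its own features, so the residual term keeps task-specific information even when the gate shares heavily.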

Efficient Penetration Depth Computation between Rigid Models using Contact Space Propagation Sampling

Nov 13, 2015

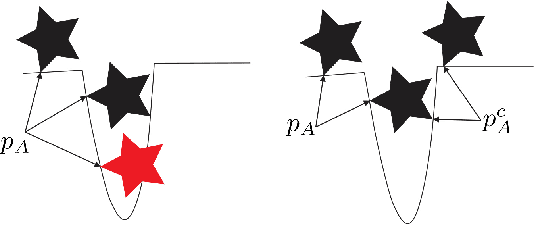

Abstract: We present a novel method to compute the approximate global penetration depth (PD) between two non-convex geometric models. Our approach consists of two phases: offline precomputation and run-time queries. In the first phase, our formulation uses a novel sampling algorithm to precompute an approximation of the high-dimensional contact space between the pair of models. Compared with prior random sampling algorithms for contact space approximation, our propagation sampling considerably speeds up the precomputation and yields a high-quality approximation. At run-time, we perform a nearest-neighbor query and local projection to efficiently compute the translational or generalized PD. We demonstrate the performance of our approach on complex 3D benchmarks with tens or hundreds of thousands of triangles, and we observe significant improvement over previous methods in terms of accuracy, with a modest improvement in the run-time performance.
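The run-time query described above can be illustrated with a toy sketch, assuming the contact space has already been sampled offline. This is not the paper's algorithm: the function name is hypothetical, a brute-force scan stands in for a real nearest-neighbor structure, the local projection step is omitted, and the samples come from a trivially convex case (two unit spheres, whose translational contact space is the set of relative positions at distance 2).

```python
import math


def approx_translational_pd(query, contact_samples):
    """Approximate translational PD as the distance from the query
    configuration to its nearest precomputed contact-space sample.

    query:           relative translation of one model with respect to the other.
    contact_samples: precomputed configurations where the models just touch.
    """
    nearest = min(contact_samples, key=lambda s: math.dist(query, s))
    return math.dist(query, nearest), nearest


# Toy contact-space samples for two unit spheres (assumed for illustration):
# relative positions at distance 2 along each coordinate axis.
samples = [
    (2.0, 0.0, 0.0), (-2.0, 0.0, 0.0),
    (0.0, 2.0, 0.0), (0.0, -2.0, 0.0),
    (0.0, 0.0, 2.0), (0.0, 0.0, -2.0),
]

# Sphere centers 0.5 apart along x: the spheres overlap.
depth, separating_config = approx_translational_pd((0.5, 0.0, 0.0), samples)
```

The accuracy of such an estimate depends on how densely the contact space is sampled, which is why the quality of the offline sampling phase matters.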
