Daniel Flores-Araiza

Causal Scoring Medical Image Explanations: A Case Study On Ex-vivo Kidney Stone Images

Sep 05, 2023

Abstract: On the promise that if human users know the cause of an output, it would enable them to grasp the process responsible for the output, and hence provide understanding, many explainable methods have been proposed to indicate the cause for the output of a model based on its input. Nonetheless, little has been reported on quantitative measurements of such causal relationships between the inputs, the explanations, and the outputs of a model, leaving the assessment to the user, independent of their level of expertise in the subject. To address this situation, we explore a technique for measuring the causal relationship between the features from the area of the object of interest in the images of a class and the output of a classifier. Our experiments indicate an improvement in the measured causal relationships when the area of the object of interest per class is indicated by a mask from an explainable method rather than by human annotators. Hence the chosen name of Causal Explanation Score (CaES).

FAU-Net: An Attention U-Net Extension with Feature Pyramid Attention for Prostate Cancer Segmentation

Sep 04, 2023

Abstract: This contribution presents a deep learning method for the segmentation of prostate zones in MRI images based on U-Net using additive and feature pyramid attention modules, which can improve the workflow of prostate cancer detection and diagnosis. The proposed model is compared to seven different U-Net-based architectures. The automatic segmentation performance of each model for the central zone (CZ), peripheral zone (PZ), transition zone (TZ) and tumor was evaluated using the Dice Score (DSC) and the Intersection over Union (IoU) metrics. The proposed alternative achieved a mean DSC of 84.15% and IoU of 76.9% in the test set, outperforming most of the models studied in this work except for the R2U-Net and Attention R2U-Net architectures.
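The two metrics named in this abstract can be illustrated with a minimal sketch. It assumes binary masks given as flat lists of 0/1 values; the function name `dice_and_iou` and the convention of returning 1.0 for two empty masks are illustrative choices, not details taken from the paper.

```python
def dice_and_iou(pred, target):
    """Return (Dice, IoU) for two equally sized binary masks (flat lists of 0/1)."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    # Pixels marked as foreground in both masks.
    intersection = sum(p & t for p, t in zip(pred, target))
    pred_area = sum(pred)
    target_area = sum(target)
    union = pred_area + target_area - intersection
    if union == 0:
        # Both masks are empty; treating this as perfect agreement is an assumption.
        return 1.0, 1.0
    dice = 2 * intersection / (pred_area + target_area)
    iou = intersection / union
    return dice, iou


# Toy example: two 6-pixel masks that overlap on 2 pixels.
pred = [1, 1, 1, 0, 0, 0]
target = [0, 1, 1, 1, 0, 0]
dice, iou = dice_and_iou(pred, target)
print(f"Dice = {dice:.3f}, IoU = {iou:.3f}")
```

For a single pair of masks the two scores are tied by Dice = 2·IoU / (1 + IoU); that identity need not hold for scores averaged over several classes or images, such as the mean values reported above.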

Assessing the performance of deep learning-based models for prostate cancer segmentation using uncertainty scores

Aug 09, 2023

Abstract: This study focuses on comparing deep learning methods for the segmentation and quantification of uncertainty in prostate segmentation from MRI images. The aim is to improve the workflow of prostate cancer detection and diagnosis. Seven different U-Net-based architectures, augmented with Monte-Carlo dropout, are evaluated for automatic segmentation of the central zone, peripheral zone, transition zone, and tumor, with uncertainty estimation. The top-performing model in this study is the Attention R2U-Net, achieving a mean Intersection over Union (IoU) of 76.3% and Dice Similarity Coefficient (DSC) of 85% for segmenting all zones. Additionally, Attention R2U-Net exhibits the lowest uncertainty values, particularly in the boundaries of the transition zone and tumor, when compared to the other models.
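The general idea behind Monte-Carlo dropout can be sketched as follows: dropout stays active at inference time, the same input is passed through the model several times, and the spread of the outputs serves as an uncertainty estimate. The toy linear unit, the function names, and all parameter values below are hypothetical stand-ins and bear no relation to the U-Net architectures evaluated in the study.

```python
import random
import statistics


def stochastic_forward(x, weights, drop_prob, rng):
    """One forward pass of a toy linear unit with dropout kept active on the inputs."""
    keep = 1.0 - drop_prob
    total = 0.0
    for xi, wi in zip(x, weights):
        if rng.random() < keep:
            # Inverted-dropout scaling keeps the expected output unchanged.
            total += wi * xi / keep
    return total


def mc_dropout_predict(x, weights, drop_prob=0.5, passes=200, seed=0):
    """Return (mean prediction, standard deviation) over repeated stochastic passes."""
    rng = random.Random(seed)
    samples = [stochastic_forward(x, weights, drop_prob, rng) for _ in range(passes)]
    return statistics.mean(samples), statistics.pstdev(samples)


mean, spread = mc_dropout_predict([1.0, 2.0, 3.0], [0.5, -0.25, 0.1])
print(f"prediction = {mean:.3f}, uncertainty (std) = {spread:.3f}")
```

In a segmentation setting the same procedure would be applied per pixel, which yields an uncertainty map alongside the predicted mask.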

A metric learning approach for endoscopic kidney stone identification

Jul 13, 2023

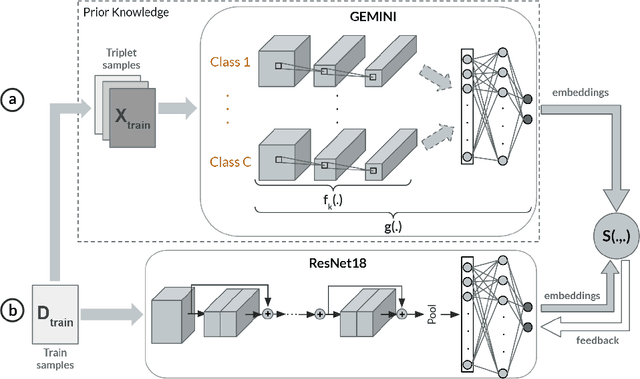

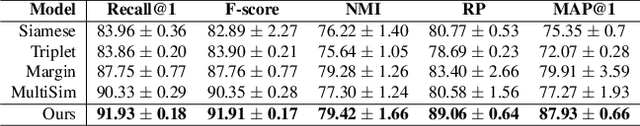

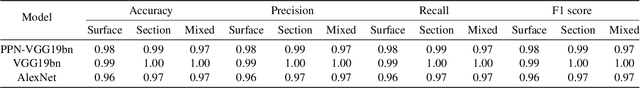

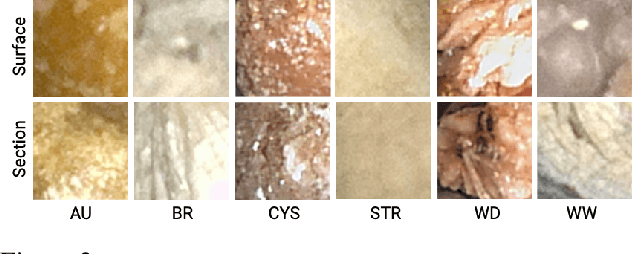

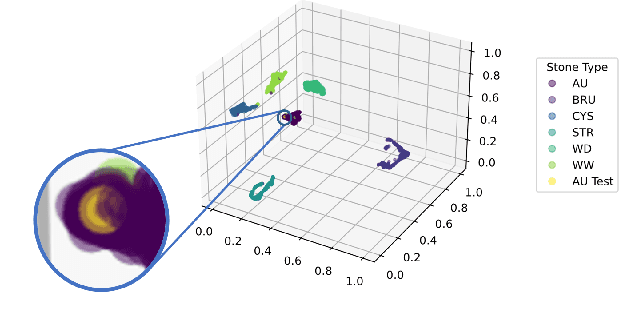

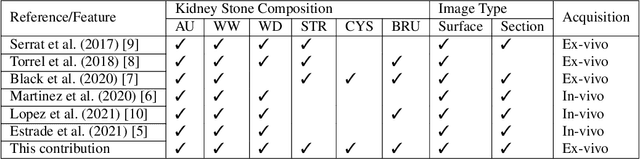

Abstract: Several Deep Learning (DL) methods have recently been proposed for the automated identification of kidney stones during a ureteroscopy to enable rapid therapeutic decisions. Even if these DL approaches have led to promising results, they are mainly appropriate for kidney stone types for which numerous labelled data are available. However, only a few labelled images are available for some rare kidney stone types. This contribution exploits Deep Metric Learning (DML) methods i) to handle such classes with few samples, ii) to generalize well to out-of-distribution samples, and iii) to cope better with new classes which are added to the database. The proposed Guided Deep Metric Learning approach is based on a novel architecture which was designed to learn data representations in an improved way. The solution was inspired by Few-Shot Learning (FSL) and makes use of a teacher-student approach. The teacher model (GEMINI) generates a reduced hypothesis space based on prior knowledge from the labeled data, and this space is used as a guide for a student model (i.e., ResNet50) through a Knowledge Distillation scheme. Extensive tests were first performed on two datasets separately used for the recognition, namely a set of images acquired for the surfaces of the kidney stone fragments, and a set of images of the fragment sections. The proposed DML approach improved the identification accuracy by 10% and 12% in comparison to DL methods and other DML approaches, respectively. Moreover, model embeddings from the two dataset types were merged in an organized way through a multi-view scheme to simultaneously exploit the information of surface and section fragments. Tests with the resulting mixed model improve the identification accuracy by at least 3% and up to 30% with respect to DL models and shallow machine learning methods, respectively.

SuSana Distancia is all you need: Enforcing class separability in metric learning via two novel distance-based loss functions for few-shot image classification

May 18, 2023

Abstract: Few-shot learning is a challenging area of research that aims to learn new concepts with only a few labeled samples of data. Recent works based on metric-learning approaches leverage the meta-learning approach, which is encompassed by episodic tasks that make use of a support (training) set and a query (test) set with the objective of learning a similarity comparison metric between those sets. Due to the lack of data, the learning process of the embedding network becomes an important part of the few-shot task. Previous works have addressed this problem using metric learning approaches, but the properties of the underlying latent space and the separability of the different classes on it were not entirely enforced. In this work, we propose two different loss functions which consider the importance of the embedding vectors by looking at the intra-class and inter-class distance between the few data. The first loss function is the Proto-Triplet Loss, which is based on the original triplet loss with the modifications needed to work better in few-shot scenarios. The second loss function, which we dub ICNN loss, is based on an inter- and intra-class nearest neighbors score, which helps us to assess the quality of the embeddings obtained from the trained network. Our results, obtained from an extensive experimental setup, show a significant improvement in accuracy on the miniImageNet benchmark compared to other metric-based few-shot learning methods by a margin of 2%, demonstrating the capability of these loss functions to allow the network to generalize better to previously unseen classes. In our experiments, we demonstrate competitive generalization capabilities to other domains, such as the Caltech CUB, Dogs and Cars datasets, compared with the state of the art.
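For orientation, the original triplet loss that this abstract builds on can be sketched as below. The abstract does not define the Proto-Triplet Loss itself, so using class-mean prototypes (in the sense of prototypical networks) as the positive and negative is purely an illustrative assumption here, as are all function names and the toy data.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def class_prototype(embeddings):
    """Mean embedding of a class (assumed prototype definition)."""
    dim = len(embeddings[0])
    return [sum(e[i] for e in embeddings) / len(embeddings) for i in range(dim)]


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss: the positive must be closer than the negative by `margin`."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Toy 2-D support embeddings for two classes.
support_a = [[0.0, 0.0], [0.0, 2.0]]
support_b = [[4.0, 0.0], [4.0, 2.0]]
proto_a = class_prototype(support_a)
proto_b = class_prototype(support_b)

query = [1.0, 1.0]  # a query sample that lies near class A
loss_if_a = triplet_loss(query, proto_a, proto_b)  # query treated as class A
loss_if_b = triplet_loss(query, proto_b, proto_a)  # query treated as class B
print(loss_if_a, loss_if_b)
```

The loss vanishes when the query is already closer to its own prototype than to the other one by at least the margin, and grows otherwise.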

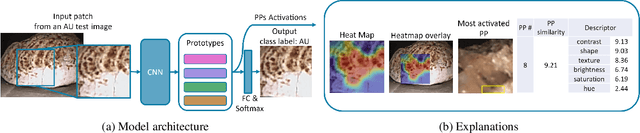

Deep Prototypical-Parts Ease Morphological Kidney Stone Identification and are Competitively Robust to Photometric Perturbations

Apr 08, 2023

Abstract: Identifying the type of kidney stones can allow urologists to determine their cause of formation, improving the prescription of appropriate treatments to diminish future relapses. Currently, the associated ex-vivo diagnosis (known as Morpho-constitutional Analysis, MCA) is time-consuming, expensive and requires a great deal of experience, as it requires a visual analysis component that is highly operator dependent. Recently, machine learning methods have been developed for in-vivo endoscopic stone recognition. Deep Learning (DL) based methods outperform non-DL methods in terms of accuracy but lack explainability. Despite this trade-off, when it comes to making high-stakes decisions, it's important to prioritize understandable Computer-Aided Diagnosis (CADx) that suggests a course of action based on reasonable evidence, rather than a model prescribing a course of action. In this proposal, we learn Prototypical Parts (PPs) per kidney stone subtype, which are used by the DL model to generate an output classification. Using PPs in the classification task enables case-based reasoning explanations for such output, thus making the model interpretable. In addition, we modify global visual characteristics to describe their relevance to the PPs and the sensitivity of our model's performance. With this, we provide explanations with additional information at the sample, class and model levels in contrast to previous works. Although our implementation's average accuracy is lower than state-of-the-art (SOTA) non-interpretable DL models by 1.5%, our models perform 2.8% better on perturbed images with a lower standard deviation, without adversarial training. Thus, learning PPs has the potential to create more robust DL models.

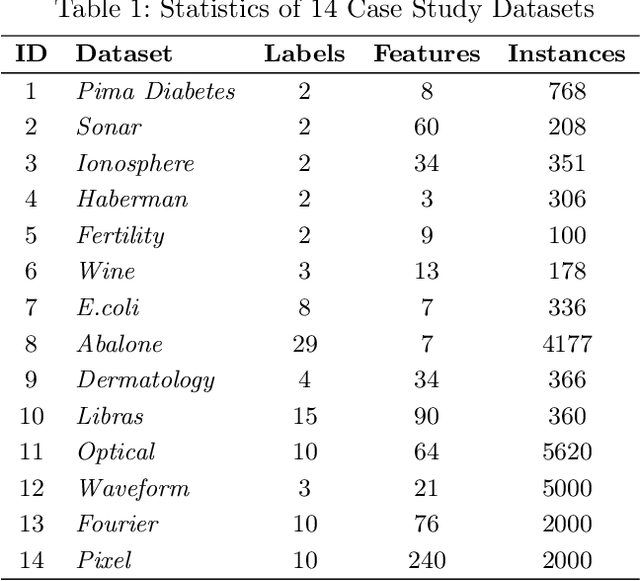

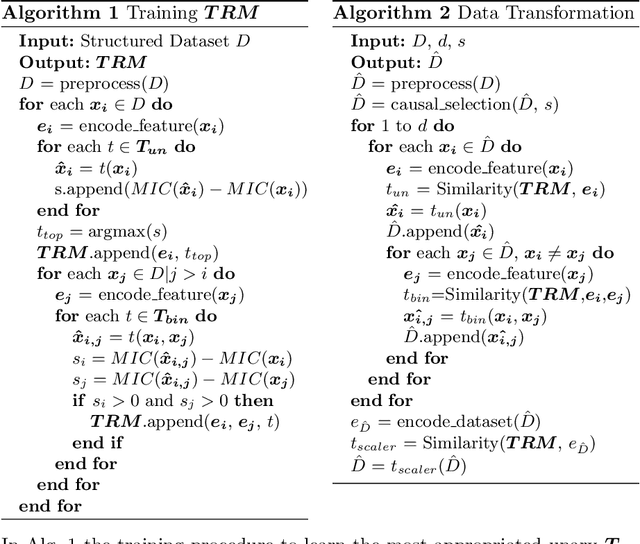

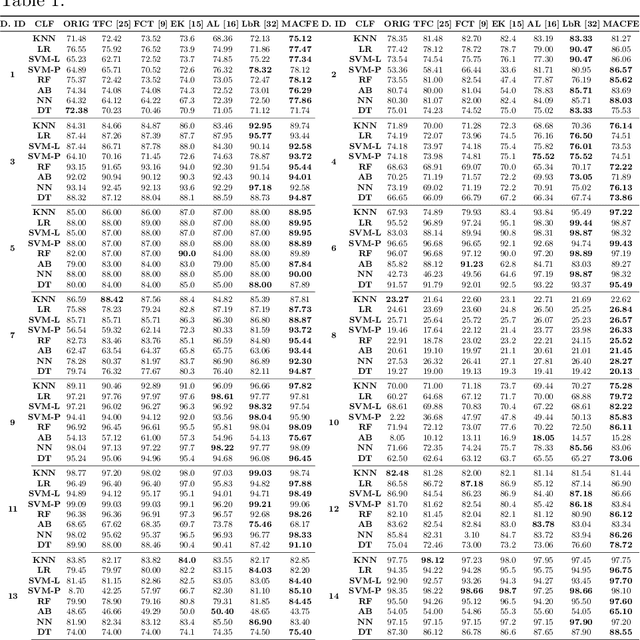

MACFE: A Meta-learning and Causality Based Feature Engineering Framework

Jul 08, 2022

Abstract: Feature engineering has become one of the most important steps to improve model prediction performance, and to produce quality datasets. However, this process requires non-trivial domain knowledge and is time-consuming. Therefore, automating this process has become an active area of research and of interest in industrial applications. In this paper, a novel method, called Meta-learning and Causality Based Feature Engineering (MACFE), is proposed; our method is based on the use of meta-learning, feature distribution encoding, and causality feature selection. In MACFE, meta-learning is used to find the best transformations, then the search is accelerated by pre-selecting "original" features given their causal relevance. Experimental evaluations on popular classification datasets show that MACFE can improve the prediction performance across eight classifiers, outperforms the current state-of-the-art methods on average by at least 6.54%, and obtains an improvement of 2.71% over the best previous works.

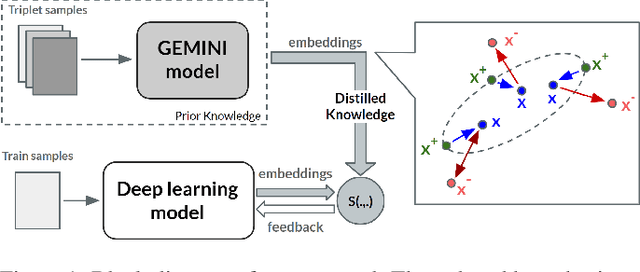

Guided Deep Metric Learning

Jun 04, 2022

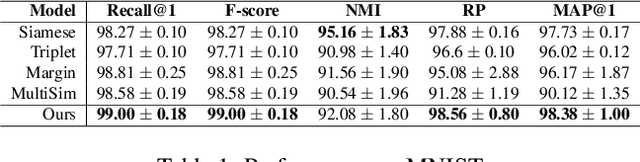

Abstract: Deep Metric Learning (DML) methods have been proven relevant for visual similarity learning. However, they sometimes lack generalization properties because they are often trained using an inappropriate sample selection strategy or due to the difficulty of the dataset caused by a distributional shift in the data. These represent a significant drawback when attempting to learn the underlying data manifold. Therefore, there is a pressing need to develop better ways of obtaining generalization and representation of the underlying manifold. In this paper, we propose a novel approach to DML that we call Guided Deep Metric Learning, a novel architecture oriented to learning more compact clusters, improving generalization under distributional shifts in DML. This novel architecture consists of two independent models: a multi-branch master model, inspired from a Few-Shot Learning (FSL) perspective, generates a reduced hypothesis space based on prior knowledge from labeled data, which guides or regularizes the decision boundary of a student model during training under an offline knowledge distillation scheme. Experiments have shown that the proposed method is capable of better manifold generalization and representation, with up to 40% improvement (Recall@1, CIFAR10), using guidelines suggested by Musgrave et al. to perform a fairer and more realistic comparison, which is currently absent in the literature.
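The Recall@1 metric quoted in this abstract can be illustrated with a short sketch: each embedding is used as a query, its nearest neighbour among the remaining embeddings is retrieved, and the query counts as a hit when the neighbour shares its label. The function name, the use of Euclidean distance, and the toy data are assumptions made for illustration; the paper's evaluation protocol may differ.

```python
import math


def recall_at_1(embeddings, labels):
    """Fraction of samples whose nearest other sample has the same label."""
    if len(embeddings) < 2:
        raise ValueError("need at least two embeddings")
    hits = 0
    for i, query in enumerate(embeddings):
        best_j, best_d = None, math.inf
        for j, other in enumerate(embeddings):
            if j == i:
                continue  # a sample may not retrieve itself
            d = math.dist(query, other)
            if d < best_d:
                best_j, best_d = j, d
        hits += labels[best_j] == labels[i]
    return hits / len(embeddings)


# Toy 2-D embeddings: two compact clusters plus one "b" sample stranded near cluster "a".
embeddings = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0], [2.0, 1.0]]
labels = ["a", "a", "b", "b", "b"]
score = recall_at_1(embeddings, labels)
print(f"Recall@1 = {score:.2f}")
```

More compact, better separated clusters raise this score, which is why it is a common yardstick for metric learning methods.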

Interpretable Deep Learning Classifier by Detection of Prototypical Parts on Kidney Stones Images

Jun 02, 2022

Abstract: Identifying the type of kidney stones can allow urologists to determine their formation cause, improving the early prescription of appropriate treatments to diminish future relapses. However, currently, the associated ex-vivo diagnosis (known as morpho-constitutional analysis, MCA) is time-consuming, expensive, and requires a great deal of experience, as it requires a visual analysis component that is highly operator dependent. Recently, machine learning methods have been developed for in-vivo endoscopic stone recognition. Shallow methods have been demonstrated to be reliable and interpretable but exhibit low accuracy, while deep learning-based methods yield high accuracy but are not explainable. However, high-stakes decisions require understandable computer-aided diagnosis (CAD) to suggest a course of action based on reasonable evidence, rather than merely prescribe one. Herein, we investigate means for learning part-prototypes (PPs) that enable interpretable models. Our proposal suggests a classification for a kidney stone patch image and provides explanations in a way similar to those used in the MCA method.

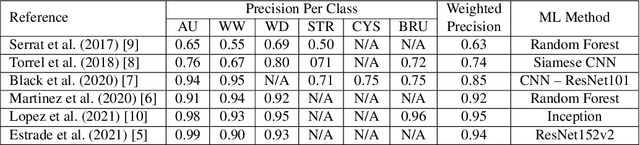

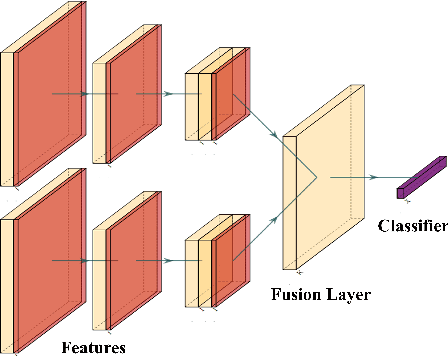

Comparing feature fusion strategies for Deep Learning-based kidney stone identification

May 31, 2022

Abstract: This contribution presents a deep-learning method for extracting and fusing image information acquired from different viewpoints with the aim to produce more discriminant object features. Our approach was specifically designed to mimic the morpho-constitutional analysis used by urologists to visually classify kidney stones by inspecting the sections and surfaces of their fragments. Deep feature fusion strategies improved the results of single view extraction backbone models by more than 10% in terms of precision of the kidney stones classification.
