Christian Mata

FAU-Net: An Attention U-Net Extension with Feature Pyramid Attention for Prostate Cancer Segmentation

Sep 04, 2023

Abstract: This contribution presents a deep learning method for the segmentation of prostate zones in MRI images based on U-Net using additive and feature pyramid attention modules, which can improve the workflow of prostate cancer detection and diagnosis. The proposed model is compared to seven different U-Net-based architectures. The automatic segmentation performance of each model on the central zone (CZ), peripheral zone (PZ), transition zone (TZ), and tumor was evaluated using the Dice Score (DSC) and Intersection over Union (IoU) metrics. The proposed model achieved a mean DSC of 84.15% and IoU of 76.9% on the test set, outperforming most of the models studied in this work, except for the R2U-Net and Attention R2U-Net architectures.
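For readers unfamiliar with the two metrics named in this abstract, the following is a minimal sketch of how DSC and IoU can be computed for a single binary mask. It is not the authors' evaluation code: the function name `dice_and_iou`, the smoothing term `eps`, and the toy arrays are assumptions made for illustration.

```python
import numpy as np

def dice_and_iou(pred, target, eps=1e-7):
    """Return (Dice, IoU) for two binary masks of the same shape.

    eps is a small smoothing term (an assumption of this sketch) that
    avoids division by zero when both masks are empty.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    dice = (2.0 * intersection + eps) / (pred.sum() + target.sum() + eps)
    iou = (intersection + eps) / (union + eps)
    return float(dice), float(iou)

# Toy example: 2 overlapping pixels, 3 pixels in each mask, 4 in the union.
pred = np.array([[1, 1, 0],
                 [0, 1, 0]])
target = np.array([[1, 0, 0],
                   [0, 1, 1]])
dice, iou = dice_and_iou(pred, target)
print(round(dice, 4), round(iou, 4))
```

For a single mask the two metrics are linked by DSC = 2·IoU / (1 + IoU), so they rank individual predictions identically. That identity does not generally hold for values averaged over several classes or images, which is one reason papers report both.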

Assessing the performance of deep learning-based models for prostate cancer segmentation using uncertainty scores

Aug 09, 2023

Abstract: This study compares deep learning methods for prostate segmentation from MRI images, together with the quantification of their uncertainty. The aim is to improve the workflow of prostate cancer detection and diagnosis. Seven different U-Net-based architectures, augmented with Monte-Carlo dropout, are evaluated for automatic segmentation of the central zone, peripheral zone, transition zone, and tumor, with uncertainty estimation. The top-performing model in this study is the Attention R2U-Net, achieving a mean Intersection over Union (IoU) of 76.3% and Dice Similarity Coefficient (DSC) of 85% for segmenting all zones. Additionally, Attention R2U-Net exhibits the lowest uncertainty values, particularly at the boundaries of the transition zone and tumor, when compared to the other models.
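The abstract does not say which uncertainty score is derived from Monte-Carlo dropout, so the sketch below only illustrates the general idea: keep dropout active at inference, run several stochastic forward passes, and summarize their spread per pixel. Everything here is hypothetical, including the toy one-layer "model", the function name `mc_dropout_predict`, and the choice of predictive entropy as the score.

```python
import numpy as np

def mc_dropout_predict(features, weights, n_passes=50, drop_prob=0.5, seed=0):
    """Toy Monte-Carlo dropout for a per-pixel binary classifier.

    features: array of shape (H, W, C); weights: array of shape (C,).
    Returns the mean foreground probability and the predictive entropy,
    both of shape (H, W).
    """
    rng = np.random.default_rng(seed)
    probs = []
    for _ in range(n_passes):
        # Sample a fresh dropout mask for every pass (inverted dropout scaling).
        keep = rng.random(features.shape) >= drop_prob
        dropped = features * keep / (1.0 - drop_prob)
        logits = dropped @ weights               # (H, W, C) @ (C,) -> (H, W)
        probs.append(1.0 / (1.0 + np.exp(-logits)))
    mean_prob = np.stack(probs).mean(axis=0)

    # Binary predictive entropy of the averaged prediction (assumed score).
    eps = 1e-12
    entropy = -(mean_prob * np.log(mean_prob + eps)
                + (1.0 - mean_prob) * np.log(1.0 - mean_prob + eps))
    return mean_prob, entropy

features = np.ones((4, 4, 3))
weights = np.array([0.5, -0.25, 1.0])
mean_prob, entropy = mc_dropout_predict(features, weights)
print(mean_prob.shape, float(entropy.max()))
```

In a real segmentation network the same loop would wrap the full model with its dropout layers left in training mode. Pixels where the passes disagree, typically near region boundaries, receive higher entropy.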

Comparison of automatic prostate zones segmentation models in MRI images using U-net-like architectures

Jul 19, 2022

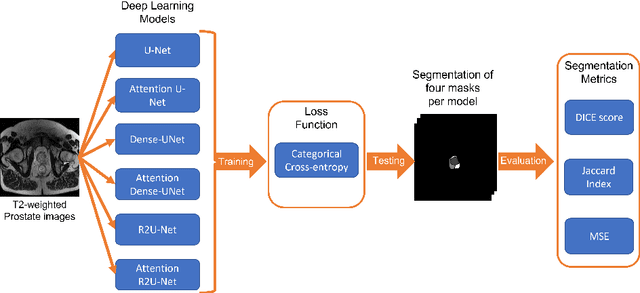

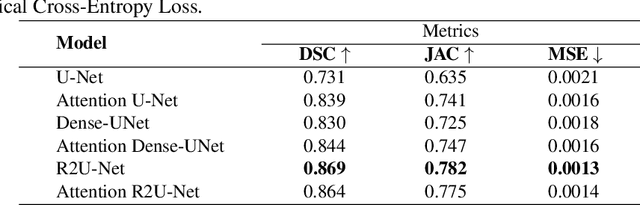

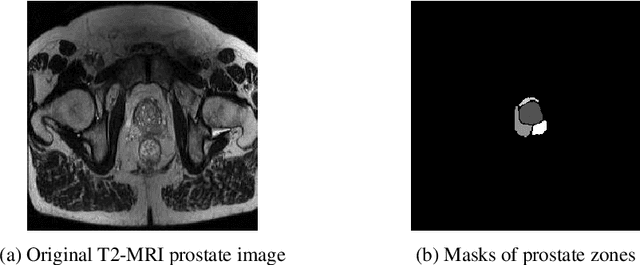

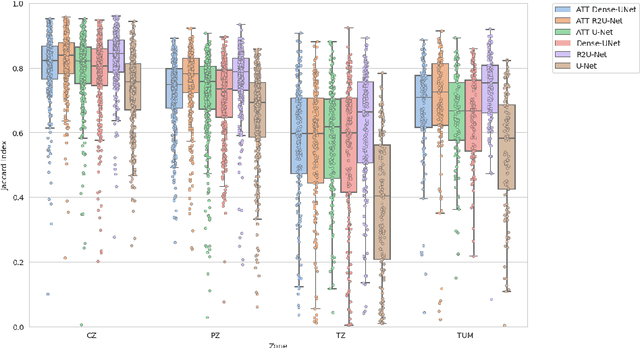

Abstract: Prostate cancer is the second-most frequently diagnosed cancer and the sixth leading cause of cancer death in males worldwide. The main problem that specialists face during the diagnosis of prostate cancer is the localization of Regions of Interest (ROI) containing tumor tissue. Currently, the segmentation of this ROI is in most cases carried out manually by expert doctors, but the procedure is plagued by low detection rates (of about 27-44%) or overdiagnosis in some patients. Therefore, several research works have tackled the challenge of automatically segmenting and extracting features of the ROI from magnetic resonance images, as this process can greatly facilitate many diagnostic and therapeutic applications. However, the lack of clear prostate boundaries, the heterogeneity inherent to the prostate tissue, and the variety of prostate shapes make this process very difficult to automate. In this work, six deep learning models were trained and analyzed with a dataset of MRI images obtained from the Centre Hospitalaire de Dijon and Universitat Politecnica de Catalunya. We carried out a comparison of multiple deep learning models (i.e. U-Net, Attention U-Net, Dense-UNet, Attention Dense-UNet, R2U-Net, and Attention R2U-Net) using the categorical cross-entropy loss function. The analysis was performed using three metrics commonly used for image segmentation: Dice score, Jaccard index, and mean squared error. The model that gave the best result when segmenting all the zones was R2U-Net, which achieved 0.869, 0.782, and 0.00013 for Dice, Jaccard, and mean squared error, respectively.

Impact of loss function in Deep Learning methods for accurate retinal vessel segmentation

Jun 01, 2022

Abstract: The retinal vessel network studied through fundus images contributes to the diagnosis of multiple diseases, not only those of the eye. The segmentation of this system may help the specialized task of analyzing these images by assisting in the quantification of morphological characteristics. Due to its relevance, several Deep Learning-based architectures have been tested for tackling this problem automatically. However, the impact of loss function selection on the segmentation of the intricate retinal blood vessel system has not been systematically evaluated. In this work, we present a comparison of the loss functions Binary Cross Entropy, Dice, Tversky, and Combo loss using the deep learning architectures (i.e. U-Net, Attention U-Net, and Nested UNet) with the DRIVE dataset. Their performance is assessed using four metrics: the AUC, the mean squared error, the Dice score, and the Hausdorff distance. The models were trained with the same number of parameters and epochs. Using Dice score and AUC, the best combination was SA-UNet with Combo loss, which had an average of 0.9442 and 0.809 respectively. The best averages of Hausdorff distance and mean squared error were obtained using the Nested U-Net with the Dice loss function, which had an average of 6.32 and 0.0241 respectively. The results showed that there is a significant difference in the selection of loss function.
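Two of the losses compared in this abstract are closely related, which a short sketch can make concrete. The code below is an illustrative NumPy version of the soft Dice and Tversky losses, not the implementation used in the paper; the function names, the smoothing term `eps`, and the sample values are assumptions. With `alpha = beta = 0.5` the Tversky loss reduces to the Dice loss, while `beta > alpha` penalizes false negatives more heavily, which can matter for thin structures such as vessels.

```python
import numpy as np

def tversky_loss(prob, target, alpha=0.5, beta=0.5, eps=1e-7):
    """Soft Tversky loss; alpha weights false positives, beta false negatives."""
    prob = np.asarray(prob, dtype=float).ravel()
    target = np.asarray(target, dtype=float).ravel()
    tp = np.sum(prob * target)            # soft true positives
    fp = np.sum(prob * (1.0 - target))    # soft false positives
    fn = np.sum((1.0 - prob) * target)    # soft false negatives
    return float(1.0 - (tp + eps) / (tp + alpha * fp + beta * fn + eps))

def dice_loss(prob, target, eps=1e-7):
    """Soft Dice loss on predicted probabilities."""
    prob = np.asarray(prob, dtype=float).ravel()
    target = np.asarray(target, dtype=float).ravel()
    intersection = np.sum(prob * target)
    return float(1.0 - (2.0 * intersection + eps)
                 / (prob.sum() + target.sum() + eps))

# Toy prediction that misses part of the foreground (more FN than FP).
prob = np.array([0.9, 0.6, 0.2, 0.1])
target = np.array([1, 1, 1, 0])

print(dice_loss(prob, target))
print(tversky_loss(prob, target, alpha=0.5, beta=0.5))  # matches Dice loss
print(tversky_loss(prob, target, alpha=0.3, beta=0.7))  # larger: FN weighted more
```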

Experimental Large-Scale Jet Flames' Geometrical Features Extraction for Risk Management Using Infrared Images and Deep Learning Segmentation Methods

Jan 20, 2022

Abstract: Jet fires are relatively small and have the least severe effects among the diverse fire accidents that can occur in industrial plants; however, they are usually involved in a process known as the domino effect, which leads to more severe events, such as explosions or the initiation of another fire, making the analysis of such fires an important part of risk analysis. This research work explores the application of deep learning models in an alternative approach that uses the semantic segmentation of jet fire flames to extract the main geometrical attributes relevant for fire risk assessments. A comparison is made between traditional image processing methods and some state-of-the-art deep learning models. It is found that the best approach is a deep learning architecture known as UNet, along with its two improvements, Attention UNet and UNet++. The models are then used to segment a group of vertical jet flames of varying pipe outlet diameters to extract their main geometrical characteristics. Attention UNet obtained the best general performance in the approximation of both height and area of the flames, while also showing a statistically significant difference between it and UNet++. UNet obtained the best overall performance for the approximation of the lift-off distances; however, there is not enough data to prove a statistically significant difference between Attention UNet and UNet++. The only instance where UNet++ outperformed the other models was in obtaining the lift-off distances of the jet flames with a 0.01275 m pipe outlet diameter. In general, the explored models show good agreement between the experimental and predicted values for relatively large turbulent propane jet flames released in sonic and subsonic regimes, making these radiation zone segmentation models a suitable approach for different jet flame risk management scenarios.
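As a rough illustration of how geometrical attributes such as height, area, and lift-off distance might be read off a segmentation mask, consider the sketch below. It is not the procedure from the paper. It assumes a vertical flame in an image whose rows increase downward, a known pixel row for the pipe outlet (`outlet_row`), and a known spatial scale (`m_per_px`); all three, along with the function name `flame_geometry`, are hypothetical.

```python
import numpy as np

def flame_geometry(mask, outlet_row, m_per_px):
    """Approximate flame height, area, and lift-off distance from a binary mask.

    mask: 2D array, nonzero where the flame was segmented.
    outlet_row: pixel row of the pipe outlet (assumed to lie below the flame).
    m_per_px: metres represented by one pixel side.
    Returns None if the mask contains no flame pixels.
    """
    mask = np.asarray(mask, dtype=bool)
    flame_rows = np.flatnonzero(mask.any(axis=1))
    if flame_rows.size == 0:
        return None
    top, base = flame_rows.min(), flame_rows.max()
    return {
        "height_m": (base - top + 1) * m_per_px,       # vertical extent of the flame
        "area_m2": mask.sum() * m_per_px ** 2,         # pixel count times pixel area
        "lift_off_m": (outlet_row - base) * m_per_px,  # gap between outlet and flame base
    }

# Toy mask: flame occupies rows 1-3 and columns 1-2 of a 6x4 image.
mask = np.zeros((6, 4), dtype=int)
mask[1:4, 1:3] = 1
print(flame_geometry(mask, outlet_row=5, m_per_px=0.5))
```

A production version would also need camera calibration and a consistent convention for measuring distances between pixel centres or edges, both of which are glossed over here.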

Comparing Machine Learning based Segmentation Models on Jet Fire Radiation Zones

Aug 02, 2021

Abstract: Risk assessment is relevant in any workplace; however, there is a degree of unpredictability when dealing with flammable or hazardous materials, so detection of fire accidents by itself may not be enough. An example of this is the impingement of jet fires, where the heat fluxes of the flame could reach nearby equipment and dramatically increase the probability of a domino effect with catastrophic results. Because of this, the characterization of such fire accidents is important from a risk management point of view. One such characterization is the segmentation of different radiation zones within the flame, so this paper presents exploratory research on several traditional computer vision and Deep Learning segmentation approaches to solve this specific problem. A data set of propane jet fires is used to train and evaluate the different approaches. Given the difference in the distribution of the zones and background of the images, different loss functions that seek to alleviate data imbalance are also explored. Additionally, different metrics are correlated to a manual ranking performed by experts to make an evaluation that closely resembles the experts' criteria. The Hausdorff Distance and Adjusted Rand Index were the metrics with the highest correlation, and the best results were obtained from the UNet architecture with a Weighted Cross-Entropy Loss. These results can be used in future research to extract more geometric information from the segmentation masks or could even be implemented on other types of fire accidents.
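To make the idea of a weighted cross-entropy loss for imbalanced classes concrete, here is a minimal NumPy sketch. The abstract does not state how the class weights were chosen, so the inverse-frequency weighting below is an assumption, as are the function names and the toy labels.

```python
import numpy as np

def inverse_frequency_weights(labels, n_classes):
    """Class weights proportional to 1 / class frequency (assumed scheme)."""
    counts = np.bincount(np.asarray(labels).ravel(), minlength=n_classes).astype(float)
    counts[counts == 0] = 1.0  # avoid division by zero for absent classes
    return counts.sum() / (n_classes * counts)

def weighted_cross_entropy(probs, labels, class_weights, eps=1e-12):
    """Weighted cross-entropy over N pixels.

    probs: (N, K) predicted class probabilities; labels: (N,) integer classes.
    The loss is normalized by the sum of the per-pixel weights.
    """
    labels = np.asarray(labels)
    picked = probs[np.arange(labels.size), labels]  # probability of the true class
    w = class_weights[labels]                       # weight of each pixel's class
    return float(np.sum(-w * np.log(picked + eps)) / np.sum(w))

# Toy data: background (class 0) dominates the rare zone (class 1).
labels = np.array([0, 0, 0, 1])
weights = inverse_frequency_weights(labels, n_classes=2)
probs = np.array([[0.9, 0.1],
                  [0.8, 0.2],
                  [0.9, 0.1],
                  [0.7, 0.3]])  # the rare pixel is misclassified
print(weights)
print(weighted_cross_entropy(probs, labels, weights))
```

With these weights, an error on the rare class contributes more to the loss than an error on the background, which is the imbalance-mitigating effect the abstract refers to.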
