Ivan Reyes-Amezcua

Evaluation of Few-Shot Learning Methods for Kidney Stone Type Recognition in Ureteroscopy

May 23, 2025

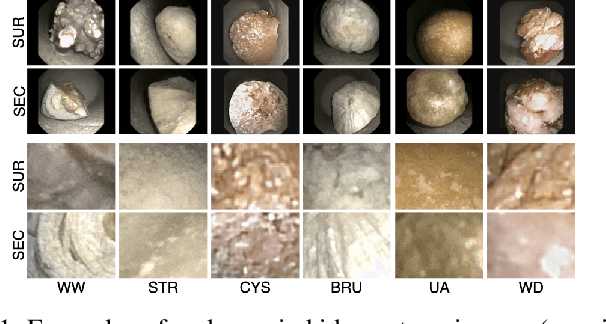

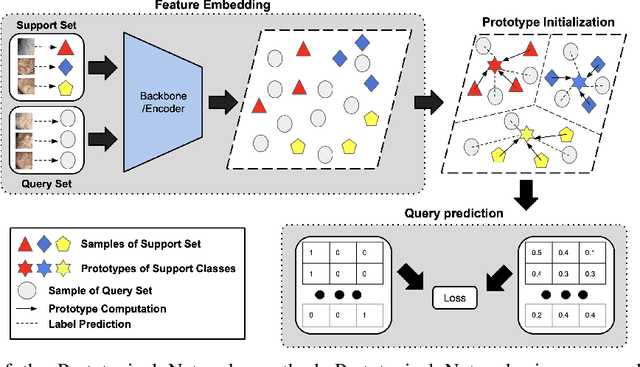

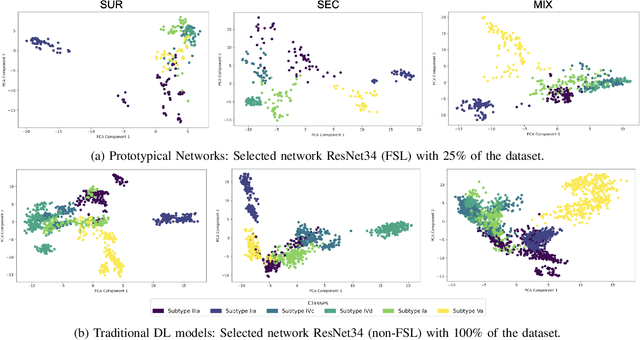

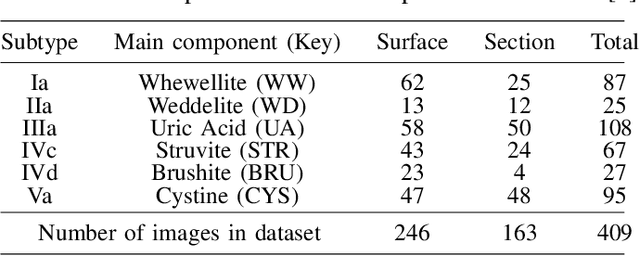

Abstract: Determining the type of kidney stones is crucial for prescribing appropriate treatments to prevent recurrence. Currently, various approaches exist to identify the type of kidney stones. However, obtaining results through the reference ex vivo identification procedure can take several weeks, while in vivo visual recognition requires highly trained specialists. For this reason, deep learning models have been developed to provide urologists with an automated classification of kidney stones during ureteroscopies. Nevertheless, a common issue with these models is the lack of training data. This contribution presents a deep learning method based on few-shot learning, aimed at producing sufficiently discriminative features for identifying kidney stone types in endoscopic images, even with a very limited number of samples. This approach was specifically designed for scenarios where endoscopic images are scarce or where uncommon classes are present, enabling classification even with a limited training dataset. The results demonstrate that Prototypical Networks, using up to 25% of the training data, can achieve performance equal to or better than traditional deep learning models trained with the complete dataset.
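The Prototypical Networks named in this abstract classify a query by its distance to one prototype per class, where each prototype is the mean embedding of that class's few support samples. Below is a minimal pure-Python sketch of that classification step, assuming the embeddings have already been produced by a trained encoder (not shown). The function names and the toy two-class data are hypothetical and are not taken from the paper.

```python
import math
from collections import defaultdict


def compute_prototypes(support_embeddings, support_labels):
    """Average the support embeddings of each class into a single prototype vector."""
    sums = {}
    counts = defaultdict(int)
    for emb, label in zip(support_embeddings, support_labels):
        if label not in sums:
            sums[label] = [0.0] * len(emb)
        sums[label] = [s + e for s, e in zip(sums[label], emb)]
        counts[label] += 1
    return {label: [s / counts[label] for s in vec] for label, vec in sums.items()}


def squared_euclidean(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query_embedding, prototypes):
    """Softmax over negative squared distances from the query to each prototype."""
    labels = sorted(prototypes)
    logits = [-squared_euclidean(query_embedding, prototypes[lab]) for lab in labels]
    top = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return {lab: e / total for lab, e in zip(labels, exps)}


# Toy 2-way 2-shot episode with hypothetical 2-D embeddings.
support = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]]
labels = ["A", "A", "B", "B"]
prototypes = compute_prototypes(support, labels)
probs = classify([1.0, 1.0], prototypes)
print(max(probs, key=probs.get))  # prints A
```

Using the squared Euclidean distance follows the original Prototypical Networks formulation; the abstract does not state which distance or encoder the authors used.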

EndoDepth: A Benchmark for Assessing Robustness in Endoscopic Depth Prediction

Sep 30, 2024

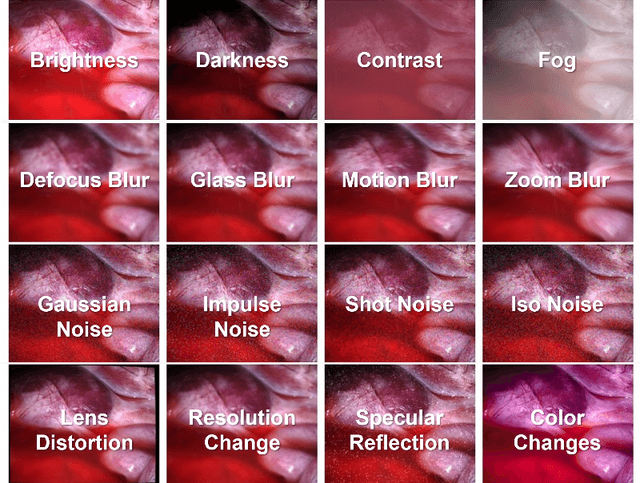

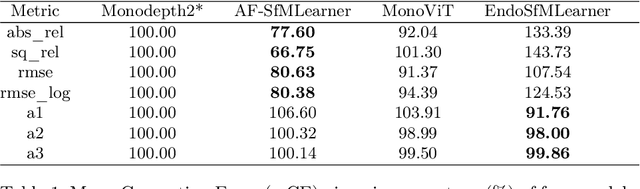

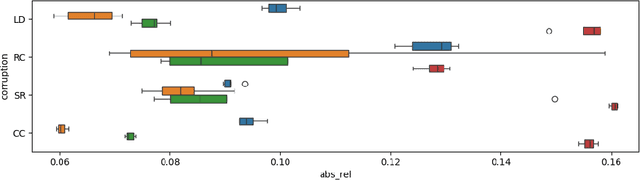

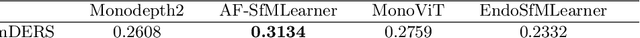

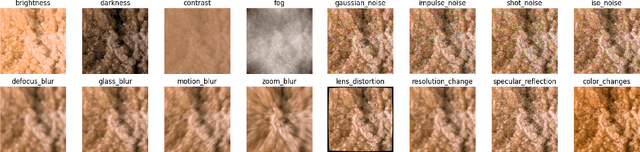

Abstract: Accurate depth estimation in endoscopy is vital for successfully implementing computer vision pipelines for various medical procedures and CAD tools. In this paper, we present the EndoDepth benchmark, an evaluation framework designed to assess the robustness of monocular depth prediction models in endoscopic scenarios. Unlike traditional datasets, the EndoDepth benchmark incorporates common challenges encountered during endoscopic procedures. We present a consistent evaluation approach specifically designed to assess model robustness in endoscopic scenarios. It includes a novel composite metric called the mean Depth Estimation Robustness Score (mDERS), which offers an in-depth evaluation of a model's accuracy against errors brought on by endoscopic image corruptions. Moreover, we present SCARED-C, a new dataset designed specifically to assess robustness in endoscopy. Through extensive experimentation, we evaluate state-of-the-art depth prediction architectures on the EndoDepth benchmark, revealing their strengths and weaknesses in handling challenging endoscopic imaging artifacts. Our results demonstrate the importance of specialized techniques for accurate depth estimation in endoscopy and provide valuable insights for future research directions.
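The abstract does not give the mDERS formula, so the sketch below does not reproduce it. It only illustrates the general idea of corruption-robustness evaluation under stated assumptions. A standard depth error, absolute relative error (AbsRel), is computed on the clean prediction and on predictions for corrupted inputs, and the corrupted errors are then averaged. The corruption names, function names, and toy depth values are all hypothetical.

```python
def abs_rel(pred, gt):
    """Absolute relative error over pixels with valid (positive) ground-truth depth."""
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    return sum(abs(p - g) / g for p, g in pairs) / len(pairs)


def robustness_summary(gt, clean_pred, corrupted_preds):
    """Return clean AbsRel, per-corruption AbsRel, and their plain mean.

    corrupted_preds maps a (hypothetical) corruption name to the model's depth
    prediction on the corrupted version of the same input. Plain averaging is an
    assumption made for illustration; it is not the mDERS definition.
    """
    clean = abs_rel(clean_pred, gt)
    per_corruption = {name: abs_rel(pred, gt) for name, pred in corrupted_preds.items()}
    mean_corrupted = sum(per_corruption.values()) / len(per_corruption)
    return clean, per_corruption, mean_corrupted


# Hypothetical flattened depth maps (three pixels) for one frame.
gt = [1.0, 2.0, 4.0]
clean_pred = [1.0, 2.0, 4.0]
corrupted = {
    "blur": [1.1, 2.2, 4.4],      # every pixel overestimated by 10%
    "specular": [1.0, 2.0, 3.0],  # one pixel underestimated by 25%
}
clean, per_corr, mean_corr = robustness_summary(gt, clean_pred, corrupted)
print(clean, round(mean_corr, 4))
```

Comparing `mean_corr` against `clean` gives a crude measure of how much the corruptions degrade a model; a composite score such as mDERS presumably combines more information than this single average.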

Leveraging Pre-trained Models for Robust Federated Learning for Kidney Stone Type Recognition

Sep 30, 2024

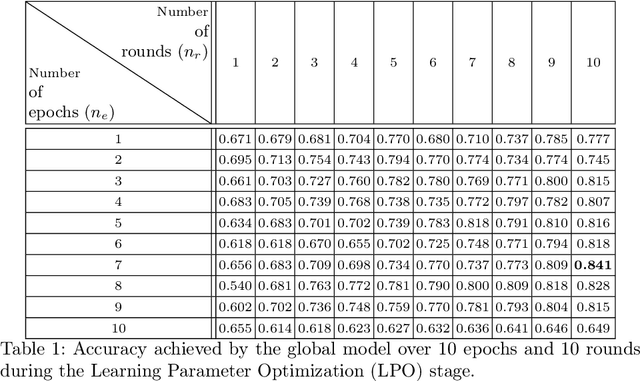

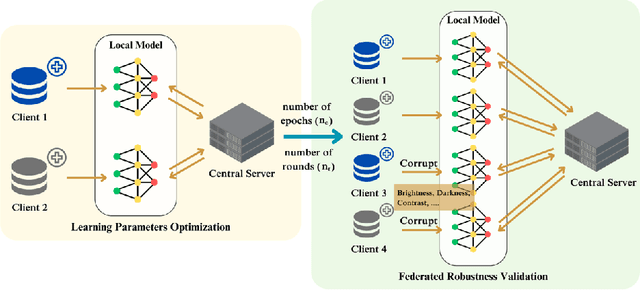

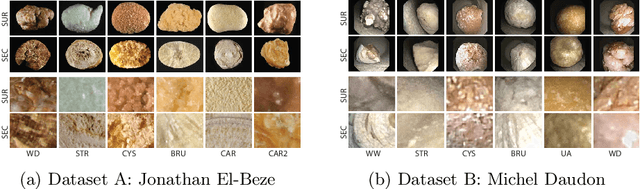

Abstract: Advances in deep learning have dramatically improved medical imaging diagnosis, increasing accuracy in several domains. Nonetheless, obstacles remain because of the need for large datasets and legal limitations on data sharing. Federated Learning (FL) offers a solution by permitting decentralized model training while preserving data privacy. However, FL models are susceptible to data corruption, which may degrade performance. This research proposes a robust FL framework that uses pre-trained models to improve kidney stone diagnosis. Our experimental setting uses two different kidney stone datasets, each with six categories of images. Our method involves two stages: Learning Parameter Optimization (LPO) and Federated Robustness Validation (FRV). We achieved a peak accuracy of 84.1% with seven epochs and 10 rounds during the LPO stage, and 77.2% during the FRV stage, showing enhanced diagnostic accuracy and robustness against image corruption. This highlights the potential of combining pre-trained models with FL to address privacy and performance concerns in medical diagnostics, with the prospect of improved patient care and greater trust in FL-based medical systems.
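As a rough illustration of decentralized training, the sketch below assumes FedAvg-style aggregation (a dataset-size-weighted average of client parameters), which the abstract does not specify, and a hypothetical one-parameter local model trained by gradient descent. The defaults `rounds=10` and `local_epochs=7` only echo the figures quoted for the LPO stage; how they map onto the authors' setup is an assumption. In their framework the starting weights would come from a pre-trained model rather than the `initial_weights` placeholder used here.

```python
def fed_avg(client_weights, client_sizes):
    """FedAvg-style aggregation: average client parameters weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def local_update(weights, data, epochs, lr):
    """Hypothetical local training: gradient descent on the MSE of y = w * x."""
    w = weights[0]
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return [w]


def run_federated(clients, initial_weights, rounds=10, local_epochs=7, lr=0.05):
    """Each round: clients train locally from the global weights, then the server averages."""
    global_weights = list(initial_weights)
    for _ in range(rounds):
        updates = [local_update(global_weights, data, local_epochs, lr) for data in clients]
        global_weights = fed_avg(updates, [len(data) for data in clients])
    return global_weights


# Two hypothetical clients whose private data both follow y = 2x.
# Only parameters reach the server; the raw (x, y) pairs never leave a client.
clients = [[(1.0, 2.0), (2.0, 4.0)], [(1.0, 2.0), (3.0, 6.0), (2.0, 4.0)]]
print(run_federated(clients, initial_weights=[0.0]))
```

Weighting by dataset size keeps a client with more samples from being outvoted by smaller ones; robustness to corrupted client data, which the FRV stage targets, would need additional measures not shown here.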

SuSana Distancia is all you need: Enforcing class separability in metric learning via two novel distance-based loss functions for few-shot image classification

May 18, 2023

Abstract: Few-shot learning is a challenging area of research that aims to learn new concepts from only a few labeled samples. Recent metric-learning works leverage meta-learning, which is organized into episodic tasks that use a support set (training) and a query set (test), with the objective of learning a similarity metric between those sets. Due to the lack of data, the training of the embedding network becomes an important part of the few-shot task. Previous works have addressed this problem with metric learning approaches, but the properties of the underlying latent space and the separability of the different classes within it were not fully enforced. In this work, we propose two loss functions that account for the importance of the embedding vectors by considering the intra-class and inter-class distances among the few available samples. The first loss function is the Proto-Triplet Loss, which is based on the original triplet loss with the modifications needed to work better in few-shot scenarios. The second loss function, which we dub the ICNN loss, is based on an inter- and intra-class nearest neighbors score, which helps us assess the quality of the embeddings obtained from the trained network. Our results, obtained from an extensive experimental setup, show a significant accuracy improvement of 2% on the miniImageNet benchmark over other metric-based few-shot learning methods, demonstrating the capability of these loss functions to help the network generalize better to previously unseen classes. Our experiments also demonstrate competitive generalization to other domains, such as the Caltech CUB, Dogs, and Cars datasets, compared with the state of the art.
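The episodic setup and the triplet idea described in this abstract can be sketched in pure Python. `sample_episode` draws an N-way K-shot task with disjoint support and query items per class. `prototype_triplet_loss` is a hinge loss that treats the query as the anchor, its own class prototype as the positive, and the nearest other prototype as the negative. This is our assumption of what a prototype-based triplet loss could look like, not the paper's Proto-Triplet Loss definition, and the ICNN loss is not reproduced. All names are hypothetical, and embeddings are assumed to be precomputed.

```python
import random


def sample_episode(dataset, n_way, k_shot, n_query, rng):
    """Draw an N-way K-shot episode with disjoint support and query items per class."""
    classes = rng.sample(sorted(dataset), n_way)
    support, query = [], []
    for label in classes:
        items = rng.sample(dataset[label], k_shot + n_query)
        support += [(item, label) for item in items[:k_shot]]
        query += [(item, label) for item in items[k_shot:]]
    return support, query


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def prototype_triplet_loss(query, label, prototypes, margin=1.0):
    """Hinge loss: own prototype as positive, nearest other prototype as negative."""
    d_pos = squared_distance(query, prototypes[label])
    d_neg = min(
        squared_distance(query, proto)
        for other, proto in prototypes.items()
        if other != label
    )
    return max(0.0, d_pos - d_neg + margin)


def episode_loss(queries, labels, prototypes, margin=1.0):
    """Mean triplet loss over the query embeddings of one episode."""
    losses = [prototype_triplet_loss(q, y, prototypes, margin) for q, y in zip(queries, labels)]
    return sum(losses) / len(losses)


# Hypothetical dataset: five classes with ten item ids each.
dataset = {c: list(range(10 * i, 10 * i + 10)) for i, c in enumerate("ABCDE")}
support, query = sample_episode(dataset, n_way=3, k_shot=2, n_query=4, rng=random.Random(0))

# Hypothetical precomputed embeddings: two prototypes and two query embeddings.
prototypes = {"A": [0.0, 0.0], "B": [4.0, 0.0]}
print(episode_loss([[1.0, 0.0], [2.0, 0.0]], ["A", "A"], prototypes))  # prints 0.5
```

The loss is zero once a query is closer to its own prototype than to any other by at least `margin`, which is one way of enforcing the class separability the abstract is concerned with.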

Boosting Kidney Stone Identification in Endoscopic Images Using Two-Step Transfer Learning

Oct 24, 2022

Abstract: Knowing the cause of kidney stone formation is crucial to establishing treatments that prevent recurrence. There are currently different approaches for determining the kidney stone type. However, the reference ex-vivo identification procedure can take up to several weeks, while in-vivo visual recognition requires highly trained specialists. Machine learning models have been developed to provide urologists with an automated classification of kidney stones during a ureteroscopy; however, both the training data and the methods are generally lacking in quality. In this work, a two-step transfer learning approach is used to train the kidney stone classifier. The proposed approach transfers knowledge learned on a set of kidney stone images acquired with a CCD camera (ex-vivo dataset) to a final model that classifies endoscopic images (ex-vivo dataset). The results show that learning features from different domains with similar information helps to improve the performance of a model that performs classification in real conditions (for instance, uncontrolled lighting conditions and blur). Finally, in comparison to models trained from scratch or initialized with ImageNet weights, the results suggest that the two-step approach extracts features that improve the identification of kidney stones in endoscopic images.

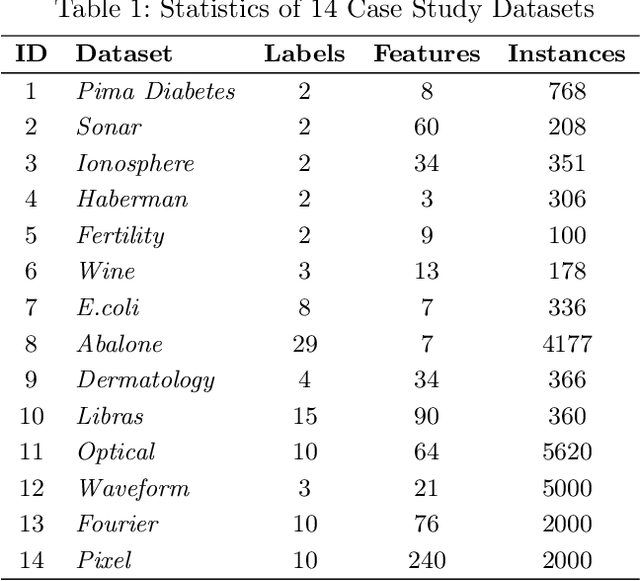

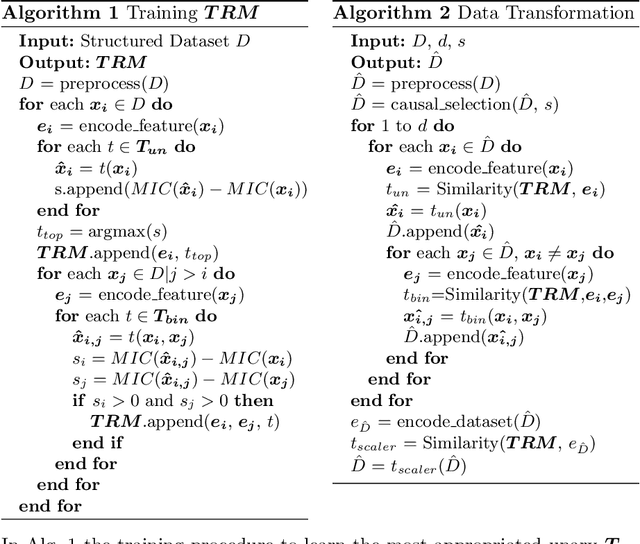

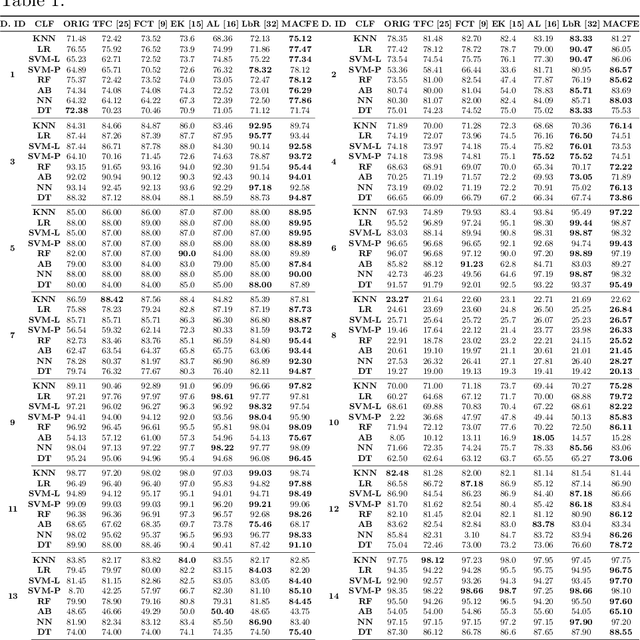

MACFE: A Meta-learning and Causality Based Feature Engineering Framework

Jul 08, 2022

Abstract: Feature engineering has become one of the most important steps for improving model prediction performance and producing quality datasets. However, this process requires non-trivial domain knowledge and is time-consuming. Therefore, automating this process has become an active area of research and of interest for industrial applications. In this paper, a novel method called Meta-learning and Causality Based Feature Engineering (MACFE) is proposed; our method is based on meta-learning, feature distribution encoding, and causality-based feature selection. In MACFE, meta-learning is used to find the best transformations, and the search is accelerated by pre-selecting "original" features according to their causal relevance. Experimental evaluations on popular classification datasets show that MACFE can improve prediction performance across eight classifiers, that it outperforms the current state-of-the-art methods on average by at least 6.54%, and that it obtains an improvement of 2.71% over the best previous works.

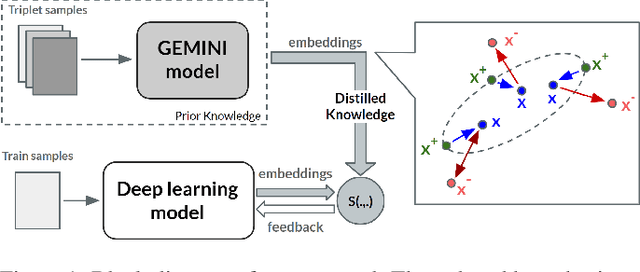

Guided Deep Metric Learning

Jun 04, 2022

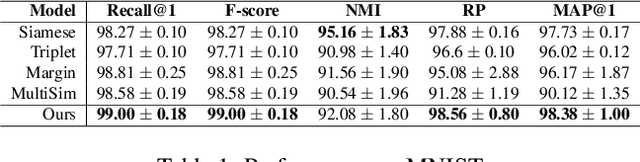

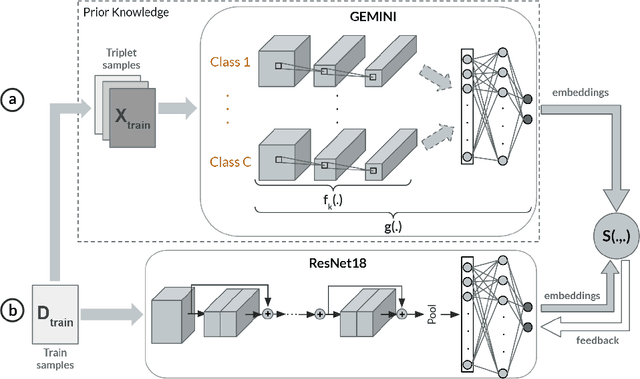

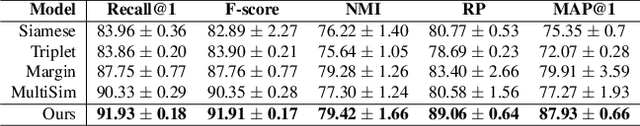

Abstract: Deep Metric Learning (DML) methods have proven relevant for visual similarity learning. However, they sometimes generalize poorly, either because they are often trained with an inappropriate sample selection strategy or because of dataset difficulty caused by a distributional shift in the data. These represent a significant drawback when attempting to learn the underlying data manifold. Therefore, there is a pressing need for better ways of achieving generalization and representation of the underlying manifold. In this paper, we propose a novel approach to DML that we call Guided Deep Metric Learning, an architecture oriented to learning more compact clusters and improving generalization under distributional shifts in DML. This architecture consists of two independent models: a multi-branch master model, inspired by a Few-Shot Learning (FSL) perspective, generates a reduced hypothesis space based on prior knowledge from labeled data, which guides or regularizes the decision boundary of a student model during training under an offline knowledge distillation scheme. Experiments show that the proposed method achieves better manifold generalization and representation, with up to 40% improvement (Recall@1, CIFAR10), using the guidelines suggested by Musgrave et al. to perform a fairer and more realistic comparison, which is currently absent in the literature.
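Recall@1, the metric quoted for CIFAR10 in this abstract, counts how often a sample's nearest neighbor in the embedding space (excluding the sample itself) has the same label. The sketch below is a minimal pure-Python version assuming squared Euclidean distance. Evaluation protocols such as the one suggested by Musgrave et al. may differ in distance choice and in how queries and the retrieval set are split, and the function name is hypothetical.

```python
def recall_at_1(embeddings, labels):
    """Fraction of samples whose nearest other sample shares their label."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    hits = 0
    for i, (anchor, label) in enumerate(zip(embeddings, labels)):
        # Nearest neighbor among all other samples; the sample itself is excluded.
        nearest = min(
            (j for j in range(len(embeddings)) if j != i),
            key=lambda j: sq_dist(anchor, embeddings[j]),
        )
        hits += labels[nearest] == label
    return hits / len(embeddings)


# Hypothetical 2-D embeddings: the third sample's nearest neighbor has another label.
embeddings = [[0.0, 0.0], [0.0, 1.0], [0.0, 3.0]]
labels = ["A", "A", "B"]
print(recall_at_1(embeddings, labels))
```

More compact, well-separated clusters, which the guided architecture aims for, push this score toward 1.0 because each sample's nearest neighbor is then almost always from its own class.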
