Dana Brooks

AlignGraph: A Group of Generative Models for Graphs

Jan 26, 2023

Abstract: It is challenging for generative models to learn a distribution over graphs because of the lack of permutation invariance: nodes may be ordered arbitrarily across graphs, and standard graph alignment is combinatorial and notoriously expensive. We propose AlignGraph, a group of generative models that combine fast and efficient graph alignment methods with a family of deep generative models that are invariant to node permutations. Our experiments demonstrate that our framework successfully learns graph distributions, outperforming competitors by 25%-560% in relevant performance scores.

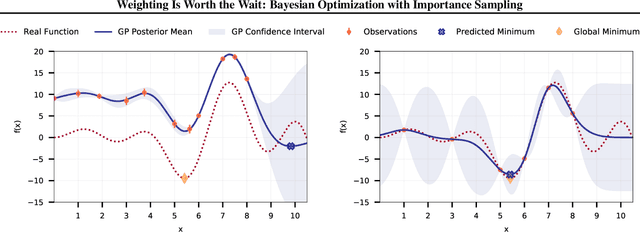

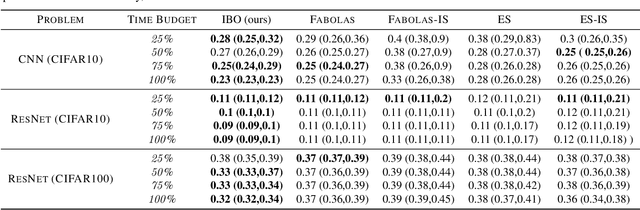

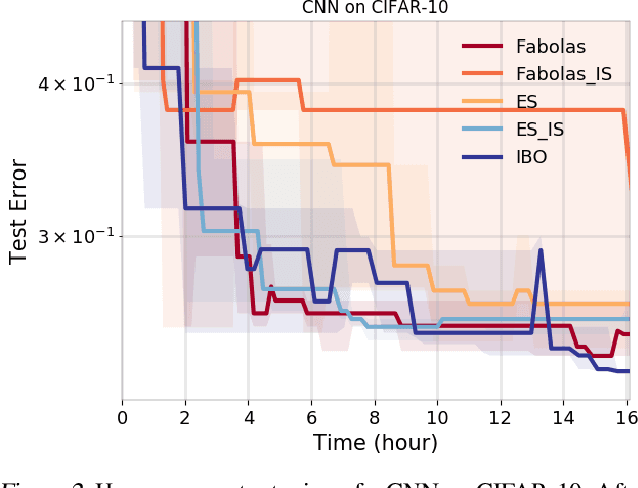

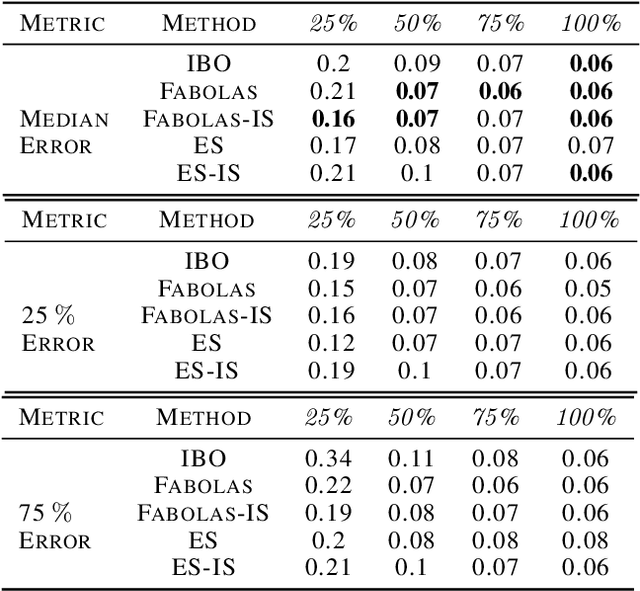

Weighting Is Worth the Wait: Bayesian Optimization with Importance Sampling

Feb 23, 2020

Abstract: Many contemporary machine learning models require extensive tuning of hyperparameters to perform well. A variety of methods, such as Bayesian optimization, have been developed to automate and expedite this process. However, tuning remains extremely costly, as it typically requires fully training models many times over. We propose to accelerate the Bayesian optimization approach to hyperparameter tuning for neural networks by taking into account the relative amount of information contributed by each training example. To do so, we leverage importance sampling (IS); this significantly increases the quality of the black-box function evaluations, but also their runtime, and so must be done carefully. Casting hyperparameter search as a multi-task Bayesian optimization problem over both hyperparameters and importance sampling design achieves the best of both worlds: by learning a parameterization of IS that trades off evaluation complexity and quality, we improve upon state-of-the-art Bayesian optimization in both runtime and final validation error across a variety of datasets and complex neural architectures.

Mapping Motor Cortex Stimulation to Muscle Responses: A Deep Neural Network Modeling Approach

Feb 14, 2020

Abstract: A deep neural network (DNN) that can reliably model muscle responses from corresponding brain stimulation has the potential to increase knowledge of coordinated motor control for numerous basic science and applied use cases. Such cases include the understanding of abnormal movement patterns due to neurological injury from stroke, and stimulation-based interventions for neurological recovery such as paired associative stimulation. In this work, potential DNN models are explored, and the one with the minimum squared error is recommended for the optimal performance of the M2M-Net, a network that maps transcranial magnetic stimulation of the motor cortex to corresponding muscle responses, using: a finite element simulation, an empirical neural response profile, a convolutional autoencoder, a separate deep network mapper, and recordings of multi-muscle activation. We discuss the rationale behind the different modeling approaches and architectures, and contrast their results. Additionally, to gain comparative insight into the trade-off between complexity and performance, we explore different techniques, including the extension of two classical information criteria for M2M-Net. Finally, we find that the model analogous to mapping the motor cortex stimulation to a combination of direct and synergistic connections to the muscles performs best when the neural response profile is used at the input.
