Claudio Bruschini

Systematic validation of time-resolved diffuse optical simulators via non-contact SPAD-based measurements

Nov 17, 2025

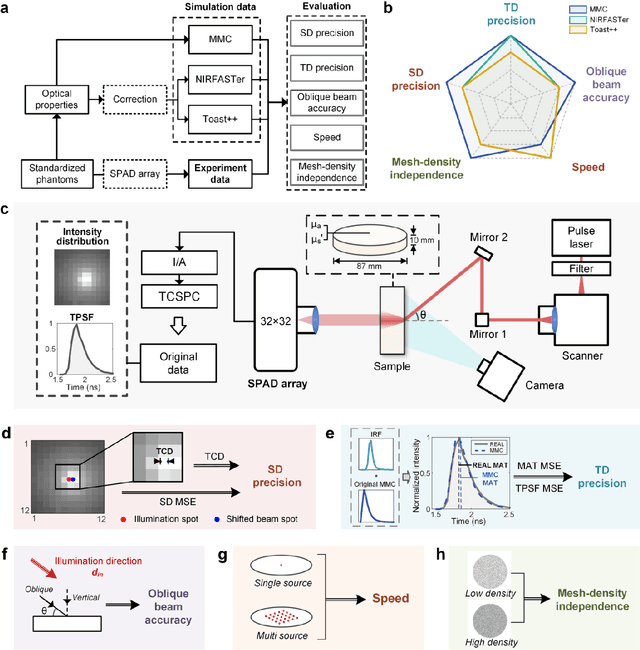

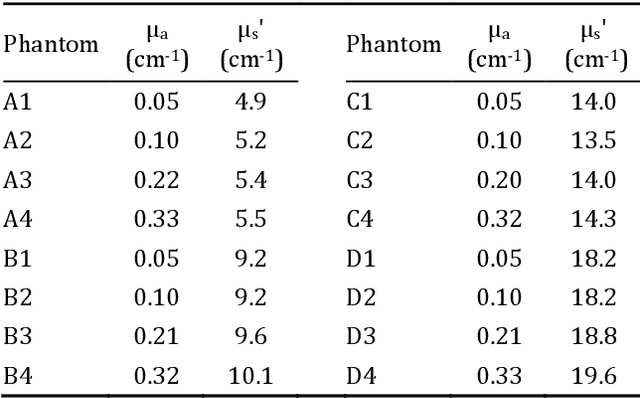

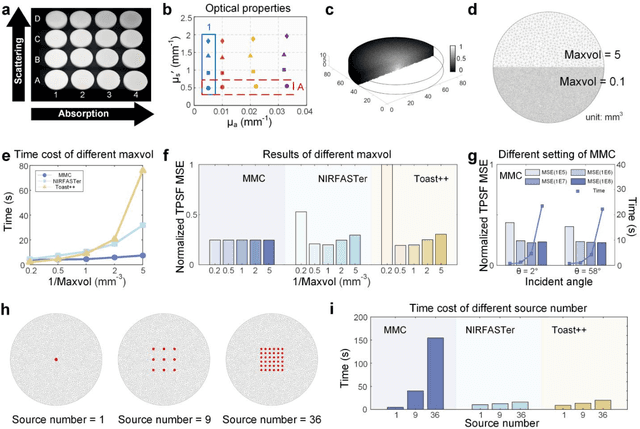

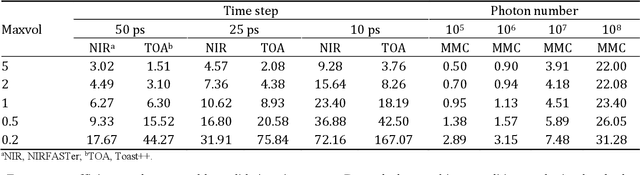

Abstract: Objective: Time-domain diffuse optical imaging (DOI) requires accurate forward models for photon propagation in scattering media. However, existing simulators lack comprehensive experimental validation, especially for non-contact configurations with oblique illumination. This study rigorously evaluates three widely used open-source simulators, MMC, NIRFASTer, and Toast++, against time-resolved experimental data. Approach: All simulations employed a unified mesh and point-source illumination. Virtual source correction was applied to the finite-element (FEM) solvers to handle oblique incidence. A time-resolved DOI system with a 32 $\times$ 32 single-photon avalanche diode (SPAD) array acquired transmission-mode data from 16 standardized phantoms with varying absorption coefficient $μ_a$ and reduced scattering coefficient $μ_s'$. The simulation results were quantified with five metrics: spatial-domain (SD) precision, time-domain (TD) precision, oblique beam accuracy, computational speed, and mesh-density independence. Results: Among the three simulators, MMC achieves the highest accuracy in the SD and TD metrics and remains robust across all optical properties. NIRFASTer and Toast++ show comparable overall performance. In general, MMC is the best choice for accuracy-critical TD-DOI applications, while NIRFASTer and Toast++ suit scenarios that prioritize speed, provided $μ_s'$ is sufficiently large. In addition, virtual source correction is essential for non-contact FEM modeling: it reduced average errors by more than 34% in large-angle scenarios. Significance: This work provides benchmarked guidelines for selecting a simulator during the development of next-generation TD-DOI systems. It is the first study to systematically validate TD simulators against SPAD array-based data under clinically relevant non-contact conditions, bridging a critical gap in biomedical optical simulation standards.
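
The abstract does not spell out the virtual source correction. One common formulation, assumed here rather than taken from the study, refracts the oblique beam at the surface with Snell's law and places an isotropic point source one transport mean free path, $1/(μ_a + μ_s')$, inside the medium along the refracted direction. The sketch assumes a flat boundary at z = 0; the function name and the refractive indices (1.0 outside, 1.4 inside) are illustrative.

```python
import math

def virtual_source_position(entry_xy, theta_in_deg, mu_a, mu_s_prime,
                            n_out=1.0, n_in=1.4):
    """Virtual isotropic source for an obliquely incident pencil beam.

    Assumptions: flat boundary at z = 0, medium occupying z > 0, beam in the
    x-z plane, mu_a and mu_s_prime in 1/mm (result in mm). The refractive
    indices n_out (air) and n_in (tissue-like) are illustrative defaults.
    """
    theta_in = math.radians(theta_in_deg)
    # Snell's law: refraction angle inside the medium.
    theta_t = math.asin(n_out * math.sin(theta_in) / n_in)
    # Transport mean free path.
    l_star = 1.0 / (mu_a + mu_s_prime)
    x0, y0 = entry_xy
    # Step one transport mean free path along the refracted direction.
    return (x0 + l_star * math.sin(theta_t), y0, l_star * math.cos(theta_t))

# Normal incidence: the source lies directly below the entry point.
print(virtual_source_position((0.0, 0.0), 0.0, mu_a=0.01, mu_s_prime=1.0))
# Oblique incidence: the source shifts laterally and moves closer to the surface.
print(virtual_source_position((0.0, 0.0), 60.0, mu_a=0.01, mu_s_prime=1.0))
```

Without such a correction, a FEM solver fed a source at the normal-incidence position misplaces the effective photon origin, which is consistent with the larger errors the study reports at large incidence angles.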

Transporter: A 128$\times$4 SPAD Imager with On-chip Encoder for Spiking Neural Network-based Processing

Nov 07, 2025

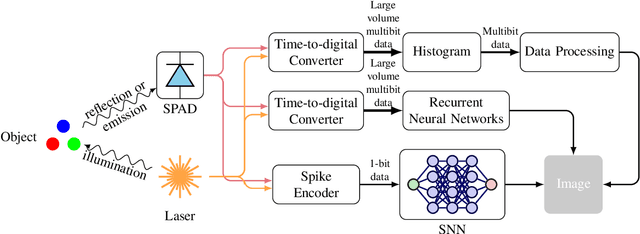

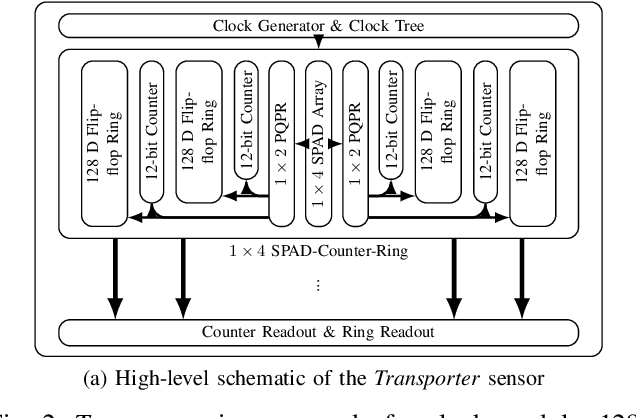

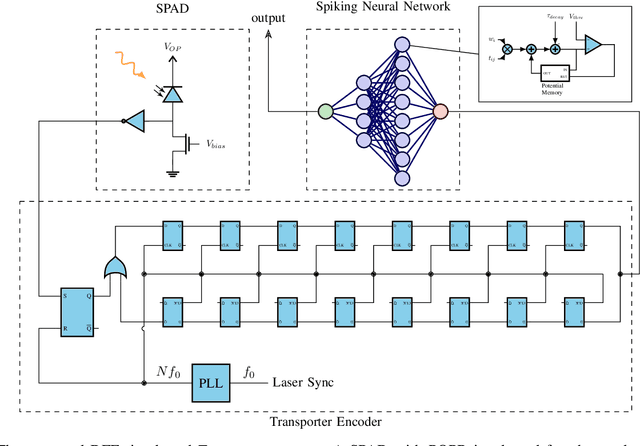

Abstract: Single-photon avalanche diodes (SPADs) are widely used today in time-resolved imaging applications. However, traditional architectures rely on time-to-digital converters (TDCs) and histogram-based processing, leading to significant data transfer and processing challenges. Previous work based on recurrent neural networks has realized histogram-free processing. To further address these limitations, we propose a novel paradigm that eliminates TDCs by integrating spike encoders in the sensor. This approach preprocesses photon arrival events in the sensor while significantly compressing data, reducing complexity, and maintaining real-time edge processing capabilities. A dedicated spike encoder folds multiple laser repetition periods, transforming phase-based spike trains into density-based spike trains optimized for spiking neural network processing and training via backpropagation through time. As a proof of concept, we introduce Transporter, a 128$\times$4 SPAD sensor with a per-pixel spike encoder based on a D flip-flop ring, designed for intelligent active time-resolved imaging. This work demonstrates a path toward more efficient neuromorphic SPAD imaging systems with reduced data overhead and enhanced real-time processing.
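
As a behavioural toy model of the folding step, and not the Transporter circuit (whose D flip-flop ring is not detailed in the abstract), the sketch maps each absolute photon timestamp to its phase within the laser period, assigns it to one of `n_bins` phase bins (a hypothetical stand-in for one ring stage each), and counts photons per bin over windows of several periods. Timing information carried by *when* a spike occurs is thereby converted into *how many* spikes each bin emits. All names and parameter values are assumptions.

```python
import numpy as np

def fold_to_density(timestamps_ps, period_ps, n_bins, periods_per_window):
    """Toy model: fold photon arrivals into per-phase-bin spike counts.

    timestamps_ps: absolute photon arrival times (ps) since acquisition start.
    Returns an array of shape (n_windows, n_bins) with spike counts per phase
    bin in each window of `periods_per_window` laser periods.
    """
    t = np.asarray(timestamps_ps, dtype=np.int64)
    period_idx = t // period_ps                  # which laser period
    phase = t % period_ps                        # arrival phase within the period
    phase_bin = (phase * n_bins) // period_ps    # hypothetical ring stage
    window = period_idx // periods_per_window    # group several periods together
    n_windows = int(window.max()) + 1 if t.size else 0
    counts = np.zeros((n_windows, n_bins), dtype=np.int64)
    np.add.at(counts, (window, phase_bin), 1)    # accumulate spikes per bin
    return counts

rng = np.random.default_rng(0)
period = 50_000      # 50 ns laser period (20 MHz), illustrative
n_periods = 1000
# One photon per period, delayed by an exponential decay with a 5 ns mean.
delays = np.minimum(rng.exponential(5_000, n_periods).astype(np.int64), period - 1)
arrivals = np.arange(n_periods) * period + delays
density = fold_to_density(arrivals, period, n_bins=8, periods_per_window=100)
print(density.shape)  # (10, 8)
```

The resulting counts per bin form a rate code, which is the kind of input a spiking network trained with backpropagation through time can consume directly.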

GPU-based data processing for speeding-up correlation plenoptic imaging

Jul 30, 2024

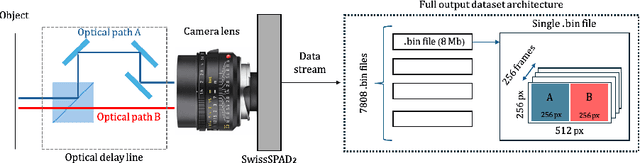

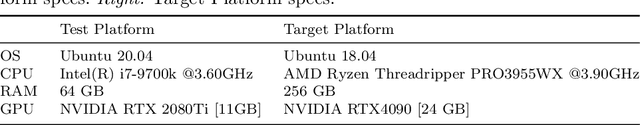

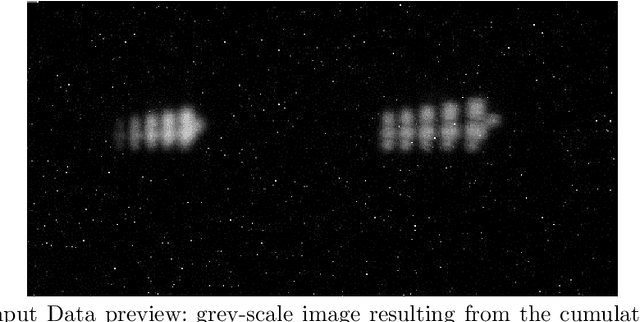

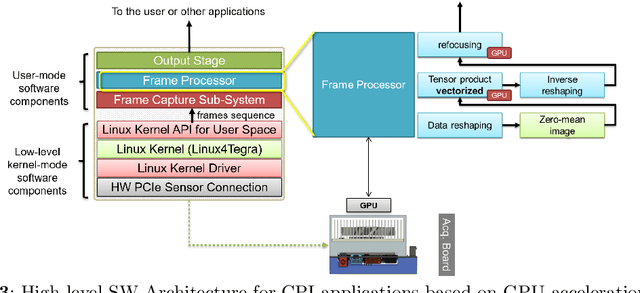

Abstract: Correlation Plenoptic Imaging (CPI) is a novel imaging modality that overcomes the drawbacks of standard plenoptic devices while preserving their advantages. However, a major challenge for real-time application of CPI is the large number of frames required and the resulting computationally intensive processing algorithm. In this work, we describe the design and implementation of an optimized processing algorithm that is portable to an efficient computational environment and exploits the massive parallelism offered by GPUs. Speed-ups ranging from 20x for correlation measurement to 500x for refocusing are demonstrated. Exploring the relation between the performance improvement achieved and the actual GPU capabilities also indicates that near-real-time processing is feasible, opening the way to the use of CPI in practical real-time applications.
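
The correlation measurement at the heart of CPI can be written as $\Gamma(\rho_a, \rho_b) = \langle \Delta I_a(\rho_a)\, \Delta I_b(\rho_b) \rangle$, the correlation of intensity fluctuations between two sensors averaged over many frames. The sketch, an illustration rather than the authors' GPU code, shows why this workload parallelizes well: after subtracting per-pixel means, every pixel pair is covered by a single matrix product. The two-sensor layout and the array shapes are assumptions.

```python
import numpy as np

def correlation_function(frames_a, frames_b):
    """Estimate Gamma(rho_a, rho_b) = <dI_a(rho_a) dI_b(rho_b)> over frames.

    frames_a: array (N, Ha, Wa) from the first sensor.
    frames_b: array (N, Hb, Wb) from the second sensor, same N frames.
    Returns an array of shape (Ha, Wa, Hb, Wb).
    """
    n = frames_a.shape[0]
    a = frames_a.reshape(n, -1).astype(np.float64)  # copy, input untouched
    b = frames_b.reshape(n, -1).astype(np.float64)
    a -= a.mean(axis=0)   # intensity fluctuations dI_a
    b -= b.mean(axis=0)   # intensity fluctuations dI_b
    gamma = a.T @ b / n   # one matrix product covers all pixel pairs
    return gamma.reshape(frames_a.shape[1:] + frames_b.shape[1:])

rng = np.random.default_rng(1)
fa = rng.random((200, 4, 5))   # toy frames for sensor A
fb = rng.random((200, 3, 2))   # toy frames for sensor B
g = correlation_function(fa, fb)
print(g.shape)  # (4, 5, 3, 2)
```

Because the core is one dense matrix product, replacing NumPy with a GPU array library that mirrors its interface, such as CuPy, is one straightforward way to offload it. The paper's actual implementation may differ.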

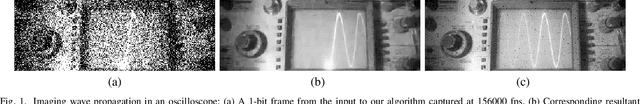

Generalized Event Cameras

Jul 02, 2024

Abstract: Event cameras capture the world at high time resolution and with minimal bandwidth requirements. However, event streams, which only encode changes in brightness, do not contain sufficient scene information to support a wide variety of downstream tasks. In this work, we design generalized event cameras that inherently preserve scene intensity in a bandwidth-efficient manner. We generalize event cameras in terms of when an event is generated and what information is transmitted. To implement our designs, we turn to single-photon sensors that provide digital access to individual photon detections; this modality gives us the flexibility to realize a rich space of generalized event cameras. Our single-photon event cameras are capable of high-speed, high-fidelity imaging at low readout rates. Consequently, these event cameras can support plug-and-play downstream inference, without capturing new event datasets or designing specialized event-vision models. As a practical implication, our designs, which involve lightweight and near-sensor-compatible computations, provide a way to use single-photon sensors without exorbitant bandwidth costs.
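
One point in the design space described above, sketched as a hypothetical rule rather than any of the paper's actual designs: instead of emitting a polarity bit when brightness changes, each pixel periodically estimates its photon detection rate from a window of binary frames (*what* is transmitted) and emits an event only when that estimate moves by more than a relative threshold from the last transmitted value (*when* an event fires). A static pixel stays silent, yet every event still carries an intensity value. The window length and threshold are illustrative.

```python
import numpy as np

def generalized_events(binary_frames, window=16, threshold=0.2):
    """Emit intensity-carrying events from a (T, H, W) stack of binary frames.

    Hypothetical rule: every `window` frames each pixel estimates its photon
    detection rate; it emits an event (t, y, x, rate) only if the rate differs
    from the value it last transmitted by more than `threshold` (relative).
    """
    T, H, W = binary_frames.shape
    last = np.full((H, W), -1.0)  # -1 marks "nothing transmitted yet"
    events = []
    for start in range(0, T - window + 1, window):
        rate = binary_frames[start:start + window].mean(axis=0)
        # Relative change test; the floor of 1/window avoids a zero reference.
        ref = np.maximum(last, 1.0 / window)
        fire = (last < 0) | (np.abs(rate - last) > threshold * ref)
        t_event = start + window - 1
        for y, x in zip(*np.nonzero(fire)):
            events.append((t_event, int(y), int(x), float(rate[y, x])))
        last[fire] = rate[fire]
    return events

# A dark 2x2 scene in which pixel (1, 1) switches on at frame 32.
frames = np.zeros((64, 2, 2), dtype=np.uint8)
frames[32:, 1, 1] = 1
events = generalized_events(frames)
print(len(events), events[-1])  # 5 (47, 1, 1, 1.0)
```

After the initial snapshot (one event per pixel), only the pixel that changes transmits again, which is where the bandwidth saving comes from.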

SoDaCam: Software-defined Cameras via Single-Photon Imaging

Sep 08, 2023

Abstract: Reinterpretable cameras are defined by post-processing capabilities that exceed those of traditional imaging. We present "SoDaCam", which provides reinterpretable cameras at the granularity of photons, from photon-cubes acquired by single-photon devices. Photon-cubes represent the spatio-temporal detections of photons as a sequence of binary frames, at frame rates as high as 100 kHz. We show that simple transformations of the photon-cube, or photon-cube projections, provide the functionality of numerous imaging systems, including exposure bracketing, flutter shutter cameras, video compressive systems, event cameras, and even cameras that move during exposure. Our photon-cube projections offer the flexibility of software-defined constructs, limited only by what is computable and by shot noise. We exploit this flexibility to provide new capabilities for the emulated cameras. As an added benefit, our projections provide camera-dependent compression of photon-cubes, which we demonstrate using an implementation of our projections on a novel compute architecture designed for single-photon imaging.
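
Several of the projections listed above reduce to simple operations along the time axis of a binary photon-cube of shape (T, H, W). The helper functions are illustrative sketches under that assumption, not SoDaCam's implementation: exposure bracketing sums prefixes of different lengths, a flutter shutter applies a repeating binary code per frame, and video-compressive capture applies per-pixel codes before summing.

```python
import numpy as np

def temporal_projection(cube, weights):
    """Weighted sum over time: sum_t weights[t] * cube[t] for a (T, H, W) cube."""
    return np.tensordot(weights, cube, axes=(0, 0))

def exposure_bracket(cube, lengths):
    """Emulate exposures of different durations from the same photon-cube."""
    return [cube[:n].sum(axis=0) for n in lengths]

def flutter_shutter(cube, code):
    """Open or close a virtual shutter on each frame following a binary code."""
    reps = int(np.ceil(cube.shape[0] / len(code)))
    weights = np.tile(np.asarray(code, dtype=float), reps)[:cube.shape[0]]
    return temporal_projection(cube, weights)

def coded_exposure(cube, masks):
    """Video-compressive style capture with per-pixel 0/1 masks of shape (T, H, W)."""
    return (cube * masks).sum(axis=0)

rng = np.random.default_rng(0)
cube = (rng.random((32, 4, 4)) < 0.3).astype(np.uint8)   # toy photon-cube
short, long_ = exposure_bracket(cube, [8, 32])
coded = flutter_shutter(cube, [1, 0, 1, 1, 0, 0, 1, 0])
```

Each projection collapses T binary frames into one image, which also illustrates the camera-dependent compression the abstract mentions: only the projection, not the full cube, needs to leave the sensor.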

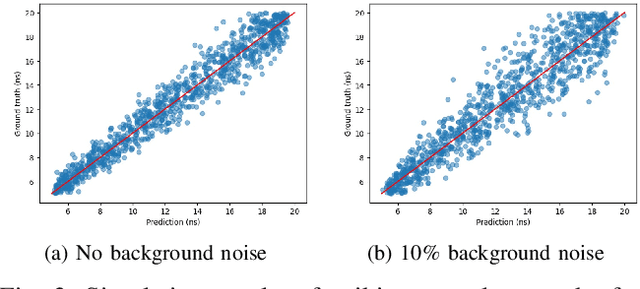

Recurrent Neural Network-coupled SPAD TCSPC System for Real-time Fluorescence Lifetime Imaging

Jun 27, 2023

Abstract: Fluorescence lifetime imaging (FLI) has received increasing attention in recent years as a powerful imaging technique in biological and medical research. However, existing FLI systems often suffer from a trade-off between processing speed, accuracy, and robustness. In this paper, we propose a SPAD TCSPC system coupled to a recurrent neural network (RNN) for FLI that accurately estimates fluorescence lifetime on the fly directly from raw timestamps instead of histograms, which drastically reduces the data transfer rate and hardware resource utilization. We train two variants of the RNN on a synthetic dataset and compare the results with those obtained using the center-of-mass method (CMM) and least-squares (LS) fitting. The results demonstrate that the two RNN variants, gated recurrent unit (GRU) and long short-term memory (LSTM), are comparable to CMM and LS fitting in terms of accuracy and outperform both by a large margin in the presence of background noise. We also examine the Cramér-Rao lower bound, and a detailed analysis shows that the RNN models are close to the theoretical optimum. The analysis of experimental data shows that our model, trained purely on synthetic datasets, works well on real-world data. We built an FLI microscope setup for evaluation based on Piccolo, a 32$\times$32 SPAD sensor developed in our lab. Four quantized GRU cores, capable of processing up to 4 million photons per second, are deployed on a Xilinx Kintex-7 FPGA. Powered by the GRU, the FLI setup can retrieve real-time fluorescence lifetime images at up to 10 frames per second. The proposed FLI system is promising for many important biomedical applications, ranging from biological imaging of fast-moving cells to fluorescence-assisted diagnosis and surgery.
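
For reference, the center-of-mass baseline has a one-line form: for a mono-exponential decay starting at $t_0$ and a measurement window much longer than the lifetime, the mean photon arrival time is $t_0 + \tau$, so $\hat\tau = \bar t - t_0$. The sketch assumes a negligible IRF width and synthetic data; the parameter values are illustrative. It also shows how uniform background photons bias the estimate, consistent with CMM degrading under background noise.

```python
import numpy as np

def cmm_lifetime(timestamps_ns, t0_ns=0.0):
    """Center-of-mass lifetime estimate from raw photon timestamps.

    Assumes a mono-exponential decay starting at t0 (IRF offset), no
    background, and a time window much longer than the lifetime.
    """
    t = np.asarray(timestamps_ns, dtype=float)
    return t.mean() - t0_ns

rng = np.random.default_rng(42)
tau_true, t0 = 2.5, 1.0                       # ns, illustrative values
photons = t0 + rng.exponential(tau_true, size=10_000)
tau_clean = cmm_lifetime(photons, t0)         # close to tau_true

# Uniform background over a 50 ns window pulls the mean toward the window centre.
window_ns = 50.0
background = rng.uniform(0.0, window_ns, size=500)
tau_biased = cmm_lifetime(np.concatenate([photons, background]), t0)
print(round(tau_clean, 2), round(tau_biased, 2))
```

The estimator needs only a running sum and a count per pixel, which is why it is a natural speed baseline for timestamp-based processing.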

On-chip fully reconfigurable Artificial Neural Network in 16 nm FinFET for Positron Emission Tomography

Feb 16, 2023

Abstract: Smarty is a fully reconfigurable on-chip feed-forward artificial neural network (ANN) with ten integrated time-to-digital converters (TDCs), designed in a 16 nm FinFET CMOS technology node. Integrating the TDCs with the ANN aims to reduce system complexity and minimize data throughput requirements in positron emission tomography (PET) applications. The TDCs have an average LSB of 53.5 ps. The ANN is fully reconfigurable, allowing the user to change its topology as desired within a set of constraints. The chip can execute 363 MOPS with a maximum power consumption of 1.9 mW, for an efficiency of 190 GOPS/W. The system performance was tested in a coincidence measurement setup interfacing Smarty with two groups of five 4 mm × 4 mm analog silicon photomultipliers (A-SiPMs) used as inputs for the TDCs. The ANN successfully distinguished between six different positions of a radioactive source placed between the two photodetector arrays using only the TDC timestamps.
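
The standard time-of-flight relation, which the ANN does not necessarily implement, shows why timestamps alone can encode source position: a source displaced by $x$ from the midpoint toward detector A gives an arrival-time difference $t_b - t_a = 2x/c$. The sketch assumes an ideal TDC with the reported 53.5 ps average LSB and computes the position granularity of one code step. It also checks that 363 MOPS at 1.9 mW matches the quoted efficiency. Function names are hypothetical.

```python
C_MM_PER_PS = 0.299792458  # speed of light in mm/ps
TDC_LSB_PS = 53.5          # average TDC LSB reported for Smarty

def quantize(t_ps, lsb_ps=TDC_LSB_PS):
    """Ideal TDC model (hypothetical): arrival time -> integer code."""
    return int(t_ps // lsb_ps)

def tof_offset_mm(code_a, code_b, lsb_ps=TDC_LSB_PS):
    """Source offset from the midpoint, positive toward detector A."""
    return C_MM_PER_PS * (code_b - code_a) * lsb_ps / 2.0

# Position granularity of one TDC code step along the line between detectors.
print(round(C_MM_PER_PS * TDC_LSB_PS / 2.0, 2))  # 8.02
# Efficiency check: 363 MOPS at 1.9 mW, expressed in GOPS/W.
print(round(363e6 / 1.9e-3 / 1e9))  # 191
```

One LSB thus corresponds to roughly 8 mm along the line of response, before accounting for detector and TDC jitter, which in practice dominate.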

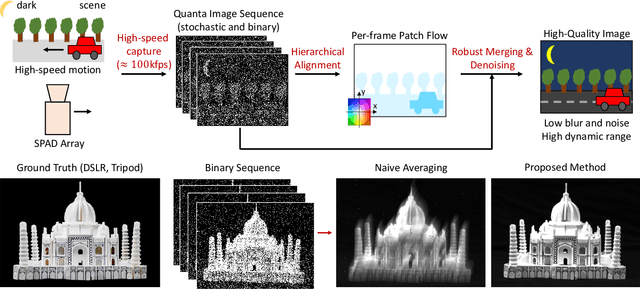

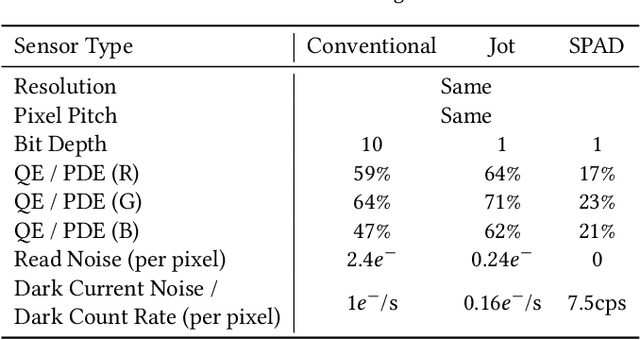

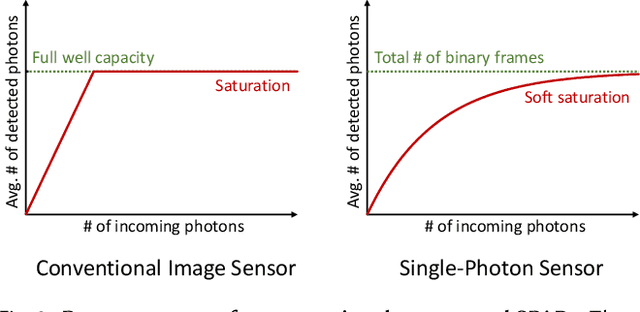

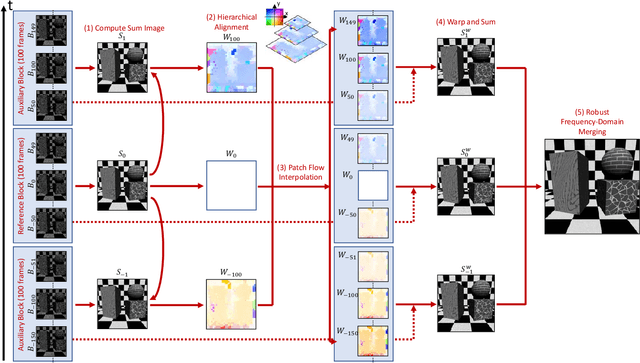

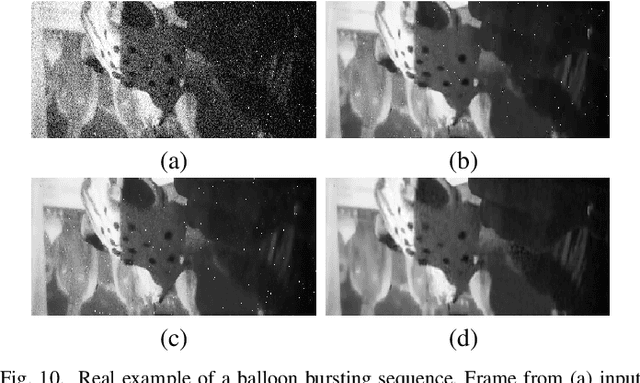

Quanta Burst Photography

Jun 21, 2020

Abstract: Single-photon avalanche diodes (SPADs) are an emerging sensor technology capable of detecting individual incident photons and capturing their time of arrival with high timing precision. While these sensors were limited to single-pixel or low-resolution devices in the past, large SPAD arrays (up to 1 MPixel) have recently been developed. These single-photon cameras (SPCs) can capture high-speed sequences of binary single-photon images with no read noise. We present quanta burst photography, a computational photography technique that leverages SPCs as passive imaging devices for photography in challenging conditions, including ultra-low light and fast motion. Inspired by the recent success of conventional burst photography, we design algorithms that align and merge binary sequences captured by SPCs into intensity images with minimal motion blur and artifacts, high signal-to-noise ratio (SNR), and high dynamic range. We theoretically analyze the SNR and dynamic range of quanta burst photography and identify the imaging regimes where it provides significant benefits. Using a recently developed SPAD array, we demonstrate that the proposed method generates high-quality images for scenes with challenging lighting, complex geometries, high dynamic range, and moving objects. With the ongoing development of SPAD arrays, we envision quanta burst photography finding applications in both consumer and scientific photography.
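
The merge step can be sketched under a simple model, assumed here with dark counts ignored and frames already aligned (alignment being the hard part the paper addresses): each pixel records 1 when at least one photon arrives during exposure $\tau$, so $P(1) = 1 - e^{-\phi\eta\tau}$ for flux $\phi$ and detection efficiency $\eta$. Inverting the observed fraction $S/n$ of ones over $n$ frames gives the maximum-likelihood flux estimate $\hat\phi = -\ln(1 - S/n)/(\eta\tau)$. Names and values are illustrative.

```python
import numpy as np

def merge_binary_frames(frames, exposure_s, efficiency=1.0):
    """MLE flux estimate (photons/s) from n aligned binary frames of shape (n, H, W).

    Each frame records 1 if at least one photon was detected, so
    P(1) = 1 - exp(-phi * efficiency * exposure_s). Dark counts are ignored.
    """
    n = frames.shape[0]
    s = frames.sum(axis=0)
    # Clip fully saturated pixels (S == n) so the logarithm stays finite.
    frac = np.minimum(s, n - 1) / n
    return -np.log1p(-frac) / (efficiency * exposure_s)

rng = np.random.default_rng(0)
phi_true = np.array([[1e3, 5e3], [2e4, 5e4]])   # photons/s, illustrative
tau = 10e-6                                      # 10 us binary frames
p_one = 1 - np.exp(-phi_true * tau)              # probability of a "1"
frames = (rng.random((50_000,) + phi_true.shape) < p_one).astype(np.uint8)
phi_hat = merge_binary_frames(frames, tau)
print(phi_hat)
```

The logarithmic inversion is one reason binary sensors offer a wide dynamic range: bright pixels approach saturation gradually instead of clipping at a fixed full-well level.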

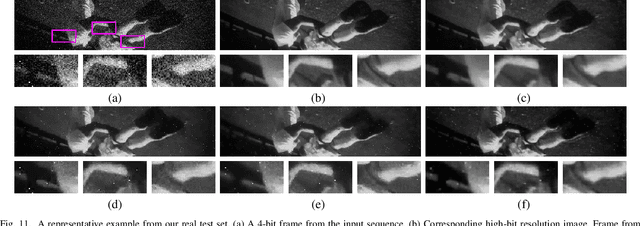

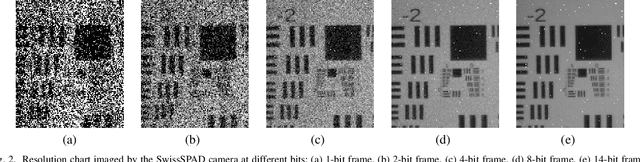

A 'Little Bit' Too Much? High Speed Imaging from Sparse Photon Counts

Nov 06, 2018

Abstract: Recent advances in photographic sensing technologies have made it possible to detect light at the level of single photons. Photon-counting sensors are increasingly used in many diverse applications. We address the problem of jointly recovering spatial and temporal scene radiance from very few photon counts. Our ConvNet-based scheme effectively combines the spatial and temporal information present in the measurements to reduce noise. We demonstrate that our method can acquire videos at a high frame rate while still achieving a good signal-to-noise ratio. Experiments show that the proposed scheme performs well in several challenging scenarios that existing denoising schemes are unable to handle.
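
The benefit of pooling spatial and temporal information can be illustrated with a fixed 3 x 3 x 3 box filter over a (T, H, W) stack of Poisson photon counts. This is a deliberately simple stand-in, not the paper's ConvNet: on the static, uniform toy scene assumed here it cuts the mean squared error roughly by the number of pooled samples, but on real scenes it would blur motion and edges, which a learned spatio-temporal model is meant to avoid.

```python
import numpy as np
from scipy.ndimage import uniform_filter

def spatiotemporal_denoise(counts, size=(3, 3, 3)):
    """Average photon counts over a (T, H, W) neighbourhood.

    A fixed box filter standing in for a learned spatio-temporal ConvNet:
    it pools evidence across neighbouring pixels and frames.
    """
    return uniform_filter(counts.astype(float), size=size, mode="nearest")

rng = np.random.default_rng(0)
radiance = np.full((16, 32, 32), 0.5)   # mean photons per pixel per frame
counts = rng.poisson(radiance)          # sparse, shot-noise-limited frames
mse_raw = np.mean((counts - radiance) ** 2)
mse_box = np.mean((spatiotemporal_denoise(counts) - radiance) ** 2)
print(mse_raw, mse_box)
```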
