Christopher Lehnert

QueryAdapter: Rapid Adaptation of Vision-Language Models in Response to Natural Language Queries

Feb 26, 2025

Abstract: A domain shift exists between the large-scale, internet data used to train a Vision-Language Model (VLM) and the raw image streams collected by a robot. Existing adaptation strategies require the definition of a closed set of classes, which is impractical for a robot that must respond to diverse natural language queries. In response, we present QueryAdapter, a novel framework for rapidly adapting a pre-trained VLM in response to a natural language query. QueryAdapter leverages unlabelled data collected during previous deployments to align VLM features with semantic classes related to the query. By optimising learnable prompt tokens and actively selecting objects for training, an adapted model can be produced in a matter of minutes. We also explore how objects unrelated to the query should be dealt with when using real-world data for adaptation. In turn, we propose the use of object captions as negative class labels, helping to produce better calibrated confidence scores during adaptation. Extensive experiments on ScanNet++ demonstrate that QueryAdapter significantly enhances object retrieval performance compared to state-of-the-art unsupervised VLM adapters and 3D scene graph methods. Furthermore, the approach exhibits robust generalization to abstract affordance queries and other datasets, such as Ego4D.

Predicting Class Distribution Shift for Reliable Domain Adaptive Object Detection

Feb 13, 2023

Abstract: Unsupervised Domain Adaptive Object Detection (UDA-OD) uses unlabelled data to improve the reliability of robotic vision systems in open-world environments. Previous approaches to UDA-OD based on self-training have been effective in overcoming changes in the general appearance of images. However, shifts in a robot's deployment environment can also impact the likelihood that different objects will occur, termed class distribution shift. Motivated by this, we propose a framework for explicitly addressing class distribution shift to improve pseudo-label reliability in self-training. Our approach uses the domain invariance and contextual understanding of a pre-trained joint vision and language model to predict the class distribution of unlabelled data. By aligning the class distribution of pseudo-labels with this prediction, we provide weak supervision of pseudo-label accuracy. To further account for low-quality pseudo-labels early in self-training, we propose an approach to dynamically adjust the number of pseudo-labels per image based on model confidence. Our method outperforms state-of-the-art approaches on several benchmarks, including a 4.7 mAP improvement when facing challenging class distribution shift.

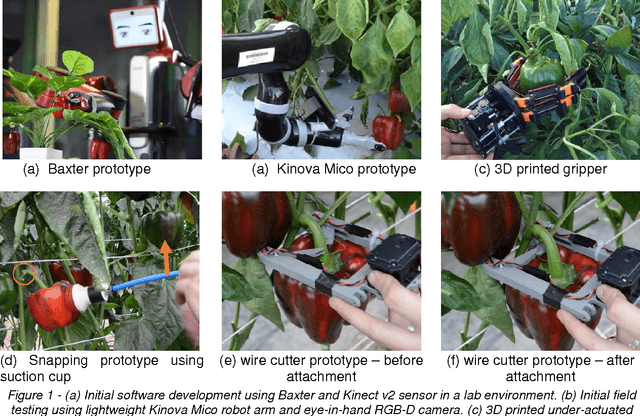

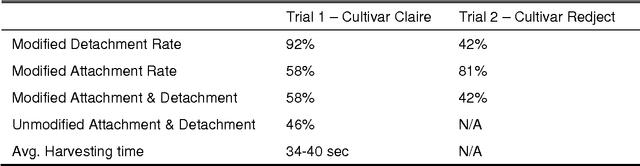

Lessons Learnt from Field Trials of a Robotic Sweet Pepper Harvester

Jun 19, 2017

Abstract: In this paper, we present the lessons learnt during the development of a new robotic harvester (Harvey) that can autonomously harvest sweet pepper (capsicum) in protected cropping environments. Robotic harvesting offers an attractive potential solution to reducing labour costs while enabling more regular and selective harvesting, optimising crop quality, scheduling and therefore profit. Our approach combines effective vision algorithms with a novel end-effector design to enable successful harvesting of sweet peppers. We demonstrate a simple and effective vision-based algorithm for crop detection, a grasp selection method, and a novel end-effector design for harvesting. To reduce the complexity of motion planning and to minimise occlusions we focus on picking sweet peppers in a protected cropping environment where plants are grown on planar trellis structures. Initial field trials in protected cropping environments, with two cultivars, demonstrate the efficacy of this approach. The results show that the robot harvester can successfully detect, grasp, and detach crop from the plant within a real protected cropping system. The novel contributions of this work have resulted in significant and encouraging improvements in sweet pepper picking success rates compared with the state-of-the-art. Future work will look at detecting sweet pepper peduncles and improving the total harvesting cycle time for each sweet pepper. The methods presented in this paper provide steps towards the goal of fully autonomous and reliable crop picking systems that will revolutionise the horticulture industry by reducing labour costs, maximising the quality of produce, and ultimately improving the sustainability of farming enterprises.

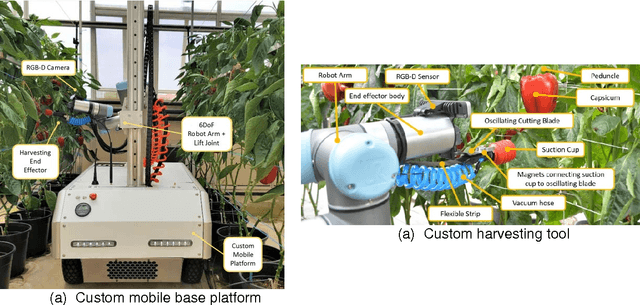

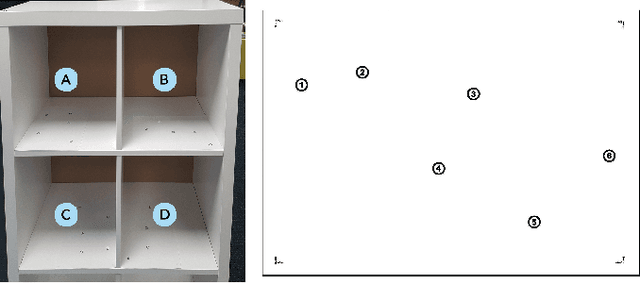

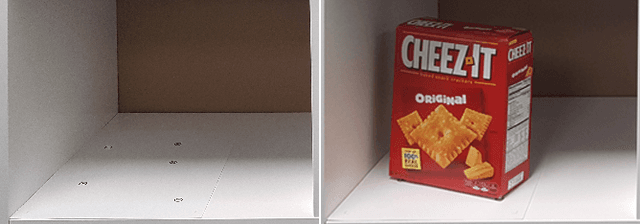

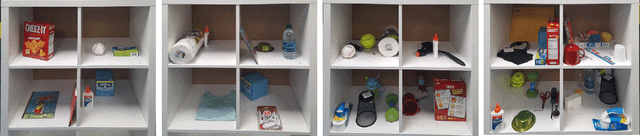

The ACRV Picking Benchmark: A Robotic Shelf Picking Benchmark to Foster Reproducible Research

Dec 14, 2016

Abstract: Robotic challenges like the Amazon Picking Challenge (APC) or the DARPA Challenges are an established and important way to drive scientific progress. They make research comparable on a well-defined benchmark with equal test conditions for all participants. However, such challenge events occur only occasionally, are limited to a small number of contestants, and the test conditions are very difficult to replicate after the main event. We present a new physical benchmark challenge for robotic picking: the ACRV Picking Benchmark (APB). Designed to be reproducible, it consists of a set of 42 common objects, a widely available shelf, and exact guidelines for object arrangement using stencils. A well-defined evaluation protocol enables the comparison of complete robotic systems, including perception and manipulation, instead of sub-systems only. Our paper also describes and reports results achieved by an open baseline system based on a Baxter robot.
